36 R. Qi et al. / Signal Processing 153 (2018) 34–46

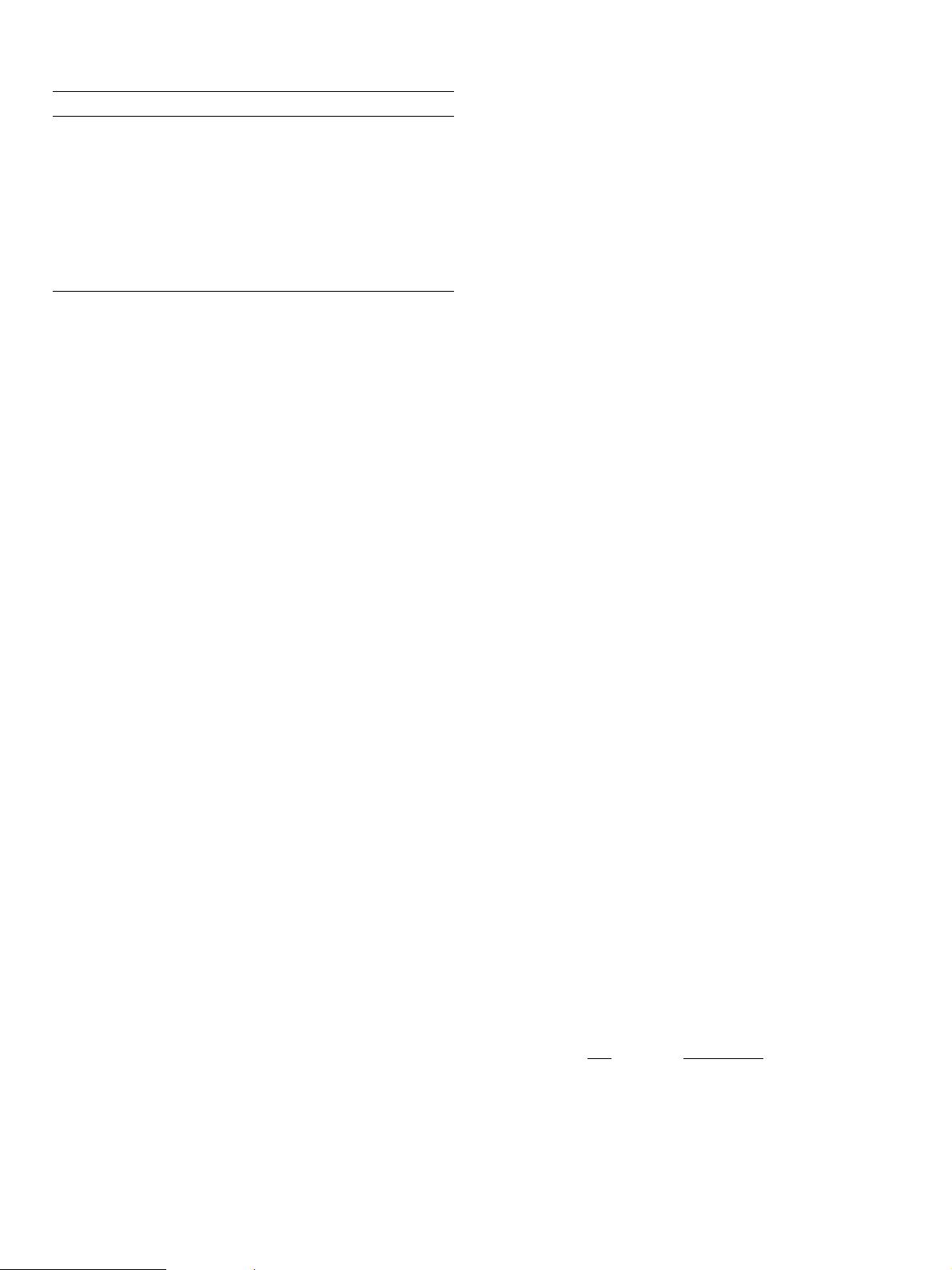

Algorithm 1 The B gOMP algorithm.

Input : y ∈ R

m

,

∈ R

m ×n

, K , ɛ , N .

Initialize : k = 0 , r

0

= y ,

0

= ∅ .

1: While k < K and

r

k

2

> ɛ do

2: k = k + 1 ;

3: Select N block indices

{

i

} |

i =1 , 2 , ···,N

corresponding to N largest entries

contained in

r

k −1

,

[ j]

2

, j ∈

= { 1 , 2 , ···, L } ;

4:

k

=

k −1

∪

{

1

,

2

, ···,

N

} | ;

5:

ˆ

x

k

=

+

k

· y ;

6: r

k

= y −

k

ˆ

x

k

.

7: end while

Output :

ˆ

x = arg min

x : supp (x )=

k

y − x

2

.

where x

2 , 1

=

L

i =1

x [ i ]

2

. It’s worth noting that x

2, ∞

is also

used in our paper, which denotes the maximum norm of each x [ i ].

In the remainder of this paper, we will pay our attention to how

and in what conditions the support set of block sparse signals can

be recovered exactly both in noiseless and noisy scenarios respec-

tively. To analyze the performance of algorithms for block sparse

signals, the classic RIP was extended to the block RIP.

2.2. Block RIP

In this section, we would like to introduce the definition

of block RIP of a measurement matrix, which first appeared in

[37] and is a natural extension to the standard definition of RIP.

Definition 1. (Block RIP) Let ∈ R

m ×n

be a given matrix. Then

is said to have the block RIP over I = { d

1

, d

2

, ···, d

L

} with constant

δ

K | I

if for every x ∈ R

n

that is block K -sparse signal over I , we have

that

1 − δ

K

|

I

x

2

2

≤

x

2

2

≤

1 + δ

K

|

I

x

2

2

. (10)

For convenience, in the rest of this paper, we still use δ

K

, in-

stead of δ

K | I

, to represent the block RIP constant whenever the con-

fusion is not caused.

To be mentioned, we mainly discuss the recovery of block

sparse signal with even block size in this paper, i.e., d

1

= d

2

= ···=

d

L

= d. Note that a block K -sparse signal is Kd sparse in the con-

ventional sense. If satisfies the RIP of order Kd , it must hold for

all block K -sparse signals. On the contrary, if satisfies the block

RIP of order K , it may not hold for all Kd sparse signals. That is

to say, the block RIP (of order K ) is a less stringent requirement

comparing with the standard RIP (of order Kd ).

It was established in [37] that certain random matrices satisfy

the block RIP with overwhelming probability, and that this prob-

ability is substantially larger than that of satisfying the standard

RIP.

3. Description of BgOMP algorithm

The BgOMP is shown as Algorithm 1 . At the k th it-

eration, the BgOMP algorithm first chooses N block indices

{

i

} |

i =1 , 2 , ···,N

corresponding to N largest entries contained in

r

k −1

, [ j]

2

( j ∈ ) , where = { 1 , 2 , ···, L } denotes the whole

block indices. N is a fixed positive integer determining the number

of indices chosen. Specializing for the case that N = 1 , the BgOMP

algorithm boils down to BOMP which selects only one block in-

dex corresponding to the maximal norm of correlations each time.

When N > 1, the BgOMP algorithm selects more than one block in-

dex each time. It is obvious that bigger N results in much more

block indices, and subsequently speeds up the algorithm. After ob-

taining the least-square (LS) solution

ˆ

x

k

=

+

k

· y , we produce a

new residual by using LS fit. The algorithm is repeated until the it-

eration number reaches maximum k

max

= K or the norm of resid-

ual r

k

2

is smaller than a threshold ɛ .

The most important difference between BOMP algorithm and

the proposed algorithm is that the BgOMP algorithm chooses N

block indices at each time. Bigger N indicates more block indices

will be selected, which results in shorter running time. On the con-

trary, smaller N results in smaller block indices at each time, which

leads to longer running time. What’s more, together with BOMP al-

gorithm, BgOMP algorithm also need block sparsity to be known as

a prior.

To be mentioned, the residual r

k

in the k th iteration of the

BgOMP is orthogonal to the column of

k

, i.e.,

k

, r

k

=

k

, P

⊥

k

y

=

T

k

P

⊥

k

y

=

T

k

P

⊥

k

T

y =

P

⊥

k

k

T

y = 0 , (11)

where we have used the following result

P

⊥

k

k

=

(

I − P

k

)

k

=

k

−

k

+

k

k

= 0 . (12)

As mentioned in BgOMP algorithm, newly selected block indices

are not overlapped with previous, that is to say |

k

| = Nk . How-

ever, some of the block indices selected in each iteration may be

wrong, hence we have | S ∩

k

| ≤Nk . Also, convergence in a maxi-

mum of K steps indicates that at least one correct block index is

chosen at each iteration, in other words, | S ∩

k

| ≥k .

4. Simulation results

Our simulations are performed in Mathworks MATLAB R2014a

on the environment of Intel Core2 Quad CPU 2.66 GHz processor

with 3.25 G of memory. Several recovery algorithms are used for

comparison with respect to the probability of exact reconstruction

and running time. Three greedy algorithm including OMP [5] , SP

[7] and gOMP [10] are taken from the respective author’s site. BSL0

algorithm is a convex optimization algorithm mentioned in the pa-

per [34] . The BOMP code is implemented based on the paper [37] .

The details of the main items are as follows:

(1) Construct m ×n sensing matrix whose entries drawn from

Gaussian distribution N (0, 1), each column of is normal-

ized to unity. The observation vector is calculated by y = x ,

where m = 128 and n = 256 are fixed in experiment 4.1, 4.3

and 4.5.

(2) Generate two types of block sparse signals x with block

sparsity K (block size d = 2 ). The nonzero blocks are ran-

domly chosen and nonzero elements are 1) drawn indepen-

dently from standard Gaussian distribution N (0, 1), or 2)

chosen randomly from the set { ±1}, the elements of other

blocks are zero. We refer to them as Gaussian or 2-ary pulse

amplitude modulation (2-PAM) block sparse signals.

(3) The reconstruction is regarded as successful recovery if

x −

ˆ

x

2

< 10

−3

, (13)

where x and

ˆ

x denote the original and it’s recovery signal, re-

spectively.

(4) To evaluate the performance of recovery and original sig-

nal, we use a measure named Averaged Normalized Mean

Squared Error (ANMSE) defined as

ANMSE =

1

200

200

i =1

10 log

10

x

(i )

−

ˆ

x

(i )

2

2

x

(i )

2

2

, (14)

where x

( i )

and

ˆ

x

(i )

denote the original signal and it’s estimation

in i th trial, respectively.

(5) Each test is repeated 200 times, the values of the probability

of exact reconstruction and running time are averaged.

(6) The number of indices N to be chosen per iteration is fixed

to 2 except for experiment 4.3 and 4.4.