[Figure 2 plots. Left: clip accuracy (0.2 to 0.5) vs. # epoch (0 to 16) for the depth-1, depth-3, depth-5, and depth-7 nets. Right: clip accuracy (0.3 to 0.46) vs. # epoch (0 to 16) for the depth-3, increase, and decrease nets.]

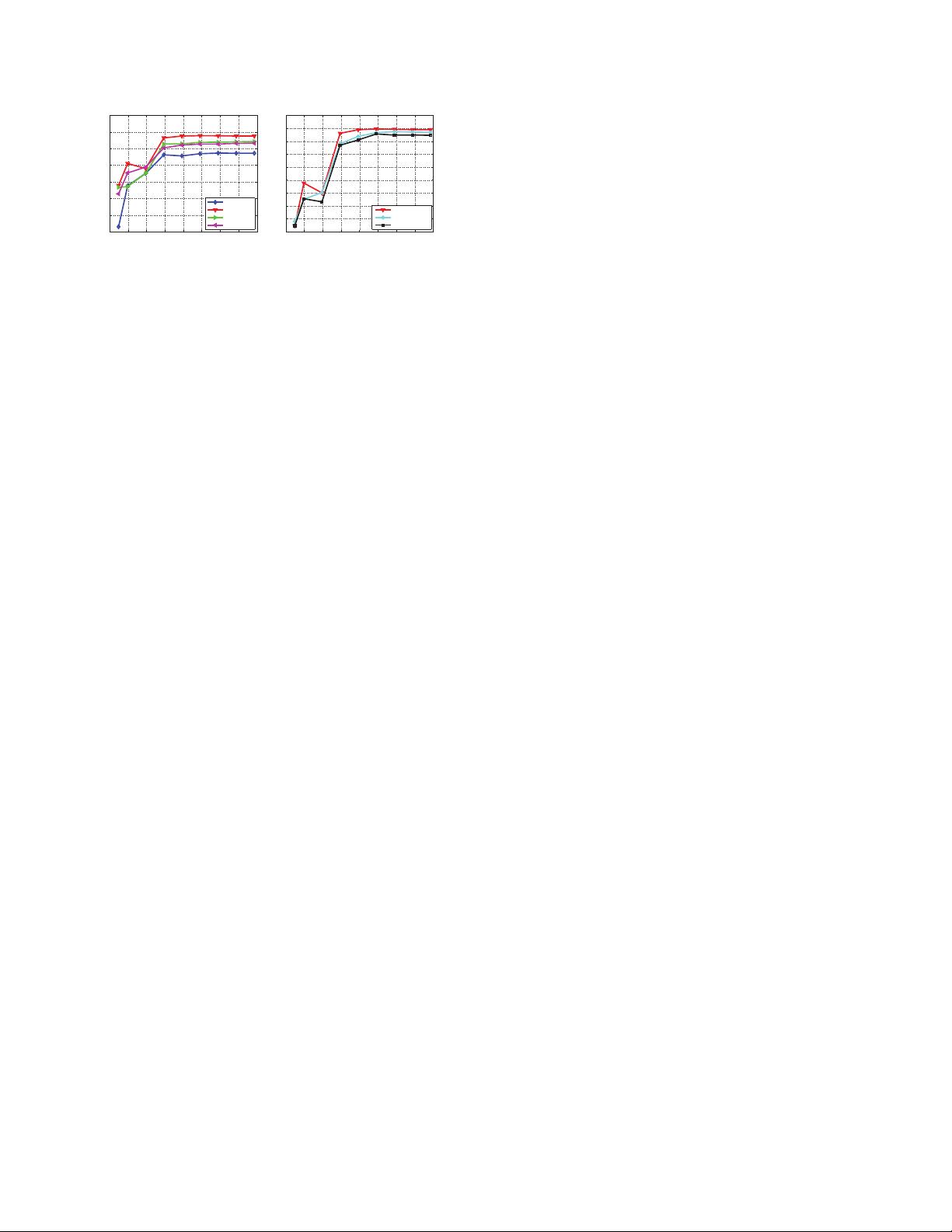

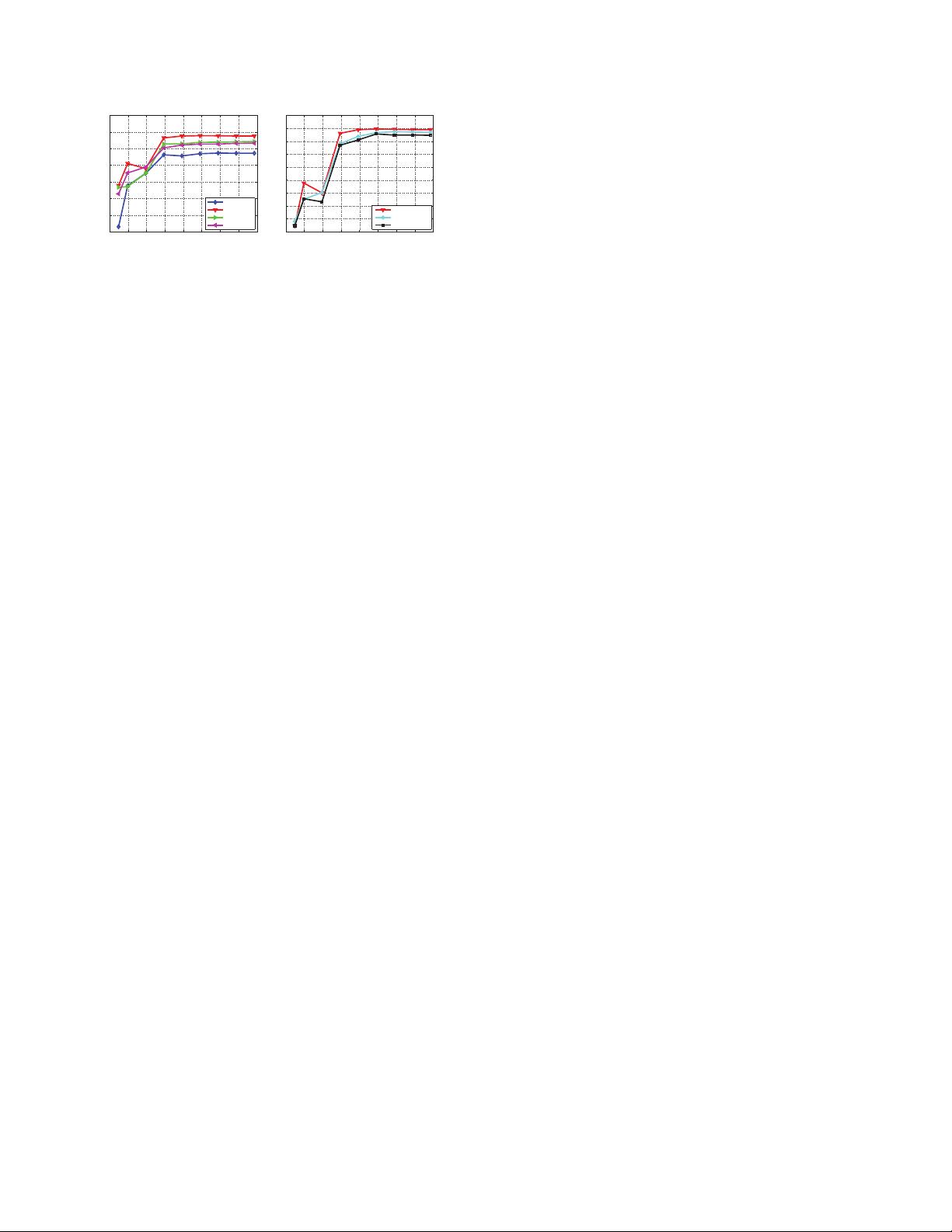

Figure 2. 3D convolution kernel temporal depth search. Action recognition clip accuracy on UCF101 test split-1 of different kernel temporal depth settings. 2D ConvNet performs worst and 3D ConvNet with 3 × 3 × 3 kernels performs best among the experimented nets.

17K parameters fewer or more from each other. The biggest difference in number of parameters is between the depth-1 net and the depth-7 net, where the depth-7 net has 51K more parameters, which is less than 0.3% of the total of 17.5 million parameters of each network. This indicates that the learning capacity of the networks is comparable and the differences in number of parameters should not affect the results of our architecture search.

3.2. Exploring kernel temporal depth

We train these networks on train split 1 of UCF101. Figure 2 presents the clip accuracy of the different architectures on UCF101 test split 1. The left plot shows results of nets with homogeneous temporal depth and the right plot presents results of nets with varying kernel temporal depth. Depth-3 performs best among the homogeneous nets. Note that depth-1 is significantly worse than the other nets, which we believe is due to its lack of motion modeling. Compared to the varying temporal depth nets, depth-3 is still the best performer, but the gap is smaller. We also experiment with a bigger spatial receptive field (e.g. 5 × 5) and/or full input resolution (240 × 320 frame inputs) and still observe similar behavior. This suggests 3 × 3 × 3 is the best kernel choice for 3D ConvNets (according to our subset of experiments) and 3D ConvNets are consistently better than 2D ConvNets for video classification. We also verify that 3D ConvNet consistently performs better than 2D ConvNet on a large-scale internal dataset, namely I380K.

3.3. Spatiotemporal feature learning

Network architecture: Our findings in the previous section indicate that the homogeneous setting with convolution kernels of 3 × 3 × 3 is the best option for 3D ConvNets. This is also consistent with a similar finding in 2D ConvNets [37]. With a large-scale dataset, one can train a 3D ConvNet with 3 × 3 × 3 kernels as deep as possible subject to the machine memory limit and computation affordability. With current GPU memory, we design our 3D ConvNet to have 8 convolution layers, 5 pooling layers, followed by two fully connected layers, and a softmax output layer. The network architecture is presented in Figure 3. For simplicity, we call this net C3D from now on. All 3D convolution filters are 3 × 3 × 3 with stride 1 × 1 × 1. All 3D pooling layers are 2 × 2 × 2 with stride 2 × 2 × 2 except for pool1, which has a kernel size of 1 × 2 × 2 and stride 1 × 2 × 2 with the intention of preserving the temporal information in the early phase. Each fully connected layer has 4096 output units.
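To make the effect of the pooling configuration concrete, the following minimal sketch traces the (frames, height, width) size of a 16 × 112 × 112 input clip through the five pooling layers described above. It rests on assumptions that are not stated in this section: the convolutions are taken to be padded so that they preserve size, pooling is taken to use no padding, and the rounding rule for odd sizes is exposed as a hypothetical `ceil_mode` flag because implementations differ on it. The function names are illustrative only.

```python
import math


def pool_out(size, kernel, stride, ceil_mode=False):
    """Output length of one pooled dimension, assuming no padding."""
    span = (size - kernel) / stride
    return (math.ceil(span) if ceil_mode else math.floor(span)) + 1


def c3d_pool_shapes(clip=(16, 112, 112), ceil_mode=False):
    """Trace (frames, height, width) after each of the five pooling layers.

    Assumption: every 3 x 3 x 3 convolution is padded so that it leaves
    the size unchanged, so only the pooling layers shrink the signal.
    """
    # pool1 is 1 x 2 x 2 (keeps all frames); pool2..pool5 are 2 x 2 x 2.
    pools = [((1, 2, 2), (1, 2, 2))] + [((2, 2, 2), (2, 2, 2))] * 4
    shapes = []
    shape = clip
    for kernel, stride in pools:
        shape = tuple(
            pool_out(s, k, st, ceil_mode)
            for s, k, st in zip(shape, kernel, stride)
        )
        shapes.append(shape)
    return shapes


if __name__ == "__main__":
    for i, shape in enumerate(c3d_pool_shapes(), start=1):
        print(f"pool{i}: {shape}")
```

Under these assumptions pool1 leaves all 16 frames intact while halving the spatial size, and the temporal dimension is only reduced from pool2 onward, collapsing to a single frame at pool5.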

Dataset: To learn spatiotemporal features, we train our C3D on the Sports-1M dataset [18], which is currently the largest video classification benchmark. The dataset consists of 1.1 million sports videos. Each video belongs to one of 487 sports categories. Compared with UCF101, Sports-1M has 5 times the number of categories and 100 times the number of videos.

Training: Training is done on the Sports-1M train split. As Sports-1M has many long videos, we randomly extract five 2-second long clips from every training video. Clips are resized to have a frame size of 128 × 171. During training, we randomly crop input clips into 16 × 112 × 112 crops for spatial and temporal jittering. We also horizontally flip them with 50% probability. Training is done by SGD with a mini-batch size of 30 examples. The initial learning rate is 0.003, and is divided by 2 every 150K iterations. The optimization is stopped at 1.9M iterations (about 13 epochs). Besides the C3D net trained from scratch, we also experiment with a C3D net fine-tuned from the model pre-trained on I380K.
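The step schedule and the jittering described above can be sketched as follows. This is an illustration under stated assumptions, not the training code: `learning_rate` and `sample_crop` are hypothetical helper names, and `num_frames` (the number of frames in a 2-second clip) is a free parameter because the frame rate is not given in this section. Only the standard library is used.

```python
import random


def learning_rate(iteration, base_lr=0.003, step=150_000):
    """Step schedule: start at base_lr and halve it every `step` iterations."""
    return base_lr / (2 ** (iteration // step))


def sample_crop(num_frames, height=128, width=171,
                crop=(16, 112, 112), rng=random):
    """Pick a random 16 x 112 x 112 crop position and a flip decision.

    Returns (first_frame, top, left, flip). `num_frames` is an assumed
    input: the frame count of the resized 128 x 171 clip.
    """
    t, h, w = crop
    first_frame = rng.randint(0, num_frames - t)  # temporal jittering
    top = rng.randint(0, height - h)              # spatial jittering
    left = rng.randint(0, width - w)
    flip = rng.random() < 0.5                     # horizontal flip, p = 0.5
    return first_frame, top, left, flip


if __name__ == "__main__":
    for it in (0, 150_000, 300_000, 1_899_999):
        print(it, learning_rate(it))
    print(sample_crop(num_frames=48, rng=random.Random(0)))
```

With these settings the rate is halved 12 times before training stops at 1.9M iterations.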

Sports-1M classification results: Table 2 presents the results of our C3D networks compared with DeepVideo [18] and Convolution pooling [29]. We use only a single center crop per clip, and pass it through the network to make the clip prediction. For video predictions, we average the clip predictions of 10 clips which are randomly extracted from the video. It is worth noting some setting differences between the compared methods. DeepVideo and C3D use short clips while Convolution pooling [29] uses much longer clips. DeepVideo uses more crops: 4 crops per clip and 80 crops per video compared with 1 and 10 used by C3D, respectively. The C3D network trained from scratch yields a video top-5 accuracy of 84.4% and the one fine-tuned from the I380K pre-trained model yields 85.5%. Both C3D networks outperform DeepVideo’s networks. C3D is still 5.6% below the method of [29]. However, this method uses convolution pooling of deep image features on long clips of 120 frames, thus it is not directly comparable to C3D and DeepVideo, which operate on much shorter clips. We note that the difference in top-1 accuracy for clips and videos of this method is small (1.6%) as it already uses 120-frame clips as inputs. In practice, convolution pooling or more sophisticated aggregation schemes [29] can be applied on top of C3D features to improve video hit performance.
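The video-level prediction described above, averaging the predictions of the sampled clips and then ranking classes, can be sketched as below. This is a minimal illustration: `video_prediction` is a hypothetical name, and each clip prediction is assumed to be a list of per-class softmax probabilities. The toy example uses 3 clips and 3 classes rather than 10 clips and 487 classes.

```python
def video_prediction(clip_probs, k=5):
    """Average per-clip class probabilities and return the top-k classes.

    clip_probs: list of per-clip probability lists, all of equal length
    (assumed to be softmax outputs, one list per sampled clip).
    Returns (mean_probs, top_k_class_indices).
    """
    n = len(clip_probs)
    num_classes = len(clip_probs[0])
    mean = [sum(p[c] for p in clip_probs) / n for c in range(num_classes)]
    ranked = sorted(range(num_classes), key=lambda c: mean[c], reverse=True)
    return mean, ranked[:k]


if __name__ == "__main__":
    clips = [
        [0.7, 0.2, 0.1],
        [0.1, 0.6, 0.3],
        [0.6, 0.3, 0.1],
    ]
    mean, top2 = video_prediction(clips, k=2)
    print(mean, top2)
```

A video counts as a top-5 hit when its ground-truth class index appears in the returned list for `k=5`.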

C3D video descriptor: After training, C3D can be used

as a feature extractor for other video analysis tasks. To