In the above equation, f(x) could be for example the discriminant function of a classifier, or the output of a

regression predictor.

A kernel is local when K(x, x

i

) > ρ is true only for x in some connected region around x

i

(for some

threshold ρ). The size of that region can usually be controlled by a hyper-parameter of the kernel function.

An example of local kernel is the Gaussian kernel K(x, x

i

) = e

−||x−x

i

||

2

/σ

2

, where σ controls the size of

the region around x

i

. We can see the Gaussian kernel as computing a soft conjunction, because it can be

written as a product of one-dimensional conditions: K(u, v) =

Q

j

e

−(u

j

−v

j

)

2

/σ

2

. If |u

j

− v

j

|/σ is small

for all dimensions j, then the pattern matches and K(u, v) is large. If |u

j

− v

j

|/σ is large for a single j,

then there is no match and K(u, v) is small.

Well-known examples of kernel machines include Support Vector Machines (SVMs) (Boser, Guyon, &

Vapnik, 1992; Cortes & Vapnik, 1995) and Gaussian processes (Williams & Rasmussen, 1996)

3

for classifi-

cation and regression, but also classical non-parametric learning algorithms for classification, regression and

density estimation, such as the k-nearest neighbor algorithm, Nadaraya-Watson or Parzen windows density

and regression estimators, etc. Below, we discuss manifold learning algorithms such as Isomap and LLE that

can also be seen as local kernel machines, as well as related semi-supervised learning algorithms also based

on the construction of a neighborhood graph (with one node per example and arcs between neighboring

examples).

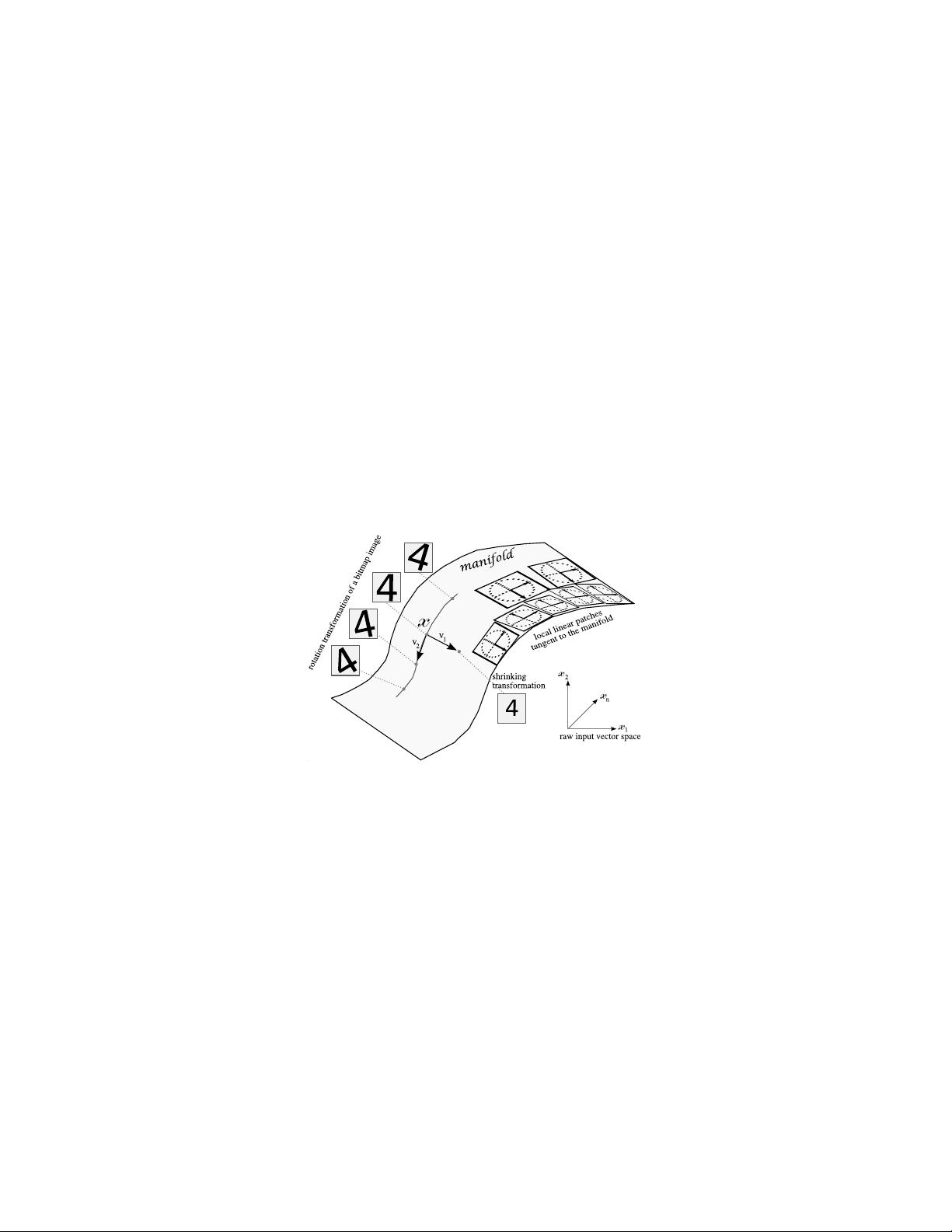

Kernel machines with a local kernel yield generalization by exploiting what could be called the smooth-

ness prior: the assumption that the target function is smooth or can be well approximated with a smooth

function. For example, in supervised learning, if we have the training example (x

i

, y

i

), then it makes sense

to construct a predictor f (x) which will output something close to y

i

when x is close to x

i

. Note how this

prior requires defining a notion of proximity in input space. This is a useful prior, but one of the claims

made in Bengio, Delalleau, and Le Roux (2006) and Bengio and LeCun (2007) is that such a prior is often

insufficient to generalize when the target function is highly-varying in input space.

The limitations of a fixed generic kernel such as the Gaussian kernel have motivated a lot of research in

designing kernels based on prior knowledge about the task (Jaakkola & Haussler, 1998; Sch¨olkopf, Mika,

Burges, Knirsch, M¨uller, R¨atsch, & Smola, 1999b; G¨artner, 2003; Cortes, Haffner, & Mohri, 2004). How-

ever, if we lack sufficient prior knowledge for designing an appropriate kernel, can we learn it? This question

also motivated much research (Lanckriet, Cristianini, Bartlett, El Gahoui, & Jordan, 2002; Wang & Chan,

2002; Cristianini, Shawe-Taylor, Elisseeff, & Kandola, 2002), and deep architectures can be viewed as a

promising development in this direction. It has been shown that a Gaussian Process kernel machine can

be improved using a Deep Belief Network to learn a feature space (Salakhutdinov & Hinton, 2008): after

training the Deep Belief Network, its parameters are used to initialize a deterministic non-linear transfor-

mation (a multi-layer neural network) that computes a feature vector (a new feature space for the data), and

that transformation can be tuned to minimize the prediction error made by the Gaussian process, using a

gradient-based optimization. The feature space can be seen as a learned representation of the data. Good

representations bring close to each other examples which share abstract characteristics that are relevant fac-

tors of variation of the data distribution. Learning algorithms for deep architectures can be seen as ways to

learn a good feature space for kernel machines.

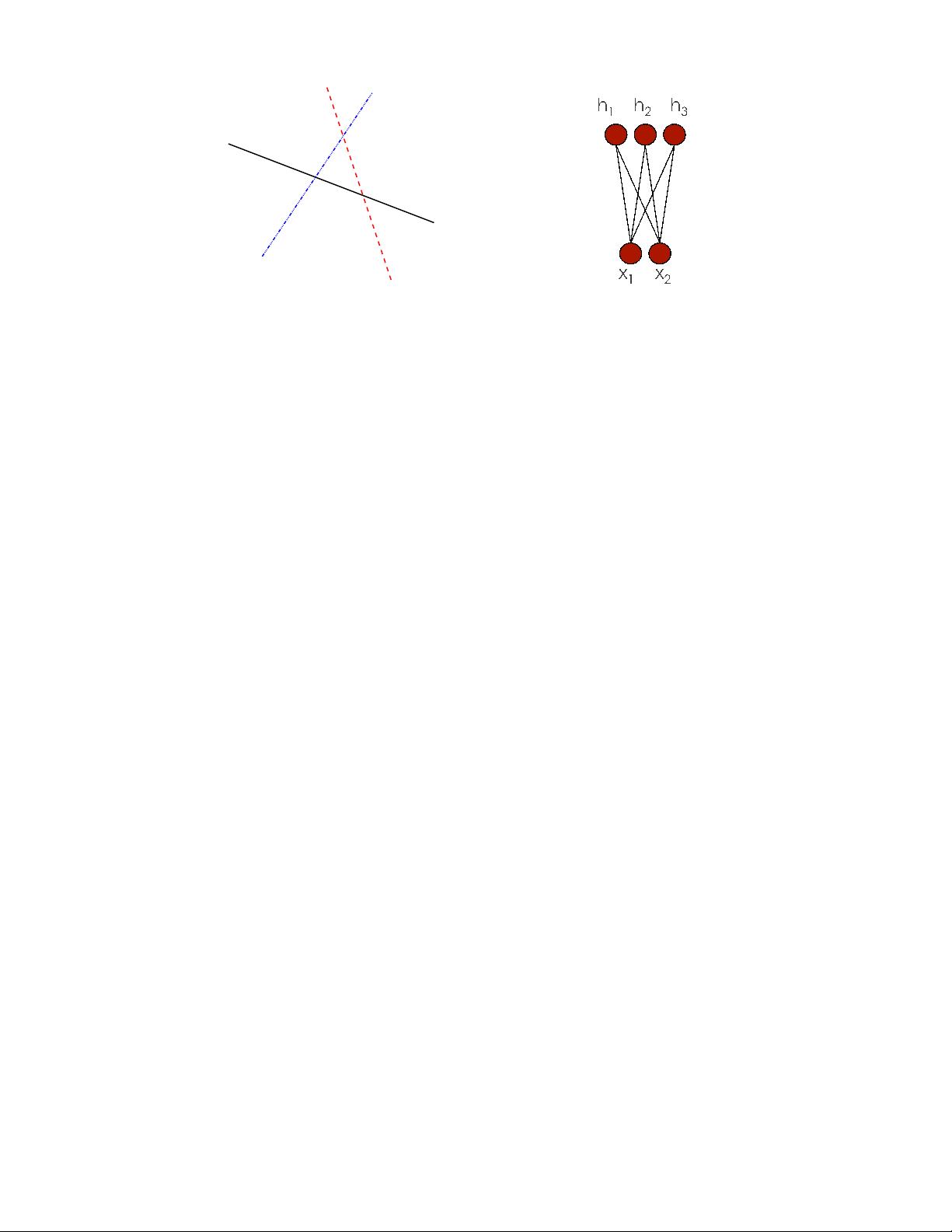

Consider one direction v in which a target function f (what the learner should ideally capture) goes

up and down (i.e. as α increases, f (x + αv) − b crosses 0, becomes positive, then negative, positive,

then negative, etc.), in a series of “bumps”. Following Schmitt (2002), Bengio et al. (2006), Bengio and

LeCun (2007) show that for kernel machines with a Gaussian kernel, the required number of examples

grows linearly with the number of bumps in the target function to be learned. They also show that for a

maximally varying function such as the parity function, the number of examples necessary to achieve some

error rate with a Gaussian kernel machine is exponential in the input dimension. For a learner that only relies

on the prior that the target function is locally smooth (e.g. Gaussian kernel machines), learning a function

with many sign changes in one direction is fundamentally difficult (requiring a large VC-dimension, and a

3

In the Gaussian Process case, as in kernel regression, f(x) in eq. 2 is the conditional expectation of the target variable Y to predict,

given the input x.

12