FANG AND LI: FACE RECOGNITION BY EXPLOITING LOCAL GABOR FEATURES WITH MASR 2607

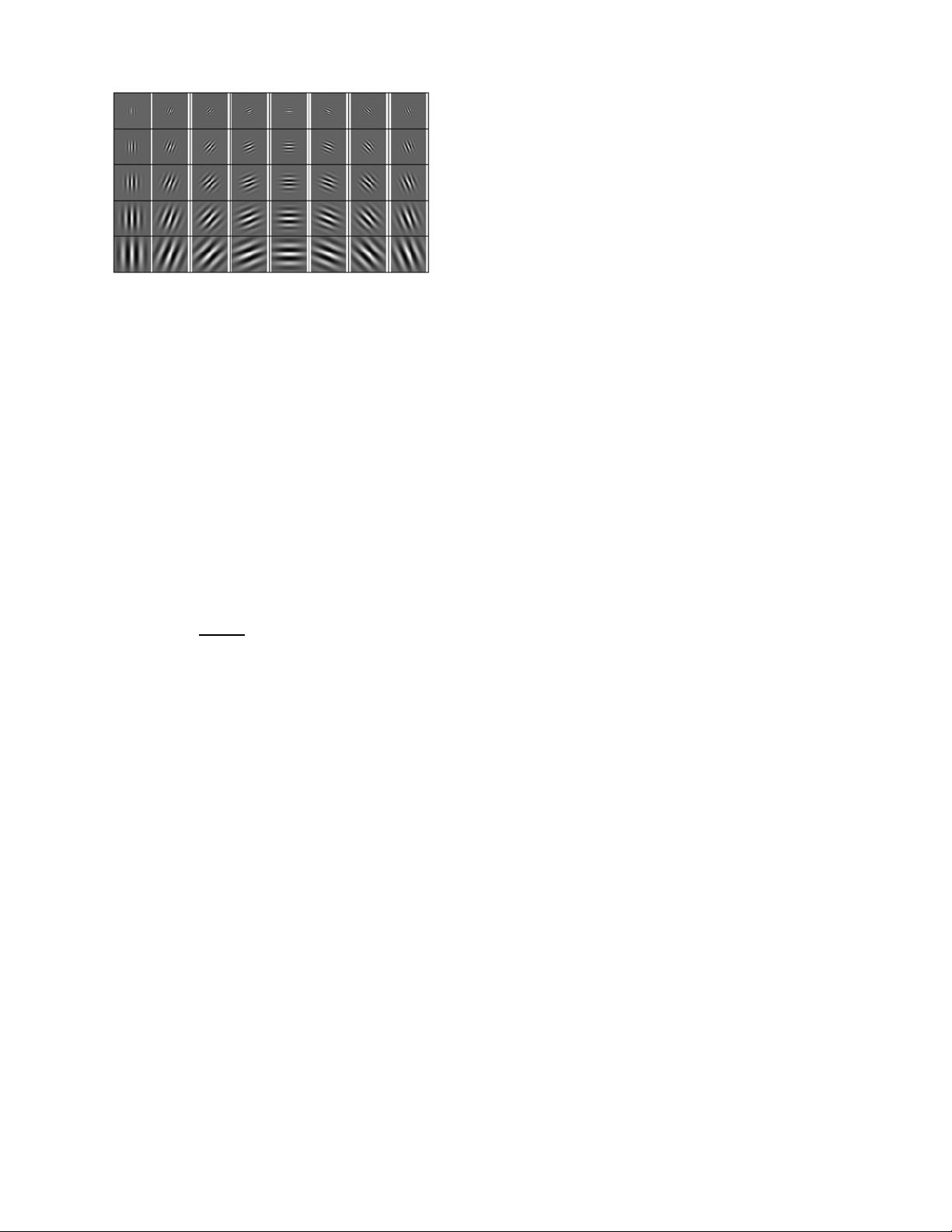

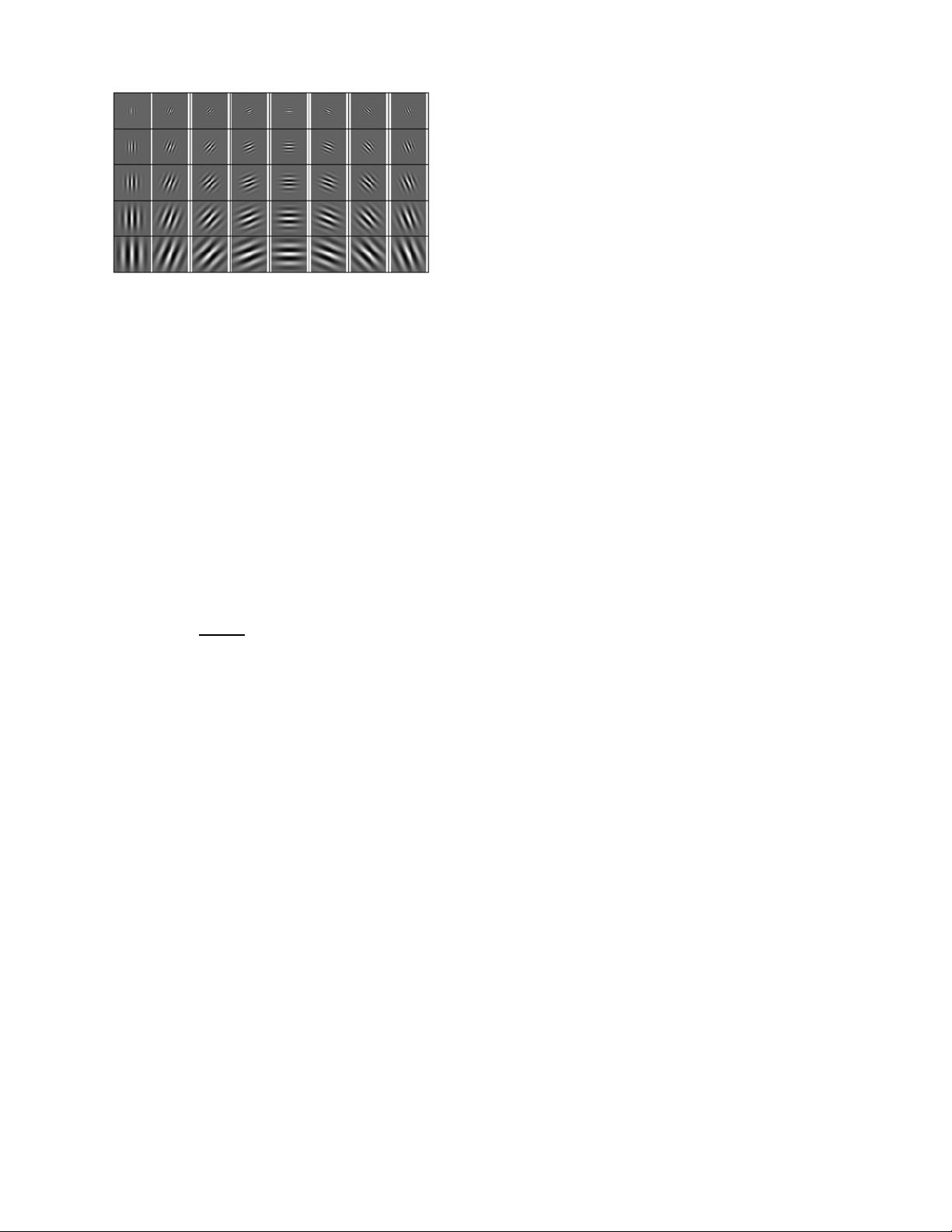

Fig. 1. Gabor wavelet with five scales and eight orientations.

sample $y$ based on the minimum representation error criterion

$$\hat{c} = \mathrm{SRC}(y) = \arg\min_{c} \|y - \hat{y}_c\|_2 = \arg\min_{c} \|y - A\,\delta_c(\hat{x})\|_2 \tag{7}$$

where $\delta_c(\hat{x})$ is a vector operator that preserves the coefficients of $\hat{x}$ corresponding to class $c$ and sets all other coefficients to zero.
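The decision rule in (7) can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `src_classify`, the `labels` array (one class label per dictionary atom), and the toy data are hypothetical and do not come from the paper, and the sparse code `x_hat` is taken as already computed.

```python
import numpy as np

def src_classify(y, A, x_hat, labels):
    """Return the class whose atoms give the smallest residual, as in (7)."""
    labels = np.asarray(labels)
    best_class, best_residual = None, np.inf
    for c in np.unique(labels):
        # Class-selection operator: keep coefficients of class c, zero the rest.
        x_c = np.where(labels == c, x_hat, 0.0)
        residual = np.linalg.norm(y - A @ x_c)
        if residual < best_residual:
            best_class, best_residual = c, residual
    return best_class

# Toy data (hypothetical): four atoms as columns, two atoms per class.
A = np.array([[1.0, 0.0, 0.0, 1.0],
              [0.0, 1.0, 0.0, 1.0],
              [0.0, 0.0, 1.0, 0.0]])
labels = [0, 0, 1, 1]
x_hat = np.array([0.9, 0.8, 0.1, 0.0])
y = A @ x_hat
predicted = src_classify(y, A, x_hat, labels)
```

In this toy case most of the coefficient energy lies on the class-0 atoms, so the class-0 residual is the smaller one.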

B. Gabor Wavelet

Gabor wavelet filters are defined as follows [10]:

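$$\psi_{o,s}(z) = \frac{\|k_{o,s}\|^2}{\sigma^2} \cdot \exp\!\left(-\frac{\|k_{o,s}\|^2 \|z\|^2}{2\sigma^2}\right) \cdot \left[\exp(i k_{o,s} z) - \exp\!\left(-\frac{\sigma^2}{2}\right)\right] \tag{8}$$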

where $o$ and $s$ represent the orientation and scale of the Gabor filters, respectively, $z = (x, y)$ denotes the pixel, and $\sigma$ is the ratio of the Gaussian window width to the wavelength. The wave vector $k_{o,s}$ is defined as

$$k_{o,s} = k_s \cdot \exp(i\phi_o) \tag{9}$$

where $k_s = k_{\max}/f^s$ and $\phi_o = (\pi \cdot o)/8$. $k_{\max}$ is the maximum frequency and $f$ is the spacing factor between kernels in the frequency domain.
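As a rough illustration of (8) and (9), the following sketch samples the kernels on a pixel grid. The values `k_max = pi/2`, `f = sqrt(2)`, `sigma = 2*pi`, and the 31 x 31 support are assumptions (they are commonly used in the Gabor face recognition literature but are not stated in this section), and the function name `gabor_kernel` is hypothetical.

```python
import numpy as np

def gabor_kernel(o, s, size=31, k_max=np.pi / 2, f=np.sqrt(2), sigma=2 * np.pi):
    """Sample psi_{o,s}(z) from (8) on a size x size grid centered at z = 0."""
    # Wave vector (9), stored as a complex number k_s * exp(i * phi_o).
    k = (k_max / f**s) * np.exp(1j * np.pi * o / 8)
    half = size // 2
    yy, xx = np.mgrid[-half:half + 1, -half:half + 1]
    k_sq = np.abs(k) ** 2
    z_sq = xx**2 + yy**2
    envelope = (k_sq / sigma**2) * np.exp(-k_sq * z_sq / (2 * sigma**2))
    # Inner product k . z with k = (Re k, Im k) and z = (x, y);
    # the subtracted constant removes the DC response.
    carrier = np.exp(1j * (k.real * xx + k.imag * yy)) - np.exp(-sigma**2 / 2)
    return envelope * carrier

# Five scales and eight orientations, as in Fig. 1.
bank = np.stack([gabor_kernel(o, s) for s in range(5) for o in range(8)])
```

The resulting `bank` holds 40 complex kernels; the real and imaginary parts correspond to the even and odd components of each wavelet.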

Different Gabor wavelets are obtained by varying the scale and the orientation; S and O denote the numbers of scales and orientations, respectively. Fig. 1 shows the Gabor wavelets with five scales and eight orientations. As can be observed, the Gabor wavelets respond to edge and bar details at different orientations and capture frequency information at different scales. Therefore, the Gabor wavelet can extract more details in meaningful local regions of the face (e.g., nose, eyes, and mouth), which are very useful for recognition. The convolution of an input face image with the Gabor wavelets creates O × S magnitude images and O × S phase images. Since the magnitude information contains the variation of local energy, this paper selects only the magnitude images as the Gabor features. In addition, to utilize the Gabor local information more effectively [8], [13], [14], we partition each feature image into a set of local regions.
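The magnitude extraction and the partition into local regions can be sketched as follows. Several simplifications are assumed here and are not taken from the paper: the convolution is circular (computed with the FFT, so the output is shifted by the kernel offset), the regions form a regular non-overlapping grid, and the names `gabor_magnitude` and `partition` as well as the 4 x 4 grid are hypothetical. A random complex array stands in for a real Gabor kernel.

```python
import numpy as np

def gabor_magnitude(image, kernel):
    """Magnitude of the circular convolution of an image with a complex kernel."""
    shape = image.shape
    # Zero-pad the kernel to the image size and multiply in the frequency domain.
    response = np.fft.ifft2(np.fft.fft2(image, s=shape) * np.fft.fft2(kernel, s=shape))
    return np.abs(response)  # the phase, np.angle(response), is discarded

def partition(feature, rows, cols):
    """Split a 2-D feature image into rows x cols non-overlapping regions."""
    h, w = feature.shape
    bh, bw = h // rows, w // cols
    return [feature[r * bh:(r + 1) * bh, c * bw:(c + 1) * bw]
            for r in range(rows) for c in range(cols)]

rng = np.random.default_rng(0)
image = rng.random((64, 64))                                              # stand-in face image
kernel = rng.standard_normal((9, 9)) + 1j * rng.standard_normal((9, 9))  # stand-in kernel
magnitude = gabor_magnitude(image, kernel)
regions = partition(magnitude, rows=4, cols=4)
```

Repeating this for all O × S kernels would give one set of local regions per scale and orientation.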

III. PROPOSED METHOD FOR LOCAL GABOR-FEATURE-BASED FACE RECOGNITION

A. Multitask Sparse Representation Model for Gabor Features

Suppose we have a training dictionary $A$ and a test sample $y$. As described above, the Gabor wavelet generates $O \times S$ feature dictionaries $\{A_1, \ldots, A_{O\times S}\}$ and test samples $\{y_1, \ldots, y_{O\times S}\}$ of different orientations and scales. We arrange all test samples and atoms of the training dictionaries into column vectors and denote their sparse coefficients as a matrix $M = [x_1, \ldots, x_{O\times S}] \in \mathbb{R}^{N\times(O\times S)}$, where $N$ stands for the dimension of each sparse coefficient vector (corresponding to the number of atoms in the dictionary $A$). The sparse matrix can also be represented by its row vectors as $M = [x^1; \ldots; x^j; \ldots; x^P]$, where $x^j$ is the $j$th row of the matrix $M$ (so that $P = N$). Note that, for simplicity, we here utilize only the whole image as the feature. In a later section, we will discuss how to partition the image into regions and utilize the SRW strategy to fuse the results of the regions.

After the Gabor multiscale-orientation dictionaries $\{A_1, \ldots, A_{O\times S}\}$ and test samples $\{y_1, \ldots, y_{O\times S}\}$ are obtained, we aim to utilize the information from different scales and orientations to make a single decision for the recognition. Based on the SRC model, one simple way is to rewrite (3) into a multitask sparse representation problem (Fig. 2)

$$\{\hat{x}_i\}_{i=1}^{O\times S} = \arg\min_{\{x_i\}} \sum_{i=1}^{O\times S} \|y_i - A_i x_i\|_2 \quad \text{s.t.} \quad \|x_i\|_0 \le K \quad \forall\, 1 \le i \le (O \times S). \tag{10}$$
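Because the tasks in (10) are decoupled, each one can be handled by any K-sparse solver. The sketch below uses a plain orthogonal matching pursuit (OMP) as one possible greedy solver; this choice is an assumption, as are the function names `omp` and `separate_tasks` and the toy dictionaries, and the atoms are assumed to have unit norm.

```python
import numpy as np

def omp(A, y, K):
    """Greedy approximation of min ||y - A x||_2 subject to ||x||_0 <= K."""
    x = np.zeros(A.shape[1])
    residual, support = y.astype(float), []
    for _ in range(K):
        # Select the atom most correlated with the current residual.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j in support:  # no new atom reduces the residual; stop early
            break
        support.append(j)
        # Refit all selected coefficients by least squares.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        x[:] = 0.0
        x[support] = coef
        residual = y - A[:, support] @ coef
    return x

def separate_tasks(dictionaries, samples, K):
    """Solve each task of (10) independently; column i is the code of task i."""
    return np.column_stack([omp(A_i, y_i, K) for A_i, y_i in zip(dictionaries, samples)])

# Toy data (hypothetical): two tasks whose true codes use different atoms.
A1 = np.eye(6)
A2 = np.eye(6)[:, ::-1]
X_true = np.zeros((6, 2))
X_true[[1, 4], 0] = [2.0, -1.0]
X_true[[0, 3], 1] = [1.5, 0.5]
samples = [A1 @ X_true[:, 0], A2 @ X_true[:, 1]]
M_sep = separate_tasks([A1, A2], samples, K=2)
```

Each column of `M_sep` is 2-sparse, but the two columns select different rows, which is exactly the lack of coupling between tasks.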

However, this formulation pursues the sparse coefficient $\hat{x}_i$ for each task separately and thus does not consider the relationship among the different tasks (orientations and scales). To combine the information among the Gabor orientations and scales, we can use a joint sparse assumption [34], [35] that the sparse coefficients of different tasks have the same sparse pattern. That is, for different tasks, the positions of the nonzero coefficients in all the sparse vectors $x_1, \ldots, x_{O\times S}$ are identical, while the coefficient values may vary. Under this assumption, the nonzero coefficients in $M$ should lie on the same rows, and a joint sparse regularization $\|\cdot\|_{\mathrm{row},0}$ can be placed on $M$ to select a small number of representative nonzero rows

$$\|M\|_{\mathrm{row},0} = \left\| \left[ \|x^1\|_2; \ldots; \|x^j\|_2; \ldots; \|x^P\|_2 \right] \right\|_0 \tag{11}$$

where $x^j$ is the $j$th row of the sparse coefficient matrix $M$.
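The quantity in (11) simply counts the rows of $M$ that contain at least one nonzero entry. A minimal sketch, where the function name `row_l0_norm` and the tolerance `tol` are hypothetical choices:

```python
import numpy as np

def row_l0_norm(M, tol=1e-12):
    """Number of rows of M with nonzero l2 norm, as in (11)."""
    row_energy = np.linalg.norm(M, axis=1)  # l2 norm of each row
    return int(np.count_nonzero(row_energy > tol))

# Toy matrix: four atoms (rows), three tasks (columns); rows 1 and 3 are active.
M = np.array([[0.0,  0.0, 0.0],
              [1.0, -2.0, 0.5],
              [0.0,  0.0, 0.0],
              [0.0,  3.0, 0.0]])
n_active_rows = row_l0_norm(M)
```

Here the value is 2, although the matrix has four nonzero entries in total.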

Then (10) can be rewritten as

$$\hat{M} = \arg\min_{M} \sum_{i=1}^{O\times S} \|y_i - A_i x_i\|_2 \quad \text{s.t.} \quad \|M\|_{\mathrm{row},0} \le K. \tag{12}$$

In [34] and [35], the application of the joint sparse prior to multitask problems is termed the MJSR model.
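One way to approximate (12) is a greedy, simultaneous-OMP-style procedure that selects one shared atom index per iteration. This section does not state which solver is used, so the sketch below is only an assumed illustration; the function name `multitask_somp` and the toy data are hypothetical, and every dictionary is assumed to have the same number of unit-norm atoms.

```python
import numpy as np

def multitask_somp(dictionaries, samples, K):
    """Greedy sketch for (12): all tasks share one support of at most K rows."""
    n_atoms = dictionaries[0].shape[1]
    residuals = [y.astype(float) for y in samples]
    support = []
    M = np.zeros((n_atoms, len(samples)))
    for _ in range(K):
        # Score each atom index by its correlation energy summed over tasks.
        corr = np.stack([A.T @ r for A, r in zip(dictionaries, residuals)], axis=1)
        score = np.linalg.norm(corr, axis=1)
        if support:
            score[support] = -np.inf  # never reselect an index
        support.append(int(np.argmax(score)))
        # Refit each task on the shared support with its own dictionary.
        for i, (A, y) in enumerate(zip(dictionaries, samples)):
            coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
            M[:, i] = 0.0
            M[support, i] = coef
            residuals[i] = y - A[:, support] @ coef
    return M

# Toy data (hypothetical): two tasks sharing active rows 1 and 4.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((6, 6)))  # orthonormal second dictionary
dicts = [np.eye(6), Q]
M_true = np.zeros((6, 2))
M_true[1] = [2.0, -1.5]
M_true[4] = [-1.0, 0.5]
ys = [A @ M_true[:, i] for i, A in enumerate(dicts)]
M_hat = multitask_somp(dicts, ys, K=2)
```

With orthonormal toy dictionaries the shared support is recovered exactly; with correlated atoms a greedy method of this kind gives only an approximation.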

B. Multitask Adaptive Sparse Representation

The MJSR model explained above can be directly used to exploit the Gabor information of different scales and orientations by enforcing the same sparsity pattern among them.