DRBD Features


the write cache in situations it deems necessary, as in activity log [107] updates or enforcement

of implicit write-after-write dependencies. This means additional reliability even in the face of

power failure.

It is important to understand that DRBD can use disk flushes only when layered on top of backing

devices that support them. Most reasonably recent kernels support disk flushes for most SCSI

and SATA devices. Linux software RAID (md) supports disk flushes for RAID-1 provided that all

component devices support them too. The same is true for device-mapper devices (LVM2, dm-

raid, multipath).

Controllers with battery-backed write cache (BBWC) use a battery to back up their volatile

storage. On such devices, when power is restored after an outage, the controller flushes all

pending writes out to disk from the battery-backed cache, ensuring that all writes committed

to the volatile cache are actually transferred to stable storage. When running DRBD on top

of such devices, it may be acceptable to disable disk flushes, thereby improving DRBD’s write

performance. See Section 6.13, “Disabling backing device flushes” [46] for details.
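The reasoning above can be illustrated with a small toy model. This is only a sketch and not DRBD code: the class CachedDisk and the helper survives_power_loss are hypothetical names introduced here, and the model assumes a cache that either loses its contents on power loss (volatile) or drains them to disk when power returns (battery-backed).

```python
class CachedDisk:
    """Toy model of a disk behind a controller write cache (illustrative only)."""

    def __init__(self, battery_backed=False):
        self.battery_backed = battery_backed
        self.cache = {}   # writes acknowledged but not yet on stable storage
        self.stable = {}  # contents of stable storage

    def write(self, block, data):
        # The write is acknowledged as soon as it reaches the cache.
        self.cache[block] = data

    def flush(self):
        """Force all cached writes out to stable storage (a disk flush)."""
        self.stable.update(self.cache)
        self.cache.clear()

    def power_cycle(self):
        """Simulate a power outage followed by power being restored."""
        if self.battery_backed:
            # The battery preserved the cache; drain it once power is back.
            self.flush()
        else:
            # A purely volatile cache loses everything that was not flushed.
            self.cache.clear()


def survives_power_loss(battery_backed, flush_after_write):
    """Return True if a single write is on stable storage after an outage."""
    disk = CachedDisk(battery_backed)
    disk.write(0, b"activity log update")
    if flush_after_write:
        disk.flush()
    disk.power_cycle()
    return disk.stable.get(0) == b"activity log update"
```

In this model, a write to a volatile cache survives an outage only if it was flushed first, whereas the battery-backed variant keeps the write even without a flush. That is the reason disabling flushes may be acceptable on BBWC controllers.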

2.11. Disk error handling strategies

If a hard drive that serves as a backing block device for DRBD fails on one of the nodes, DRBD

may either pass the I/O error on to the upper layer (usually the file system) or mask I/O

errors from upper layers.

Passing on I/O errors. If DRBD is configured to pass on I/O errors, any such errors occurring on

the lower-level device are transparently passed to upper I/O layers. Thus, it is left to upper layers

to deal with such errors (this may result in a file system being remounted read-only, for example).

This strategy does not ensure service continuity, and is hence not recommended for most users.

Masking I/O errors. If DRBD is configured to detach on lower-level I/O error, DRBD will do

so automatically upon occurrence of the first lower-level I/O error. The I/O error is masked

from upper layers while DRBD transparently fetches the affected block from the peer node, over

the network. From then onwards, DRBD is said to operate in diskless mode, and carries out all

subsequent I/O operations, read and write, on the peer node. Performance in this mode will be

reduced, but the service continues without interruption, and can be moved to the peer node in

a deliberate fashion at a convenient time.

See Section 6.10, “Configuring I/O error handling strategies” [42] for information on

configuring I/O error handling strategies for DRBD.
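The difference between the two strategies can be sketched with a toy model. This is an illustration under stated assumptions, not DRBD's implementation: ToyReplicatedDevice, LowerLevelIOError and the bad_blocks parameter are hypothetical, the strategy strings are labels chosen for this sketch, and local and peer storage are modeled as plain dictionaries.

```python
class LowerLevelIOError(Exception):
    """Raised when the toy device passes a local I/O error to its caller."""


class ToyReplicatedDevice:
    """Toy two-node replicated device; 'local' and 'peer' map block -> data."""

    def __init__(self, strategy, local, peer, bad_blocks=()):
        if strategy not in ("pass_on", "detach"):
            raise ValueError(f"unknown strategy: {strategy!r}")
        self.strategy = strategy
        self.local = local                # becomes None once detached
        self.peer = peer
        self.bad_blocks = set(bad_blocks) # blocks that fail on the local disk

    @property
    def diskless(self):
        return self.local is None

    def read(self, block):
        if not self.diskless:
            if block not in self.bad_blocks:
                return self.local[block]
            if self.strategy == "pass_on":
                # Passing on: the upper layer has to deal with the error.
                raise LowerLevelIOError(f"read error on block {block}")
            # Masking: detach from the local disk on the first error.
            self.local = None
        # Diskless mode: serve the block from the peer over the network.
        return self.peer[block]

    def write(self, block, data):
        # While attached, writes go to both nodes; when diskless, peer only.
        if not self.diskless:
            self.local[block] = data
        self.peer[block] = data
```

With the "detach" label, a read of a bad block returns the peer's copy and the device stays diskless afterwards; with "pass_on", the same read raises an error that the caller must handle.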

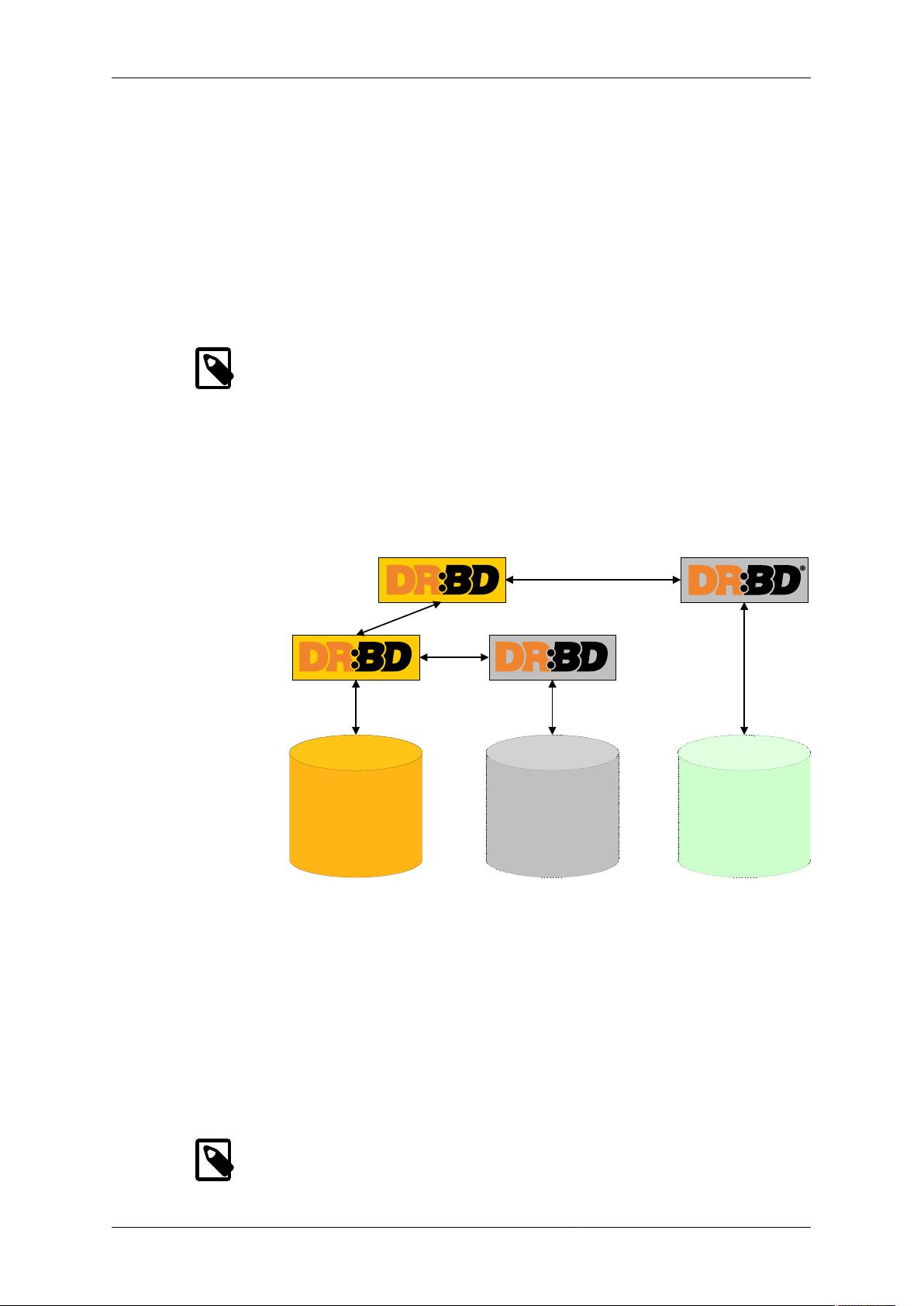

2.12. Strategies for dealing with outdated data

DRBD distinguishes between inconsistent and outdated data. Inconsistent data is data that cannot

be expected to be accessible and useful in any manner. The prime example for this is data on

a node that is currently the target of an on-going synchronization. Data on such a node is part

obsolete, part up to date, and impossible to identify as either. Thus, for example, if the device

holds a filesystem (as is commonly the case), that filesystem would not be expected to mount or

even pass an automatic filesystem check.

Outdated data, by contrast, is data on a secondary node that is consistent, but no longer in

sync with the primary node. This occurs upon any interruption of the replication link, whether

temporary or permanent. Data on an outdated, disconnected secondary node is expected to be

clean, but it reflects a state of the peer node some time past. In order to avoid services using

outdated data, DRBD disallows promoting a resource [3] that is in the outdated state.
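The promotion rule can be sketched as a tiny state model. This is a hedged illustration only: DataState, ToySecondary and PromotionRefused are hypothetical names, and the sketch models nothing beyond the single rule stated above, namely that a node holding outdated data may not be promoted.

```python
from enum import Enum


class DataState(Enum):
    UP_TO_DATE = "up to date"
    OUTDATED = "outdated"          # consistent, but behind the primary
    INCONSISTENT = "inconsistent"  # part old, part new (not modeled further)


class PromotionRefused(Exception):
    """Raised when the toy node refuses to become primary."""


class ToySecondary:
    """Toy secondary node that tracks the state of its data."""

    def __init__(self):
        self.state = DataState.UP_TO_DATE
        self.role = "secondary"

    def mark_outdated(self):
        # For example, after the replication link was interrupted.
        self.state = DataState.OUTDATED

    def promote(self):
        # Only the outdated rule from the text is modeled here.
        if self.state is DataState.OUTDATED:
            raise PromotionRefused("data is outdated; refusing promotion")
        self.role = "primary"
```

A node whose data is still up to date can be promoted in this model, while a node that has been marked outdated raises PromotionRefused and remains in the secondary role.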

DRBD has interfaces that allow an external application to outdate a secondary node as soon

as a network interruption occurs. DRBD will then refuse to switch the node to the primary

role, preventing applications from using the outdated data. A complete implementation of this

functionality exists for the Pacemaker cluster management framework [59] (where it uses