2168-7161 (c) 2018 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TCC.2018.2846620, IEEE

Transactions on Cloud Computing


depends on applied actions and firmware. Huang et al. [12]

investigated three commercial OpenFlow switches and pointed

out that control path delays and flow table designs affect

switching performance. Tai et al. [14] uncovered the source of forwarding latency caused by forwarding rule insertions on the data plane. Xu et al. [31] proposed two cost-optimized flow statistics collection schemes using wildcard-based requests, which could reduce the bandwidth overhead and switch processing delay. He et al. [8, 9] studied four SDN switches

and showed the latencies underlying the generation of request

messages and the execution of forwarding rules. Unlike these works, we analyze the benefits of adopting the switch buffer through an in-depth measurement study, which complements existing studies of SDN switches. Our analysis shows that the switch buffer can reduce the communication overhead between the switches and the controller.

The root cause of the communication overhead is the limited size of SDN flow tables. Rules in the flow tables must be updated to adapt to network dynamics, i.e., rules for inactive flows are evicted and replaced by rules for active flows. As a result, packets cannot always match a rule in the flow tables. Therefore, optimizing flow table utilization (e.g., caching more rules or updating rules quickly) can indirectly reduce the requests sent to the controller. Luo et al. [16] shrank the flow table size and

provided practical methods to achieve fast flow table updates.

Li et al. [17] proposed an efficient flow-driven rule caching

algorithm to optimize the SDN switch cache replacement.

CacheFlow [18] spliced long dependency chains to cache

smaller groups of rules while preserving the per-rule traffic

counts. FlowShadow [19] achieved fast packet processing and supported uninterrupted updates by caching microflows. Zhang et al. [32] proposed a delay-guaranteed approach called D³G to reduce the latency of chained services while obtaining fairness across all workloads, by designing a latency estimation algorithm and a feedback scheme. Yan et al. [29]

proposed a new rule caching scheme, as well as an adaptive

cache management method. The mechanism can reduce the cache miss rate by one order of magnitude and the control path bandwidth usage by half. Unlike these studies, this paper exploits the switch buffer to directly reduce the communication overhead. Buffers have been adopted to improve performance and provide QoS guarantees in legacy switches [20, 21]; however, they have received little attention in SDN.

III. PROBLEM AND EXPERIMENT DESCRIPTION

In this section, we first describe the problem and then show

the measurement methodology.

A. Problem Description

A flow consists of packets $\{p_1, p_2, \ldots, p_n\}$ arriving at times $\{t_1, t_2, \ldots, t_n\}$. If $p_1$ matches a rule in the flow table, it is forwarded at line rate. Otherwise, the switch generates a pkt_in message and sends it to the controller. After the controller decides how to forward the packet, it sends a pair of control operation messages (flow_mod and pkt_out) to the

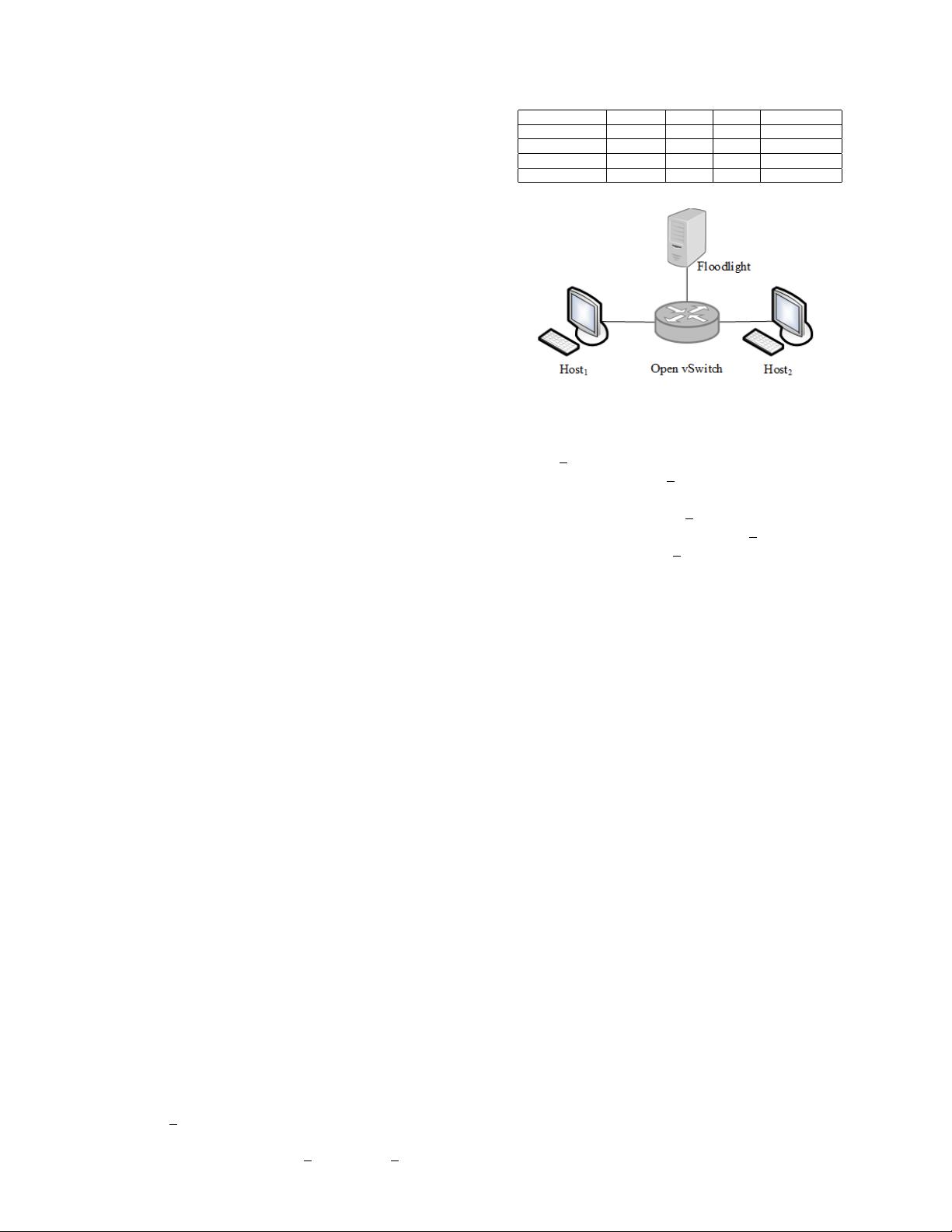

TABLE I: Configurations of Experimental Devices

| Device Name | CPU | Cores | RAM | NIC |
|---|---|---|---|---|
| Host$_1$ | 3.3 GHz | 4 | 4 GB | 1×100 Mbps |
| Host$_2$ | 3.3 GHz | 4 | 4 GB | 1×100 Mbps |
| Open vSwitch | 3.3 GHz | 4 | 4 GB | 3×100 Mbps |
| Floodlight | 3.3 GHz | 4 | 4 GB | 1×100 Mbps |

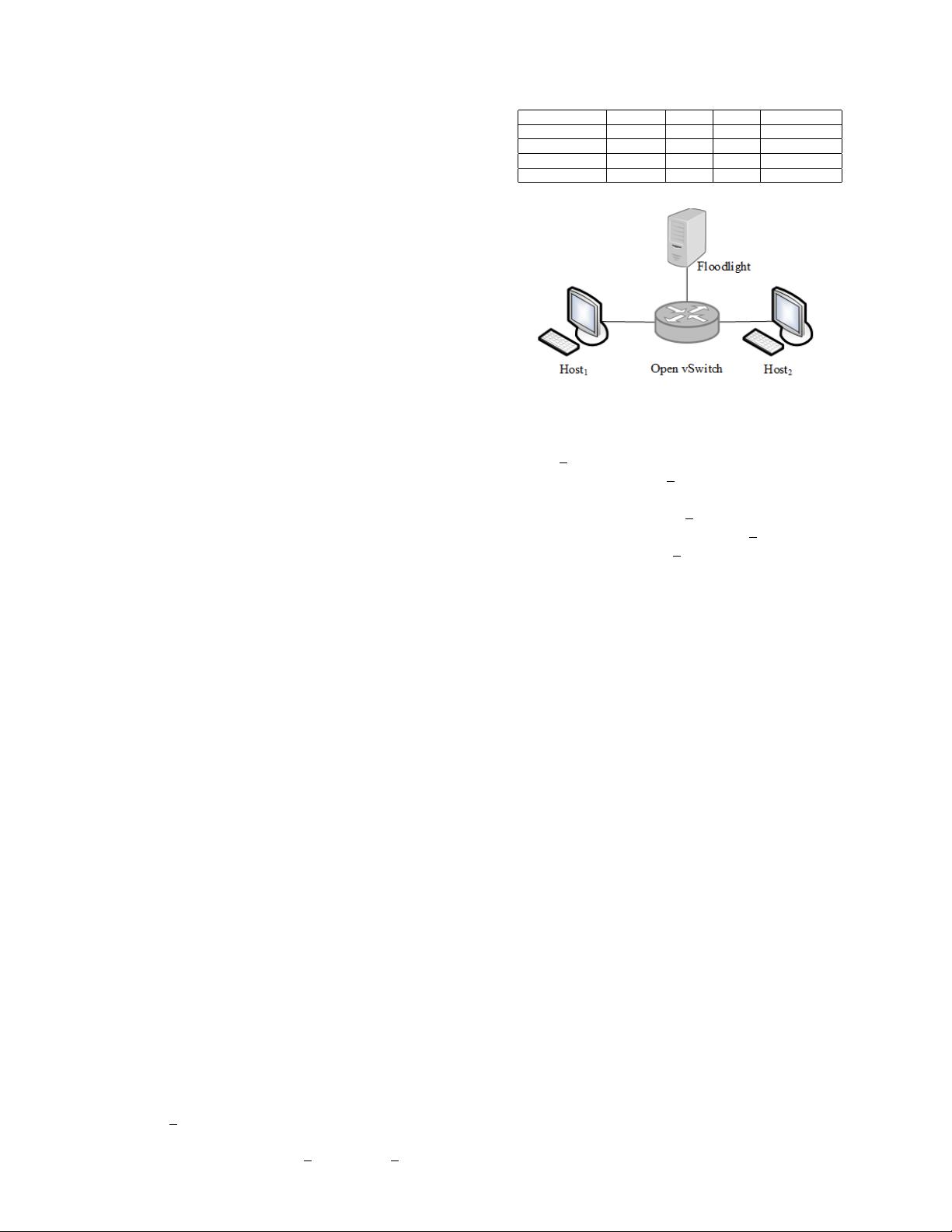

Fig. 1: Topology of the Experimental Platform.

switch: the flow_mod message carries the forwarding rule to be installed in the switch, and the pkt_out message instructs the switch to forward the mismatched packet directly through a specified interface. Let $t_e$ denote the time at which the flow_mod message takes effect. If $t_e > t_2$, $p_2$ will trigger another pkt_in message. In the worst case, if $t_e > t_n$, $n$ pkt_in messages will be triggered.
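The counting argument above can be made concrete with a minimal sketch. It assumes that the first packet of the flow misses the flow table and that every later packet arriving strictly before the rule takes effect also misses. The function name `count_pkt_in` and the sample timestamps are illustrative and are not taken from the measurement setup.

```python
from typing import Sequence


def count_pkt_in(arrivals: Sequence[float], t_e: float) -> int:
    """Count the pkt_in messages triggered by one flow.

    arrivals: arrival times t_1 <= t_2 <= ... <= t_n of the flow's packets.
    t_e: time at which the flow_mod rule takes effect (assumed > t_1).
    Assumption: the first packet misses the flow table, and every packet
    arriving strictly before t_e misses as well.
    """
    if not arrivals:
        return 0
    # p_1 always triggers a pkt_in; p_i (i >= 2) does so only if t_e > t_i.
    return 1 + sum(1 for t in arrivals[1:] if t < t_e)


# Hypothetical timestamps in milliseconds.
arrivals_ms = [0.0, 1.0, 2.0, 3.0, 4.0]
print(count_pkt_in(arrivals_ms, t_e=2.5))   # 3: rule takes effect between p_3 and p_4
print(count_pkt_in(arrivals_ms, t_e=10.0))  # 5: worst case, t_e > t_n
```

In this sketch, the number of request messages grows with the number of packets that arrive before the rule takes effect, which is the quantity the rest of this section aims to reduce.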

When a large number of packets fail to match any rule in the flow tables simultaneously, a large number of request messages will be sent to the controller, and the corresponding control operation messages will be sent back to the switch. This communication overhead needs to be reduced for the following three reasons.

1) The control path may share the same physical links with the data path. When these links carry heavy data traffic, control messages may be congested.

2) Even if bandwidth is reserved for control traffic or its priority is raised, the number of control messages still needs to be reduced to relieve the load on the centralized controller.

3) Concurrent switch activities, i.e., generating control request messages and handling control operation messages, increase the communication delay between the switch and the controller [9]. This is caused by competition for the limited resources of the switch.

It is therefore important to keep the control traffic at a low level.

B. Experiment Description

Fig. 1 shows our experimental setup. Open vSwitch (OVS) [22] is an open source OpenFlow virtual switch, and Floodlight [23] is an open source SDN controller. We run OVS and Floodlight on two separate commodity PCs. Table I shows the configurations of the experimental devices. Host$_1$ and Host$_2$ connect to OVS through 100 Mbps interfaces. We run pktgen [24] on Host$_1$ to generate traffic at rates from 5 Mbps to 100 Mbps with an Ethernet frame size of 1000 bytes. We run tcpdump [25] to capture traffic on the interfaces connected to the hosts and to the controller.
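To give a sense of scale for these traffic rates, the following sketch converts a sending rate into packets per second for 1000-byte frames. It is back-of-the-envelope arithmetic that assumes the rate counts frame bytes only (ignoring the Ethernet preamble and inter-frame gap) and that 1 Mbps equals $10^6$ bit/s. The helper name `packets_per_second` is hypothetical.

```python
def packets_per_second(rate_mbps: float, frame_bytes: int = 1000) -> float:
    """Approximate packet rate for a given sending rate and frame size.

    Assumption: 1 Mbps = 10**6 bit/s and the rate counts frame bytes only.
    """
    return rate_mbps * 1_000_000 / (frame_bytes * 8)


for rate in (5, 50, 100):
    print(f"{rate} Mbps -> {packets_per_second(rate):.0f} packets/s")
```

Under these assumptions, the lowest and highest rates correspond to roughly 625 and 12,500 packets per second. A hypothetical rule-installation delay of 1 ms would then span about 12 packet arrivals at the highest rate.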

In this paper, we utilize the switch buffer to reduce the communication overhead in the following two ways: (1) reduce
