94 IEEE TRANSACTIONS ON SIGNAL PROCESSING, VOL. 57, NO. 1, JANUARY 2009

employed for the fast point estimate of the hyperparameters $\boldsymbol{\alpha}$ and $\alpha_0$ in Fig. 1. This yields a computationally efficient multitask CS inference algorithm that extends previous research on Bayesian CS analysis [10], wherein the Bayesian inversion was performed one task at a time (single-task learning, i.e., $L = 1$).

In addition to a hierarchical Bayesian model of multitask CS and a fast inference algorithm, a modified sparse linear-regression model is developed, of interest for both the single-task and multitask CS settings. As discussed further below, this extension analytically integrates out the noise-variance term in the regression model, and it yields improved robustness over the previous formulation.

The remainder of the paper is structured as follows. In Section II we introduce a hierarchical Bayesian model for multitask CS that builds naturally upon previous research on Bayesian CS [10]; a fast sequential optimization algorithm based on an empirical Bayesian procedure is developed for inference. In Section III we propose a modified sparse linear-regression model by marginalizing the noise variance, and develop a fast inference algorithm as well. Example results on multiple datasets are presented in Section IV. A review of work related to multitask CS is provided in Section V, followed in Section VI by conclusions and a discussion of future work.

II. HIERARCHICAL MULTITASK CS MODELING

A. Bayesian Regression Formulation

Assume that $L$ sets of CS measurements are performed, with these multiple sensing tasks statistically interrelated, as defined precisely later. The $L$ sets of measurements are represented as $\{\mathbf{v}_i\}_{i=1}^{L}$, where $\mathbf{v}_i \in \mathbb{R}^{N_i}$, and in general each measurement vector $\mathbf{v}_i$ employs a different random projection matrix $\boldsymbol{\Phi}_i$, for $i = 1, \ldots, L$. This generalizes the formulation considered in [12]–[15], [17], wherein a single $\boldsymbol{\Phi}$ is employed across all the tasks. In the context of a regression analysis, we assume [10]

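$$\mathbf{v}_i = \boldsymbol{\Phi}_i \boldsymbol{\theta}_i + \boldsymbol{\epsilon}_i, \quad i = 1, \ldots, L \tag{2}$$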

where $\boldsymbol{\epsilon}_i$ is a residual error vector, modeled as $N_i$ i.i.d. draws of a zero-mean Gaussian random variable with unknown precision $\alpha_0$ (variance $1/\alpha_0$). The likelihood function for the parameters $\boldsymbol{\theta}_i$ and $\alpha_0$, based on the observed data $\mathbf{v}_i$, may therefore be expressed as

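$$p(\mathbf{v}_i \mid \boldsymbol{\theta}_i, \alpha_0) = \left(\frac{2\pi}{\alpha_0}\right)^{-N_i/2} \exp\left(-\frac{\alpha_0}{2}\,\|\mathbf{v}_i - \boldsymbol{\Phi}_i \boldsymbol{\theta}_i\|_2^2\right) \tag{3}$$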
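As a minimal sketch (not the authors' code), the logarithm of (3) for a single task can be evaluated with NumPy as below. The function name `log_likelihood`, the problem sizes, and the Gaussian projection scaled by $1/\sqrt{N_i}$ are illustrative assumptions; only the formula itself comes from (3). Assuming the noise is independent across tasks, the joint log-likelihood of all $L$ tasks for a common $\alpha_0$ is simply the sum of this quantity over $i$, each term with its own $\boldsymbol{\Phi}_i$ and $N_i$.

```python
import numpy as np


def log_likelihood(v, Phi, theta, alpha0):
    """Log of (3): Gaussian likelihood of one task's measurements.

    v      : (N_i,) measurement vector v_i
    Phi    : (N_i, M) random projection matrix Phi_i
    theta  : (M,) wavelet coefficients theta_i
    alpha0 : noise precision (variance 1/alpha0)
    """
    n = v.shape[0]
    resid = v - Phi @ theta
    # log[(2*pi/alpha0)^(-N_i/2)] - (alpha0/2) * ||v - Phi theta||^2
    return -0.5 * n * np.log(2.0 * np.pi / alpha0) - 0.5 * alpha0 * (resid @ resid)


# Usage with hypothetical sizes: one task with N_i = 6 measurements of M = 16 coefficients.
rng = np.random.default_rng(0)
M = 16
theta = rng.normal(size=M)
Phi = rng.normal(size=(6, M)) / np.sqrt(6)  # assumed Gaussian random projection
alpha0 = 100.0
v = Phi @ theta + rng.normal(scale=alpha0 ** -0.5, size=6)  # data generated as in (2)
print(log_likelihood(v, Phi, theta, alpha0))
```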

The parameters $\boldsymbol{\theta}_i$ (here, wavelet coefficients) characteristic of task $i$ are assumed to be drawn from a product of zero-mean Gaussian distributions that are shared by all tasks, and it is in this sense that the $L$ tasks are statistically related. Specifically, letting $\theta_{i,j}$ represent the $j$th wavelet (or scaling function) coefficient for CS task $i$, we have

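$$p(\boldsymbol{\theta}_i \mid \boldsymbol{\alpha}) = \prod_{j=1}^{M} \mathcal{N}(\theta_{i,j} \mid 0, \alpha_j^{-1}), \quad i = 1, \ldots, L \tag{4}$$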

where $\mathcal{N}(\cdot \mid 0, \alpha_j^{-1})$ is a zero-mean Gaussian density function with precision $\alpha_j$. It is important to note that the hyperparameters $\boldsymbol{\alpha} = \{\alpha_j\}_{j=1}^{M}$ are shared among all $L$ tasks, and therefore the data from all CS measurements $\{\mathbf{v}_i\}_{i=1}^{L}$ will contribute to learning the hyperparameters, offering the opportunity to adaptively borrow strength from the different measurements to a degree controlled by $\boldsymbol{\alpha}$.
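To make the borrowing of strength in (4) concrete, consider a deliberately simplified "oracle" case that is *not* the inference procedure of this paper (which integrates the coefficients out): suppose the coefficients $\boldsymbol{\theta}_i$ of all $L$ tasks were observed directly. Maximizing $\prod_{i=1}^{L} \mathcal{N}(\theta_{i,j} \mid 0, \alpha_j^{-1})$ with respect to $\alpha_j$ sets $L/(2\alpha_j) = \tfrac{1}{2}\sum_i \theta_{i,j}^2$, i.e., $\hat{\alpha}_j = L / \sum_{i=1}^{L} \theta_{i,j}^2$, so every task contributes to every shared precision. The function `oracle_shared_precision`, the small `eps` guard, and all numeric values below are hypothetical.

```python
import numpy as np


def oracle_shared_precision(Theta, eps=1e-12):
    """ML estimate of the shared precisions alpha_j in (4), assuming the
    coefficients of all L tasks are known (a hypothetical oracle setting).

    Theta : (L, M) array whose row i is theta_i.
    Returns alpha_hat : (M,), with alpha_hat[j] = L / sum_i theta_{i,j}^2.
    """
    L = Theta.shape[0]
    # eps avoids division by zero for a coefficient that is zero in every task
    return L / (np.sum(Theta ** 2, axis=0) + eps)


rng = np.random.default_rng(1)
M, L = 8, 1000
# Hypothetical true precisions: small alpha_j -> large coefficients,
# large alpha_j -> coefficients shrunk toward zero in every task.
alpha_true = np.array([0.5, 1.0, 2.0, 1e4, 1e4, 1e4, 1e4, 1e4])
# Each task draws theta_i from the same product of Gaussians, as in (4).
Theta = rng.normal(scale=alpha_true ** -0.5, size=(L, M))
alpha_hat = oracle_shared_precision(Theta)
print(np.round(alpha_hat / alpha_true, 2))  # ratios approach 1 as L grows
```

With only one task ($L = 1$) each $\hat{\alpha}_j$ rests on a single coefficient; pooling many related tasks is what makes the shared estimate stable.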

To promote sparsity over the weights $\boldsymbol{\theta}_i$, Gamma priors are placed on the hyperparameters $\boldsymbol{\alpha}$, and similarly on the noise precision $\alpha_0$:

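$$p(\boldsymbol{\alpha} \mid a, b) = \prod_{j=1}^{M} \mathrm{Ga}(\alpha_j \mid a, b) \tag{5}$$

$$p(\alpha_0 \mid c, d) = \mathrm{Ga}(\alpha_0 \mid c, d) \tag{6}$$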

It has been demonstrated [19] that an appropriate choice of the parameters $a$ and $b$ encourages a sparse representation for the coefficients in the vector $\boldsymbol{\theta}_i$; here this concept is extended to a multitask CS setting. Typically, when $a = b = \delta$, with $\delta$ a small constant, $\mathrm{Ga}(\alpha_j \mid a, b)$ has a large spike concentrated at zero and a heavy right tail. The spike corresponds to basis functions whose near-zero precision leaves the coefficients essentially unconstrained, so there is essentially no borrowing of information; such basis functions characterize components that are idiosyncratic to specific signals. At the other extreme, basis functions for which $\alpha_j$ is in the right tail have coefficients that are shrunk strongly to zero for all tasks, favoring sparseness while borrowing information about which basis functions are not important for any of the signals in the collection. For small $\delta$, there will be many such basis functions. As a default choice that avoids a subjective choice of $a$ and $b$, and that leads to computational simplifications, we set $a = b = 0$. For the Gamma prior on the noise precision $\alpha_0$, we also let $c = d = 0$ as a default choice. This choice corresponds to a commonly used improper prior expressing a priori ignorance about plausible values for the residual precision.$^2$
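The sparsity-promoting effect of (5) can be seen by integrating out a single precision. A standard result from the RVM literature [19] is that $\int \mathcal{N}(\theta \mid 0, \alpha^{-1})\,\mathrm{Ga}(\alpha \mid a, b)\,d\alpha$ is a Student-t density with $2a$ degrees of freedom and scale $\sqrt{b/a}$, whose tails are much heavier than a Gaussian's; as $a = b \to 0$ it approaches the improper density $p(\theta) \propto 1/|\theta|$ [19]. The sketch below checks the closed form numerically with SciPy, assuming $\mathrm{Ga}(\cdot \mid a, b)$ uses shape $a$ and rate $b$; the function names and the values $a = 1.5$, $b = 2$ are illustrative only (the paper's default $a = b = 0$ is improper and cannot be integrated this way).

```python
import numpy as np
from scipy import integrate, stats


def marginal_coefficient_density(theta, a, b):
    """p(theta | a, b) = integral of N(theta | 0, 1/alpha) * Ga(alpha | a, b) d alpha,
    evaluated by numerical quadrature. Assumes Ga uses shape a and rate b."""
    def integrand(alpha):
        # Gaussian with precision alpha, written without dividing by sqrt(alpha)
        gauss = np.sqrt(alpha / (2.0 * np.pi)) * np.exp(-0.5 * alpha * theta ** 2)
        gamma = stats.gamma.pdf(alpha, a, scale=1.0 / b)  # SciPy scale = 1 / rate
        return gauss * gamma

    value, _ = integrate.quad(integrand, 0.0, np.inf)
    return value


def student_t_density(theta, a, b):
    """Closed form: Student-t with 2a degrees of freedom and scale sqrt(b/a)."""
    return stats.t.pdf(theta, df=2.0 * a, loc=0.0, scale=np.sqrt(b / a))


a, b = 1.5, 2.0  # illustrative values, not the paper's defaults
for th in [0.0, 0.5, 3.0]:
    print(th, marginal_coefficient_density(th, a, b), student_t_density(th, a, b))
```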

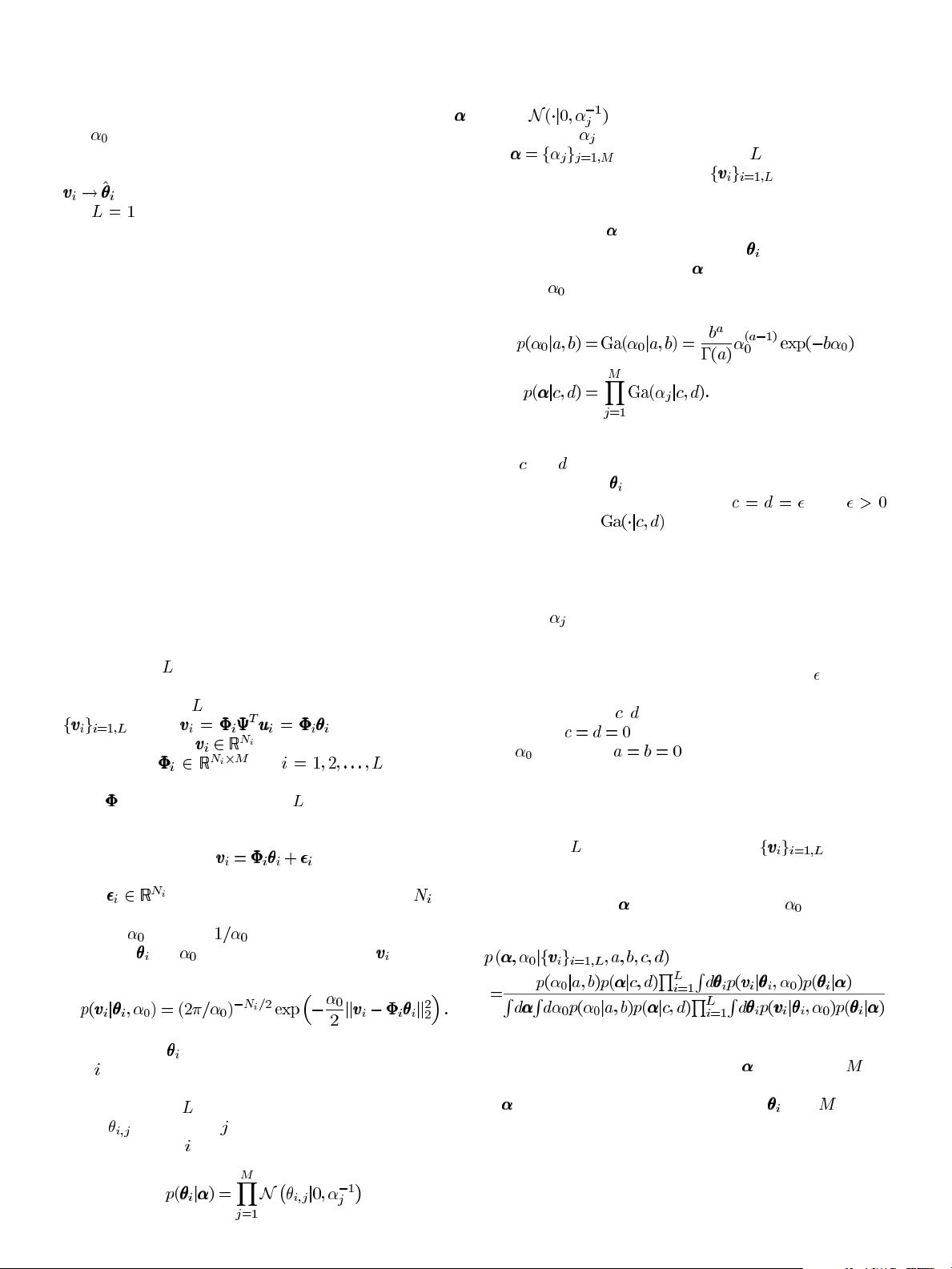

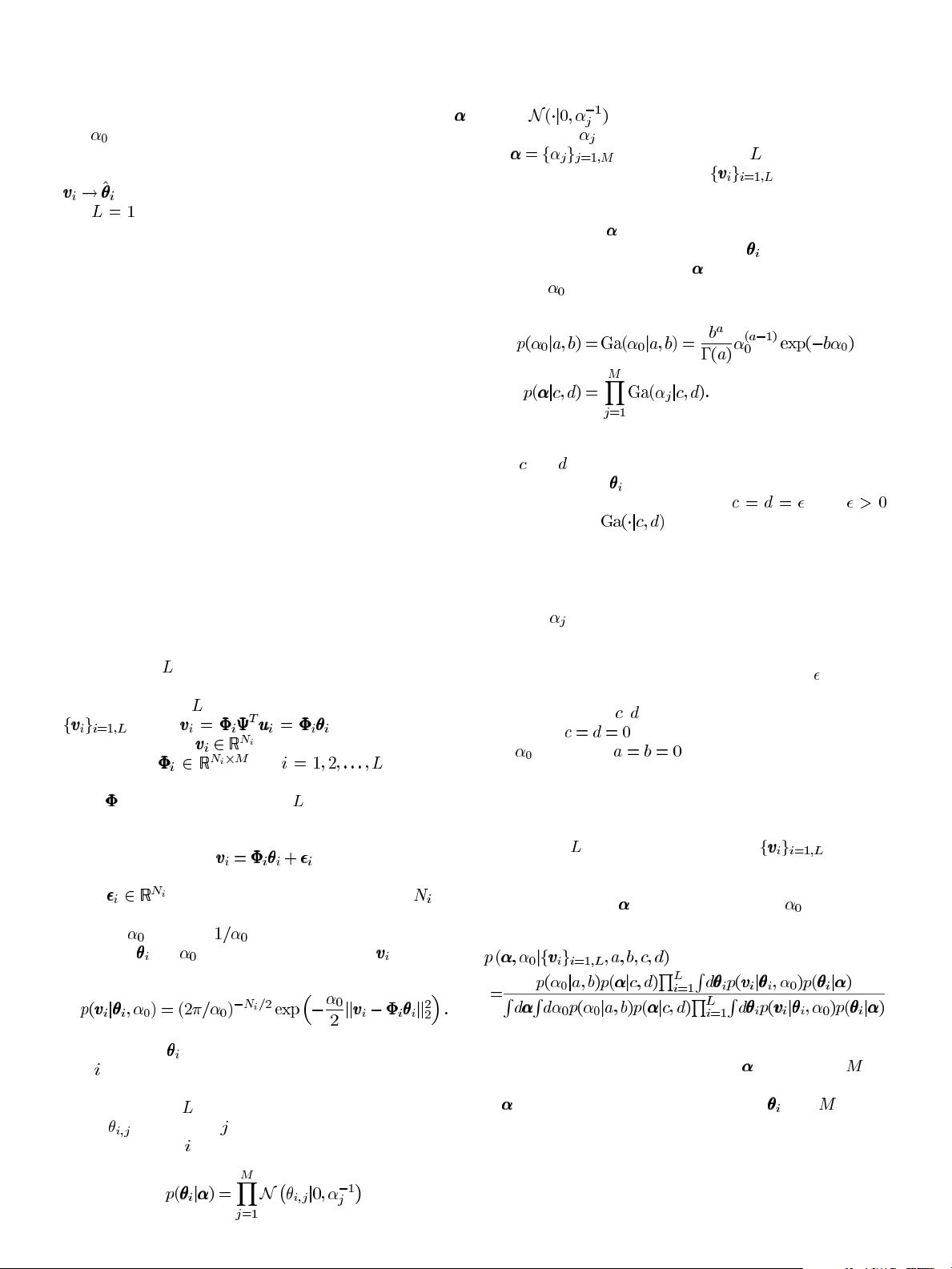

With these parametric definitions, a graphical model representation of multitask CS is illustrated in Fig. 1.
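The generative structure in Fig. 1 can be sketched by ancestral sampling from (2) and (4). Because the default priors $a = b = c = d = 0$ are improper and cannot be sampled, this minimal sketch fixes $\boldsymbol{\alpha}$ and $\alpha_0$ by hand. The function `sample_multitask_cs`, the Gaussian projection matrices scaled by $1/\sqrt{N_i}$, and all numeric values are hypothetical. Setting $\alpha_j = \infty$ forces coefficient $j$ to be exactly zero in every task, an extreme case of the shared shrinkage described above.

```python
import numpy as np


def sample_multitask_cs(alpha, alpha0, n_meas, rng):
    """Draw L related CS tasks from (2) and (4) for fixed hyperparameters.

    alpha  : (M,) shared precisions alpha_j (np.inf -> coefficient exactly 0)
    alpha0 : noise precision (variance 1/alpha0)
    n_meas : list of N_i, the number of measurements for each task
    Returns a list of (Phi_i, theta_i, v_i) triples.
    """
    M = alpha.shape[0]
    tasks = []
    for n_i in n_meas:
        theta_i = rng.normal(scale=alpha ** -0.5)           # (4): same prior for every task
        Phi_i = rng.normal(size=(n_i, M)) / np.sqrt(n_i)    # task-specific random projection
        eps_i = rng.normal(scale=alpha0 ** -0.5, size=n_i)  # i.i.d. noise, variance 1/alpha0
        v_i = Phi_i @ theta_i + eps_i                       # (2)
        tasks.append((Phi_i, theta_i, v_i))
    return tasks


rng = np.random.default_rng(2)
# Hypothetical: three active coefficients, the remaining five switched off in all tasks.
alpha = np.array([1.0, 1.0, 4.0] + [np.inf] * 5)
tasks = sample_multitask_cs(alpha, alpha0=1e4, n_meas=[5, 6, 4], rng=rng)
for Phi_i, theta_i, v_i in tasks:
    print(Phi_i.shape, np.count_nonzero(theta_i), v_i.shape)
```

Each task gets its own $\boldsymbol{\Phi}_i$ and $N_i$, while the sparsity pattern is shared through $\boldsymbol{\alpha}$.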

Given the $L$ sets of CS measurements from the (assumed) statistically related sources, one may in principle apply Bayes' rule to infer a posterior density function on the hyperparameters $\boldsymbol{\alpha}$ and the noise precision $\alpha_0$:

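$$p(\boldsymbol{\alpha}, \alpha_0 \mid \{\mathbf{v}_i\}_{i=1}^{L}) = \frac{p(\boldsymbol{\alpha} \mid a, b)\, p(\alpha_0 \mid c, d) \prod_{i=1}^{L} \int p(\mathbf{v}_i \mid \boldsymbol{\theta}_i, \alpha_0)\, p(\boldsymbol{\theta}_i \mid \boldsymbol{\alpha})\, d\boldsymbol{\theta}_i}{\int\!\!\int p(\boldsymbol{\alpha} \mid a, b)\, p(\alpha_0 \mid c, d) \prod_{i=1}^{L} \int p(\mathbf{v}_i \mid \boldsymbol{\theta}_i, \alpha_0)\, p(\boldsymbol{\theta}_i \mid \boldsymbol{\alpha})\, d\boldsymbol{\theta}_i \, d\boldsymbol{\alpha}\, d\alpha_0} \tag{7}$$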

where the integral in (7) with respect to $\boldsymbol{\alpha}$ is actually an $M$-dimensional integral, with each dimension linked to one component of $\boldsymbol{\alpha}$; similarly, each integral with respect to $\boldsymbol{\theta}_i$ is an $M$-dimensional integral over all wavelet-coefficient weights. To avoid the

$^2$While the sparsity analysis provided here largely follows that of the RVM [19], which is intuitive and conceptual, a more recent and rigorous analysis of sparse Bayesian learning and its superior performance in sparse representation can be found in [37], [38]. More relevantly, it is the log-determinant term of the likelihood (13) that produces sparsity.