Comparative study of performance of parallel Alpha

Beta Pruning for different architectures

Shubhendra Pal Singhal

Department of Computer Science and Engineering

National Institute of Technology, Tiruchirappalli

shubhendrapalsinghal@gmail.com

M. Sridevi

Department of Computer Science and Engineering

National Institute of Technology, Tiruchirappalli

msridevi@nitt.edu

Abstract—Optimization of searching the best possible action

depending on various states like state of environment, system

goal etc. has been a major area of study in computer systems.

In any search algorithm, searching best possible solution from

the pool of every possibility known can lead to the construction

of the whole state search space popularly called as minimax

algorithm. This may lead to a impractical time complexities

which may not be suitable for real time searching operations.

One of the practical solution for the reduction in computational

time is Alpha Beta pruning. Instead of searching for the whole

state space, we prune the unnecessary branches, which helps

reduce the time by significant amount. This paper focuses on

the various possible implementations of the Alpha Beta pruning

algorithms and gives an insight of what algorithm can be used

for parallelism. Various studies have been conducted on how

to make Alpha Beta pruning faster. Parallelizing Alpha Beta

pruning for the GPUs specific architectures like mesh(CUDA)

etc. or shared memory model(OpenMP) helps in the reduction

of the computational time. This paper studies the comparison

between sequential and different parallel forms of Alpha Beta

pruning and their respective efficiency for the chess game as an

application.

Index Terms—Parallel algorithms, Minimax, Alpha Beta prun-

ing, CUDA, OpenMP, Mesh architecture, Shared memory model

I. INTRODUCTION

Playing a game strategically requires an individual to fore-

see all kinds of winning possibilities. The grading policy

applied to game tree is generally +1 for winning and -1

for losing which ultimately helps the agent decide the next

move. This may require the construction of whole state space

implying that every possibility or action needs to be considered

and whatever suits the best or takes the agent close to its goal,

should be opted. Now, this is the general brute force method

which might work practically for simpler and smaller games

like Tic-Tac-toe, but the complex games like checkers or chess

on 8*8 board, has a gigantic state space and searching using

the brute force method in such a huge space is impractical.

This gives us the motivation to study a better and feasible

method called Alpha Beta pruning. The cases which turns

out to be futile at the start of the search are rejected, thus

reducing the state space for every move by significant amount.

Furthermore, the efficiency of the Alpha Beta pruning can be

further improved, to suit feasibility of running AI applications

[1] consisting of accrued state space. Parallelism turns out

to be one of the factors which can be used to improve

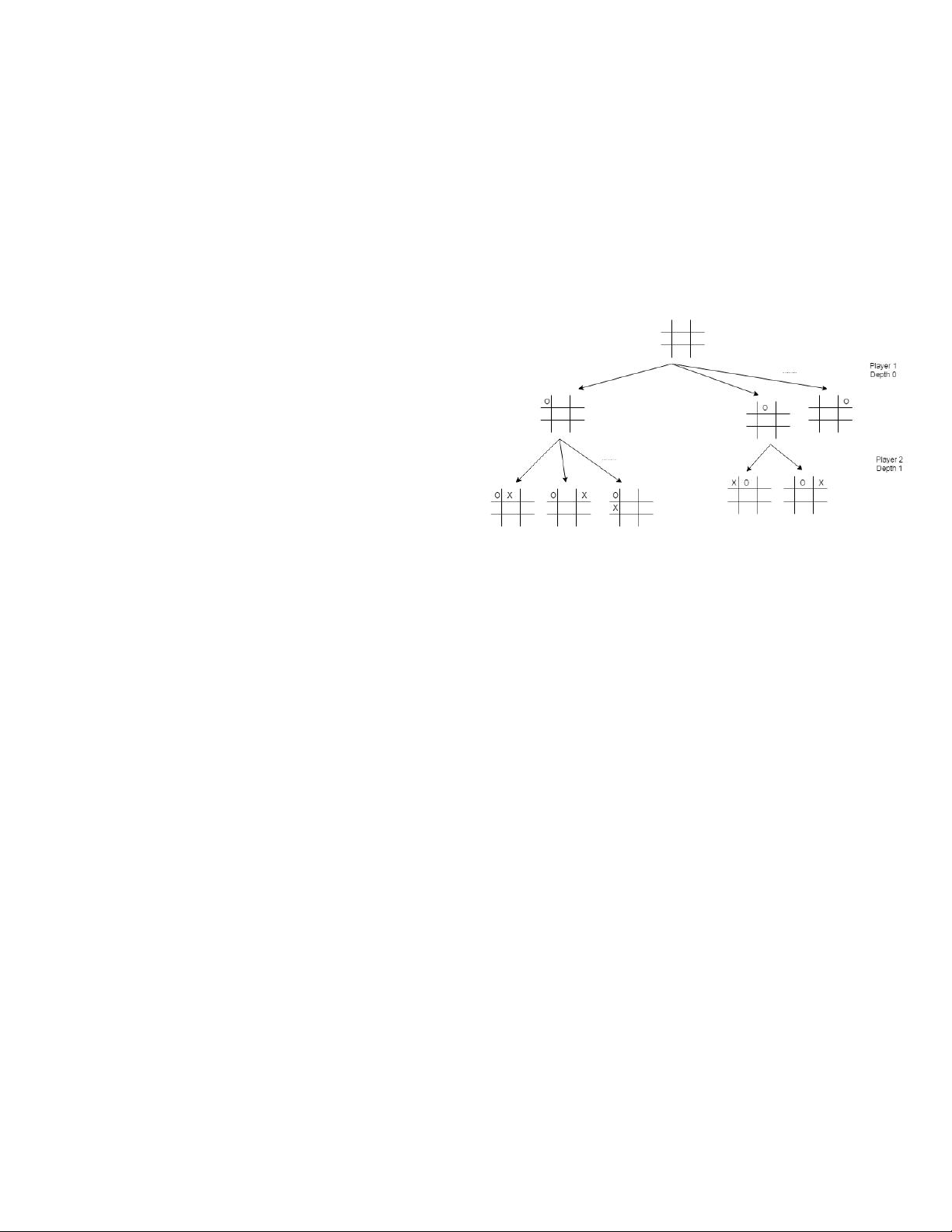

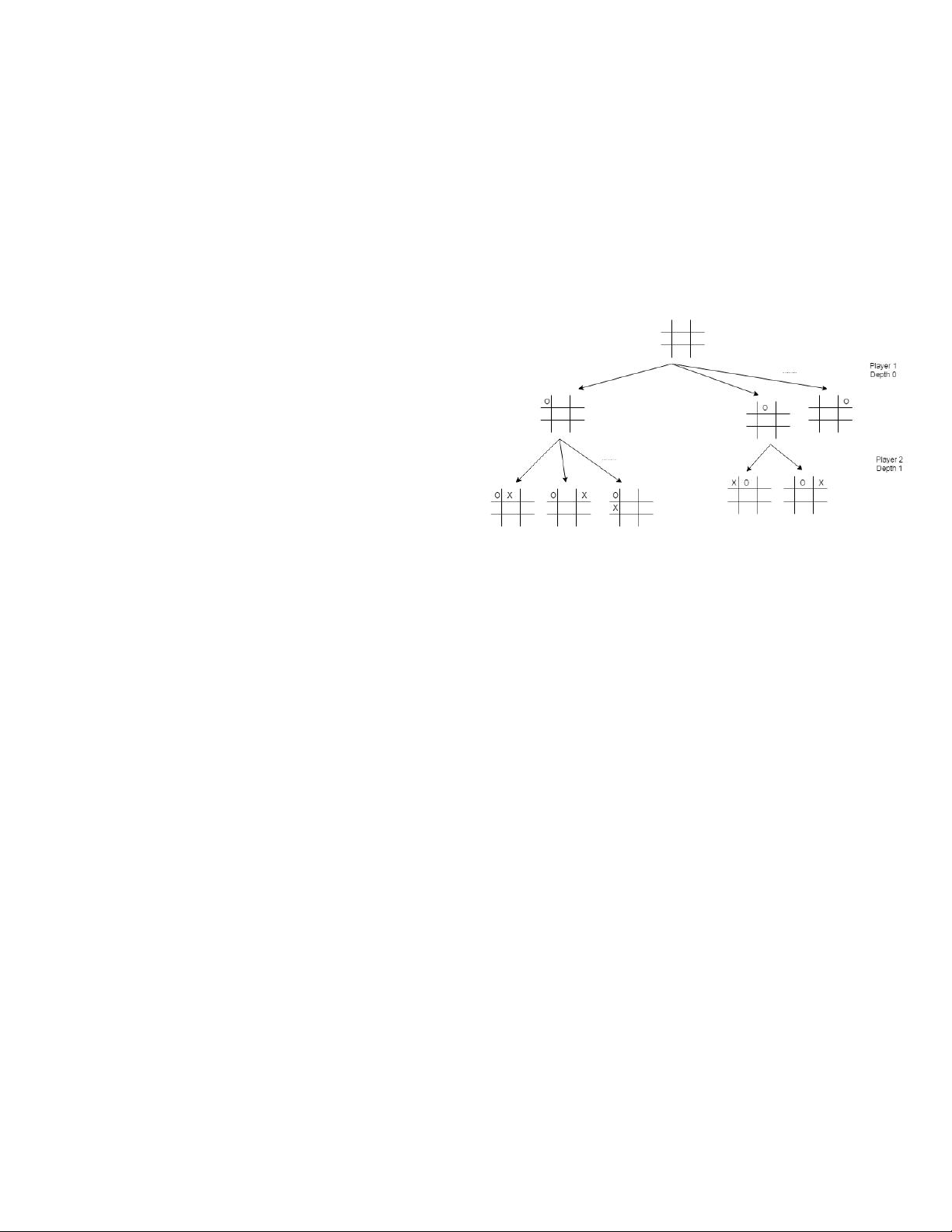

Fig. 1: Game tree for Tic-Tac-toe

the performance. This paper compares the performances and

speedup obtained from various implementations of the parallel

forms of Alpha Beta pruning on different architectures.

The earlier the branches are pruned, the better is the effi-

ciency of Alpha Beta pruning. Different architectures prune

the branches at different time stamps, thus differing in the

computation time.

A. Minimax Algorithm

Brute force search in the state space is the minimax

algorithm. Minimax is a decision-making algorithm [2]. The

main aim of the algorithm is to find the next best move as

shown in Fig1 for the game Tic-Tac-Toe [3] (application of

minimax) .

In the applications of Minimax algorithm (involving two

players), first player is maximizer, and the second player is

the minimizer. The evaluation score is assigned to the game

board, where the maximizer and minimizer aims to get the

highest score, and the lowest score possible respectively [2].

The implementation of the same in shown in the following

algorithm 1 [4].

If every child branch is allocated to one processor and

run the same algorithm in parallel for every child branch,

then the parallel minimax is formed. The parent child will

collect the answer from all the child branches and further

arXiv:1908.11660v2 [cs.DC] 29 Oct 2019