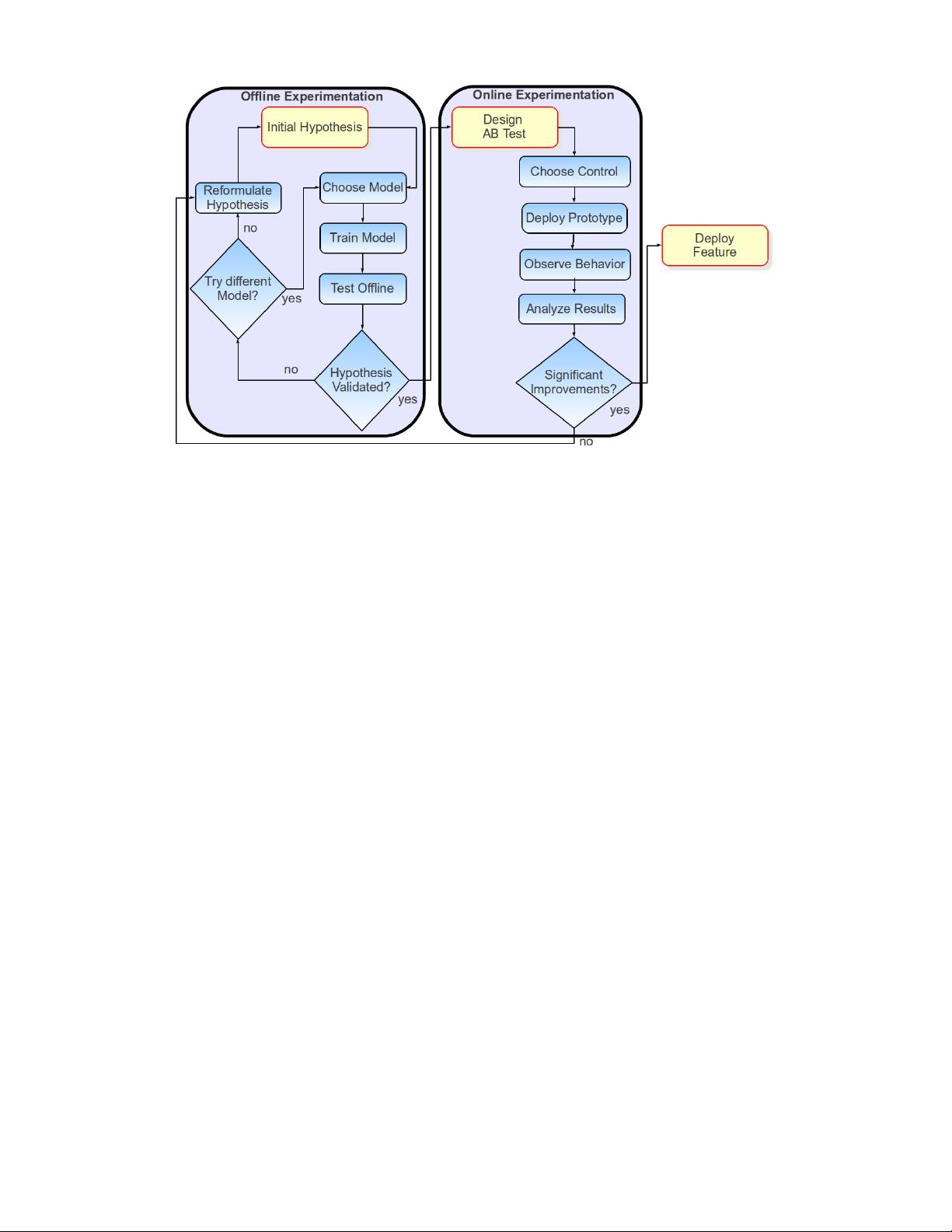

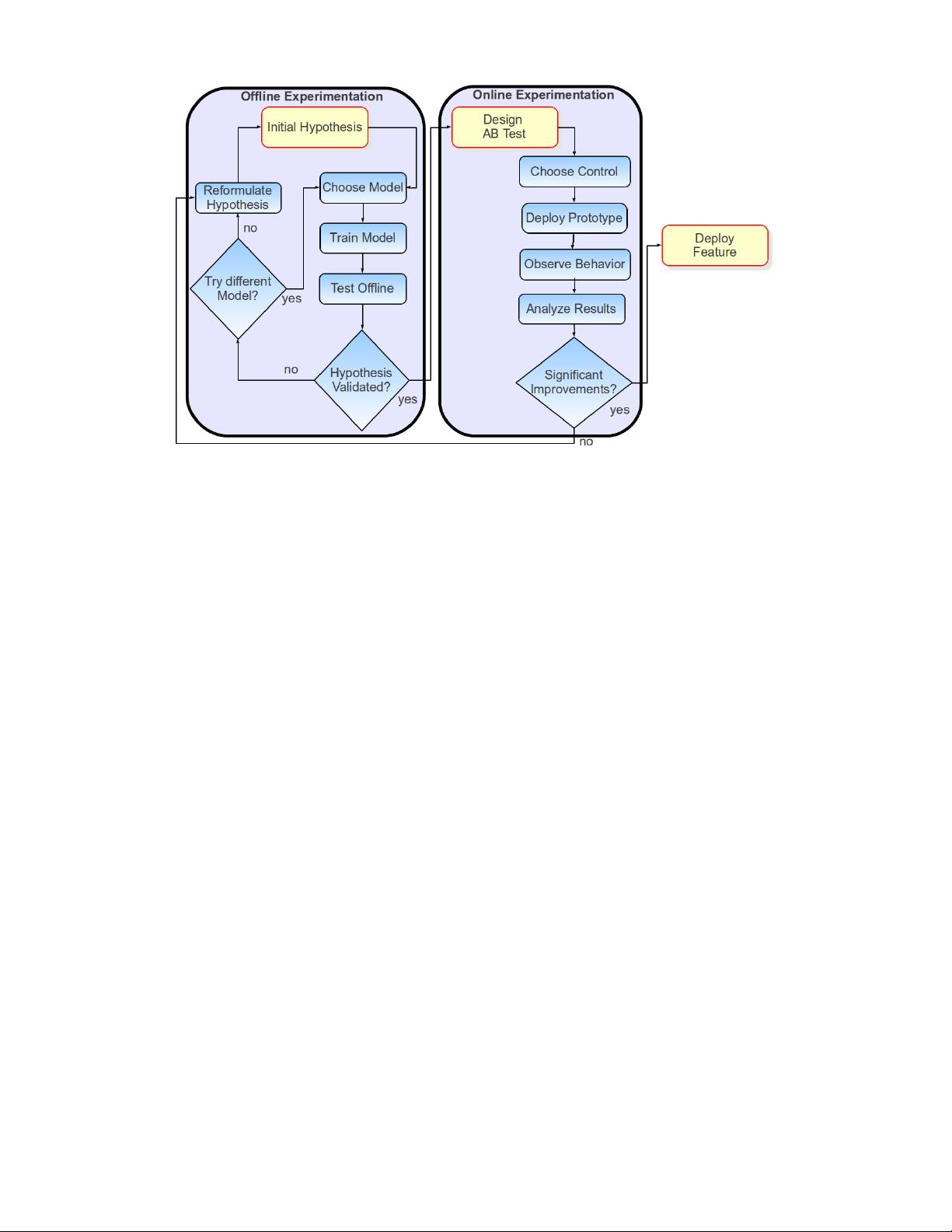

Figure 1: Following an iterative and data-driven offline-online process for innovating in personalization

solution was accomplished by combining many independent models also highlighted the power of using ensembles. At Netflix, we evaluated some of the new methods included in the final solution. The additional accuracy gains that we measured did not seem to justify the engineering effort needed to bring them into a production environment. Also, our focus on improving Netflix personalization had by then shifted from pure rating prediction to the next level. In the next section, I will explain the different methods and components that make up a complete personalization approach such as the one used by Netflix.

3. NETFLIX PERSONALIZATION: BEYOND RATING PREDICTION

Netflix has discovered through the years that there is tremendous value in incorporating recommendations to personalize as much of the experience as possible. This realization pushed us to propose the Netflix Prize described in the previous section. In this section, we will go over the main components of Netflix personalization. But first, let us take a look at how we manage innovation in this space.

3.1 Consumer Data Science

The abundance of source data, measurements, and associated experiments allows Netflix not only to improve our personalization algorithms but also to operate as a data-driven organization. We have embedded this approach into our culture since the company was founded, and we have come to call it Consumer (Data) Science. Broadly speaking, the main goal of our Consumer Science approach is to innovate for members effectively. We strive for an innovation process that allows us to evaluate ideas rapidly, inexpensively, and objectively. And once we test something, we want to understand why it failed or succeeded. This lets us focus on the central goal of improving our service for our members.

So, how does this work in practice? It is a slight variation on the traditional scientific process called A/B testing (or bucket testing):

1. Start with a hypothesis: Algorithm/feature/design X will increase member engagement with our service and ultimately member retention.
2. Design a test: Develop a solution or prototype. Think about issues such as dependent and independent variables, control, and significance.
3. Execute the test: Assign users to the different buckets and let them respond to the different experiences.
4. Let data speak for itself: Analyze significant changes on primary metrics and try to explain them through variations in the secondary metrics.
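As an illustration of step 3, the following is a minimal sketch of one common way to assign users to buckets: hashing the member identifier together with the test name. This is not a description of the actual Netflix allocation system. The function name `assign_cell`, the test name, and the member identifiers are hypothetical and exist only for this example.

```python
import hashlib


def assign_cell(member_id: str, test_name: str, num_cells: int) -> int:
    """Map a member to one of num_cells buckets, stably and roughly uniformly.

    Hypothetical helper for illustration; not a documented Netflix API.
    """
    if num_cells < 2:
        raise ValueError("a test needs at least a control and one treatment cell")
    # Salting the hash with the test name keeps a member's assignment in one
    # test independent of their assignment in any other test.
    key = f"{test_name}:{member_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return int(digest, 16) % num_cells


# Made-up member ids and test name; cell 0 plays the role of control here.
cells = [assign_cell(f"member-{i}", "row-ordering-v2", 4) for i in range(10_000)]
counts = [cells.count(c) for c in range(4)]
```

Because the assignment depends only on the member and the test, a returning member always sees the same experience, and no allocation table has to be stored.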

When we execute A/B tests, we track many different metrics. But we ultimately trust member engagement (e.g., viewing hours) and retention. Tests usually have thousands of members and anywhere from 2 to 20 cells exploring variations of a base idea. We typically have scores of A/B tests running in parallel. A/B tests let us try radical ideas or test many approaches at the same time, but the key advantage is that they allow our decisions to be data-driven.
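To make the analysis of a multi-cell test concrete, here is a hedged sketch that compares the retention rate of each treatment cell against the control using a two-proportion z-test. The choice of test, the Bonferroni correction for multiple cells, the function names, and all counts are assumptions made for this example. The text does not say which statistical procedure Netflix uses.

```python
import math


def two_proportion_z_test(retained_a, n_a, retained_b, n_b):
    """Return (z, two-sided p-value) for the difference in retention rates."""
    pooled = (retained_a + retained_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Every member (or no member) was retained in both cells.
        return 0.0, 1.0
    z = (retained_b / n_b - retained_a / n_a) / se
    # Two-sided tail probability of a standard normal variable.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def compare_to_control(control, treatments, alpha=0.05):
    """control and each treatment value are (retained, members) tuples."""
    # Bonferroni correction: with several cells, tighten the per-cell threshold.
    threshold = alpha / len(treatments)
    results = {}
    for name, (retained, n) in treatments.items():
        z, p = two_proportion_z_test(control[0], control[1], retained, n)
        results[name] = {"z": z, "p_value": p, "significant": p < threshold}
    return results


# Made-up counts, for illustration only.
results = compare_to_control(
    control=(8000, 10000),
    treatments={"cell_1": (8010, 10000), "cell_2": (8200, 10000)},
)
```

With these illustrative counts, only the cell whose retention differs from the control by two percentage points clears the corrected threshold. The cell that differs by a tenth of a point does not.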

An interesting follow-up question that we have faced is how to integrate our machine learning approaches into this data-driven A/B test culture at Netflix. We have done this with an offline-online testing process that tries to combine the best of both worlds (see Figure 1). The offline testing cycle is a step where we test and optimize our algorithms prior to performing online A/B testing. To measure model performance offline, we track multiple metrics: from ranking measures such as normalized discounted cumulative gain to classification metrics such as precision and recall. We also