(i.e., the amount of data to be retrieved across racks for data

reconstruction), which plays an important role in improving

the single failure recovery performance with regard to the

scarce cross-rack bandwidth in a CFS. Second, our single

failure recovery design should address general fault tolerance

(e.g., based on RS codes). Finally, we should balance the

amount of cross-rack repair traffic at the rack level (i.e., across

multiple racks) while keeping the total amount of cross-rack

repair traffic minimum, so as to ensure that the single failure

recovery performance is not bottlenecked by a single rack.

To this end, we propose cross-rack-aware recovery (CAR),

a new single failure recovery algorithm that aims to reduce

and balance the amount of cross-rack repair traffic for a

single failure recovery in a CFS that deploys RS codes for

general fault tolerance. CAR has three key techniques. First,

for each stripe, CAR examines the data layout and finds a

recovery solution in which the resulting repair traffic comes

from the minimum number of racks. Second, CAR performs

intra-rack aggregation for the retrieved chunks in each rack

before transmitting them across racks in order to minimize

the amount of cross-rack repair traffic. Third, CAR examines

the per-stripe recovery solutions across multiple stripes, and

constructs a multi-stripe recovery solution that balances the

amount of cross-rack repair traffic across multiple racks.

Our contributions are summarized as follows.

• We reconsider the single failure recovery problem in the

CFS setting, and identify the open issues that are not

addressed by existing studies on single failure recovery.

• We propose CAR, a new cross-rack-aware single failure

recovery algorithm for a CFS setting. CAR is designed

based on RS codes. It reduces the amount of cross-rack

repair traffic for each stripe, and additionally searches for

a multi-stripe recovery solution that balances the amount

of cross-rack repair traffic across racks.

• We implement CAR and conduct extensive testbed ex-

periments based on different CFS settings with up to 20

nodes. We show that CAR can reduce 66.9% of cross-

rack repair traffic and 53.8% of recovery time when

compared to a baseline single failure recovery design that

does not consider the bandwidth diversity property of a

CFS. Also, we show that CAR effectively balances the

amount cross-rack repair traffic across racks.

The rest of this paper proceeds as follows. Section II

presents the background details of erasure coding and reviews

related work on single failure recovery. Section III formulates

and motivates the problem in the CFS setting. Section IV

presents the design of CAR. Section V presents our evalu-

ation results on CAR based on testbed experiments. Finally,

Section VI concludes the paper.

II. BACKGROUND AND RELATED WORK

In this section, we provide the background details on erasure

coding in the context of a CFS. We also present the open issues

to be addressed in this paper.

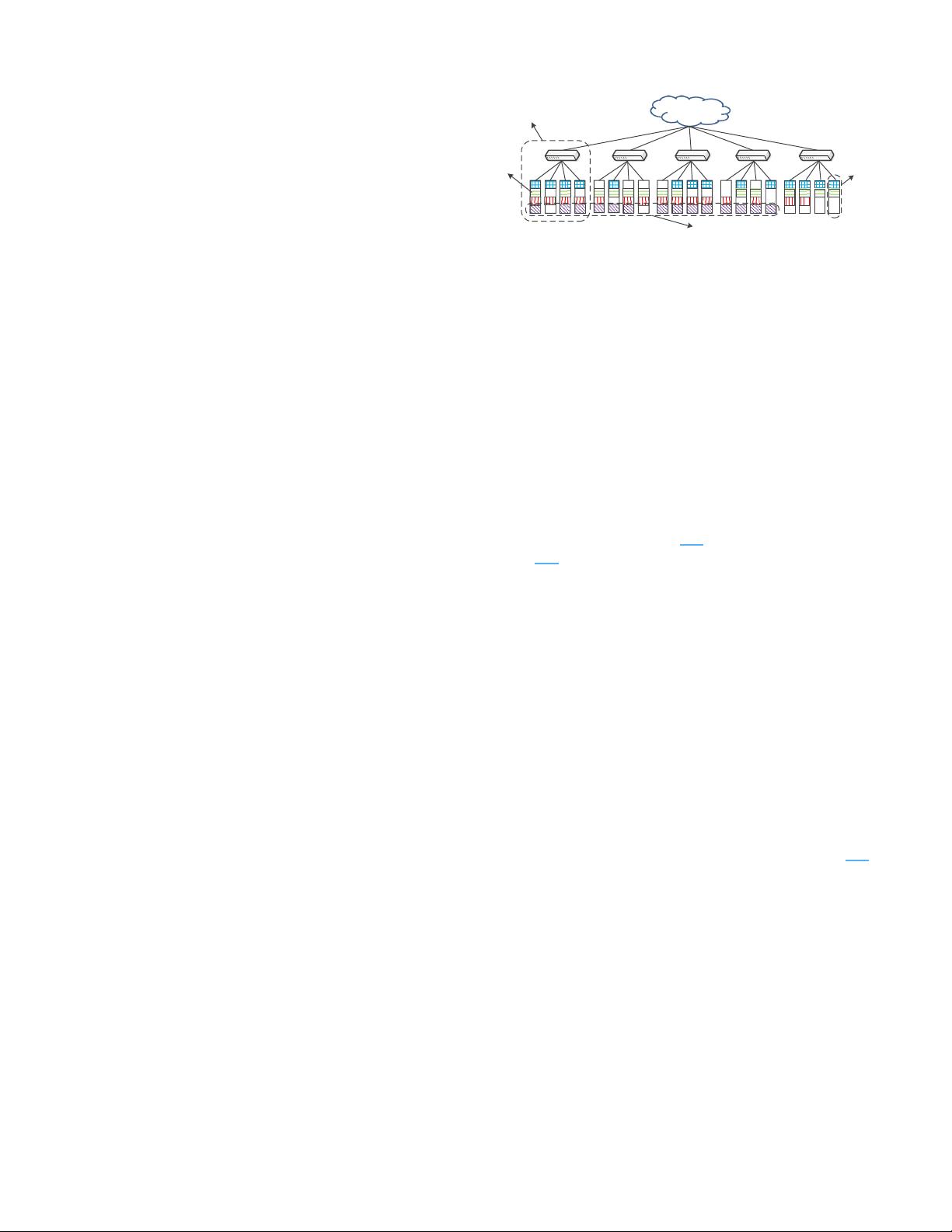

Stripe

Rack

Chunk

Node

Network Core

Fig. 1. Illustration of a CFS architecture that is composed of five racks with

four nodes each. The CFS contains four stripes of 14 chunks encoded by a

(k = 8, m = 6) code, in which the chunks with the same color and fill

pattern belong to the same stripe. Note that the number of chunks in each

node may be different.

A. Basics

This paper considers a special type of distributed storage

system architecture called a clustered file system (CFS), which

arranges storage nodes into racks, such that all nodes within

the same rack are connected by a top-of-rack switch, while

all racks are connected by a network core. Figure 1 illustrates

a CFS composed of five racks with four nodes each (i.e., 20

nodes in total). Some well-known distributed storage systems,

such as Google File System [12], Hadoop Distributed File

System [32], and Windows Azure Storage [4], realize the CFS

architecture.

We use erasure coding to maintain data availability for a

CFS. We consider a popular family of erasure codes that

are: (1) Maximum Distance Separable (MDS) codes, meaning

that fault tolerance is achievable with the minimum storage

redundancy (i.e., the optimal storage efficiency), and (2)

systematic, meaning that the original data is retained after

encoding. Specifically, we construct a (k, m) code (which is

MDS and systematic) with two configurable parameters k and

m. A (k, m) code takes k original (uncoded) data chunks of

the same size as inputs and produces m (coded) parity chunks

that are also of the same size, such that any k out of the

k + m chunks can sufficiently reconstruct all original data

chunks. The k + m chunks collectively form a stripe, and are

distributed over k+m different nodes. Note that the placement

of chunks should also ensure rack-level fault tolerance [18],

such that there are at least k chunks for data reconstruction

in other surviving racks in the presence of rack failures. We

address this issue when we design CAR (see Section IV).

For an erasure-coded CFS that stores a large amount of

data, it contains multiple independent stripes of data/parity

chunks. In this case, each node stores a different number of

chunks that belong to multiple stripes. For example, referring

to the CFS in Figure 1, there are four stripes spanning over 20

nodes, in which the leftmost node stores four chunks, while

the rightmost node stores only two chunks.

B. Erasure Code Constructions

There have been various proposals on erasure code con-

struction in the literature. Practical erasure codes often realize