Robust Loop-Closure Detection with a Learned Illumination Invariant Representation for Robot vSLAM*

Shilang Chen¹, Junjun Wu¹,†, Yanran Wang², Lin Zhou¹, Qinghua Lu¹, Yunzhi Zhang¹

¹ School of Mechatronics Engineering, Foshan University, Foshan 528000, China

² Department of Computer Science, Jinan University, Guangzhou 510632, China

Abstract— Robust loop-closure detection plays a key role in long-term robot visual Simultaneous Localization and Mapping (SLAM) in indoor and outdoor environments, because illumination changes can greatly affect the accuracy of online image matching, and keypoints may fail to match between images taken at the same location in different seasons. In this paper, we propose a robust loop-closure detection method for robot visual SLAM that uses an invariant representation composed of learned features as the image descriptor and adapts to changes in illumination and season. We evaluate our method on real datasets and demonstrate its excellent ability to handle illumination changes.

Index Terms— Visual SLAM, Loop Closure Detection, Visual Place Recognition, Illumination Invariant Feature, Mobile Robot, Convolutional Neural Network

I. INTRODUCTION

Since the advent of bionics and intelligent robot technology, researchers have long hoped that robots would one day observe and understand the world around them through their own eyes and move flexibly in natural environments, achieving human-machine integration. After nearly 30 years of theoretical research and technological accumulation, many exciting results have been achieved on this problem and gradually applied in emerging industries such as robotic vacuum cleaners, self-driving cars, unmanned aerial vehicles, virtual reality, and augmented reality. The rise of these widely followed industries has been underpinned by a basic technology: Simultaneous Localization and Mapping, especially visual SLAM.

In visual SLAM, if the current image is similar to an image encountered earlier, the loop-closure detection algorithm should issue a recognition signal and pass the candidate to the back-end algorithm for verification and subsequent processing. If loop closures are detected correctly, the robot gains the ability to relocalize after tracking is lost, and the cumulative error caused by the visual odometry can be effectively reduced to obtain a globally consistent map.
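The detection step described above can be sketched as a similarity search over previously stored keyframes. This is a minimal illustration, assuming each keyframe has already been summarized by one global descriptor vector; the class `KeyframeDatabase`, the cosine-similarity `threshold`, and the `min_gap` window that excludes the most recent keyframes are hypothetical choices, not the parameters or data structures of the proposed method. Candidates returned here would still need back-end verification.

```python
import math
from typing import List, Sequence, Tuple


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a descriptor to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        return [0.0 for _ in v]
    return [x / norm for x in v]


class KeyframeDatabase:
    """Hypothetical store of global keyframe descriptors (one vector per keyframe)."""

    def __init__(self, threshold: float = 0.9, min_gap: int = 30):
        self.threshold = threshold  # assumed similarity required to report a candidate
        self.min_gap = min_gap      # assumed number of most recent keyframes to skip
        self.descriptors: List[List[float]] = []

    def query_and_add(self, descriptor: Sequence[float]) -> List[Tuple[int, float]]:
        """Return (keyframe index, similarity) candidates, best first, then store the query."""
        q = l2_normalize(descriptor)
        # Skip the min_gap most recent keyframes: temporally adjacent frames are
        # trivially similar and must not be reported as loop closures.
        eligible = max(len(self.descriptors) - self.min_gap, 0)
        candidates = []
        for idx in range(eligible):
            sim = sum(a * b for a, b in zip(q, self.descriptors[idx]))
            if sim >= self.threshold:
                candidates.append((idx, sim))
        candidates.sort(key=lambda c: c[1], reverse=True)
        self.descriptors.append(q)
        return candidates


if __name__ == "__main__":
    db = KeyframeDatabase(threshold=0.9, min_gap=2)
    db.query_and_add([1.0, 0.0, 0.0])  # keyframe 0
    db.query_and_add([0.0, 1.0, 0.0])  # keyframe 1
    db.query_and_add([0.0, 0.0, 1.0])  # keyframe 2
    # A view close to keyframe 0: keyframe 0 is reported as a loop candidate.
    print(db.query_and_add([2.0, 0.1, 0.0]))
```

Normalizing once at insertion time keeps each query to a plain dot product; a real system with many keyframes would typically replace the linear scan with an approximate nearest-neighbor index.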

*This work is supported by the National Natural Science Foundation of China (61603103); the Natural Science Foundation of Guangdong, China (2016A030310293, 2018A030310352); the Science and Technology Program of Guangzhou, China (201707010013); and the Foshan University Graduate Freedom Exploration Fund Project.

† Corresponding author e-mail: jjunwu@fosu.edu.cn.

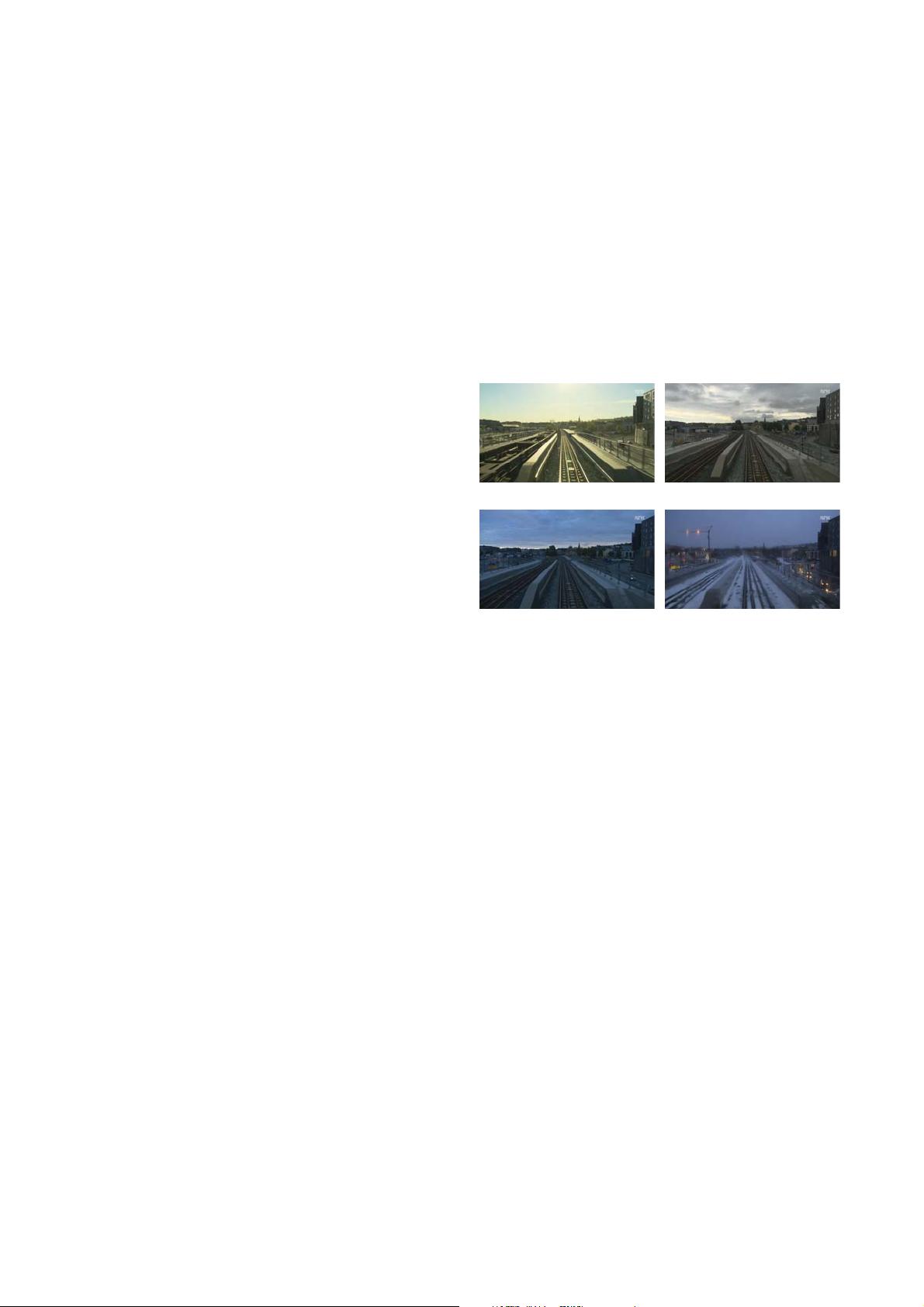

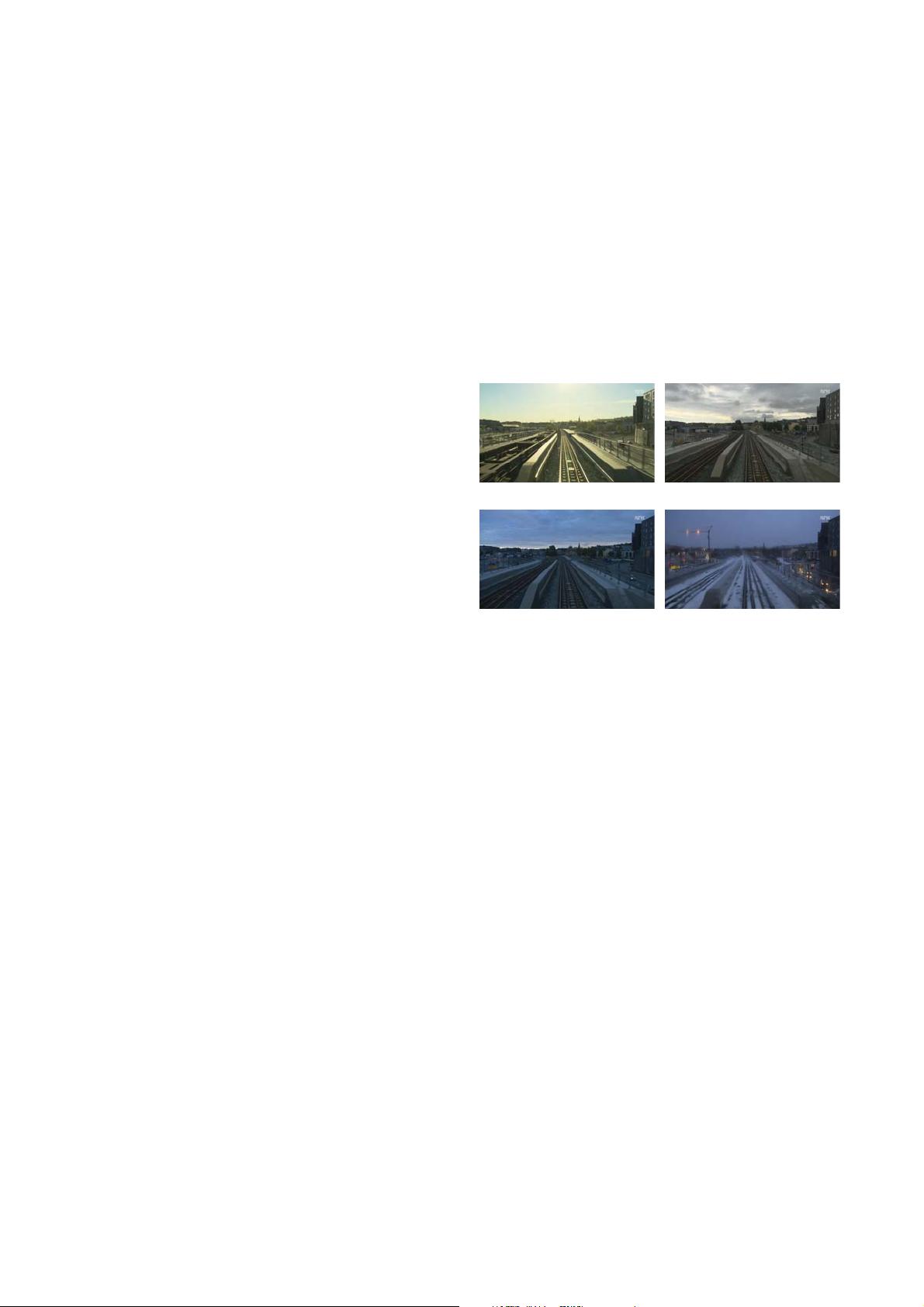

Fig. 1. Four images observed from the same place in (a) spring, (b) summer, (c) fall, and (d) winter.

In visual SLAM, mapping relies on visual features or keyframes to describe the environment, while robot localization is accomplished by matching the current image against the features or keyframes stored in the map [1]. However, mobile robots operate in complex and changeable environments, affected by illumination, time of day, weather, season (e.g., Fig. 1), and the dynamic changes of various objects. Loop-closure detection is one of the most important components of visual SLAM, and it is also the component most susceptible to these conditions. Improving invariance to these conditions, especially to illumination changes, is therefore a necessary condition for mobile robots to work robustly.
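To make the effect of illumination on matching concrete, the sketch below compares two classical patch-matching scores on a synthetic patch and a brighter, higher-contrast copy of it. It uses zero-mean normalized cross-correlation (NCC) only as an illustration of an illumination-normalized comparison; it is not the learned descriptor proposed in this paper, and it models only a global affine intensity change I' = gain * I + offset, not the shadows, weather, or seasonal appearance changes of Fig. 1. The helper names `ssd`, `ncc`, and `relight` are hypothetical.

```python
import math
from typing import List, Sequence


def ssd(p: Sequence[float], q: Sequence[float]) -> float:
    """Sum of squared intensity differences; 0 only for identical patches."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def ncc(p: Sequence[float], q: Sequence[float]) -> float:
    """Zero-mean normalized cross-correlation in [-1, 1]; 1 means the patches
    match up to a positive intensity gain and an additive offset."""
    mp = sum(p) / len(p)
    mq = sum(q) / len(q)
    dp = [a - mp for a in p]
    dq = [b - mq for b in q]
    denom = math.sqrt(sum(a * a for a in dp) * sum(b * b for b in dq))
    if denom == 0.0:
        return 0.0  # a constant patch has no structure to correlate
    return sum(a * b for a, b in zip(dp, dq)) / denom


def relight(patch: Sequence[float], gain: float, offset: float) -> List[float]:
    """Simulate a global illumination change I' = gain * I + offset."""
    return [gain * x + offset for x in patch]


if __name__ == "__main__":
    patch = [10.0, 40.0, 80.0, 20.0, 60.0, 90.0, 30.0, 50.0, 70.0]  # flattened 3x3
    brighter = relight(patch, gain=1.5, offset=40.0)
    print("SSD:", ssd(patch, brighter))  # large, although the scene is unchanged
    print("NCC:", ncc(patch, brighter))  # close to 1.0: the change is normalized away
```

Raw intensity differences treat the relit patch as a poor match, whereas the normalized score does not; real illumination and seasonal changes are not globally affine, which is why hand-crafted normalization alone is insufficient and learned invariant features are attractive.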

In this paper, we propose a novel learning-based method for loop-closure detection in visual SLAM. The method does not use a visual vocabulary (such as bag-of-words); instead, it uses a selective subset of Convolutional Neural Network (CNN) features directly for image description. It also offers good illumination invariance and viewpoint invariance. The main steps are creating a CNN database, detecting image features with the Learned Invariant Feature Transform (LIFT) [2], generating image descriptors, and detecting loop-closure candidates. In particular, the contributions of this paper are:

(1) A novel illumination-invariant image descriptor that describes the keyframes of the environment.

(2) An effective loop-closure detection method without

2019 IEEE 4th International Conference on Advance Robotics and Mechatronics (ICARM)

978-1-7281-0064-7/19/$31.00 ©2019 IEEE

342