172 IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 28, NO. 1, JANUARY 2019

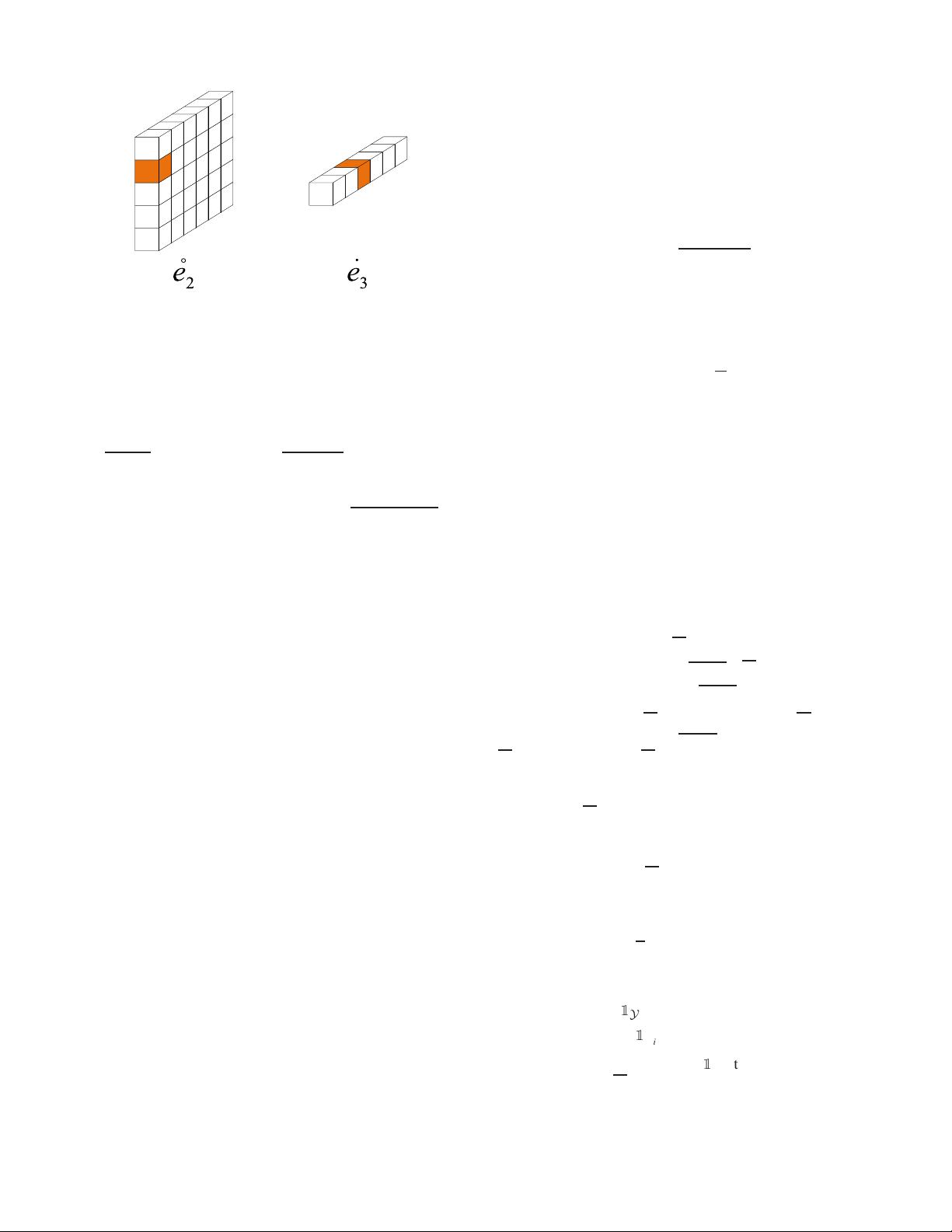

Fig. 2. The column basis

˚

e

2

and the tube basis

˙

e

3

. The marked entry equals

to 1 while others equal to 0.

f

(

·

)

is defined by

K

(

f

(

X

)

f

(

Y

))

=

1

n

1

n

2

n

3

i, j,k

f

X

i, j,k

ln

f

X

i, j,k

f

Y

i, j,k

+

1 − f

X

i, j,k

ln

1 − f

X

i, j,k

1 − f

Y

i, j,k

III. T

HE PROPOSED TENSOR COMPLETION METHOD

In this section, we will formally introduce our algorithm

to implement the one-bit tensor completion problem with

general sampling distribution. Figure 3 illustrates the tensor

completion process implemented by the proposed algorithm.

A. Observation Model

We study the one-bit tensor completion issue in general

random sampling setting. Instead of observing the corrupted

elements of desired tensor directly as [24] and [25], we only

observe a subset of elements of a tensor Y, which has the

following relationship with the desired tensor X :

Y

i, j,k

=

1, if X

i, j,k

+ Z

i, j,k

≥ 0

−1, if X

i, j,k

+ Z

i, j,k

< 0

(

i, j, k

)

∈ , (1)

where Z is noise tensor with i.i.d. entries. And

={

(

i

1

, j

1

, k

1

)

, ···,

(

i

n

, j

n

, k

n

)

}⊆[n

1

]×[n

2

]×[n

3

]

is an index set of i.i.d. random variables with distribution

={π

l,m,s

} on [n

1

]×[n

2

]×[n

3

], which satisfies

P{

(

i

t

, j

t

, k

t

)

=

(

l, m, s

)

}=π

l,m,s

(2)

for all t and

(

l, m, s

)

and

(l,m,s)∈[n

1

]×[n

2

]×[n

3

]

π

l,m,s

= 1.

In addition, the noise Z = 0 makes the one-bit tensor

completion task be a well posed problem, and has a “dithering”

effect [14], [15].

As discussed in [14] and [15], the observation model (1) is

equal to

Y

i, j,k

=

1, with probability f

X

i, j,k

−1, probability 1− f

X

i, j,k

(

i, j, k

)

∈ (3)

for a differentiable function f : R →[0, 1], which can

be treated as a distribution function or a link function in

regression model [26].

Similar to [14] and [15], two natural choices for f ,or

equivalently, for the distribution of {Z

i, j,k

} are as following:

• (Logistic regression/Logistic noise). The Logistic regres-

sion model is taken by (3) with

f

(

x

)

=

exp

(

x

)

1 + exp

(

x

)

,

which coincides with (1) and shows that Z

i, j,k

obeys the

standard logistic distribution.

• (Probit regression/Gaussian noise). The probit regression

model is taken by (3) with

f

(

x

)

=

x

σ

,

which coincides with (1) and shows that Z

i, j,k

obeys the

mean zero Gaussian distribution with variance σ

2

.Here

describes the cumulative distribution function of standard

Gaussian distribution.

B. Optimization Model

We now introduce our optimization model to estimate the

underlying tensor X . Assuming that we observe n independent

identically distributed entries {Y

i

t

, j

t

,k

t

}

n

t=1

of Y and follow the

model (3), it is similar to the matrix completion problem,

which encourages us to deem that the underlying tensor

possesses the ”low rank structure”. Notice that

X

TNN

=X

∗

≤ n

3

√

rn

1

n

2

X

∞

≤ γ n

3

√

rn

1

n

2

(4)

holds, if we assume

X

∞

≤ γ and rank

X

≤ r.It

yields that X

TNN

≤ γ n

3

√

rn

1

n

2

is a loose of conditions

X

∞

≤ γ and rank

X

≤ r. Therefore, we combine the

negative log-likelihood function with the tensor nuclear norm

regularization term to reveal the underlying tensor X under

the constrain

X

∞

≤ γ . The proposed model is:

min

X

ϕ

(

X

)

+ λ X

TNN

s.t. X

∞

≤ γ, (5)

where

ϕ

(

X

)

=−

1

n

n

t=1

L

Y

(

X

(

i

t

, j

t

, k

t

))

(6)

and

L

Y

(

X

(

i

t

, j

t

, k

t

))

=

½

Y

i

t

, j

t

,k

t

=1

ln

f

X

i

t

, j

t

,k

t

+

½

Y

i

t

, j

t

,k

t

=−1

ln

1 − f

X

i

t

, j

t

,k

t

. (7)

λ is the regularization parameter,

½

is the indicator function,

and the condition

X

∞

≤ γ compels the underlying tensor

less “spiky” [14], [15]. From the representation of ϕ

(

X

)

and

the definition of 4, we can see that our proposed model can be

extended to higher order tensors [27], [28]. Using the block-

diagonal of the tensor in the Fourier domain which mentioned