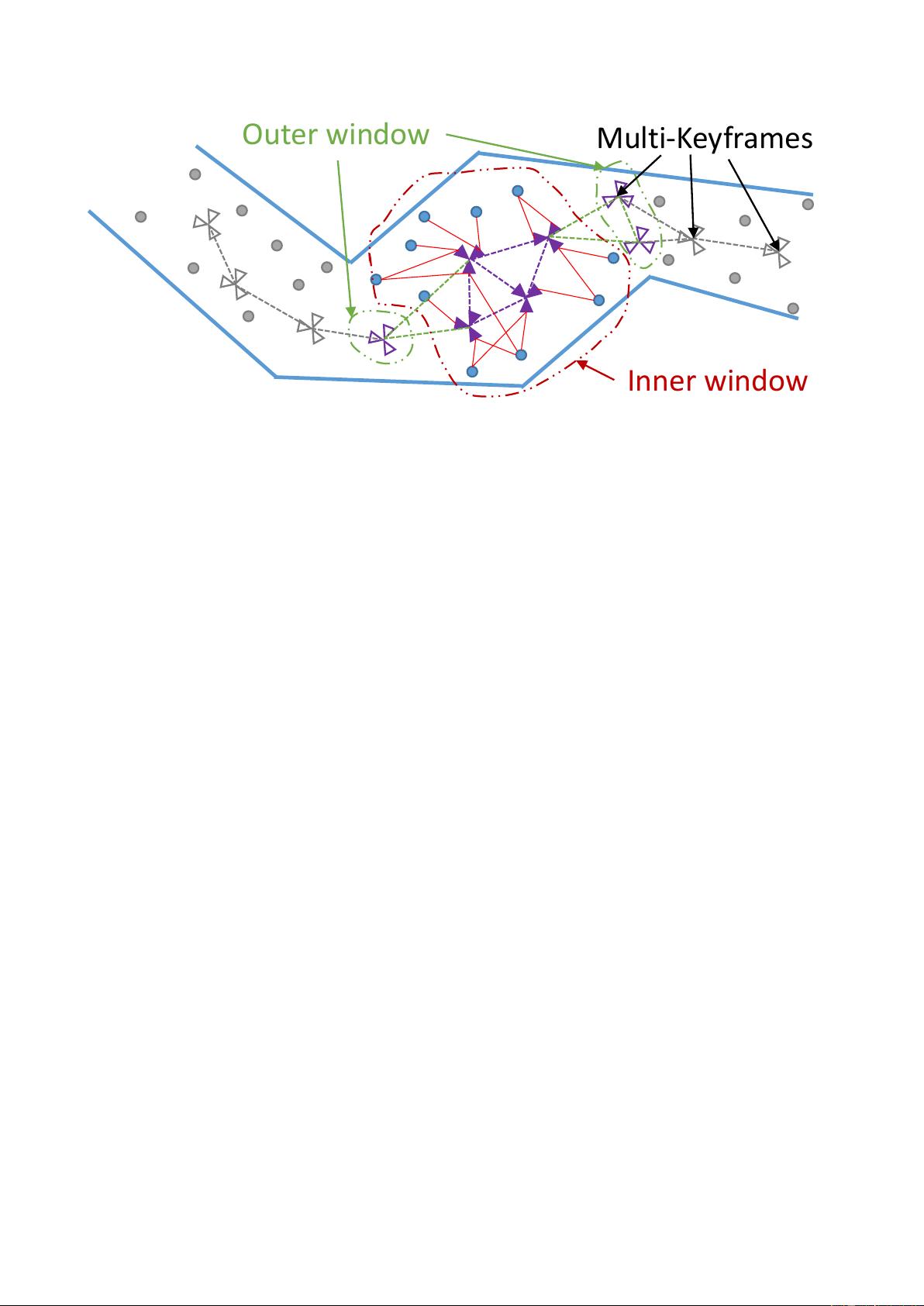

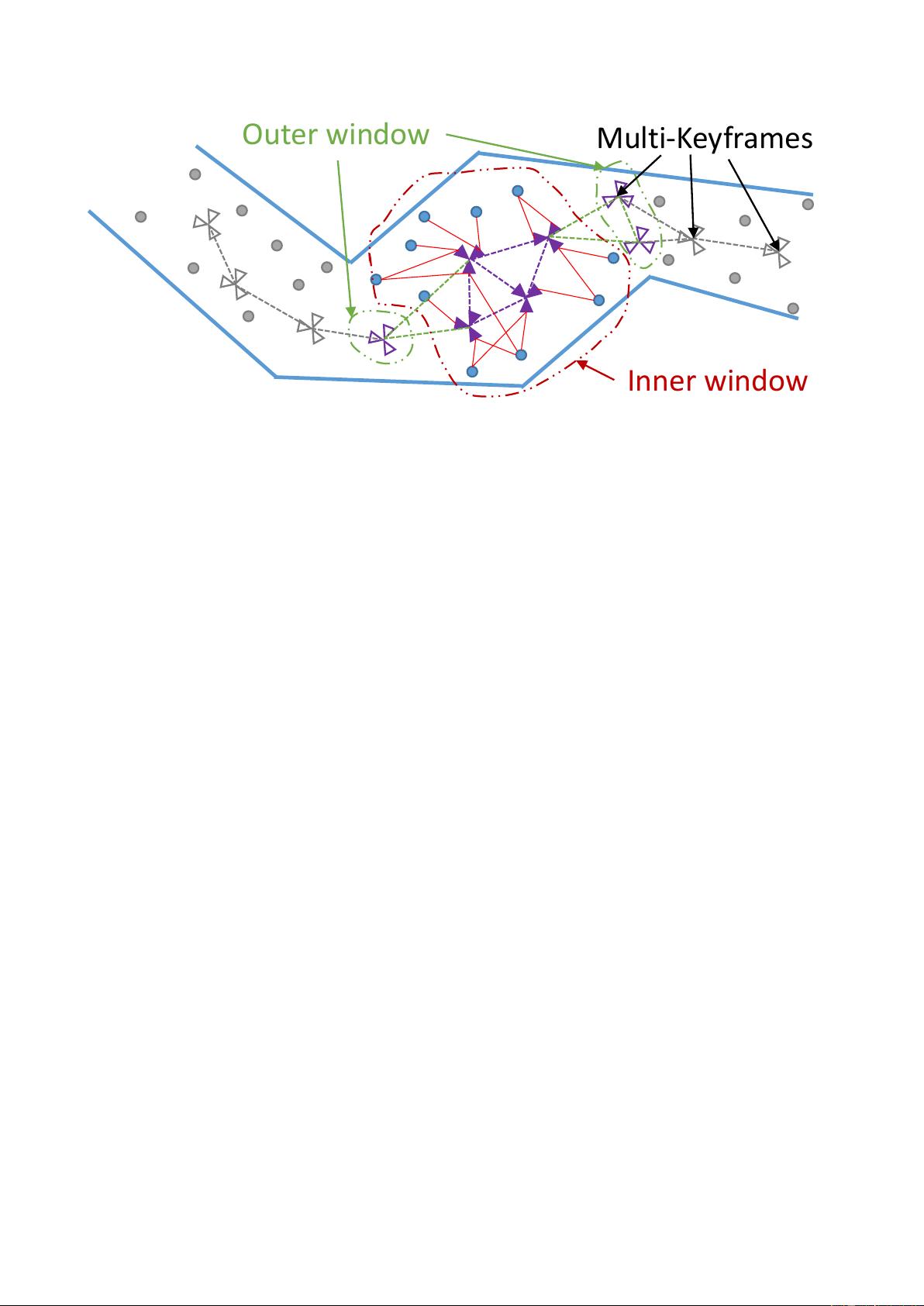

Figure 2. Principle of double window SLAM. Figure labels: inner window, outer window, Multi-Keyframes.

map quality. We will build our multi-fisheye camera SLAM upon

ORB-SLAM and explain all changes in the next sections.

The methods described so far either use a single camera (Monocular

SLAM) or a stereo configuration (Stereo SLAM). CoSLAM

(Collaborative SLAM) (Zou and Tan, 2013) aims at combining

the maps built by multiple cameras moving around in dynamic

environments independently. The authors introduce inter-camera

tracking and mapping as well as methods to distinguish static background

points from dynamically moving foreground objects. In

(Heng et al., 2014), four cameras are rigidly coupled on a MAV.

The cameras are paired into two stereo configurations and

self-calibrated to an IMU online. The mapping pipeline is similar

to ORB-SLAM and also uses ORB descriptors for map point

assignment. Additionally, the authors propose a novel 3-Point

algorithm to estimate the relative motion of the MAV including

IMU measurements. Most recent work on multi-camera SLAM is

dubbed MC-PTAM (Multi-Camera PTAM) (Harmat et al., 2012,

Harmat et al., 2015) and is built upon PTAM. In a first step (Harmat

et al., 2012), the authors changed the perspective camera

model to the generic polynomial model that is also used in this

paper. This induces further changes, e.g. to the epipolar

correspondence search, which now has to be performed along great circles

on the unit sphere instead of using point-to-line distances in the

plane. In addition, significant changes concerning the tracking

and mapping pipeline had to be made to include multiple rigidly

coupled cameras. Keyframes are extended to MKFs as they now

hold more than one camera. Like PTAM, their system uses patches

as image features and warps them prior to matching. Still, the

system lacks a mapping pipeline that is capable of operating in

large-scale environments. Subsequent work (Harmat et al., 2015)

improved upon (Harmat et al., 2012) and is partly similar to the

SLAM system developed in this thesis in that it uses the same

camera model and g2o to perform graph optimization. On top,

the authors integrated an automated calibration pipeline to estimate

the relative orientation of each camera in the MCS. Still, the

system uses the relatively simple mapping back-end of PTAM instead

of the double-window optimization that is used in this thesis

and has proven to be superior. In addition, image patches are

used as features, which makes place recognition, loop closing, and the

exploration and storage of large environments difficult.
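
To illustrate the great-circle epipolar search mentioned above for generic camera models, the following minimal Python sketch measures how far a bearing vector lies from the epipolar great circle on the unit sphere. It is not taken from MC-PTAM or from this work; the function names, the pose convention x2 = R x1 + t, and the choice of an angular residual are assumptions made for illustration only.

```python
import math


def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    """Scale a 3-vector to unit length (a bearing vector on the unit sphere)."""
    norm = math.sqrt(dot(a, a))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(x / norm for x in a)


def mat_vec(R, v):
    """Multiply a 3x3 matrix (given as three rows) with a 3-vector."""
    return tuple(dot(row, v) for row in R)


def great_circle_distance(v1, v2, R, t):
    """Angle in radians between bearing v2 (camera 2) and the epipolar
    great circle induced by bearing v1 (camera 1).

    Assumed convention: a point x1 in camera 1 maps to x2 = R * x1 + t.
    The epipolar plane in camera 2 is spanned by t and R * v1; its
    intersection with the unit sphere is the great circle.
    """
    # Raises ValueError in the degenerate case where t is parallel to R * v1.
    normal = normalize(cross(t, mat_vec(R, v1)))
    sine = abs(dot(normal, normalize(v2)))
    return math.asin(min(1.0, sine))


if __name__ == "__main__":
    # Hypothetical setup: identity rotation, baseline along the x-axis.
    R = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    t = (1.0, 0.0, 0.0)
    X1 = (0.5, 0.2, 2.0)                         # 3D point in camera 1
    X2 = tuple(a + b for a, b in zip(X1, t))     # same point in camera 2
    # A correct correspondence should give a residual close to zero.
    print(great_circle_distance(normalize(X1), normalize(X2), R, t))
```

In the perspective case the analogous residual would be the pixel distance between a point and its epipolar line; the angular residual remains defined for bearing vectors from a fisheye camera, including viewing angles beyond 90 degrees that cannot be projected onto an image plane.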

Thus far, all approaches were based on local point image features.

Hence, the reconstructed environment will stay relatively sparse

even if hundreds of features are extracted in each keyframe. This

makes it difficult for autonomous vehicles or robots that explore

their surroundings to automatically analyze and extract object structure

or texture information. Therefore, camera localization is often

coupled with laser scanners (Lin et al., 2012) or structured

light (Kerl et al., 2013), which yield structured object information.

Recent work on semi-dense (Forster et al., 2014, Engel et

al., 2014) and dense (Newcombe et al., 2011, Concha and Civera,

2015) camera-based SLAM systems makes use of a single camera

to estimate dense scene structure instead of reconstructing only

point features.

LSD-SLAM (Engel et al., 2014) is a semi-dense approach that

runs on a single CPU in real-time, in contrast to dense methods

(Newcombe et al., 2011) that need heavy GPU support. Using

direct image-alignment by minimizing the photometric error between

image discontinuities, the method skips the costly feature

extraction and matching stage of all feature-based SLAM systems.

The time saved compensates for the increased BA runtime

caused by the large number of observations. In addition,

scale-drift aware loop closing and large-scale double-window

optimization are included, making LSD-SLAM a state-of-the-art

approach that also runs in real-time. However, loop closing

uses FAB-MAP (Cummins and Newman, 2010) for place recognition

and thus requires SURF features to be extracted. Subsequent

work extended the method to mobile phones (Schöps et

al., 2014), stereo (Engel et al., 2015) as well as omnidirectional

cameras (Caruso et al., 2015). Instead of coupling camera pose

estimation and semi-dense mapping, in (Mur-Artal and Tardós,

2015) a semi-dense extension to ORB-SLAM is presented. The

semi-dense map is reconstructed from feature-based keyframes

using depth consistency tests and a novel correspondence search.

The semi-dense reconstruction is not obtained in real-time but is

calculated in a CPU thread running in parallel to tracking and

mapping. The method yields superior performance compared to

LSD-SLAM and it seems that the decoupling is advantageous,

especially in dynamic scenes.
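
The idea of direct image alignment described above for LSD-SLAM can be sketched in a few lines. The following Python toy example is not LSD-SLAM's implementation: it assumes a 1D image row, replaces the full pose-and-depth warp with a hypothetical integer pixel shift, and searches the candidate shifts by brute force instead of using iterative optimization. All names and the gradient threshold are illustrative assumptions. It only shows that the photometric error is evaluated at high-gradient pixels and that no feature extraction or matching is involved.

```python
def photometric_error(ref, cur, shift, grad_threshold=10.0):
    """Mean squared intensity difference between high-gradient pixels of
    `ref` and the pixels they map to in `cur` under a 1D integer shift."""
    total = 0.0
    count = 0
    for x in range(1, len(ref) - 1):
        # Central-difference gradient; low-gradient pixels are skipped,
        # which is what makes the approach semi-dense.
        gradient = abs(ref[x + 1] - ref[x - 1]) / 2.0
        if gradient < grad_threshold:
            continue
        x_warped = x + shift          # toy warp: pure translation
        if 0 <= x_warped < len(cur):
            residual = cur[x_warped] - ref[x]
            total += residual * residual
            count += 1
    # No overlapping pixels means the shift cannot be evaluated.
    return total / count if count else float("inf")


def align(ref, cur, candidate_shifts):
    """Return the candidate shift with the lowest photometric error."""
    return min(candidate_shifts,
               key=lambda s: photometric_error(ref, cur, s))


if __name__ == "__main__":
    # Hypothetical data: a bright bar that moved two pixels to the right.
    ref = [0, 0, 0, 0, 100, 100, 100, 100, 0, 0, 0, 0]
    cur = [0, 0, 0, 0, 0, 0, 100, 100, 100, 100, 0, 0]
    print(align(ref, cur, range(-3, 4)))
```

A real system would warp pixels with the estimated camera pose and per-pixel depth and would minimize the error with an iterative, robustly weighted solver; the brute-force search is used here only to keep the sketch short.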

3. CONTRIBUTIONS

We will extend the state-of-the-art ORB-SLAM to multi-fisheye

camera systems using MultiCol (Urban et al., 2016b). Our contributions

to ORB-SLAM (and ORB-SLAM2 respectively) are the

following:

1. The introduction of Multi-Keyframes (MKFs).