2.1 Probabilistic inference with a fully-specified model

"brain injury" indicates that the former is not relevant when specifying the conditional probability of "brain injury" given the variables preceding it. For the model to be fully specified, this graphical structure must, of course, be accompanied by actual numerical values for the relevant conditional probabilities, or for parameters that determine these.

The diseases in the middle layer of this belief network are mostly latent variables, invented by physicians to explain patterns of symptoms they have observed in patients. The symptoms in the bottom layer and the underlying causes in the top layer would generally be considered observable. Neither classification is unambiguous: one might consider microscopic observation of a pathogenic microorganism as a direct observation of a disease, and, on the other hand, "fever" could be considered a latent variable invented to explain why some patients have consistently high thermometer readings.

In any case, many of the variables in such a network will not, in fact, have been observed, and inference will require a summation over all possible combinations of values for these unobserved variables, as in equation (2.7). To find the probability that a patient with certain symptoms has cholera, for example, we must sum over all possible combinations of other diseases the patient may have as well, and over all possible combinations of underlying causes. For a complex network, the number of such combinations will be enormous. For some networks with sparse connectivity, exact numerical methods are nevertheless feasible (Pearl, 2:1988; Lauritzen and Spiegelhalter, 2:1988). For general networks, Markov chain Monte Carlo methods are an attractive approach to handling the computational difficulties (Pearl, 4:1987).
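The summation over unobserved variables can be illustrated with a minimal sketch of inference by brute-force enumeration. The four-variable network below (one underlying cause, two diseases, one symptom) and every probability in it are hypothetical, invented purely for illustration; they are not taken from the belief network discussed in the text. With k unobserved binary variables the sums range over 2^k combinations, which is why this direct approach becomes infeasible for a complex network.

```python
from itertools import product

# Hypothetical conditional probabilities (all numbers invented for illustration).
P_POOR_SANITATION = 0.1                       # P(s = 1)
P_CHOLERA = {0: 0.0001, 1: 0.002}             # P(c = 1 | s)
P_GASTRO = {0: 0.05, 1: 0.15}                 # P(g = 1 | s)
P_DIARRHEA = {(0, 0): 0.01, (0, 1): 0.6,      # P(d = 1 | c, g)
              (1, 0): 0.9, (1, 1): 0.95}


def bernoulli(p_one, value):
    """Probability that a binary variable with P(1) = p_one takes `value`."""
    return p_one if value == 1 else 1.0 - p_one


def joint(s, c, g, d):
    """Joint probability as the product of the conditional probabilities."""
    return (bernoulli(P_POOR_SANITATION, s)
            * bernoulli(P_CHOLERA[s], c)
            * bernoulli(P_GASTRO[s], g)
            * bernoulli(P_DIARRHEA[(c, g)], d))


def prob_cholera_given_diarrhea():
    """P(c = 1 | d = 1), summing over the unobserved variables s and g."""
    numerator = sum(joint(s, 1, g, 1)
                    for s, g in product((0, 1), repeat=2))
    denominator = sum(joint(s, c, g, 1)
                      for s, c, g in product((0, 1), repeat=3))
    return numerator / denominator


if __name__ == "__main__":
    print(prob_cholera_given_diarrhea())
```

Here the numerator sums over 4 combinations and the denominator over 8; each additional unobserved binary variable doubles both counts.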

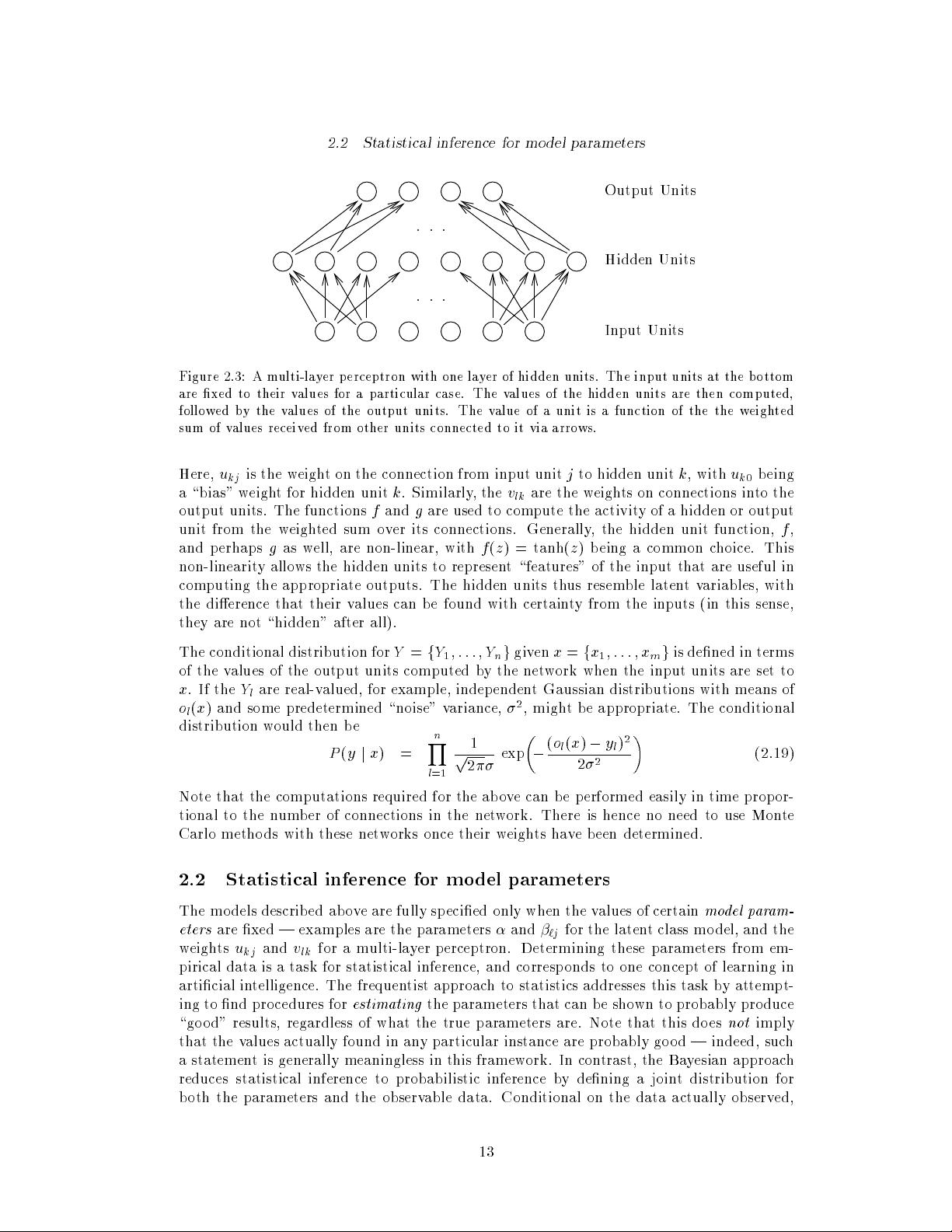

Example: Multi-layer perceptrons. The most widely used class of "neural networks" is that of the multi-layer perceptron (or backpropagation) networks (Rumelhart, Hinton, and Williams, 2:1986). These networks can be viewed as modeling the conditional distributions for an output vector, $Y$, given the various possible values of an input vector, $X$. The marginal distribution of $X$ is not modeled, so these networks are suitable only for regression or classification applications, not (directly, at least) for applications where the full joint distribution of the observed variables is required. Multi-layer perceptrons have been applied to a great variety of problems. Perhaps the most typical sorts of application take as input sensory information of some type and from that predict some characteristic of what is sensed. (Thodberg (2:1993), for example, predicts the fat content of meat from spectral information.)

Multi-layer perceptrons are almost always viewed as non-parametric models. They can have a variety of architectures, in which "input", "output", and "hidden" units are arranged and connected in various fashions, with the particular architecture (or several candidate architectures) being chosen by the designer to fit the characteristics of the problem. A simple and common arrangement is to have a layer of input units, which connect to a layer of hidden units, which in turn connect to a layer of output units. Such a network is shown in Figure 2.3. Architectures with more layers, selective connectivity, shared weights on connections, or other elaborations are also used.

The network of Figure 2.3 operates as follows. First, the input units are set to their observed values, $x = \{x_1, \ldots, x_m\}$. Values for the hidden units, $h = \{h_1, \ldots, h_p\}$, and for the output units, $o = \{o_1, \ldots, o_n\}$, are then computed as functions of $x$ as follows:

$$h_k(x) = f\Big(u_{k0} + \sum_j u_{kj}\, x_j\Big) \tag{2.17}$$

$$o_l(x) = g\Big(v_{l0} + \sum_k v_{lk}\, h_k(x)\Big) \tag{2.18}$$
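The computation in equations (2.17) and (2.18) can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `mlp_forward`, the list-of-lists weight layout, and the example weights are hypothetical, and the default choices of tanh for $f$ and the identity for $g$ are assumptions made here, since this passage does not fix the two activation functions.

```python
import math


def mlp_forward(x, u0, u, v0, v, f=math.tanh, g=lambda a: a):
    """Compute hidden values h_k(x) and outputs o_l(x) as in (2.17)-(2.18).

    x    : list of m input values x_j
    u0   : list of p hidden-unit biases u_{k0}
    u    : p lists of m input-to-hidden weights u_{kj}
    v0   : list of n output-unit biases v_{l0}
    v    : n lists of p hidden-to-output weights v_{lk}
    f, g : activation functions (tanh and identity are assumptions)
    """
    # Equation (2.17): h_k = f(u_k0 + sum_j u_kj * x_j)
    h = [f(bias + sum(w * xj for w, xj in zip(row, x)))
         for bias, row in zip(u0, u)]
    # Equation (2.18): o_l = g(v_l0 + sum_k v_lk * h_k)
    o = [g(bias + sum(w * hk for w, hk in zip(row, h)))
         for bias, row in zip(v0, v)]
    return h, o


if __name__ == "__main__":
    # Arbitrary illustrative weights: m = 2 inputs, p = 3 hidden, n = 1 output.
    x = [0.5, -1.0]
    u0 = [0.0, 0.1, -0.1]
    u = [[1.0, 0.0], [0.5, 0.5], [-1.0, 2.0]]
    v0 = [0.2]
    v = [[1.0, -1.0, 0.5]]
    hidden, outputs = mlp_forward(x, u0, u, v0, v)
    print(hidden, outputs)
```

Passing a different `g`, such as a logistic function, changes only the output stage and leaves the hidden-unit computation untouched.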
