Recent Advances and Trends in Multimodal Deep Learning: A Review • 7

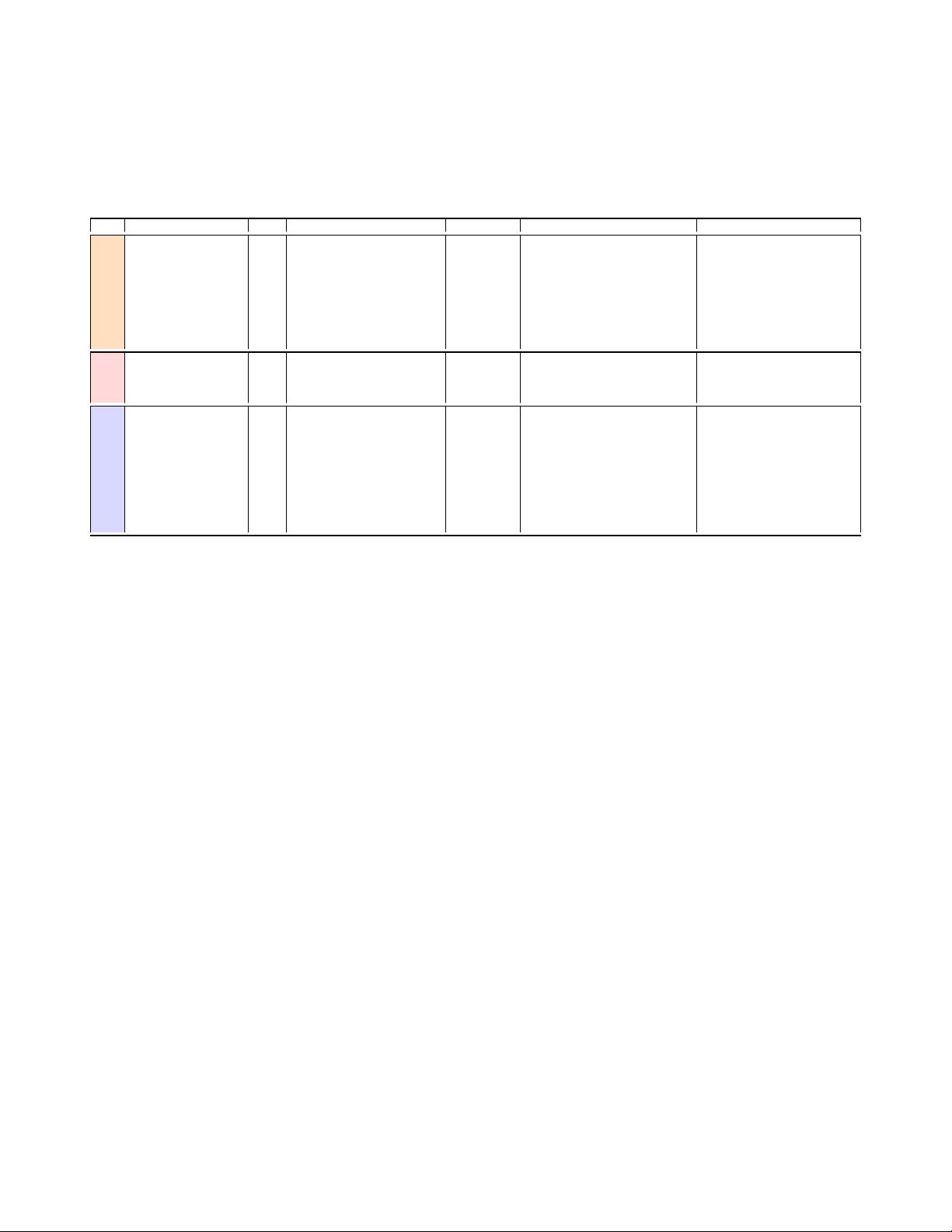

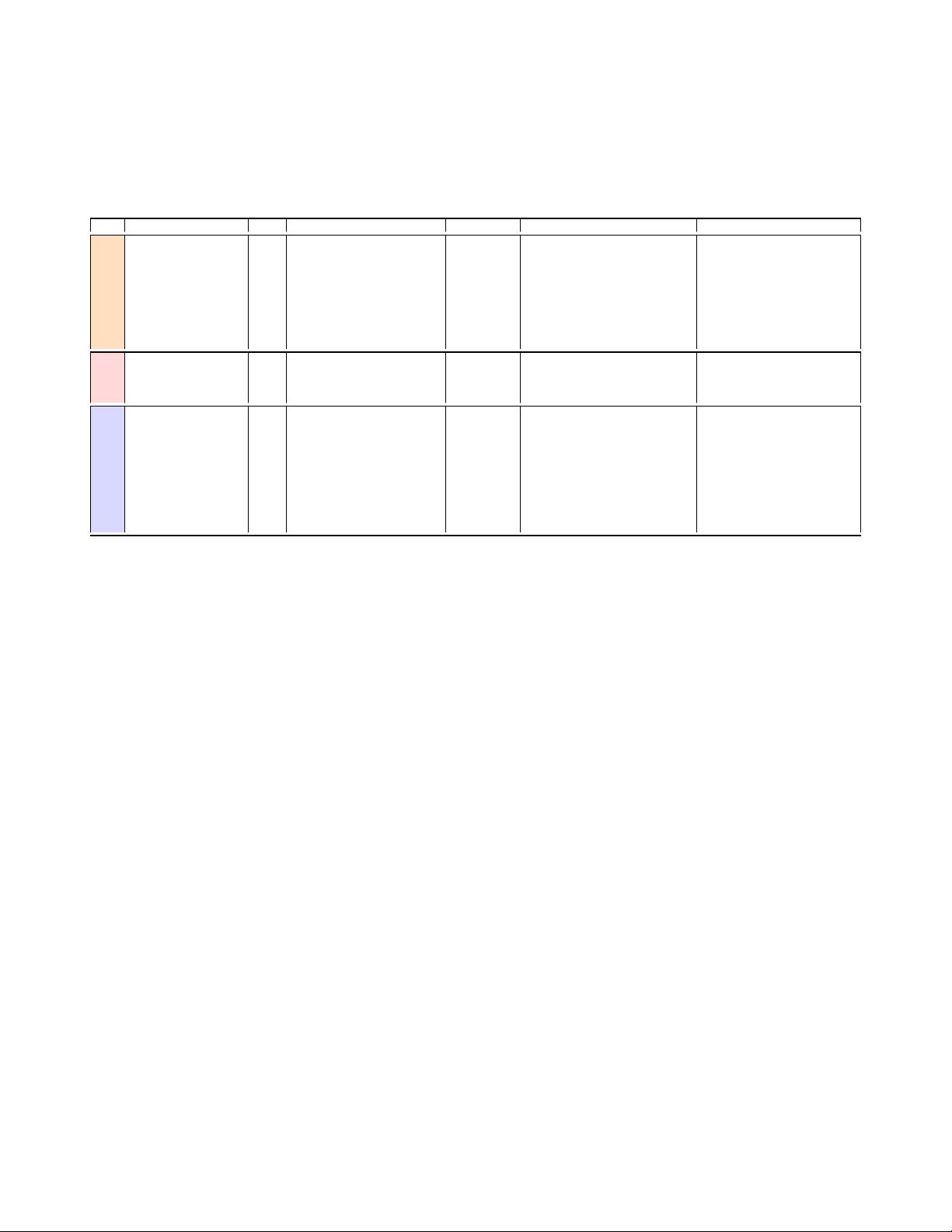

Table 3. Comparative analysis of image description models, where EDID = Encoder-Decoder based Image Description, SCID = Semantic Concept-based Image Description, and AID = Attention-based Image Description.

| Category | Paper | Year | Architecture | Multimedia | Dataset | Evaluation Metrics |
|---|---|---|---|---|---|---|
| EDID | J. Wu et al. [159] | 2017 | CNN/VGG16-InceptionV3, Stacked GRU | Image, Text | MS-COCO | BLEU, CIDEr, METEOR |
| EDID | R. Hu et al. [60] | 2017 | Faster RCNN/VGG16, RNN/BLSTM | Image, Text | Visual Genome, Google-Ref | Top-1 precision (P@1) metric |
| EDID | L. Guo et al. [46] | 2019 | Deep CNN, GAN, RNN/LSTM, GRU | Image, Text | FlickrStyle10K, SentiCap, MS-COCO | BLEU, CIDEr, METEOR, PPLX |
| EDID | X. He et al. [54] | 2019 | CNN/VGG16, RNN/LSTM | Image, Text | Flickr30k, MS-COCO | BLEU, CIDEr, METEOR |
| EDID | Y. Feng et al. [37] | 2019 | CNN/InceptionV4, RNN/LSTM | Image, Text | MS-COCO | BLEU, ROUGE, CIDEr, METEOR, SPICE |
| SCID | W. Wang et al. [154] | 2018 | CNN/VGG16, RNN/LSTM | Image, Text | MS-COCO | BLEU, CIDEr, METEOR |
| SCID | P. Cao et al. [22] | 2019 | CNN/VGG16, RNN/BLSTM | Image, Text | Flickr8k, MS-COCO | BLEU, CIDEr, METEOR |
| SCID | L. Cheng et al. [29] | 2020 | Faster-RCNN, RNN/LSTM | Image, Text | MS-COCO | BLEU, SPICE, METEOR, CIDEr, ROUGE |
| AID | L. Li et al. [80] | 2017 | CNN/VGG16-Faster RCNN, RNN/LSTM | Image, Text | Flickr8K, Flickr30K, MS-COCO | METEOR, ROUGE-L, CIDEr, BLEU |
| AID | P. Anderson et al. [7] | 2018 | Faster RCNN/ResNet101, RNN/LSTM, GRU | Image, Text | Visual Genome Dataset, MS-COCO, VQA v2.0 | BLEU, METEOR, CIDEr, SPICE, ROUGE |
| AID | M. Liu et al. [86] | 2020 | CNN/InceptionV4, RNN/LSTM | Image, Text | Flickr8k-CN, Flickr8k-CN, AIC-ICC | BLEU, ROUGE, CIDEr, METEOR |
| AID | M. Liu et al. [87] | 2020 | CNN/InceptionV4, RNN/LSTM | Image, Text | AIC-ICC | BLEU, ROUGE, CIDEr, METEOR |
| AID | B. Wang et al. [150] | 2020 | CNN/InceptionV4, RNN/LSTM | Image, Text | Flickr8K, Flickr8k-CN | BLEU, ROUGE, CIDEr, METEOR |
| AID | Y. Wei et al. [158] | 2020 | GAN, RNN/LSTM | Image, Text | MS-COCO | BLEU, METEOR, CIDEr, SPICE, ROUGE |
| AID | LU Jiasen et al. [68] | 2020 | CNN/ResNet, RNN/LSTM | Image, Text | Flickr30K, MS-COCO | BLEU, ROUGE, CIDEr, METEOR |

generation. B. Wang et al. [150] proposed an E2E-DL approach for image description using a semantic attention mechanism. In this approach, features are extracted from specific image regions using an attention mechanism to produce the corresponding descriptions. The approach can transfer English-language knowledge representations into Chinese to obtain cross-lingual image descriptions. Y. Wei et al. [158] proposed an image description framework that uses a multi-attention mechanism to extract local and non-local feature representations. LU Jiasen et al. [68] proposed an image description model based on an adaptive attention mechanism. The attention mechanism merges visual features extracted from the image by a CNN architecture with linguistic features produced by an LSTM architecture.
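To make the idea of attending over image regions concrete, the following is a minimal sketch of generic soft (scaled dot-product) attention. It is not the method of any of the cited papers. The function names `softmax` and `attend`, the toy feature values, and the assumption that region features and the decoder state share the same dimension are all illustrative. In particular, it does not implement the adaptive variant of [68].

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(region_features, hidden_state):
    """Soft attention over image regions (illustrative, hypothetical helper).

    region_features: list of k vectors, one per image region, each of length d
                     (e.g. CNN features for each region).
    hidden_state:    decoder state vector of length d (e.g. an LSTM state).
    Returns (weights, context): attention weights that sum to 1, and the
    context vector formed as the weighted sum of the region features.
    """
    d = len(hidden_state)
    scale = math.sqrt(d)
    # Score each region by its scaled dot product with the decoder state.
    scores = [sum(f * h for f, h in zip(region, hidden_state)) / scale
              for region in region_features]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the region features.
    context = [sum(w * region[j] for w, region in zip(weights, region_features))
               for j in range(d)]
    return weights, context

# Toy example: three regions with 2-dimensional features (made-up values).
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
state = [2.0, 0.0]
weights, context = attend(regions, state)
```

In a captioning decoder, a context vector of this kind would typically be combined with the language model state at each step before predicting the next word. How that combination is done differs between the models listed in Table 3.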

During the deep learning era (2010 to date), many authors have proposed techniques for describing the visual content of an image. The image description approaches described in Sections 3.1.1, 3.1.2, and 3.1.3 are compared in Table 3 according to architecture, multimedia, publication year, datasets, and evaluation metrics. The architectures used in these techniques are explained briefly in Section 4. Similarly, datasets and evaluation metrics are discussed in Sections 5 and 6, respectively.

3.2 Multimodal Video Description:

Like image description, video description generates a textual description of the visual content of an input video. Its applications include video subtitling, video description for visually impaired users, video surveillance, sign language video description, and human-robot interaction. Advancements in this field open up many opportunities in various application domains. The process mainly involves two modalities: the video stream and text. The general structure of video description is shown in Figures 3 (a) & (b) and 4.

During the deep learning era, many authors have contributed to video description using various methods. At the start of the DL era, several classical and statistical video description approaches were proposed based on Subject, Object, Verb (SVO) tuple methods [2]. These SVO tuple-based methods laid the foundation for the description of