GU et al.: CE-NET FOR 2D MEDICAL IMAGE SEGMENTATION 2283

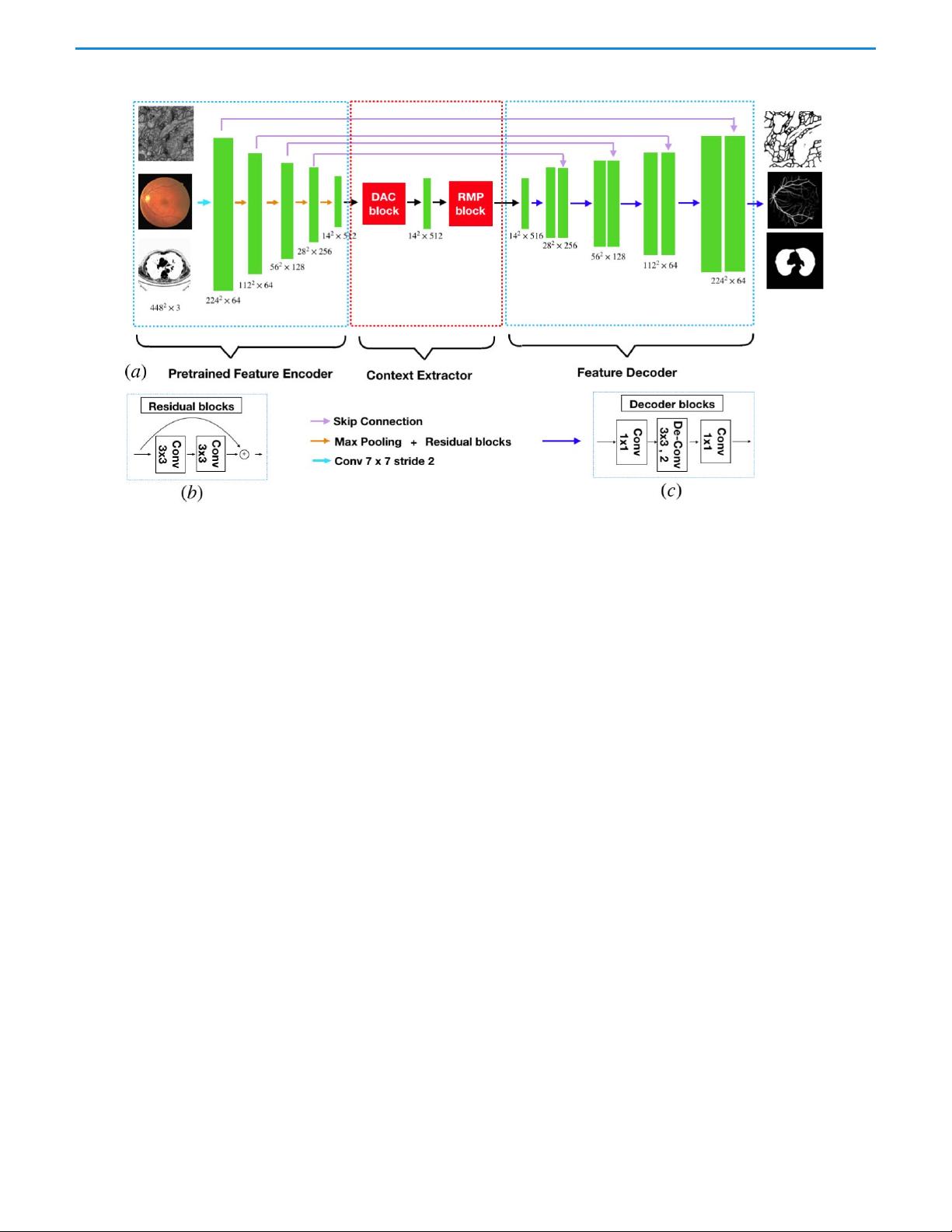

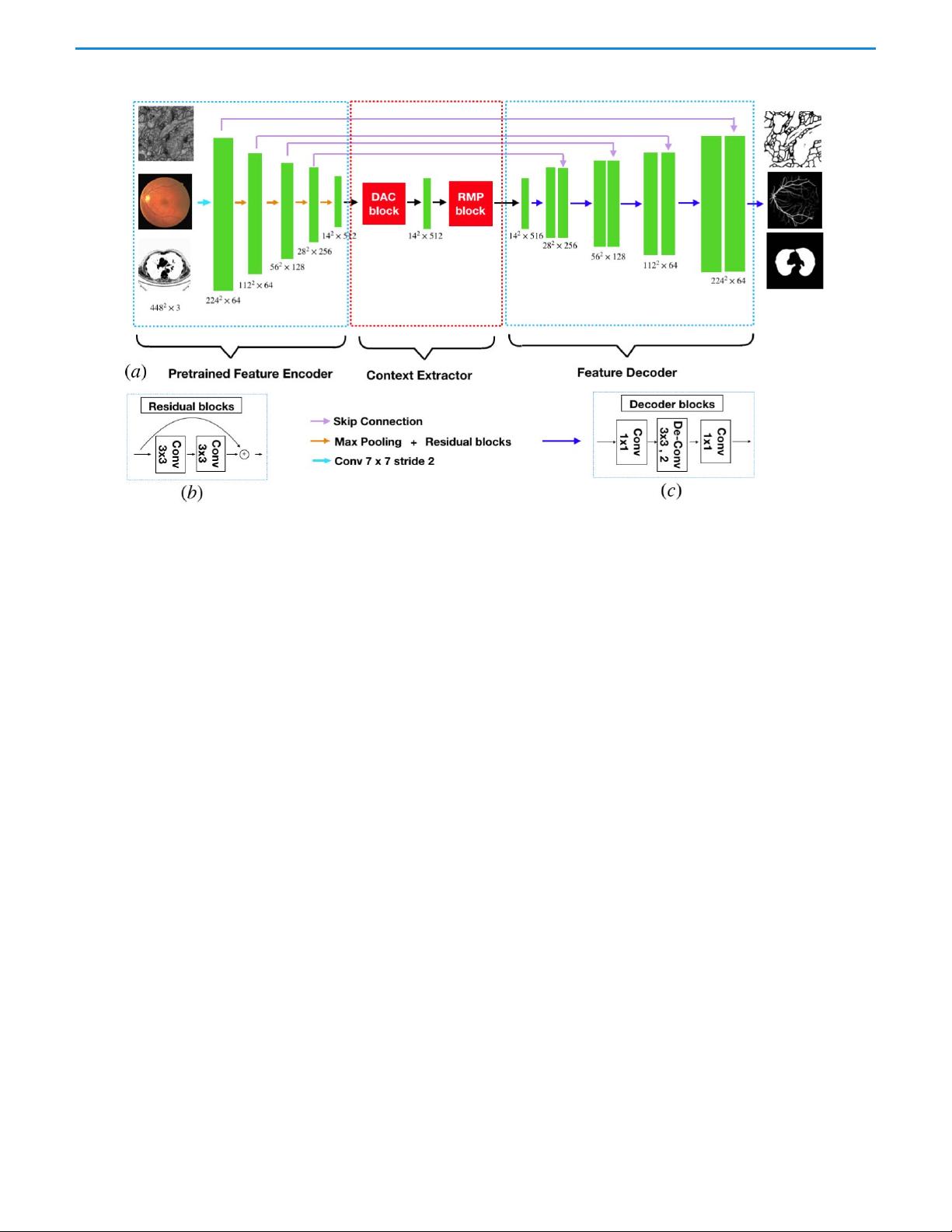

Fig. 1. Illustration of the proposed CE-Net. First, the images are fed into a feature encoder module, where the ResNet-34 block pretrained on ImageNet replaces the original U-Net encoder block. The context extractor is proposed to generate more high-level semantic feature maps. It contains a dense atrous convolution (DAC) block and a residual multi-kernel pooling (RMP) block. Finally, the extracted features are fed into the feature decoder module. In this paper, we adopt a decoder block to enlarge the feature size, replacing the original up-sampling operation. The decoder block contains 1×1 convolution and 3×3 deconvolution operations. Based on the skip connections and the decoder block, we obtain the mask as the segmentation prediction map.

from spatial pyramid pooling [55]. The RMP block further encodes the multi-scale context features of the object extracted from the DAC module by employing pooling operations of various sizes, without extra learnable weights. In summary, the DAC block is proposed to extract enriched feature representations with multi-scale atrous convolutions, followed by the RMP block, which gathers further context information with multi-scale pooling operations. Integrating the newly proposed DAC block and RMP block with the backbone encoder-decoder structure, we propose a novel context encoder network named CE-Net. It relies on the DAC block and the RMP block to obtain more abstract features and preserve more spatial information, boosting the performance of medical image segmentation.
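The idea of pooling at several sizes without adding learnable weights can be illustrated with a minimal, framework-free sketch. It is not the RMP block itself: the helper names `max_pool2d` and `multi_kernel_pool`, the kernel sizes (2 and 3), and the toy 6×6 feature map are assumptions chosen only for illustration.

```python
# Illustrative sketch only: plain-Python max pooling at several window sizes.
# The kernel sizes below are assumptions, not values taken from the text.

def max_pool2d(feature, kernel):
    """Non-overlapping max pooling (stride == kernel) on a 2D list.

    Rows and columns that do not fill a whole window are dropped.
    """
    rows = len(feature) // kernel
    cols = len(feature[0]) // kernel
    return [
        [
            max(
                feature[r * kernel + i][c * kernel + j]
                for i in range(kernel)
                for j in range(kernel)
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def multi_kernel_pool(feature, kernels=(2, 3)):
    """Pool the same feature map with each kernel size; nothing is learned."""
    return {k: max_pool2d(feature, k) for k in kernels}


# Toy 6x6 feature map holding the values 0..35 in row-major order.
feature = [[r * 6 + c for c in range(6)] for r in range(6)]
pooled = multi_kernel_pool(feature)
print(pooled[2])  # 3x3 summary of the map
print(pooled[3])  # 2x2 summary of the map
```

Each window size summarizes the same map at a different scale, and the operation has no trainable parameters, which is the property the paragraph above attributes to the pooling step.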

The main contributions of this work are summarized as follows:

1) We propose a DAC block and an RMP block to capture more high-level features and preserve more spatial information.

2) We integrate the proposed DAC block and RMP block with an encoder-decoder structure for medical image segmentation.

3) We apply the proposed method to different tasks, including optic disc segmentation, retinal vessel detection, lung segmentation, cell contour segmentation, and retinal OCT layer segmentation. Results show that the proposed method outperforms the state-of-the-art methods in these tasks.

The remainder of this paper is organized as follows. Section II introduces the proposed method in detail. Section III presents the experimental results and discussions. In Section IV, we draw some conclusions.

II. METHOD

The proposed CE-Net consists of three major parts: the feature encoder module, the context extractor module, and the feature decoder module, as shown in Fig. 1.

A. Feature Encoder Module

In the U-Net architecture, each encoder block contains two convolution layers and one max pooling layer. In the proposed method, we replace it with the pretrained ResNet-34 [53] in the feature encoder module, which retains the first four feature extracting blocks without the average pooling layer and the fully connected layers. Compared with the original block, ResNet adds a shortcut mechanism to avoid vanishing gradients and accelerate network convergence, as shown in Fig. 1(b). For convenience, we refer to the modified U-Net with the pretrained ResNet encoder as the backbone approach.
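To make the encoder layout concrete, the following sketch traces the feature-map shapes through a standard ResNet-34 once the average pooling and fully connected layers are removed. It is shape bookkeeping only, with no weights and no deep-learning framework. The function names and the 448×448 input size are assumptions for illustration; the channel counts and strides are those of the standard ResNet-34 design.

```python
# Illustrative sketch only: shape bookkeeping for a truncated ResNet-34.
# The 448x448 input size is an assumption chosen for the example.

def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet34_encoder_shapes(height, width):
    """Return (name, channels, height, width) for the stem and the four
    residual stages, leaving out average pooling and the classifier."""
    shapes = []
    # Stem: 7x7 convolution with stride 2, then 3x3 max pooling with stride 2.
    h, w = conv_out(height, 7, 2, 3), conv_out(width, 7, 2, 3)
    h, w = conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1)
    shapes.append(("stem", 64, h, w))
    # (output channels, residual blocks, stride of the first block) per stage.
    stages = [(64, 3, 1), (128, 4, 2), (256, 6, 2), (512, 3, 2)]
    for index, (channels, _blocks, stride) in enumerate(stages, start=1):
        # Only the first block of a stage changes the resolution.
        h, w = conv_out(h, 3, stride, 1), conv_out(w, 3, stride, 1)
        shapes.append((f"stage{index}", channels, h, w))
    return shapes


for name, c, h, w in resnet34_encoder_shapes(448, 448):
    print(f"{name}: {c} x {h} x {w}")
# The last line printed is "stage4: 512 x 14 x 14".
```

Under these assumptions the four retained blocks reduce a 448×448 image to a 14×14 map with 512 channels, and each earlier stage provides a higher-resolution map that an encoder-decoder design can reuse through skip connections.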

B. Context Extractor Module

The context extractor module is a newly proposed module, consisting of the DAC block and the RMP block. This module extracts context semantic information and generates more high-level feature maps.
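Atrous (dilated) convolution, the operation on which the DAC block builds, can be sketched in one dimension without any framework. The sketch below is not the DAC block: the function names, the dilation rates (1, 3, 5), the three-tap kernel, and the toy signal are assumptions used only to show how the receptive field grows while the number of weights stays fixed.

```python
# Illustrative sketch only: 1D atrous convolution in plain Python.
# Rates, kernel, and signal are assumptions chosen for the example.

def receptive_field(kernel_size, rate):
    """Input samples spanned by one output value of an atrous convolution."""
    return (kernel_size - 1) * rate + 1


def atrous_conv1d(signal, kernel, rate):
    """'Valid' 1D atrous convolution: kernel taps are `rate` samples apart.

    Implemented as cross-correlation, the convention used by most
    deep-learning frameworks.
    """
    span = receptive_field(len(kernel), rate)
    return [
        sum(k * signal[start + i * rate] for i, k in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]


signal = list(range(12))   # a linear ramp 0, 1, ..., 11
kernel = [1, 0, -1]        # three weights, whatever the rate
for rate in (1, 3, 5):
    out = atrous_conv1d(signal, kernel, rate)
    print(rate, receptive_field(len(kernel), rate), out)
```

With the same three weights, the receptive field grows from 3 to 7 to 11 samples as the rate increases, so combining several rates lets a block observe context at several scales.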