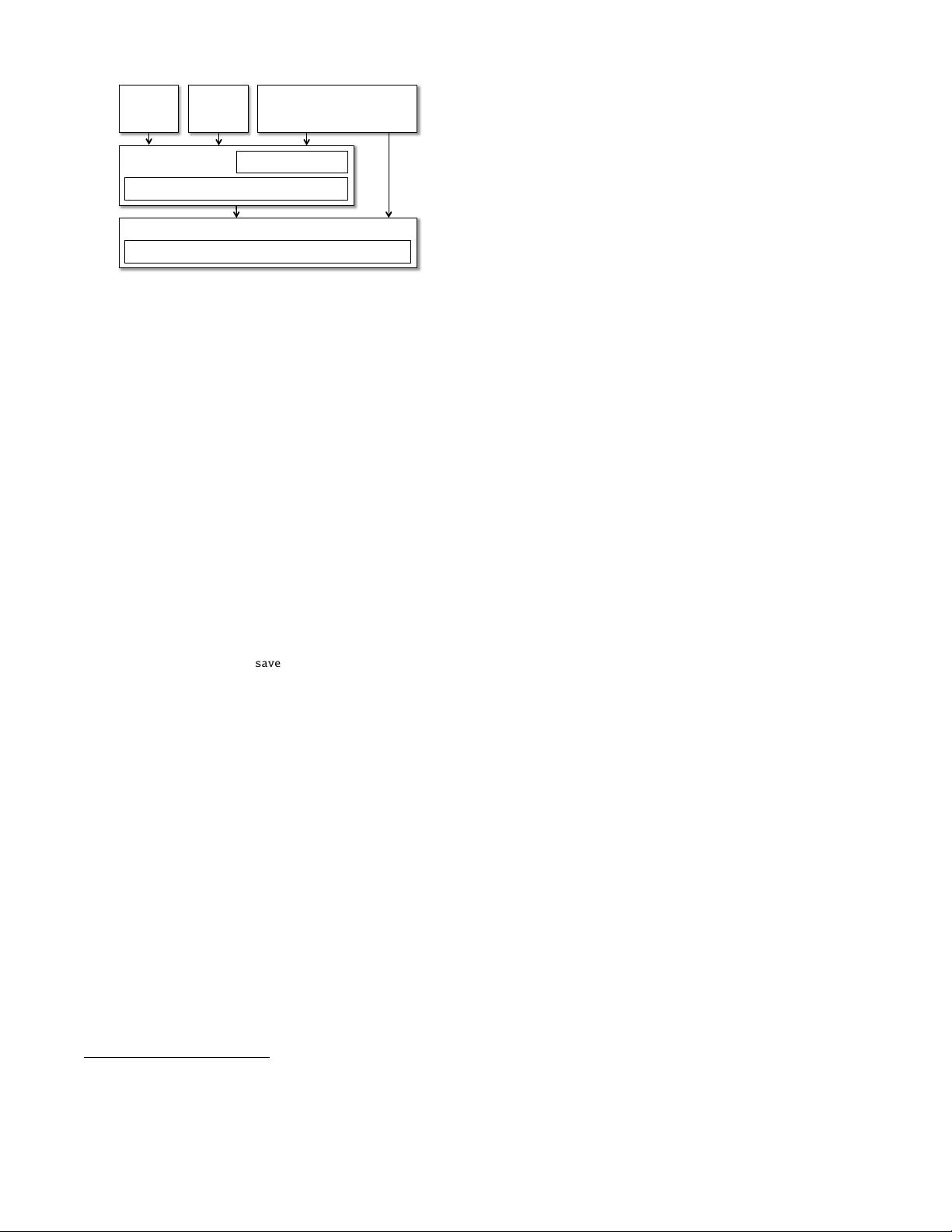

Spark SQL

Resilient Distributed Datasets

Spark

JDBC Console

User Programs

(Java, Scala, Python)

Catalyst Optimizer

DataFrame API

Figure 1: Interfaces to Spark SQL, and interaction with Spark.

3.1 DataFrame API

The main abstraction in Spark SQL’s API is a DataFrame, a dis-

tributed collection of rows with a homogeneous schema. A DataFrame

is equivalent to a table in a relational database, and can also be

manipulated in similar ways to the “native” distributed collections

in Spark (RDDs).

1

Unlike RDDs, DataFrames keep track of their

schema and support various relational operations that lead to more

optimized execution.

DataFrames can be constructed from tables in a system cata-

log (based on external data sources) or from existing RDDs of

native Java/Python objects (Section 3.5). Once constructed, they

can be manipulated with various relational operators, such as where

and groupBy, which take expressions in a domain-specific language

(DSL) similar to data frames in R and Python [32, 30]. Each

DataFrame can also be viewed as an RDD of Row objects, allowing

users to call procedural Spark APIs such as map.

2

Finally, unlike traditional data frame APIs, Spark DataFrames

are lazy, in that each DataFrame object represents a logical plan to

compute a dataset, but no execution occurs until the user calls a spe-

cial “output operation” such as save. This enables rich optimization

across all operations that were used to build the DataFrame.

To illustrate, the Scala code below defines a DataFrame from a

table in Hive, derives another based on it, and prints a result:

ctx = new Hive Cont ext ()

users = ctx.table (" users ")

young = users .where ( users(" age") < 21)

println(young. count ())

In this code, users and young are DataFrames. The snippet

users("age") < 21 is an expression in the data frame DSL, which

is captured as an abstract syntax tree rather than representing a

Scala function as in the traditional Spark API. Finally, each DataFrame

simply represents a logical plan (i.e., read the users table and filter

for age < 21). When the user calls count, which is an output opera-

tion, Spark SQL builds a physical plan to compute the final result.

This might include optimizations such as only scanning the “age”

column of the data if its storage format is columnar, or even using

an index in the data source to count the matching rows.

We next cover the details of the DataFrame API.

3.2 Data Model

Spark SQL uses a nested data model based on Hive [19] for ta-

bles and DataFrames. It supports all major SQL data types, includ-

ing boolean, integer, double, decimal, string, date, and timestamp,

1

We chose the name DataFrame because it is similar to structured data li-

braries in R and Python, and designed our API to resemble those.

2

These Row objects are constructed on the fly and do not necessarily rep-

resent the internal storage format of the data, which is typically columnar.

as well as complex (i.e., non-atomic) data types: structs, arrays,

maps and unions. Complex data types can also be nested together

to create more powerful types. Unlike many traditional DBMSes,

Spark SQL provides first-class support for complex data types in

the query language and the API. In addition, Spark SQL also sup-

ports user-defined types, as described in Section 4.4.2.

Using this type system, we have been able to accurately model

data from a variety of sources and formats, including Hive, rela-

tional databases, JSON, and native objects in Java/Scala/Python.

3.3 DataFrame Operations

Users can perform relational operations on DataFrames using a

domain-specific language (DSL) similar to R data frames [32] and

Python Pandas [30]. DataFrames support all common relational

operators, including projection (select), filter (where), join, and

aggregations (groupBy). These operators all take expression ob-

jects in a limited DSL that lets Spark capture the structure of the

expression. For example, the following code computes the number

of female employees in each department.

employees

.join(dept , employees("deptId") === dept("id"))

.where(employees("gender") === "female")

.groupBy(dept("id"), dept("name"))

.agg(count("name"))

Here, employees is a DataFrame, and employees("deptId") is

an expression representing the deptId column. Expression ob-

jects have many operators that return new expressions, including

the usual comparison operators (e.g., === for equality test, > for

greater than) and arithmetic ones (+, -, etc). They also support ag-

gregates, such as count("name"). All of these operators build up an

abstract syntax tree (AST) of the expression, which is then passed

to Catalyst for optimization. This is unlike the native Spark API

that takes functions containing arbitrary Scala/Java/Python code,

which are then opaque to the runtime engine. For a detailed listing

of the API, we refer readers to Spark’s official documentation [6].

Apart from the relational DSL, DataFrames can be registered as

temporary tables in the system catalog and queried using SQL. The

code below shows an example:

users .where (users ("age ") < 21)

.registerTempTable("young")

ctx .sql (" SEL ECT count (*) , avg ( age) FROM yo ung ")

SQL is sometimes convenient for computing multiple aggregates

concisely, and also allows programs to expose datasets through JD-

BC/ODBC. The DataFrames registered in the catalog are still un-

materialized views, so that optimizations can happen across SQL

and the original DataFrame expressions. However, DataFrames can

also be materialized, as we discuss in Section 3.6.

3.4 DataFrames versus Relational Query Languages

While on the surface, DataFrames provide the same operations as

relational query languages like SQL and Pig [29], we found that

they can be significantly easier for users to work with thanks to

their integration in a full programming language. For example,

users can break up their code into Scala, Java or Python functions

that pass DataFrames between them to build a logical plan, and

will still benefit from optimizations across the whole plan when

they run an output operation. Likewise, developers can use control

structures like if statements and loops to structure their work. One

user said that the DataFrame API is “concise and declarative like

SQL, except I can name intermediate results,” referring to how it is

easier to structure computations and debug intermediate steps.

To simplify programming in DataFrames, we also made API an-

alyze logical plans eagerly (i.e., to identify whether the column