TO APPEAR IN IEEE SIGNAL PROCESSING MAGAZINE, SPECIAL ISSUE ON DEEP LEARNING FOR IMAGE UNDERSTANDING 3

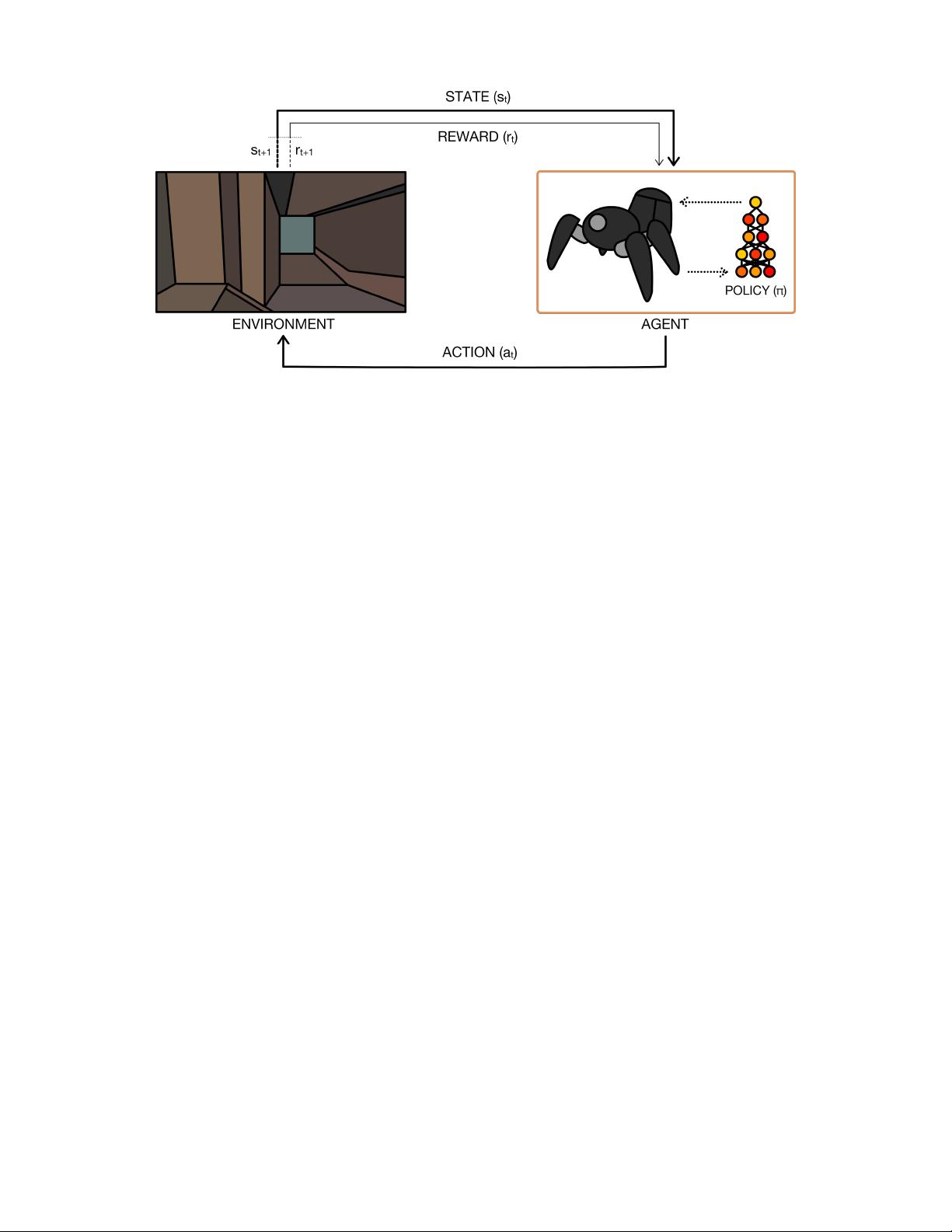

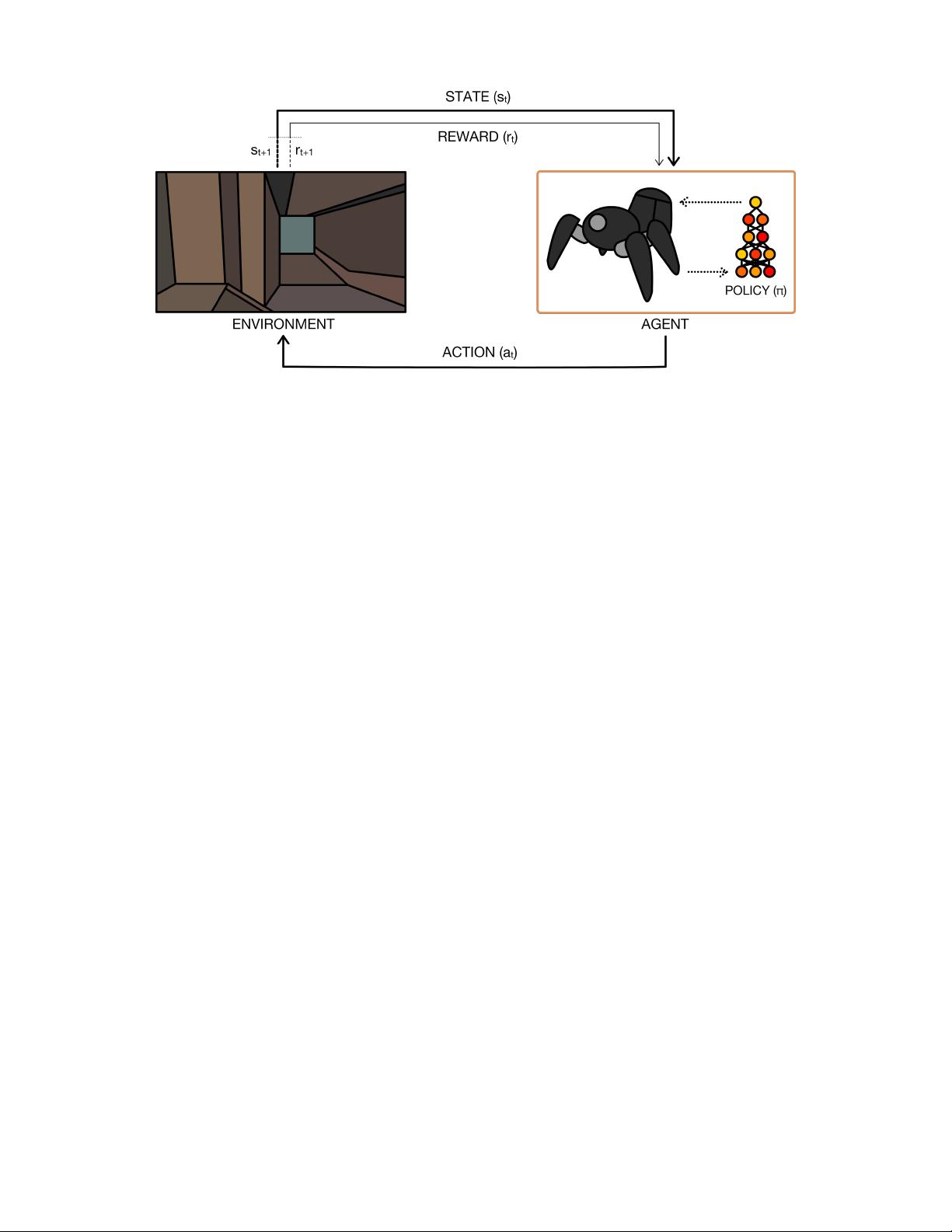

Fig. 2. The perception-action-learning loop. At time $t$, the agent receives state $s_t$ from the environment. The agent uses its policy to choose an action $a_t$. Once the action is executed, the environment transitions a step, providing the next state $s_{t+1}$ as well as feedback in the form of a reward $r_{t+1}$. The agent uses knowledge of state transitions, of the form $(s_t, a_t, s_{t+1}, r_{t+1})$, in order to learn and improve its policy.

learning loop is illustrated in Figure 2.
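The loop in Fig. 2 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `ChainEnv`, `random_policy` and `run_loop` are hypothetical names, the environment is a toy five-state corridor invented for this example, and the learning step that would use the collected transitions to improve the policy is deliberately left out.

```python
import random
from typing import Callable, List, Tuple

# One transition (s_t, a_t, s_{t+1}, r_{t+1}), as in Fig. 2.
Transition = Tuple[int, int, int, float]


class ChainEnv:
    """Hypothetical toy environment: a corridor of states 0..n_states-1.

    Action 1 moves right and action 0 moves left (clamped at the ends).
    Being in the rightmost state after a step yields reward 1.0, else 0.0.
    """

    def __init__(self, n_states: int = 5) -> None:
        self.n_states = n_states
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int) -> Tuple[int, float]:
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.n_states - 1)
        reward = 1.0 if self.state == self.n_states - 1 else 0.0
        return self.state, reward


def random_policy(state: int, rng: random.Random) -> int:
    """Placeholder policy: ignores the state and picks an action uniformly."""
    return rng.choice([0, 1])


def run_loop(env: ChainEnv,
             policy: Callable[[int, random.Random], int],
             n_steps: int,
             rng: random.Random) -> List[Transition]:
    """Run the perception-action loop and record every transition."""
    transitions: List[Transition] = []
    s_t = env.reset()                    # initial state from the environment
    for _ in range(n_steps):
        a_t = policy(s_t, rng)           # agent chooses an action from s_t
        s_next, r_next = env.step(a_t)   # environment transitions one step
        transitions.append((s_t, a_t, s_next, r_next))
        s_t = s_next                     # the next state becomes the current one
    return transitions


if __name__ == "__main__":
    for tr in run_loop(ChainEnv(), random_policy, 8, random.Random(0)):
        print(tr)
```

A learning algorithm would consume the returned list of $(s_t, a_t, s_{t+1}, r_{t+1})$ tuples; which algorithm to use is a separate design choice that this sketch does not make.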

A. Markov Decision Processes

Formally, RL can be described as a Markov decision process (MDP), which consists of:

• A set of states $\mathcal{S}$, plus a distribution of starting states $p(s_0)$.

• A set of actions $\mathcal{A}$.

• Transition dynamics $\mathcal{T}(s_{t+1} \mid s_t, a_t)$ that map a state-action pair at time $t$ onto a distribution of states at time $t+1$.

• An immediate/instantaneous reward function $\mathcal{R}(s_t, a_t, s_{t+1})$.

• A discount factor $\gamma \in [0, 1]$, where lower values place more emphasis on immediate rewards.
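For a finite MDP, the five components listed above map naturally onto a small data structure. The sketch below is one possible encoding, not a standard API: the class `TabularMDP`, its field names, the dictionary layout and the two-state example with its probabilities and rewards are all hypothetical choices made for illustration.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple

State = str
Action = str


def _sample(dist: Dict[State, float], rng: random.Random) -> State:
    """Draw one key from a {outcome: probability} dictionary."""
    outcomes = list(dist)
    return rng.choices(outcomes, weights=[dist[o] for o in outcomes], k=1)[0]


@dataclass
class TabularMDP:
    """Hypothetical container for a finite MDP (S, A, p(s_0), T, R, gamma)."""
    states: List[State]
    actions: List[Action]
    start_dist: Dict[State, float]                               # p(s_0)
    transitions: Dict[Tuple[State, Action], Dict[State, float]]  # T(s_{t+1} | s_t, a_t)
    rewards: Dict[Tuple[State, Action, State], float]            # R(s_t, a_t, s_{t+1}); missing = 0
    gamma: float = 0.9                                           # discount factor

    def __post_init__(self) -> None:
        # Check the constraints stated in the definition.
        if not 0.0 <= self.gamma <= 1.0:
            raise ValueError("gamma must lie in [0, 1]")
        for key, dist in self.transitions.items():
            if abs(sum(dist.values()) - 1.0) > 1e-9:
                raise ValueError(f"T(. | {key}) must sum to 1")

    def sample_start(self, rng: random.Random) -> State:
        """Sample s_0 ~ p(s_0)."""
        return _sample(self.start_dist, rng)

    def step(self, s: State, a: Action, rng: random.Random) -> Tuple[State, float]:
        """Sample s_{t+1} ~ T(. | s, a) and return it with R(s, a, s_{t+1})."""
        s_next = _sample(self.transitions[(s, a)], rng)
        return s_next, self.rewards.get((s, a, s_next), 0.0)


# Illustrative two-state example; all numbers are made up.
mdp = TabularMDP(
    states=["low", "high"],
    actions=["wait", "work"],
    start_dist={"low": 1.0},
    transitions={
        ("low", "wait"): {"low": 1.0},
        ("low", "work"): {"low": 0.3, "high": 0.7},
        ("high", "wait"): {"low": 0.5, "high": 0.5},
        ("high", "work"): {"high": 1.0},
    },
    rewards={("low", "work", "high"): 1.0, ("high", "work", "high"): 2.0},
    gamma=0.9,
)
```

Storing $\mathcal{T}$ as explicit per-pair distributions only works when $\mathcal{S}$ and $\mathcal{A}$ are small; for image observations, the setting this survey is concerned with, the dynamics are typically only available by sampling from an environment.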

In general, the policy $\pi$ is a mapping from states to a probability distribution over actions: $\pi: \mathcal{S} \rightarrow p(\mathcal{A} = a \mid \mathcal{S})$. If the MDP is episodic, i.e., the state is reset after each episode of length $T$, then the sequence of states, actions and rewards in an episode constitutes a trajectory or rollout of the policy. Every rollout of a policy accumulates rewards from the environment, resulting in the return $R = \sum_{t=0}^{T-1} \gamma^t r_{t+1}$. The goal of RL is to find an optimal policy, $\pi^*$, which achieves the maximum expected return from all states:

$$\pi^* = \operatorname*{argmax}_{\pi} \mathbb{E}[R \mid \pi] \tag{1}$$
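The return and the objective in Eq. (1) can be made concrete with a Monte Carlo estimate: run many rollouts of a policy, compute each discounted return, and average. The sketch below assumes a hypothetical toy task (a repeated two-action gamble whose only "state" is the time step) and takes the argmax over three hand-written candidate policies; this brute-force comparison only illustrates the objective and is not how RL algorithms search the space of policies.

```python
import random
from typing import Callable, Dict, List

Policy = Callable[[int, random.Random], int]


def discounted_return(rewards: List[float], gamma: float) -> float:
    """R = sum_{t=0}^{T-1} gamma^t * r_{t+1}, where rewards[t] holds r_{t+1}."""
    return sum(gamma ** t * r for t, r in enumerate(rewards))


def rollout(policy: Policy, horizon: int, rng: random.Random) -> List[float]:
    """One episode of length T = horizon in a hypothetical toy task.

    The 'state' is just the time step t. Action 1 pays 1.0 with probability
    0.8 and action 0 pays 1.0 with probability 0.2; otherwise the reward is 0.
    """
    rewards = []
    for t in range(horizon):
        a = policy(t, rng)
        p_win = 0.8 if a == 1 else 0.2
        rewards.append(1.0 if rng.random() < p_win else 0.0)
    return rewards


def estimate_expected_return(policy: Policy, horizon: int, gamma: float,
                             n_episodes: int, rng: random.Random) -> float:
    """Monte Carlo estimate of E[R | pi]: average return over n_episodes rollouts."""
    total = sum(discounted_return(rollout(policy, horizon, rng), gamma)
                for _ in range(n_episodes))
    return total / n_episodes


# Hypothetical candidate policies to compare.
candidates: Dict[str, Policy] = {
    "always_0": lambda t, rng: 0,
    "always_1": lambda t, rng: 1,
    "uniform": lambda t, rng: rng.choice([0, 1]),
}

if __name__ == "__main__":
    rng = random.Random(0)
    values = {name: estimate_expected_return(pi, 10, 0.9, 2000, rng)
              for name, pi in candidates.items()}
    print(values, "best:", max(values, key=values.get))
```

With $T = 10$ and $\gamma = 0.9$, no policy can score more than $\sum_{t=0}^{9} 0.9^t \approx 6.51$ here, which is a useful sanity check on the estimates.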

It is also possible to consider non-episodic MDPs, where $T = \infty$. In this situation, $\gamma < 1$ prevents an infinite sum of rewards from being accumulated. Furthermore, methods that rely on complete trajectories are no longer applicable, but those that use a finite set of transitions still are.
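To see why $\gamma < 1$ keeps the sum finite, assume (an extra condition not stated above) that rewards are bounded, $|r_{t+1}| \le r_{\max}$ for all $t$. The geometric series then gives

$$|R| = \left|\sum_{t=0}^{\infty} \gamma^t r_{t+1}\right| \le \sum_{t=0}^{\infty} \gamma^t r_{\max} = \frac{r_{\max}}{1-\gamma}, \qquad 0 \le \gamma < 1.$$

For example, with $r_{\max} = 1$ and $\gamma = 0.99$ the return is bounded by $100$. With $\gamma = 1$ the series need not converge: a constant reward of $1$ already makes it diverge.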

A key concept underlying RL is the Markov property, i.e., only the current state affects the next state, or in other words, the future is conditionally independent of the past given the present state. This means that any decisions made at $s_t$ can be based solely on $s_t$, without reference to the earlier states $\{s_0, s_1, \ldots, s_{t-1}\}$.
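Written out for an MDP, the Markov property is a statement about the transition dynamics: conditioning on the whole history adds nothing beyond the current state and action,

$$p(s_{t+1} \mid s_0, a_0, s_1, a_1, \ldots, s_t, a_t) = p(s_{t+1} \mid s_t, a_t),$$

where the right-hand side is exactly the transition dynamics defined above. This is what justifies policies of the form $\pi(a_t \mid s_t)$, which condition on the current state rather than on the full history, in fully observable settings.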

Although this assumption is held by the majority of RL algorithms, it is somewhat unrealistic, as it requires the states to be fully observable. A generalisation of MDPs is the partially observable MDP (POMDP), in which the agent receives an observation $o_t \in \Omega$, where the distribution of the observation, $p(o_{t+1} \mid s_{t+1}, a_t)$, depends on the current state and the previous action [45]. In a control and signal processing context, the observation would be described by a measurement/observation mapping in a state-space model that depends on the current state and the previously applied action.

POMDP algorithms typically maintain a belief over the current state given the previous belief state, the action taken and the current observation. A more common approach in deep learning is to utilise recurrent neural networks (RNNs) [138, 35, 36, 72, 82], which, unlike feedforward neural networks, are dynamical systems. This approach to solving POMDPs is related to other problems using dynamical systems and state space models, where the true state can only be estimated [12].
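The classical belief-tracking approach mentioned above can be made concrete for a POMDP with finitely many states, assuming the transition and observation models are known. The sketch below implements the standard discrete Bayes filter, $b'(s') \propto p(o \mid s', a) \sum_{s} \mathcal{T}(s' \mid s, a)\, b(s)$; the function name, the nested-list layout of `T` and `O`, and the two-state example are illustrative assumptions, and this is the explicit filtering approach rather than the RNN-based one.

```python
from typing import List

Belief = List[float]


def belief_update(belief: Belief, action: int, observation: int,
                  T: List[List[List[float]]],
                  O: List[List[List[float]]]) -> Belief:
    """One step of a discrete Bayes filter for a finite POMDP.

    belief[s]:        b(s), the current belief over states
    T[a][s][s_next]:  T(s_next | s, a), transition probabilities
    O[a][s_next][o]:  p(o | s_next, a), observation probabilities
    Returns b'(s_next), proportional to p(o | s_next, a) * sum_s T(s_next | s, a) b(s).
    """
    n = len(belief)
    # Prediction: propagate the belief through the transition dynamics.
    predicted = [sum(T[action][s][s_next] * belief[s] for s in range(n))
                 for s_next in range(n)]
    # Correction: weight each predicted state by the observation likelihood.
    unnormalised = [O[action][s_next][observation] * predicted[s_next]
                    for s_next in range(n)]
    z = sum(unnormalised)
    if z == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return [u / z for u in unnormalised]


# Illustrative example: two states, one action that leaves the state unchanged,
# and a sensor that reports the true state with probability 0.8.
T_EXAMPLE = [[[1.0, 0.0],
              [0.0, 1.0]]]
O_EXAMPLE = [[[0.8, 0.2],
              [0.2, 0.8]]]

if __name__ == "__main__":
    b = [0.5, 0.5]
    for o in [1, 1, 0]:
        b = belief_update(b, 0, o, T_EXAMPLE, O_EXAMPLE)
        print(o, b)
```

Starting from a uniform belief, one reading of state 1 moves the belief to $[0.2, 0.8]$, and a second consistent reading sharpens it further. The update needs only the previous belief, the action and the current observation, matching the description above.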

B. Challenges in RL

It is instructive to emphasise some challenges faced in RL:

• The optimal policy must be inferred by trial-and-error interaction with the environment. The only learning signal the agent receives is the reward.

• The observations of the agent depend on its actions and can contain strong temporal correlations.

• Agents must deal with long-range time dependencies: often the consequences of an action only materialise after many transitions of the environment. This is known as the (temporal) credit assignment problem [115].
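One way to see why delayed consequences make credit assignment hard is to compute, for every step of an episode, the discounted return that follows it, $G_t = \sum_{k \ge 0} \gamma^k r_{t+k+1}$. The sketch below is a generic illustration rather than a method from this survey; the helper name `returns_to_go` and the 50-step, single-reward episode are assumptions chosen to make the effect visible.

```python
from typing import List


def returns_to_go(rewards: List[float], gamma: float) -> List[float]:
    """G_t = sum_{k>=0} gamma^k * r_{t+k+1} for every t, computed backwards.

    rewards[t] holds r_{t+1}, the reward observed after acting at step t.
    Uses the recursion G_t = r_{t+1} + gamma * G_{t+1}, so it runs in O(T).
    """
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns


if __name__ == "__main__":
    # A single reward arrives only at the end of a 50-step episode.
    rewards = [0.0] * 49 + [1.0]
    G = returns_to_go(rewards, gamma=0.9)
    print(G[-1], G[0])  # the first step's share is gamma**49, roughly 0.0057
```

Every action in this episode receives credit only through one heavily discounted signal, and nothing in that signal indicates which of the 50 actions actually mattered.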

We will illustrate these challenges in the context of an indoor robotic visual navigation task: if the goal location is specified, we may be able to estimate the distance remaining (and use it as a reward signal), but it is unlikely that we will know exactly what series of actions the robot needs to take to reach the goal. As the robot must choose where to go as it