is always used to characterize a manifold [21], for example,

the popular k-nearest-neighbor graph in manifold learning

just embodies the local neighborhood relation between

each sample and its k neighbors. Besides, the locality can

vividly reflect the size of structure granularity. Thus, the

locality structure, i.e., the manifestation of manifold

assumption, is introduced in the forthcoming SM frame-

work. Another conception, i.e., the class, is frequently

mentioned in supervised learning. Yet, it nearly is not

regarded as one of structures, because once the training

samples are given, the class just is fixed and thus naturally

is separately addressed. However, the so-called class

essentially is a given rather than assumed prior. So, com-

pared with the structures of cluster and locality, the class is

in nature a structure with the most size of granularity.

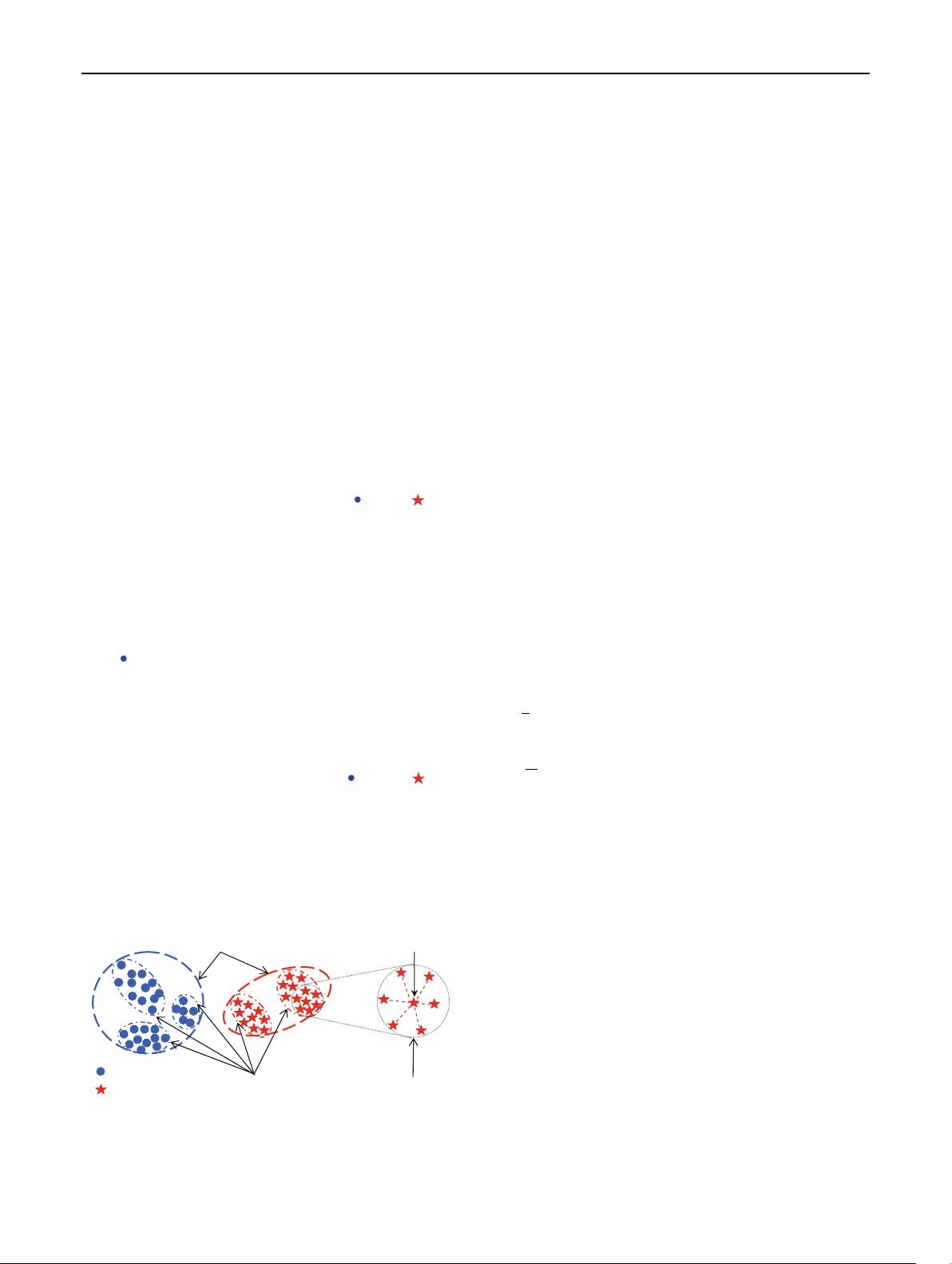

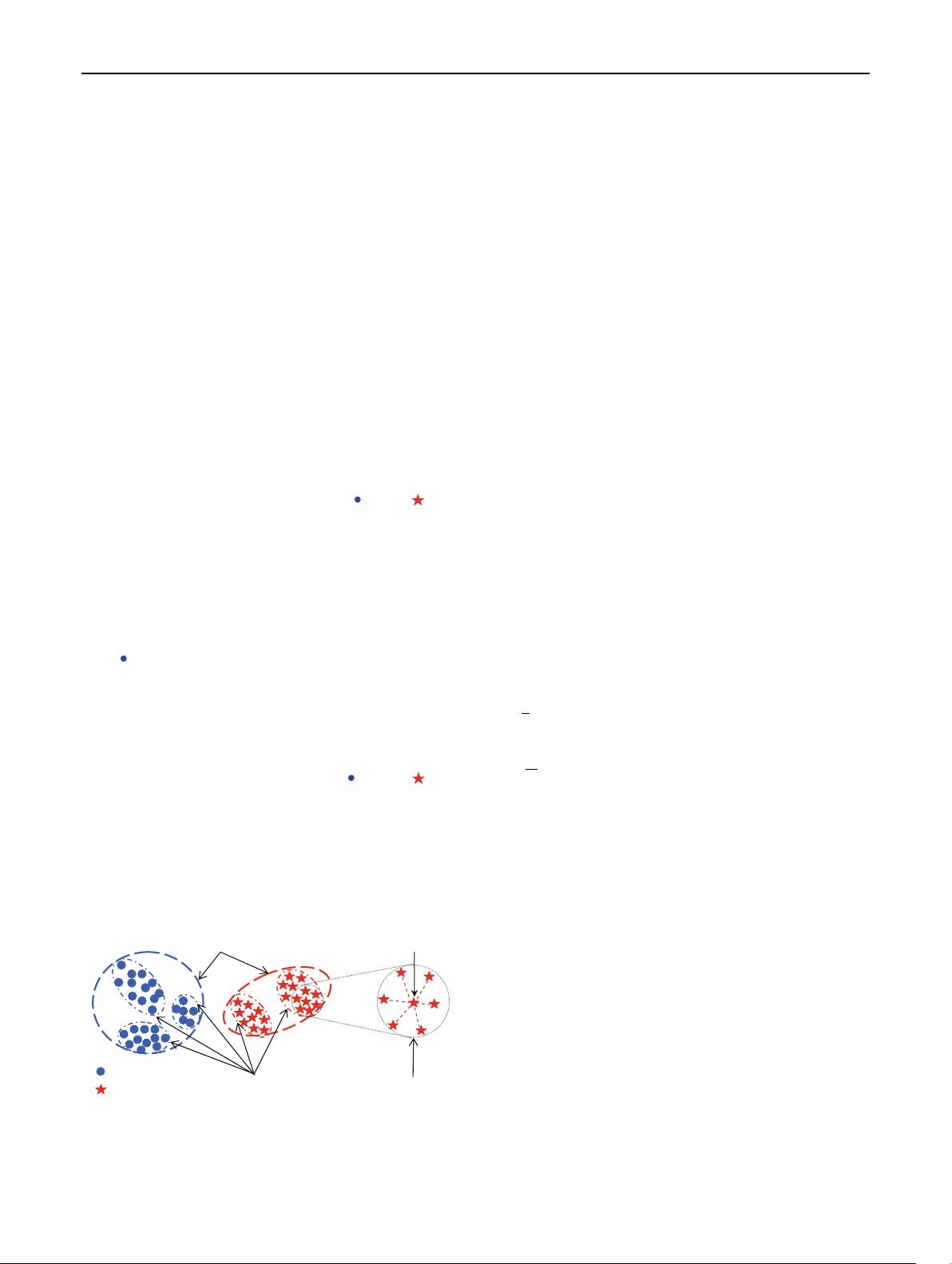

These structures mentioned above are summarized to form

the structure granularity spectrum of SM framework,

namely, from class to cluster and then to locality. Figure 2

illustrates these structure granularities in a two-dimen-

sional space.

For the given samples of two classes ‘‘

’’ a nd ‘‘ ’’, the

formation of class granularity seems natural, that is, one

global structure for each class, as denoted by two big dash-

line ellipses in Fig. 2.MDA[1] is a typical algorithm based

on class granularity. However, it is unavoidable for such

naturally formed simple structure to underfit the data at

hand. For example, the big dash-line ellipse for Class 1

leaves a great deal of blank-space that is not covered by

any ‘‘

’’. By contrast, the cluster granularity structure is

more compact, since each class is congregated into multi-

clusters, each of which, respectively, involves a portion of

all samples, and thus little blank-space is left in it. As those

small dash-dot-line ellipses shown in Fig. 2, they, respec-

tively, indicate three clusters of Class 1 and 2 clusters of

Class 2. In each cluster, only some ‘‘

’’ a nd ‘‘ ’’ are

involved. Clearly, each cluster has very little blank-space

and thus its structure is more compact. For the data of

Fig. 1b, if cluster granularity is used as the prior assump-

tion and the number of clusters is set to 3, then the intrinsic

structure of the data is relatively effectively captured,

because the data really consist of three Gaussians. SDA [4]

is one of the algorithms based on cluster granularity. Fur-

ther, the right zoomed part in Fig. 2 shows the locality

structure around point x

i

, where the six stars connected by

the six dash lines represent its six nearest neighbors. In

fact, each sample has own locality structure and these

locality structures for all sample can be used to charac-

terize the data manifold. For the data in Fig. 1c, if the

locality structure with proper neighbor parameter is adopted,

then the swissroll often can be effectively characterized since

it is just a manifold. MFA [2] exactly utilizes such a structure

to perform manifold learning. Besides, from the three granu-

larities of Class 2 in Fig. 2, it can be found that their sizes

roughly reflect such a relation that class granularity C cluster

granularity C locality granularity.

Next, the typical algorithms corresponding to different

granularities will briefly be reviewed and analyzed.

2.1 MDA with class-granularity structure

Multiple discriminant analysis [1] is one of the most pop-

ular linear methods for DA. It aims to find an optimal

projection matrix U

that yields the maximum ratio of the

between-class scatter S

B

to the within-class scatter S

w

S

B

¼

X

C

i¼1

n

i

ðm

i

mÞðm

i

mÞ

T

ð1aÞ

S

w

¼

X

C

i¼1

S

i

and S

i

¼

X

x2D

i

ðx m

i

Þðx m

i

Þ

T

;

i ¼ 1; ...; C

ð1bÞ

m ¼

1

n

X

n

k¼1

x

k

ð1cÞ

m

i

¼

1

n

i

X

n

i

k¼1

x

k

; i ¼ 1; ...; C ð1dÞ

where C is the class size, n and n

i

are the sizes of all

samples and samples in class i. For LDA, the between-class

scatter S

B

in (1a) is changed as S

B

LDA

in (1e) while the other

formulations are still maintained.

S

LDA

B

¼ðm

1

m

2

Þðm

1

m

2

Þ

T

ð1eÞ

where m

1

and m

2

are, respectively, the means of classes 1

and 2.

Now let us check what structure granularity MDA

adopts. In terms of the construction of its scatter matrices

in (1a) and (1b), it can be found that they use the class-

granularity structure, because the within-class scatter S

w

is

the sum of all matrices S

i

,(i = 1, …, C), and S

i

is con-

structed by all the samples of class i with the class mean as

the representative point of the class, and is traditionally

called as the within-class scatter for class i. While the

between-class scatter S

B

also has a similar construction and

Class 1

Class 2

i

x

Class granularity

Cluster granularity

Locality granularity

Fig. 2 Different structure granularities of structure granularity

spectrum

352 Pattern Anal Applic (2011) 14:349–367

123