
1.1 What is Concurrency?

Two events are said to be concurrent if they occur within the same time interval. Two or more tasks executing over the same time interval are said to execute concurrently. For our purposes, concurrent does not necessarily mean at the same exact instant. For example, two tasks may execute concurrently within the same second, with each task executing during different fractions of that second. The first task may execute for the first tenth of the second and pause, the second task may execute for the next tenth and pause, the first task may resume in the third tenth, and so on. The tasks alternate, but because a second is so short, both tasks appear to execute simultaneously. We can extend this notion to longer time intervals. Two programs that each perform some task within the same hour, and continuously make progress on it during that hour, may or may not be executing at the same exact instant; we say that the two programs execute concurrently for that hour. Tasks that exist at the same time and perform within the same time period are concurrent. Concurrent tasks can execute in a single-processor or a multiprocessor environment. In a single-processor environment, concurrent tasks exist at the same time and execute within the same time period by context switching. In a multiprocessor environment, if enough processors are free, concurrent tasks may execute at the same instant over the same time period. What counts as an acceptable time period for concurrency depends on the application.
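The interleaving described above can be observed with a small sketch. It assumes Python's standard `threading` module; the functions `run_task` and `run_concurrently` and the step and pause values are hypothetical, chosen only for illustration. Each task records its steps in a shared log and sleeps between steps, giving up the processor so the other task can run. In CPython the global interpreter lock typically lets only one thread execute Python bytecode at a time, so the two tasks make progress by alternating, much like the single-processor case.

```python
import threading
import time

def run_task(name, steps, log, lock, pause=0.01):
    """Hypothetical task: perform `steps` units of work, logging each one."""
    for step in range(steps):
        with lock:  # serialize access to the shared log
            log.append((name, step))
        time.sleep(pause)  # pause so the other task gets a turn

def run_concurrently(steps=5):
    """Run two tasks over the same time interval and return their shared log."""
    log = []
    lock = threading.Lock()
    tasks = [
        threading.Thread(target=run_task, args=("A", steps, log, lock)),
        threading.Thread(target=run_task, args=("B", steps, log, lock)),
    ]
    start = time.perf_counter()
    for t in tasks:
        t.start()
    for t in tasks:
        t.join()  # wait until both tasks have finished
    elapsed = time.perf_counter() - start
    return log, elapsed

if __name__ == "__main__":
    log, elapsed = run_concurrently()
    # Typically prints an interleaved order such as "A0 B0 A1 B1 ...";
    # the exact order is decided by the scheduler and may vary between runs.
    print(" ".join(f"{name}{step}" for name, step in log))
    print(f"both tasks finished in {elapsed:.2f} s")
```

Neither task runs to completion before the other starts: both exist at the same time and both advance during the same interval, which is what makes them concurrent in the sense used here.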

Concurrency techniques are used to allow a computer program to do more work over the same time period or time interval. Rather than designing the program to do one task at a time, the program is broken down so that some of its tasks can be executed concurrently. In some situations, doing more work over the same time period is not the goal; rather, the goal is to simplify the programming solution. Sometimes it makes more sense to think of the solution to a problem as a set of concurrently executed tasks. For instance, the solution to the problem of losing weight is best thought of as two concurrently executed tasks: diet and exercise. That is, the improved diet and the exercise regimen are supposed to occur over the same time interval (not necessarily at the same instant). It is typically not very beneficial to do one during one time period and the other during a totally different time period. The concurrency of both processes is the natural form of the solution. Sometimes concurrency is used to make software faster, or to finish its work sooner. Sometimes concurrency is used to make software do more work over the same interval, where speed is secondary to capacity. For instance, some web sites want customers to stay logged on as long as possible. The concern is not how fast the site can get customers on and off; it is how many customers the site can support concurrently. The goal of the software design is therefore to handle as many connections as possible for as long a time period as possible. Finally, concurrency can be used to make the software simpler. Often, one long, complicated sequence of operations can be implemented more easily as a series of small, concurrently executing operations. Whether concurrency is used to make the software faster, handle larger loads, or simplify the programming solution, the main objective is to use concurrency to improve the software.
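The capacity argument above can be illustrated with a minimal sketch, assuming Python's standard `concurrent.futures.ThreadPoolExecutor`. The handler `handle_request`, its 0.05 s simulated latency, and the pool size of 8 are hypothetical stand-ins for a real server's per-connection work. Serving eight clients one at a time makes each client wait for all earlier ones; treating each request as a separate concurrent task lets their waiting periods overlap, so more clients are served within the same interval.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def handle_request(client_id, latency=0.05):
    """Hypothetical request handler: waits (simulated I/O), then replies."""
    time.sleep(latency)  # stands in for a network or disk wait
    return f"reply to client {client_id}"

def serve_sequentially(clients):
    # One task at a time: each client waits for every earlier client.
    return [handle_request(c) for c in clients]

def serve_concurrently(clients, workers=8):
    # Each request is a separate task; the pool overlaps their waiting time.
    # Executor.map returns results in the same order as the input clients.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(handle_request, clients))

def timed(fn, *args):
    """Call fn(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start

if __name__ == "__main__":
    clients = list(range(8))
    seq, t_seq = timed(serve_sequentially, clients)
    conc, t_conc = timed(serve_concurrently, clients)
    assert seq == conc  # same work, same answers
    print(f"sequential: {t_seq:.2f} s, concurrent: {t_conc:.2f} s")
```

Each handler is also simpler than a single loop that tries to juggle every client by hand: it deals with one client and leaves the overlapping to the pool. Because the handlers here mostly wait rather than compute, the gain comes from overlapping those waits, not from running computations in parallel.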

1.1.1 The Two Basic Approaches to Achieving Concurrency

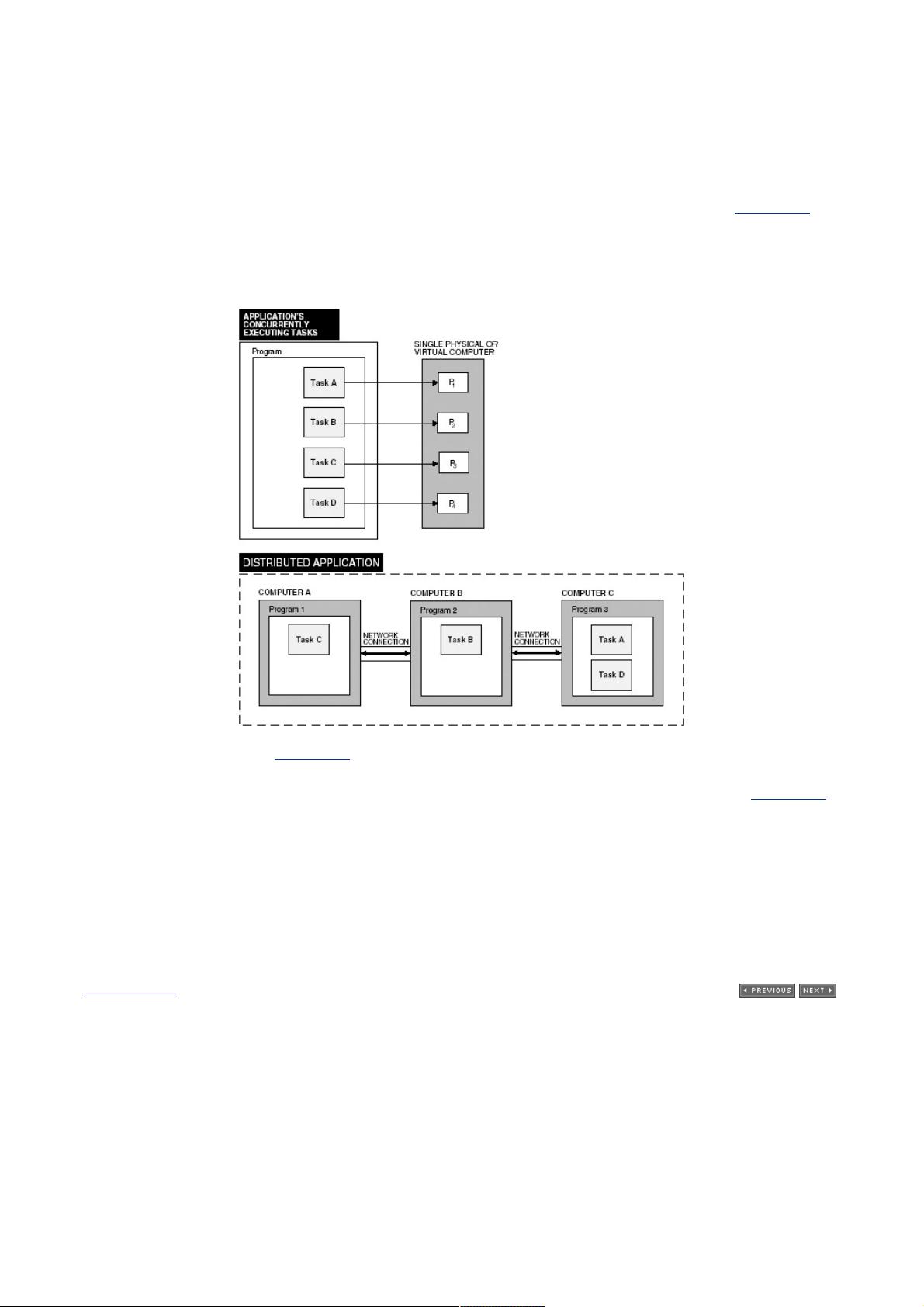

Parallel programming and distributed programming are two basic approaches for achieving concurrency with a piece of software. They are two different programming paradigms that sometimes intersect. Parallel programming techniques assign the work a program has to do to two or more processors within a single physical or a single virtual computer. Distributed programming techniques assign the work a program has to do to two or more processes, where the processes may or may not exist on the same computer. That is, the parts of a distributed program often run on different computers connected by a network, or at least in different processes. A program that contains parallelism executes on the same physical or virtual computer. The parallelism within a program may be divided into processes or threads. We discuss processes in Chapter 3 and threads in Chapter 4. For our purposes, distributed programs can only be divided into processes; multithreading is restricted to parallelism. Technically, parallel programs are sometimes distributed, as is the case with PVM (Parallel Virtual Machine) programming. Distributed programming is sometimes used to implement parallelism, as is the case with MPI (Message Passing Interface) programming. However, not all distributed programs involve parallelism. The parts of a distributed program may execute at different instants and over different time periods. For instance, a