ZHANG et al.: FAST AND EPSILON-OPTIMAL DISCRETIZED PURSUIT LA 2091

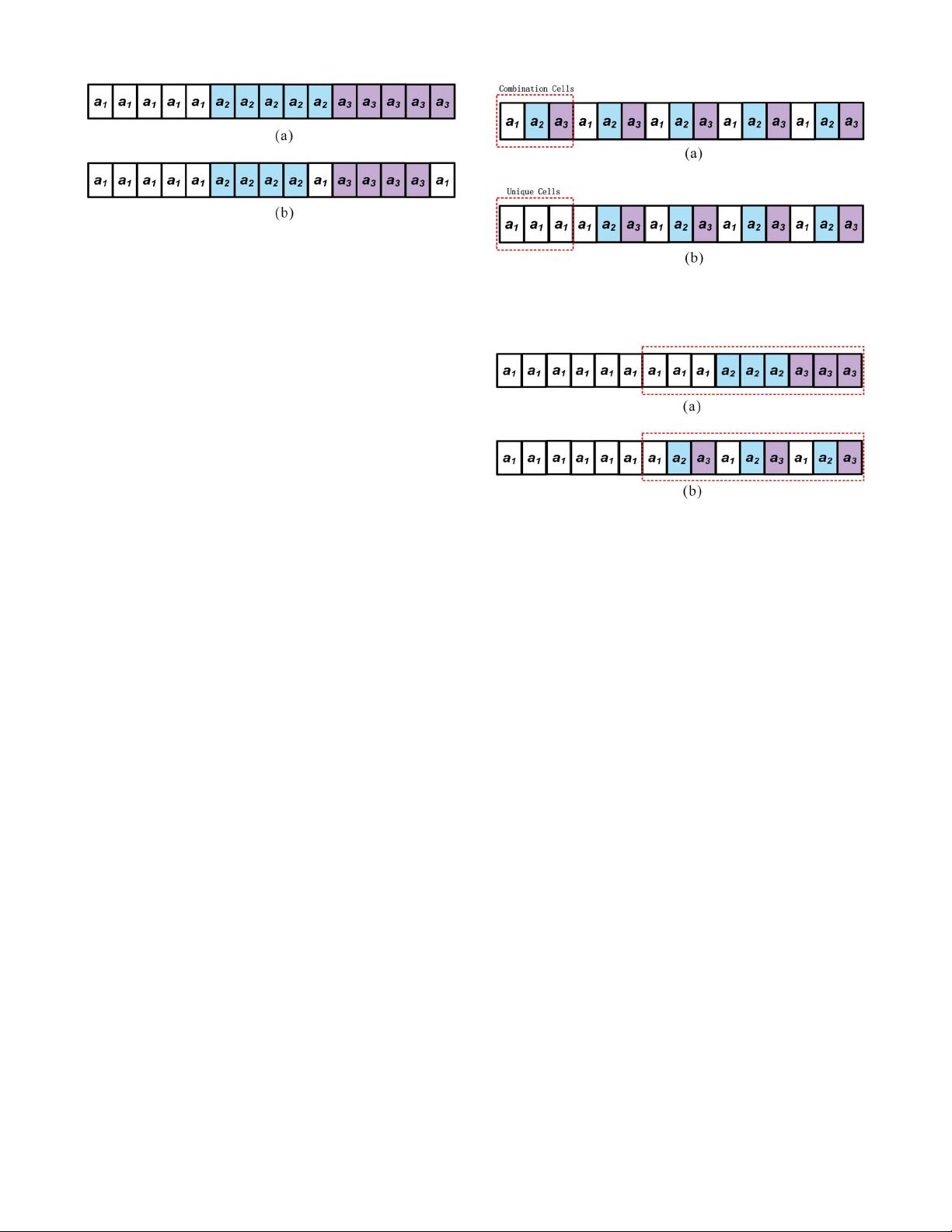

Fig. 2. DP

RI

using r = 3andn = 5, a

1

is the estimated optimal action and

positive response is received. DP

RI

(a) initialization and (b) state probability

update when the chosen action is rewarded.

the state probability of the action with the highest estimate

has to be increased by an integral multiple of the smallest

step size . When the chosen action is penalized, there is

no updating in the state probability vector, and it is thus of

the reward-inaction paradigm. Assume that m is the index of

the estimated optimal action. The update scheme of DP

RI

is

described briefly as follows [1], [36].

Phase 1: Select the next action.

Select an action a(t) = a

k

according to the probability distri-

bution P(t). Update H

k

, G

k

and

d

k

.

Phase 2: Find the optimal estimated action

m = argmax

i

{

d

i

}.

Phase 3: Update the state probability.

If β(t) = 1 Then

p

j

(t + 1) = max

j=m

{p

j

(t) − , 0}, ∀j ∈ N

r

(2)

p

m

(t + 1) = 1 −

j=m

p

j

(t + 1). (3)

Else

p

j

(t + 1) = p

j

(t), ∀j ∈ N

r

. (4)

Endif

III. F

AST DISCRETIZED PURSUIT LA

In this section, FDP

RI

is proposed by exploiting the most

significant pattern of the discretized update scheme in DP

RI

.

This pattern always increases the state probability of the esti-

mated optimal action but decreases others by discretized step

= 1/(rn) where r is the number of allowable actions, and

n is a resolution parameter.

Once an update occurs, (r − 1) ∗ are rewarded to the

estimated optimal action and other actions are all penalized

by .Fig.2 illustrates this update scheme. Each is consid-

ered as a probability cell (PC) shown in Fig. 2(a). Assume a

1

is

the estimated optimal action and positive response is received,

(r − 1) PCs are rewarded to a

1

and one PC is penalized for

other actions as shown in Fig. 2(b), respectively.

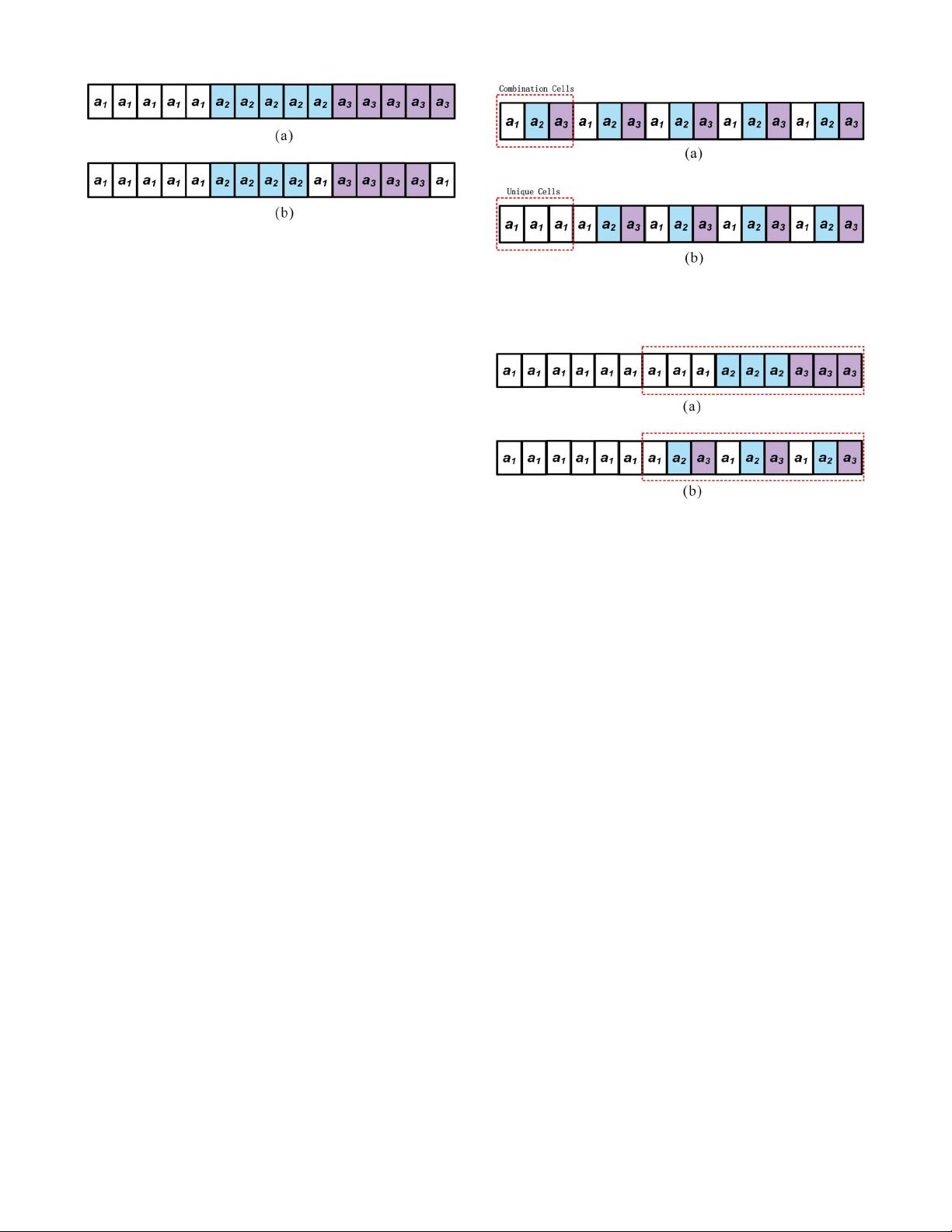

A. Fast Probability Update

In the proposed FDP

RI

, r PCs from each action are initially

composed as a combination (C) unit in Fig. 3(a). n C units

are initialized and equivalent to the one for DP

RI

in Fig. 2(a).

When an update occurs, a C unit is transformed to a unique (U)

unit which is composed r PCs of a

1

as shown in Fig. 3(b).

This updating result is equivalent to the one for DP

RI

as shown

in Fig. 2(b).

Fig. 3. FDP

RI

using r = 3andn = 5, a

1

is the estimated optimal action

and it received positive response. FDP

RI

(a) initialization and (b) rewarded

when C ≥ 1.

Fig. 4. U-C transformation: r = 3andn = 5. (a) All U units. (b) U-C

transformation.

Thus, the computational complexity to update the prob-

ability is reduced from O(r) to O(1) in FDP

RI

because a

type transformation from a C unit to a U unit can finish the

probability updating.

B. U-C Transformation

In FDP

RI

, n C units are initialized. When the probability

update continues, the C units can be used up and transformed

into the U units. Fig. 4 shows that r U units can be trans-

formed into r C units and they are equivalent. In this way, the

transformed C units can be available for the subsequent fast

probability update.

C. Reorganization

An action a

i

is active if its state probability p

i

(t) is nonzero.

When the state probability of an active action turns to be

zero as shown in Fig. 5(a), reorganization is triggered as

shown in Fig. 5(b). Reorganization lets ˜r =˜r − 1 and set

this action as nonactive state where ˜r is number of the active

actions and initially ˜r = r. Hence, the step size turns to be

= 1/(˜rn). The step size of the update scheme in FDP

RI

for p

m

(t) “increases” because more and more state probabili-

ties turn to be zero such that the number of the active actions

decreases with the learning process. It means that the step size

is “accelerated” along with the learning process. It is reason-

able because the number of times for which active actions

are selected increases and the reward probability estimation

of active actions becomes more and more accurate along with

the learning process.

Then the U-C transformation is called to transform U units

into C units for the subsequent fast probability updating. As