On-the-Fly Learning in a Perpetual Learning Machine

Andrew J.R. Simpson

Centre for Vision, Speech and Signal Processing, University of Surrey, UK

Andrew.Simpson@Surrey.ac.uk

Abstract—Despite the promise of brain-inspired machine learning, deep neural networks (DNN) have frustratingly failed to bridge the deceptively large gap between learning and memory. Here, we introduce a Perpetual Learning Machine: a new type of DNN that is capable of brain-like dynamic ‘on the fly’ learning because it exists in a self-supervised state of Perpetual Stochastic Gradient Descent. Thus, we provide the means to unify learning and memory within a machine learning framework. We also explore the elegant duality of abstraction and synthesis: the Yin and Yang of deep learning.

Index terms—Perpetual Learning Machine, Perpetual Stochastic Gradient Descent, self-supervised learning, parallel dither, Yin and Yang.

I. INTRODUCTION

It is an embarrassing fact that, while deep neural networks (DNN) are frequently compared to the brain, and their performance has even been found to be similar in specific static tasks, a critical difference remains: DNN do not exhibit the fluid and dynamic learning of the brain but are static once trained. For example, to add a new class of data to a trained DNN, it is necessary to add the respective new training data to the pre-existing training data and re-train (probably from scratch) to account for the new class. By contrast, learning in the brain is essentially additive: if we want to learn a new thing, we do. Thus, whilst there is little doubt that the learning of the brain and machine learning are essentially the same, the learning of the brain involves the emergent phenomenon of memory, which has failed to emerge from machine learning. Indeed, recent machine learning approaches to ‘memory’ have involved explicit add-on storage facilities [e.g., 1] which explicitly discriminate between learning (training, i.e., of weights) and memory (storage, i.e., of data). Thus, the problem has been brushed under the carpet.

In this article, we describe a novel form of supervised learning model, which we call a Perpetual Learning Machine, that gives rise to the basic properties of memory. Our model involves two DNNs, one for storage and the other for recall. The storage DNN learns the classes of some training images. The recall DNN learns to synthesise the same images from the same classes. Together, the two networks hold the training set in encoded form. We then place this pair of DNNs in a self-supervised and homeostatic state of Perpetual Stochastic Gradient Descent (PSGD). During each step of PSGD, a random class is chosen and an image is synthesised for it by the recall DNN. This randomly synthesised image is then used, in combination with the random class, to train both DNNs via non-batch SGD. That is, PSGD is driven by training data that is synthesised from memory according to random classes. We next demonstrate that new classes may be learned on the fly by introducing them, via ‘new experience’ SGD steps, into the path of PSGD. Over time, new classes are assimilated without disruption of earlier learning, and hence we demonstrate a machine which both learns and remembers.
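The storage/recall loop described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the author's implementation: each DNN is a one-hidden-layer network (`MLP`, a hypothetical helper) with tanh hidden units; the storage DNN (image to class) uses a softmax cross-entropy loss; the recall DNN (class to image) uses a squared-error loss; and both output layers are sized in advance for every class that will ever be learned, with new classes numbered after the known ones. The names `train_pair`, `psgd_step` and `perpetual_learning` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)


class MLP:
    """One-hidden-layer net trained by single-example (non-batch) SGD.
    Layer sizes, tanh hidden units and the learning rate are assumptions."""

    def __init__(self, n_in, n_hidden, n_out, lr=0.01):
        self.W1 = rng.normal(0.0, 0.1, (n_hidden, n_in))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.1, (n_out, n_hidden))
        self.b2 = np.zeros(n_out)
        self.lr = lr

    def forward(self, x):
        self.h = np.tanh(self.W1 @ x + self.b1)
        return self.W2 @ self.h + self.b2

    def sgd_step(self, x, grad_fn):
        """Forward x, get dLoss/dOutput from grad_fn, backpropagate, update."""
        g_out = grad_fn(self.forward(x))
        g_h = (self.W2.T @ g_out) * (1.0 - self.h ** 2)  # computed before W2 changes
        self.W2 -= self.lr * np.outer(g_out, self.h)
        self.b2 -= self.lr * g_out
        self.W1 -= self.lr * np.outer(g_h, x)
        self.b1 -= self.lr * g_h


def one_hot(c, n):
    v = np.zeros(n)
    v[c] = 1.0
    return v


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def train_pair(storage, recall, image, cls, n_classes):
    """Train both DNNs on one (image, class) pair with one SGD step each."""
    t = one_hot(cls, n_classes)
    storage.sgd_step(image, lambda y: softmax(y) - t)  # image -> class (cross-entropy)
    recall.sgd_step(t, lambda y: y - image)            # class -> image (squared error)


def psgd_step(storage, recall, n_known, n_classes):
    """One PSGD step: random known class -> synthesised image -> train both."""
    cls = int(rng.integers(n_known))
    image = recall.forward(one_hot(cls, n_classes))
    # The recall target equals its own output here, so its gradient is zero;
    # this sketch adds no noise, so only the storage DNN is updated by this step.
    train_pair(storage, recall, image, cls, n_classes)


def perpetual_learning(storage, recall, n_known, new_items, n_classes,
                       psgd_steps_between=100):
    """Interleave 'new experience' SGD steps with ongoing PSGD.
    Assumes each new class id is numbered after the already-known ones."""
    for image, cls in new_items:
        train_pair(storage, recall, image, cls, n_classes)  # new experience
        n_known = max(n_known, cls + 1)
        for _ in range(psgd_steps_between):
            psgd_step(storage, recall, n_known, n_classes)
    return n_known
```

For the 75-class task described in Section II, one might build `storage = MLP(784, 256, 75)` and `recall = MLP(75, 256, 784)` (the hidden size of 256 is arbitrary), train them on the initial pairs with `train_pair`, and then call `perpetual_learning` with the held-back pairs. Because this sketch adds no noise to the synthesised images, the recall DNN only changes during the ‘new experience’ steps.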

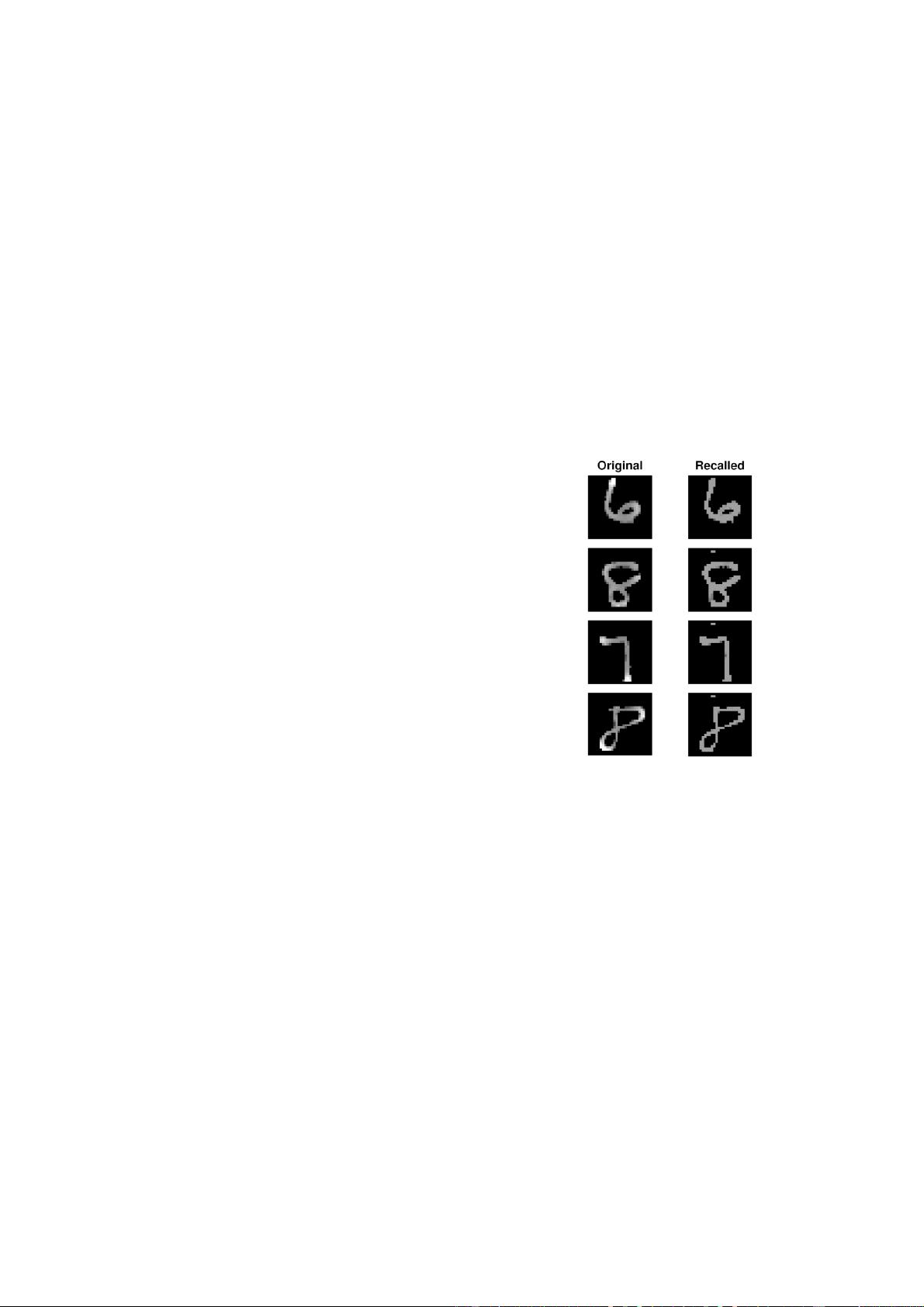

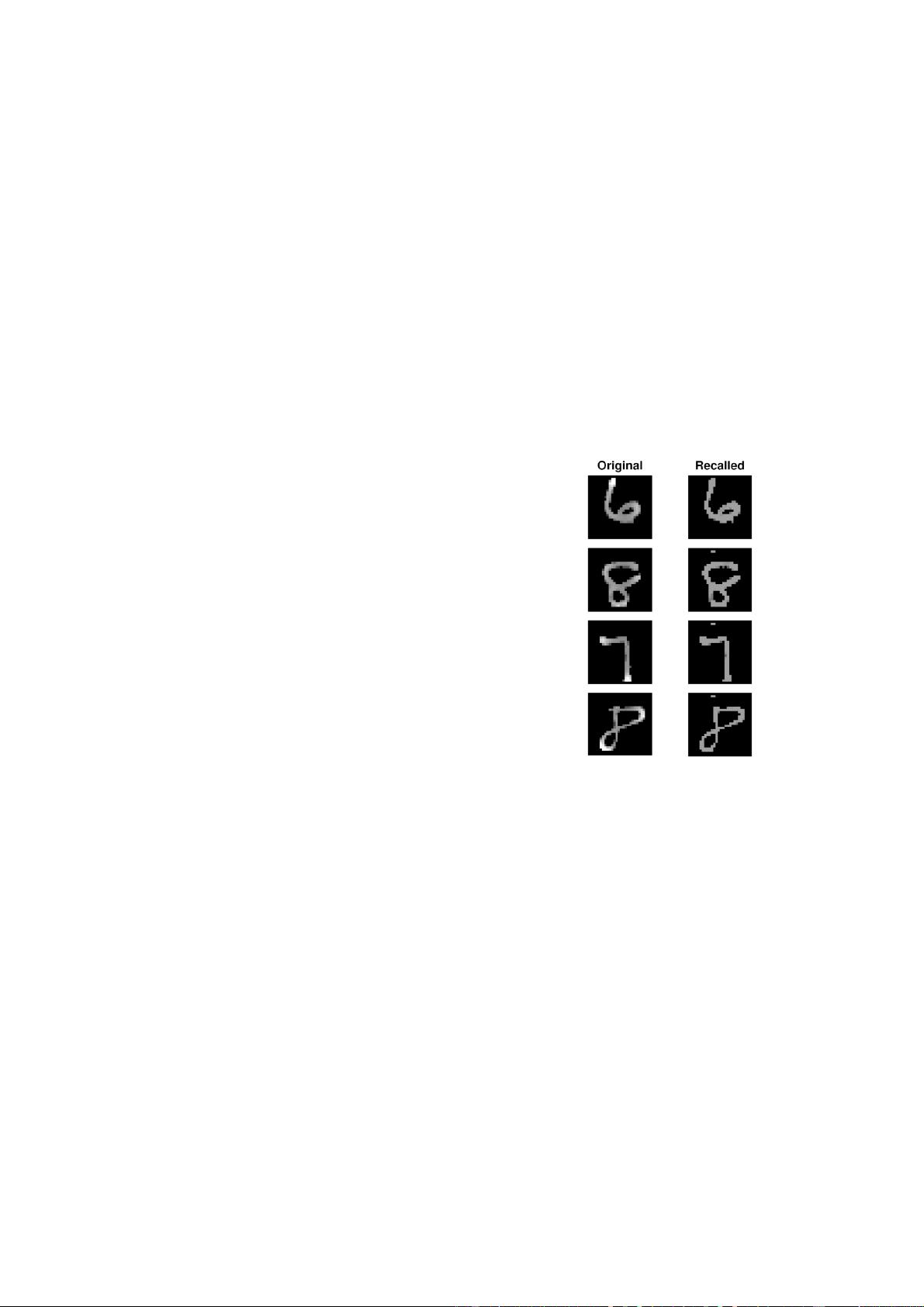

Fig. 1. Recall of training images. On the left are plotted MNIST digits and on the right are plotted the same digits synthesised using the recall DNN.

II. METHOD

We chose the well-known MNIST handwritten digit dataset [2]. First, we unpacked the 28×28-pixel images into vectors of length 784. Example digits are given in Fig. 1. Pixel intensities were normalized to zero mean.
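The preprocessing step can be sketched as follows. The function name `preprocess` is hypothetical, random placeholder arrays stand in for the real MNIST images (no particular loader is assumed), and subtracting each image's own mean is an assumption, since the text does not say whether the mean is taken per image or over the whole dataset.

```python
import numpy as np


def preprocess(images):
    """Flatten (N, 28, 28) images to (N, 784) and shift each to zero mean.

    Per-image mean subtraction is an assumption; the text only says
    intensities were normalized to zero mean.
    """
    x = images.reshape(len(images), -1).astype(np.float64)  # 28 * 28 = 784
    return x - x.mean(axis=1, keepdims=True)


# Placeholder data: random 8-bit "digits" in place of the real MNIST images.
fake = np.random.default_rng(0).integers(0, 256, size=(5, 28, 28))
x = preprocess(fake)
print(x.shape)  # (5, 784)
```

Using the dataset-wide mean instead would only change `axis=1, keepdims=True` to a single scalar mean over all pixels of all images.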

Perpetual Memory. To test the idea of perpetual memory through perpetual learning, we required our model to learn to identify a collection of images. We took the first 75 of the MNIST digits and assigned each to an arbitrary class (i.e., arbitrary associative learning). This gave 75 unique classes, each associated with a single, specific digit. The task of the model was to recognise the images and assign to them the correct (arbitrary) classes. We split the 75 digits into 50 ‘learn during training’ examples and 25 ‘learn later on the fly’ test examples. The first 50 training examples were learned with typical SGD and then discarded; hence, they were not available for later use during assimilation of additional classes. The remaining 25 examples were held back for insertion during PSGD.
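The arbitrary associative task and its 50/25 split might be set up as below. This is a sketch under assumptions: `make_associative_task` is a hypothetical helper, each item's index is used as its arbitrary class (so the held-back items receive classes 50 to 74, ignoring the digits' true MNIST labels), and random vectors stand in for the preprocessed MNIST digits.

```python
import numpy as np


def make_associative_task(images, n_items=75, n_initial=50):
    """Pair each of the first n_items images with its own arbitrary class.

    Class i is simply the item's index, which ignores the digit's true
    MNIST label (an illustrative choice). Returns (initial, held_back)
    lists of (image, class) pairs.
    """
    pairs = [(images[i], i) for i in range(n_items)]
    initial = pairs[:n_initial]      # learned with ordinary SGD, then discarded
    held_back = pairs[n_initial:]    # inserted later, during PSGD
    return initial, held_back


# Placeholder for the preprocessed MNIST vectors (random stand-in data).
images = np.random.default_rng(0).normal(size=(100, 784))
initial, held_back = make_associative_task(images)
print(len(initial), len(held_back))  # 50 25
print(held_back[0][1])               # 50
```

Keeping the held-back classes numbered after the initial ones means the classifier's output layer can be allocated once for all 75 classes, with the last 25 outputs simply unused until their items are introduced.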