Unsupervised Domain Adaptation by Backpropagation

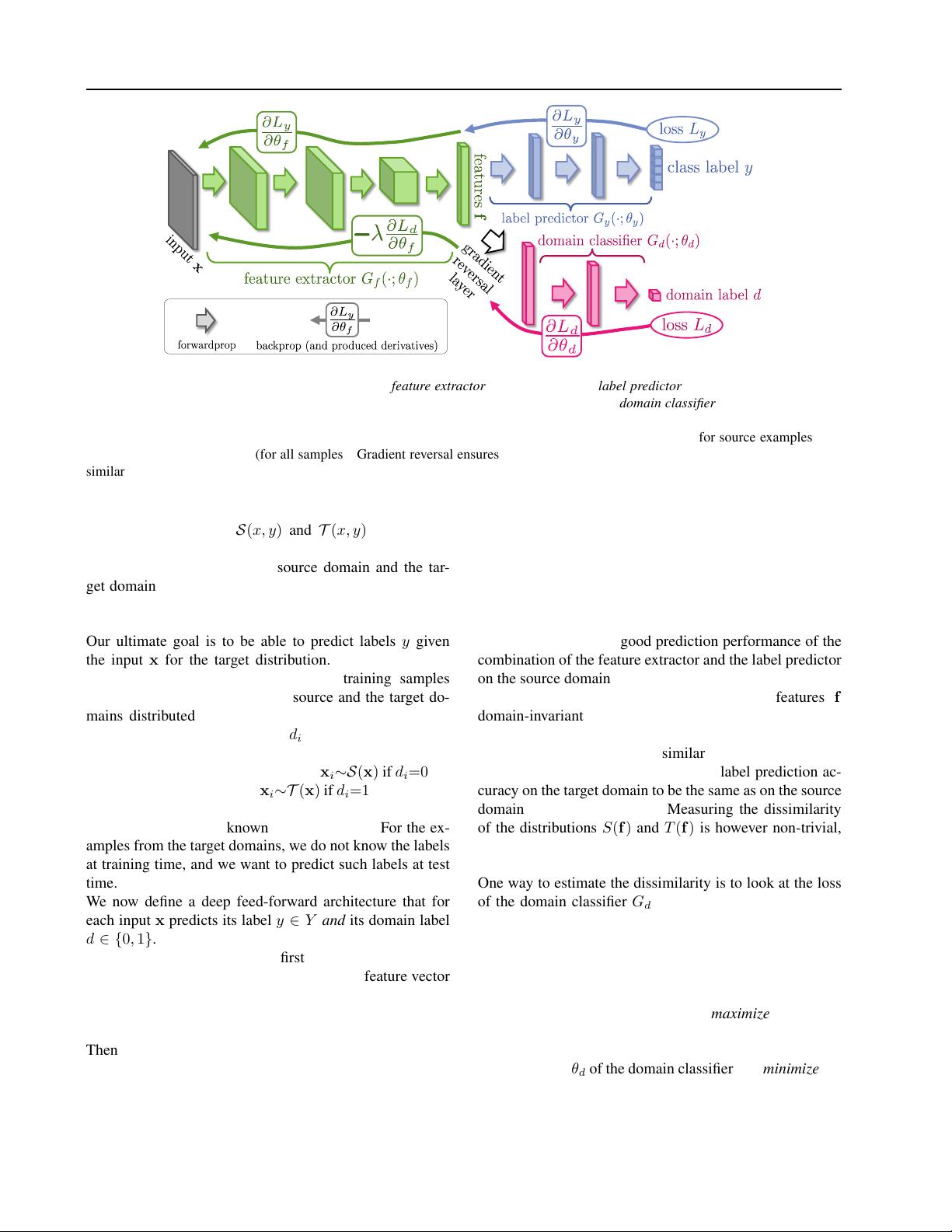

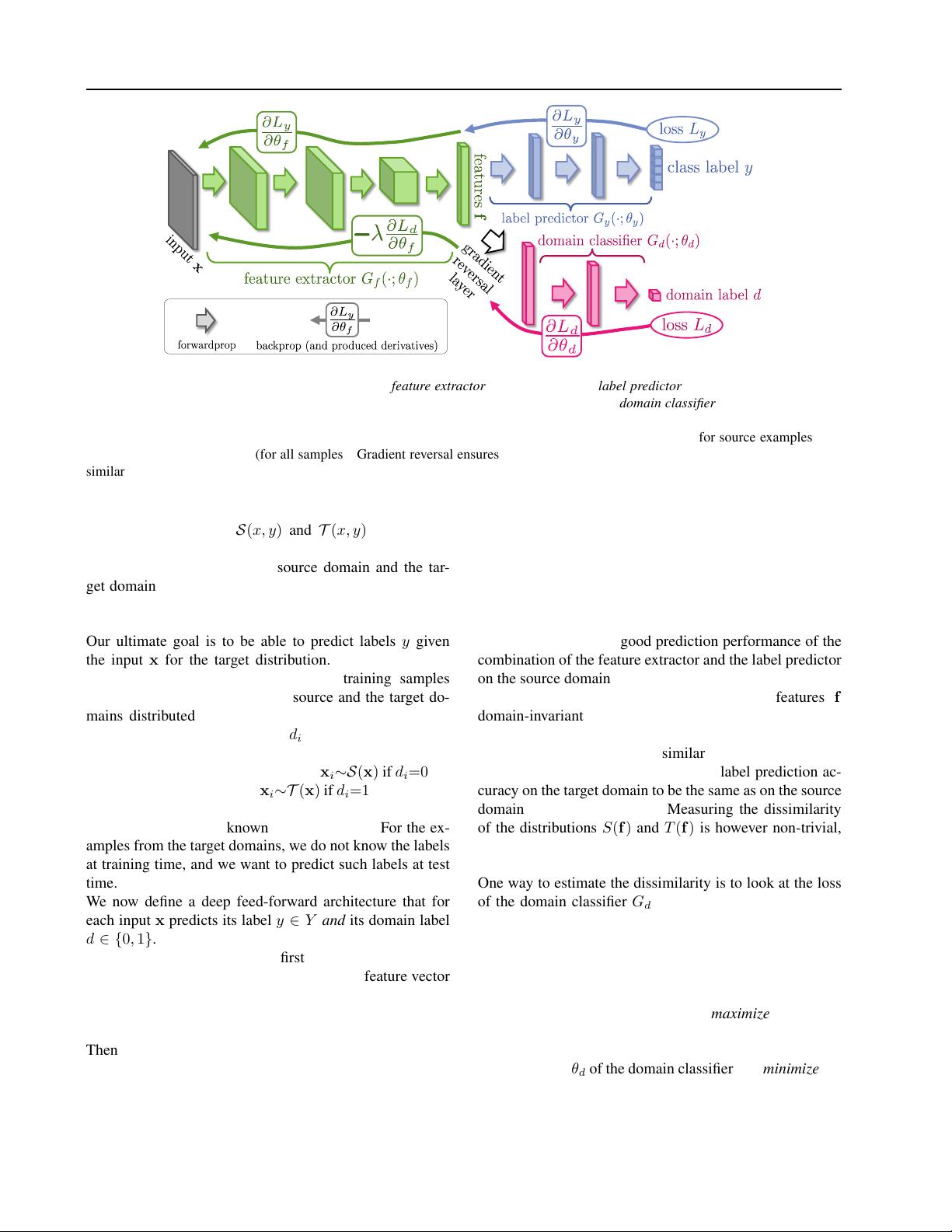

Figure 1. The proposed architecture includes a deep feature extractor (green) and a deep label predictor (blue), which together form a standard feed-forward architecture. Unsupervised domain adaptation is achieved by adding a domain classifier (red) connected to the feature extractor via a gradient reversal layer that multiplies the gradient by a certain negative constant during backpropagation-based training. Otherwise, training proceeds in the standard way and minimizes the label prediction loss (for source examples) and the domain classification loss (for all samples). Gradient reversal ensures that the feature distributions over the two domains are made similar (as indistinguishable as possible for the domain classifier), thus resulting in domain-invariant features.

forward models can handle. We further assume that there exist two distributions $S(x, y)$ and $T(x, y)$ on $X \otimes Y$, which will be referred to as the source distribution and the target distribution (or the source domain and the target domain). Both distributions are assumed complex and unknown, and furthermore similar but different (in other words, $S$ is “shifted” from $T$ by some domain shift).

Our ultimate goal is to be able to predict labels $y$ given the input $x$ for the target distribution. At training time, we have access to a large set of training samples $\{x_1, x_2, \ldots, x_N\}$ from both the source and the target domains, distributed according to the marginal distributions $S(x)$ and $T(x)$. We denote with $d_i$ the binary variable (domain label) for the $i$-th example, which indicates whether $x_i$ comes from the source distribution ($x_i \sim S(x)$ if $d_i = 0$) or from the target distribution ($x_i \sim T(x)$ if $d_i = 1$). For the examples from the source distribution ($d_i = 0$), the corresponding labels $y_i \in Y$ are known at training time. For the examples from the target domain, we do not know the labels at training time, and we want to predict such labels at test time.
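A minimal sketch of how such a training set might be represented, assuming plain Python containers; the `Sample` class and the helpers `make_training_set` and `labeled_part` are hypothetical names chosen for illustration. Each sample carries its domain label $d_i$, and a class label $y_i$ only when $d_i = 0$.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Sample:
    x: Sequence[float]       # input x_i
    d: int                   # domain label d_i: 0 = source, 1 = target
    y: Optional[int] = None  # label y_i, available only when d_i == 0

    def __post_init__(self) -> None:
        if self.d not in (0, 1):
            raise ValueError("domain label must be 0 or 1")
        # Labels are known for source samples and unknown for target samples.
        if (self.d == 0) != (self.y is not None):
            raise ValueError("labels are known for source samples only")


def make_training_set(source, target):
    """source: (x, y) pairs drawn from S(x, y); target: inputs x drawn from T(x)."""
    return [Sample(x, 0, y) for x, y in source] + [Sample(x, 1) for x in target]


def labeled_part(samples):
    """The annotated (source) part of the training set, i.e. samples with d_i == 0."""
    return [s for s in samples if s.d == 0]
```

Keeping the domain label on every sample, rather than storing two separate lists, lets later losses mask by $d_i$ directly.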

We now define a deep feed-forward architecture that for each input $x$ predicts its label $y \in Y$ and its domain label $d \in \{0, 1\}$. We decompose this mapping into three parts. We assume that the input $x$ is first mapped by a mapping $G_f$ (a feature extractor) to a $D$-dimensional feature vector $f \in \mathbb{R}^D$. The feature mapping may also include several feed-forward layers, and we denote the vector of parameters of all layers in this mapping as $\theta_f$, i.e. $f = G_f(x; \theta_f)$. Then, the feature vector $f$ is mapped by a mapping $G_y$ (label predictor) to the label $y$, and we denote the parameters of this mapping with $\theta_y$. Finally, the same feature vector $f$ is mapped to the domain label $d$ by a mapping $G_d$ (domain classifier) with the parameters $\theta_d$ (Figure 1).
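To make the three-part decomposition concrete, here is a minimal NumPy sketch in which each of $G_f$, $G_y$ and $G_d$ is a single layer. The layer sizes, the ReLU, softmax and sigmoid choices, and the helper `init_params` are assumptions for illustration only; the text allows $G_f$ (and the other parts) to contain several layers.

```python
import numpy as np


def init_params(in_dim, feat_dim, n_classes, seed=0):
    """Hypothetical single-layer parameters theta_f, theta_y, theta_d as (weights, biases)."""
    rng = np.random.default_rng(seed)
    return {
        "theta_f": (rng.normal(0.0, 0.1, (in_dim, feat_dim)), np.zeros(feat_dim)),
        "theta_y": (rng.normal(0.0, 0.1, (feat_dim, n_classes)), np.zeros(n_classes)),
        "theta_d": (rng.normal(0.0, 0.1, (feat_dim, 1)), np.zeros(1)),
    }


def G_f(x, theta_f):
    """Feature extractor: inputs (N, in_dim) -> features f in R^D, shape (N, D)."""
    W, b = theta_f
    return np.maximum(x @ W + b, 0.0)  # one ReLU layer


def G_y(f, theta_y):
    """Label predictor: features (N, D) -> class probabilities (N, n_classes)."""
    W, b = theta_y
    z = f @ W + b
    z = z - z.max(axis=1, keepdims=True)  # shift logits for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def G_d(f, theta_d):
    """Domain classifier: features (N, D) -> P(d = 1 | f), shape (N,)."""
    W, b = theta_d
    return 1.0 / (1.0 + np.exp(-(f @ W + b)[:, 0]))
```

Computing `f = G_f(x, params["theta_f"])` once and passing the same `f` to both `G_y` and `G_d` mirrors the shared feature vector in Figure 1.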

During the learning stage, we aim to minimize the label prediction loss on the annotated part (i.e. the source part) of the training set, and the parameters of both the feature extractor and the label predictor are thus optimized in order to minimize the empirical loss for the source domain samples. This ensures the discriminativeness of the features $f$ and the overall good prediction performance of the combination of the feature extractor and the label predictor on the source domain.
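The source-only empirical loss can be sketched as follows, assuming cross-entropy as the label prediction loss (the text does not fix a particular loss at this point). The function name `source_label_loss` is hypothetical; the domain labels $d_i$ act as a mask so that unlabeled target samples contribute nothing.

```python
import numpy as np


def source_label_loss(probs, labels, domains):
    """Mean cross-entropy of predicted class probabilities over source samples only.

    probs:   (N, C) outputs of the label predictor G_y(G_f(x))
    labels:  (N,) integer labels; entries for target samples are ignored
    domains: (N,) domain labels d_i (0 = source, 1 = target)
    """
    mask = domains == 0                    # only annotated (source) samples count
    p_true = probs[mask, labels[mask]]     # probability assigned to the true class
    return float(-np.mean(np.log(np.clip(p_true, 1e-12, 1.0))))
```

Any placeholder (here, for example, `-1`) can be stored as the label of a target sample, since the mask removes it before indexing.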

At the same time, we want to make the features $f$ domain-invariant. That is, we want to make the distributions $S(f) = \{G_f(x; \theta_f) \mid x \sim S(x)\}$ and $T(f) = \{G_f(x; \theta_f) \mid x \sim T(x)\}$ similar. Under the covariate shift assumption, this would make the label prediction accuracy on the target domain the same as on the source domain (Shimodaira, 2000). Measuring the dissimilarity of the distributions $S(f)$ and $T(f)$ is, however, non-trivial, given that $f$ is high-dimensional and that the distributions themselves are constantly changing as learning progresses. One way to estimate the dissimilarity is to look at the loss of the domain classifier $G_d$, provided that the parameters $\theta_d$ of the domain classifier have been trained to discriminate between the two feature distributions in an optimal way.
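As a rough illustration of using a trained domain classifier's loss as a dissimilarity estimate, the sketch below fits a logistic-regression classifier (a linear stand-in for $G_d$; an assumption) on fixed samples from $S(f)$ and $T(f)$ and reports its mean log-loss. With equal numbers of samples from each domain, identical distributions keep the best achievable loss near $\log 2 \approx 0.693$, and the more separable the two distributions are, the lower the loss gets. The function name and hyperparameters are hypothetical.

```python
import numpy as np


def domain_classifier_loss(f_src, f_tgt, steps=2000, lr=0.5):
    """Fit a logistic-regression domain classifier by gradient descent and
    return its final mean log-loss on the given features.

    f_src: (N_s, D) features from S(f), domain label 0
    f_tgt: (N_t, D) features from T(f), domain label 1
    """
    f = np.vstack([f_src, f_tgt])
    d = np.concatenate([np.zeros(len(f_src)), np.ones(len(f_tgt))])
    w = np.zeros(f.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(f @ w + b)))  # P(d = 1 | f)
        grad = p - d                            # derivative of log-loss w.r.t. the logit
        w -= lr * f.T @ grad / len(d)
        b -= lr * grad.mean()
    p = np.clip(1.0 / (1.0 + np.exp(-(f @ w + b))), 1e-12, 1 - 1e-12)
    return float(-np.mean(d * np.log(p) + (1 - d) * np.log(1 - p)))
```

For example, with 500 standard-normal samples per domain the loss stays close to 0.69, while shifting the target samples by 3 in every coordinate drives it well below that. A linear classifier only detects linearly separable differences, so this is a lower bound on what an "optimal" $G_d$ could detect.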

This observation leads to our idea. At training time, in order to obtain domain-invariant features, we seek the parameters $\theta_f$ of the feature mapping that maximize the loss of the domain classifier (by making the two feature distributions as similar as possible), while simultaneously seeking the parameters $\theta_d$ of the domain classifier that minimize the loss of the domain classifier. In addition, we seek to minimize the loss of the label predictor.
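One way to make this concrete is to combine the losses into a single functional $E(\theta_f, \theta_y, \theta_d) = L_y(\theta_f, \theta_y) - \lambda L_d(\theta_f, \theta_d)$ with an assumed trade-off weight $\lambda > 0$, and to look for a saddle point: $\theta_f$ and $\theta_y$ minimize $E$, while $\theta_d$ maximizes it (equivalently, minimizes $L_d$). The sketch below takes one plain gradient step on a toy model with a linear feature extractor and logistic label and domain heads. The model, the names (`dann_step`, `W_f`, `w_y`, `w_d`, and so on) and the hyperparameters are hypothetical. The gradient reversal layer of Figure 1 corresponds to the single line that multiplies $\partial L_d / \partial f$ by $-\lambda$ before it reaches $\theta_f$; everything else is ordinary backpropagation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce(p, t):
    """Mean binary cross-entropy."""
    p = np.clip(p, 1e-12, 1 - 1e-12)
    return float(-np.mean(t * np.log(p) + (1 - t) * np.log(1 - p)))


def dann_step(params, x, y, d, lam=0.1, lr=0.1):
    """One gradient step on a toy linear model (all shapes and names hypothetical).

    params: dict with W_f (in_dim, D), w_y (D,), b_y, w_d (D,), b_d
    x: (N, in_dim) inputs; y: (N,) binary labels (ignored where d == 1);
    d: (N,) domain labels, 0 = source, 1 = target.
    Returns updated params and the pre-update losses (L_y, L_d).
    """
    W_f, w_y, b_y, w_d, b_d = (params[k] for k in ("W_f", "w_y", "b_y", "w_d", "b_d"))
    f = x @ W_f                                  # features f = G_f(x; theta_f)
    src = d == 0

    # Label loss L_y on source samples only.
    p_y = sigmoid(f[src] @ w_y + b_y)
    g_y = (p_y - y[src]) / src.sum()             # dL_y / d(label logit)
    grad_w_y, grad_b_y = f[src].T @ g_y, g_y.sum()
    grad_f = np.zeros_like(f)
    grad_f[src] = np.outer(g_y, w_y)             # dL_y / df

    # Domain loss L_d on all samples.
    p_d = sigmoid(f @ w_d + b_d)
    g_d = (p_d - d) / len(d)                     # dL_d / d(domain logit)
    grad_w_d, grad_b_d = f.T @ g_d, g_d.sum()
    # Gradient reversal: dL_d/df reaches the feature extractor multiplied by -lam.
    grad_f += -lam * np.outer(g_d, w_d)

    new = {
        "W_f": W_f - lr * x.T @ grad_f,          # descend L_y - lam * L_d
        "w_y": w_y - lr * grad_w_y,
        "b_y": b_y - lr * grad_b_y,
        "w_d": w_d - lr * grad_w_d,              # descend L_d
        "b_d": b_d - lr * grad_b_d,
    }
    return new, bce(p_y, y[src]), bce(p_d, d)
```

Repeating `dann_step` over mini-batches is intended to push the domain head toward telling the domains apart while the reversed gradient pushes `W_f` toward features the head cannot separate; the choice of $\lambda$ controls how strongly domain confusion is traded against label accuracy.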