SoCC ’20, October 19–21, 2020, Virtual Event, USA Ali Tariq, Austin Pahl, Sharat Nimmagadda, Eric Rozner, and Siddharth Lanka

queuing policies. Although results are omitted due to space, we find that (i) scheduling across frameworks follows a simple FIFO queuing model and (ii) scheduling is performed on a per-function basis (rather than under other policies, such as per-chain).
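The two findings can be illustrated with a minimal sketch that uses a hypothetical `PerFunctionFIFO` class. Each function has its own FIFO queue, and invocations are dispatched in arrival order within that queue. The queue has no notion of the chain an invocation belongs to. This is an illustrative model of the observed behavior, not any platform's actual scheduler.

```python
from collections import defaultdict, deque


class PerFunctionFIFO:
    """Hypothetical model: one independent FIFO queue per function name.

    Invocations of the same function are served strictly in arrival order.
    Queues of different functions never interact, so an invocation's position
    does not depend on which chain it belongs to or how far that chain got.
    """

    def __init__(self):
        self.queues = defaultdict(deque)

    def submit(self, function_name, request_id):
        self.queues[function_name].append(request_id)

    def next_for(self, function_name):
        # FIFO: the oldest waiting invocation of this function runs first.
        queue = self.queues[function_name]
        return queue.popleft() if queue else None


sched = PerFunctionFIFO()
sched.submit("f1", "chainA-f1")
sched.submit("f2", "chainB-f2")
sched.submit("f1", "chainB-f1")
print(sched.next_for("f1"))  # chainA-f1
print(sched.next_for("f1"))  # chainB-f1
print(sched.next_for("f1"))  # None
```

A per-chain policy would instead key the queue by chain and could, for example, favor chains that are already partially complete. The per-function model above cannot express that preference.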

2.2.1 Limitations. This section shows limitations in existing serverless offerings and how these impact QoS for incoming requests and overall performance. Specifically, it is shown that inconsistent and incorrect concurrency limits are prevalent, mid-chain function drops occur, workloads such as bursts are not easily supported, HTTP functions are prioritized without documentation, inefficient resource allocation is common, and concurrency collapses under certain conditions.

0

400

800

1200

5

10

15

20

25

30

35

Count

Time (sec)

Concurrency

Completed

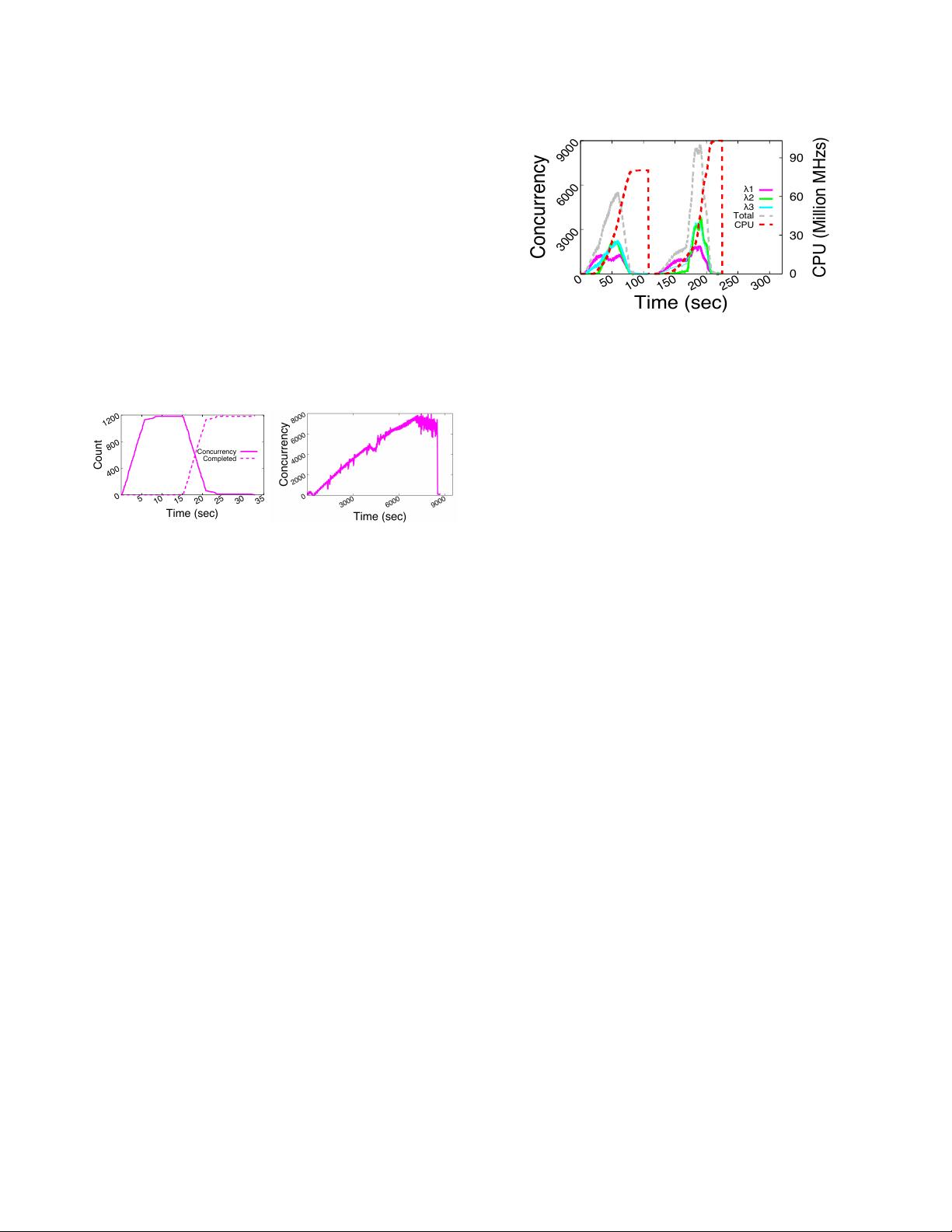

(a) IBM Cloud Functions (b) Azure Functions

Figure 2: Incorrect concurrency limits

Inconsistent and incorrect concurrency limits

We find numerous issues with concurrency limits on serverless platforms. IBM suffers from a simple issue: the default concurrency limit is documented to be 1,000, but up to 1,200 functions run in parallel. Figure 2a shows a burst of 1,200 Single functions. The x-axis is time and the y-axis is the number of concurrently running functions. The dotted line tracks completions, and the solid line shows up to 1,200 functions running simultaneously.
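Concurrency curves like those in Figure 2 can be derived from per-invocation start and end timestamps. The sketch below is a minimal illustration: the function names and the `(start_sec, end_sec)` input format are assumptions, not the paper's tooling. It computes how many functions are running after each event, along with the peak. The peak can then be compared against a documented limit such as IBM's default of 1,000.

```python
def concurrency_timeline(intervals):
    """Return (time, running) after each start/end event, in time order.

    intervals: one (start_sec, end_sec) pair per invocation (assumed format,
    e.g. timestamps logged by each function).
    """
    events = []
    for start, end in intervals:
        events.append((start, 1))    # invocation starts running
        events.append((end, -1))     # invocation completes
    # Tuples sort by time, then delta; -1 sorts before +1, so a completion and
    # a start at the same instant are not counted as overlapping.
    events.sort()
    running, timeline = 0, []
    for t, delta in events:
        running += delta
        timeline.append((t, running))
    return timeline


def peak_concurrency(intervals):
    """Maximum number of invocations running at the same time."""
    return max((running for _, running in concurrency_timeline(intervals)),
               default=0)


print(peak_concurrency([(0, 10), (1, 5), (5, 8)]))  # 2
```

On a burst trace, `peak_concurrency(intervals) > 1000` would indicate that the documented default limit was not enforced.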

In the worst case, as in Azure, limits may not be enforced at all. A workload is created in which demand is slowly ramped up over time. Azure limits neither the number of concurrent HTTP functions, which was configured to 1,000, nor the number of instances, which is 200 by default. During the test, the Function App’s Live Metrics Stream reported up to 440 instances allocated to the Function App, with up to 8,000 concurrent requests running at a time, as shown in Figure 2b.
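The text does not specify the ramp shape, so the sketch below assumes a linear per-second ramp. `linear_ramp` and its parameters are hypothetical. The sketch also checks observed peaks against configured limits, using the Azure numbers reported above. The key `concurrent_http_requests` is an illustrative label for the configured HTTP concurrency limit.

```python
def linear_ramp(start_rps, end_rps, duration_sec):
    """Requests to issue in each one-second step, rising linearly (assumed shape)."""
    if duration_sec <= 0:
        return []
    if duration_sec == 1:
        return [end_rps]
    step = (end_rps - start_rps) / (duration_sec - 1)
    return [round(start_rps + i * step) for i in range(duration_sec)]


def limit_violations(observed, limits):
    """Return {name: (observed, limit)} for every limit the observation exceeds."""
    return {
        name: (observed[name], cap)
        for name, cap in limits.items()
        if observed.get(name, 0) > cap
    }


# Azure numbers from the text: configured/default limits vs. Live Metrics peaks.
azure_limits = {"concurrent_http_requests": 1000, "instances": 200}
azure_observed = {"concurrent_http_requests": 8000, "instances": 440}

print(linear_ramp(0, 100, 5))  # [0, 25, 50, 75, 100]
print(limit_violations(azure_observed, azure_limits))
# {'concurrent_http_requests': (8000, 1000), 'instances': (440, 200)}
```

A slow ramp like this is useful for testing enforcement because a correctly enforced limit should appear as a plateau. Observed values that keep climbing past the cap, as in Azure, indicate that no enforcement is taking place.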

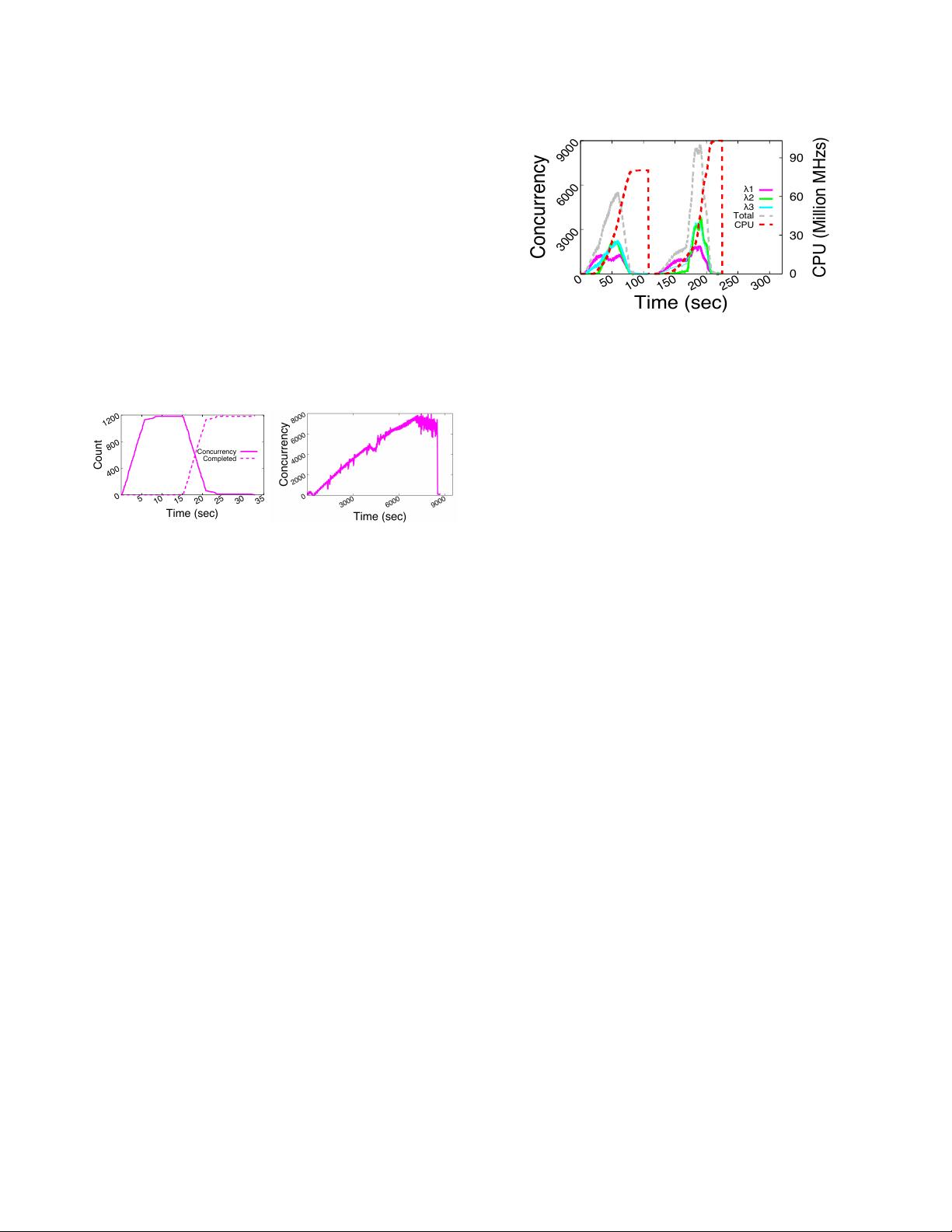

Last, GCF does not tightly limit total CPU consumption. GCF caps total CPU usage over all functions at a specified threshold over a 100-second period. CPU consumption is tracked during the period, and when the threshold is reached, no new functions are invoked. However, we find two issues. First, any outstanding functions are able to complete after the limit is reached, violating CPU limits. Second, a slow trickle of invocations still occurs after the CPU limit is reached. Figure 3 shows that CPU usage more than doubles the limit in the MixedChain workload: CPU limits were set to 40M MHz/s, but consumption of over 90M MHz/s was observed (dotted red line). The figure also shows concurrency for each function (1, 2, and 3) and total concurrency, which is the sum of all function concurrencies.

[Figure 3: GCF: MixedChain workload CPU usage. Axes: Time (sec) vs. Concurrency and CPU (Million MHzs); series: functions 1, 2, 3, Total, and CPU.]
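The first issue follows directly from enforcing a CPU cap only at admission time. The discrete-time sketch below makes this concrete under hypothetical units and parameters; it does not reproduce GCF's actual accounting. New invocations are admitted only while the current period's consumption is below the budget, and already-running invocations are never stopped. The second issue, the slow trickle of post-limit invocations, is not modeled.

```python
def simulate_admission_only(budget, arrivals, cpu_per_sec, runtime_sec,
                            period_sec=100):
    """CPU consumed per period when a CPU cap only gates new invocations.

    budget: CPU allowed per period (arbitrary units, hypothetical).
    arrivals[t]: invocations requested at second t.
    Each admitted invocation uses cpu_per_sec for runtime_sec seconds.
    """
    running = []            # remaining seconds of each admitted invocation
    per_period = []
    used = 0.0
    for t, requested in enumerate(arrivals):
        if t > 0 and t % period_sec == 0:
            per_period.append(used)   # close the previous period
            used = 0.0
        if used < budget:
            running.extend([runtime_sec] * requested)   # admit new work
        # Admitted invocations always run to completion, even past the budget.
        used += cpu_per_sec * len(running)
        running = [r - 1 for r in running if r > 1]
    per_period.append(used)
    return per_period


print(simulate_admission_only(100, [10] * 100, 1, 20))  # [800.0]
```

In this example, the budget is 100 units per period, 10 invocations arrive each second, and each runs for 20 seconds. Admission stops after the first 4 seconds, yet the 40 invocations already admitted consume 800 units, eight times the budget. A tight limit would also need to throttle or preempt running work.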

The above findings indicate that concurrency limits are often inconsistent or incorrect, which places additional burden on serverless developers. When limits are below intended values, workloads may unexpectedly encounter poor performance or increased drops, and dealing with such issues increases serverless application complexity. When limits are above intended values, developers may incur higher costs than budgeted for. And when limits are inconsistent, developers can have difficulty managing and reasoning about serverless performance.

Mid-chain drops

Some serverless platforms (AWS and IBM) provide a hard concurrency limit beyond which all subsequent requests are dropped. When demand rises above a specified function invocation limit, functions can be queued (up to 4 days in the case of AWS [81]), silently dropped [82], or returned with an error (in the synchronous case only).
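The three overflow behaviors can be contrasted using a hypothetical invoker that enforces a hard concurrency limit. `LimitedInvoker` and `Overflow` are illustrative names, not a provider API. Real platforms differ in retry, retention, and error semantics.

```python
from collections import deque
from enum import Enum


class Overflow(Enum):
    QUEUE = "queue"   # hold the request until a slot frees up
    DROP = "drop"     # discard silently; the caller gets no signal
    ERROR = "error"   # reject with an error (synchronous calls only)


class LimitedInvoker:
    """Hypothetical invoker enforcing a hard concurrency limit."""

    def __init__(self, limit, policy):
        self.limit = limit
        self.policy = policy
        self.running = 0
        self.queue = deque()
        self.dropped = 0

    def invoke(self, request):
        if self.running < self.limit:
            self.running += 1
            return "running"
        if self.policy is Overflow.QUEUE:
            self.queue.append(request)
            return "queued"
        if self.policy is Overflow.DROP:
            self.dropped += 1
            return None          # nothing tells the caller it was dropped
        raise RuntimeError("concurrency limit exceeded")

    def complete(self):
        # A finished invocation frees a slot; start the oldest queued request.
        if self.running == 0:
            return
        self.running -= 1
        if self.queue:
            self.queue.popleft()
            self.running += 1
```

Under `Overflow.DROP`, a function invoked as the next step of a chain disappears without an error, which is exactly the mid-chain failure mode the surrounding text describes.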

This is problematic for several reasons. First, developers may rely on function chain completion, and when function chains drop mid-chain, incorrectness may arise. Second, developers can instead handle drops at the application layer, but this increases complexity and developer burden, two problems serverless aims to solve. Third, mid-chain drops cause inefficiency because the resources spent running functions before the drop are wasted and could have been better used to finish some other outstanding function chain. Last, if providers queue requests mid-chain, the variance of total function chain running time can increase significantly, impacting SLAs or otherwise degrading performance.
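The inefficiency argument can be quantified with a small calculation. The sketch below assumes that each chain is a sequence of stages with known runtimes, which is a simplification; `wasted_fraction` is a hypothetical helper. Any work done by stages that ran before a drop counts as wasted.

```python
def wasted_fraction(stage_durations, drop_points):
    """Fraction of consumed function-seconds that produced no completed chain.

    stage_durations: runtime in seconds of each function in the chain.
    drop_points: one entry per chain; None if the chain completed, otherwise
    the 0-based index of the first stage that was dropped.
    """
    full = sum(stage_durations)
    used = wasted = 0.0
    for drop in drop_points:
        if drop is None:
            used += full
        else:
            partial = sum(stage_durations[:drop])   # work done before the drop
            used += partial
            wasted += partial
    return wasted / used if used else 0.0
```

Consider a three-stage chain of 1-second functions. If one chain completes and another is dropped before its third stage, the call `wasted_fraction([1, 1, 1], [None, 2])` attributes 2 of the 5 consumed function-seconds (40%) to the dropped chain.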

To assess the impact of mid-chain drops, a Fan-2 Burst workload is run on AWS Step Functions and IBM Cloud Functions, where the burst is the size of the concurrency limit. Note that the “fan” portion of Fan-2 invokes twice as many functions after function 1 completes. A burst of 1,000 Fan-2’s will therefore ultimately result in 2,000 concurrent functions (i.e., functions 2 and 3) and a violation of concurrency limits. Figure 4 shows
