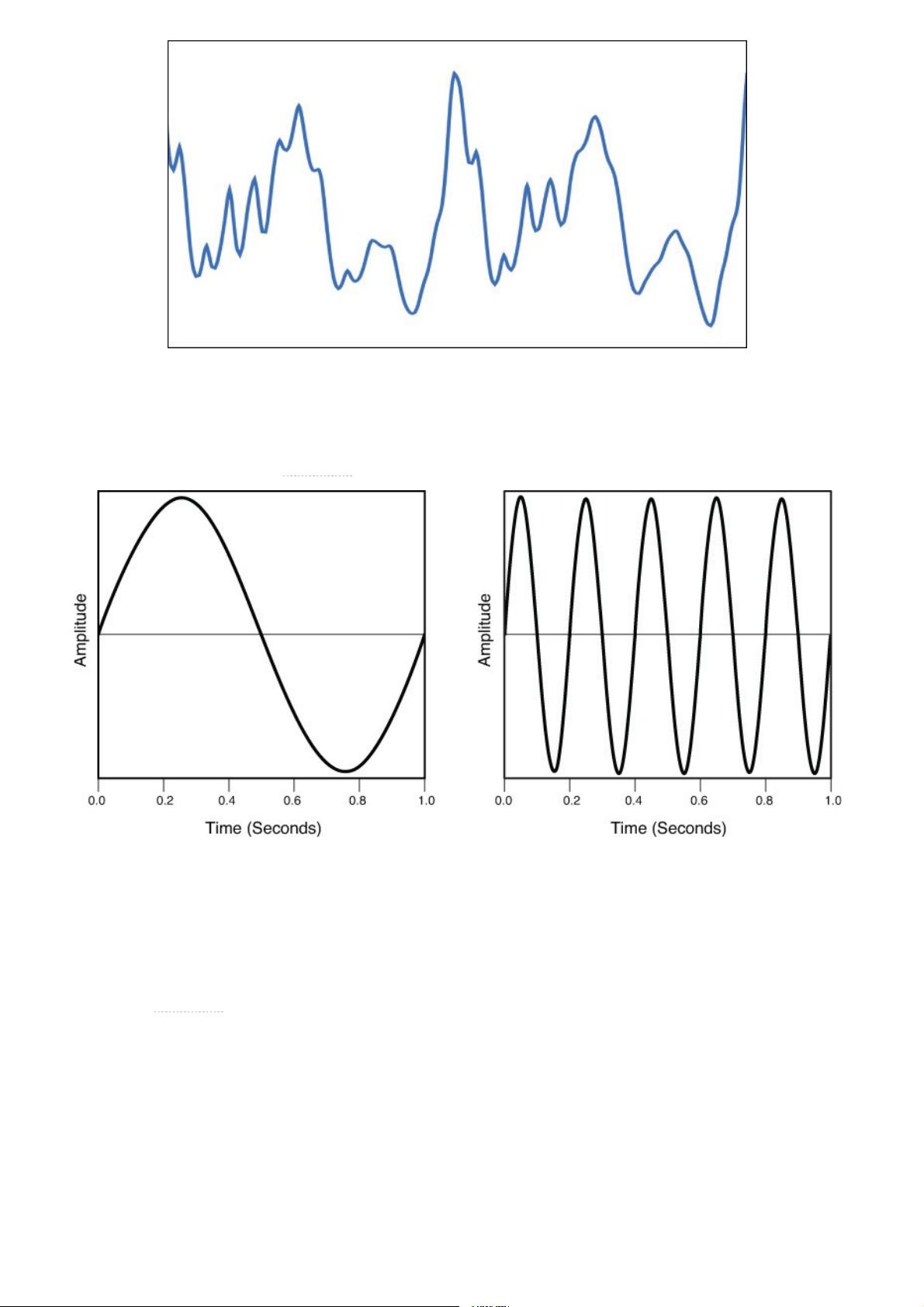

surmise from this example is that if we continue to increase the sample rate, we should be able to produce a digital representation that fairly accurately mirrors the original source. Given the limitations of hardware, we may not be able to produce an exact replica, but is there a sample rate that can produce a digital representation that is good enough? The answer is yes, and it’s called the Nyquist rate. Harry Nyquist was an engineer working for Bell Labs in the 1930s who discovered that to accurately capture a signal, you need to sample at a rate of at least twice its highest frequency. For instance, if the highest frequency in the audio material you want to capture is 10kHz, you need a sample rate of at least 20kHz to provide an accurate digital representation. CD-quality audio uses a sampling rate of 44.1kHz, which means that it can capture a maximum frequency of 22.05kHz, just above the 20kHz upper bound of human hearing. A sampling rate of 44.1kHz may not capture the complete frequency range contained in the source material, meaning your dog may be upset by the recording because it doesn’t capture the nuances of the Abbey Road sessions, but for us human beings, it sounds pristine.
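The relationship between sample rate and the highest frequency it can capture is simple enough to sketch in a few lines of Python. This is only an illustration of the arithmetic described above; the function names are hypothetical and not part of any audio library.

```python
def min_sample_rate(highest_freq_hz: float) -> float:
    """Lowest sample rate (Hz) that can capture the given frequency."""
    return 2 * highest_freq_hz


def max_capturable_freq(sample_rate_hz: float) -> float:
    """Highest frequency (Hz) a given sample rate can capture."""
    return sample_rate_hz / 2


def can_capture(freq_hz: float, sample_rate_hz: float) -> bool:
    """True if the sample rate is at least twice the frequency."""
    return sample_rate_hz >= min_sample_rate(freq_hz)


if __name__ == "__main__":
    # The 10kHz example from the text.
    print(min_sample_rate(10_000))
    # CD-quality audio.
    print(max_capturable_freq(44_100))
    # Upper bound of human hearing at the CD sample rate.
    print(can_capture(20_000, 44_100))
```

Running it with the values from the text gives a 20kHz minimum rate for 10kHz material and a 22.05kHz ceiling for 44.1kHz audio.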

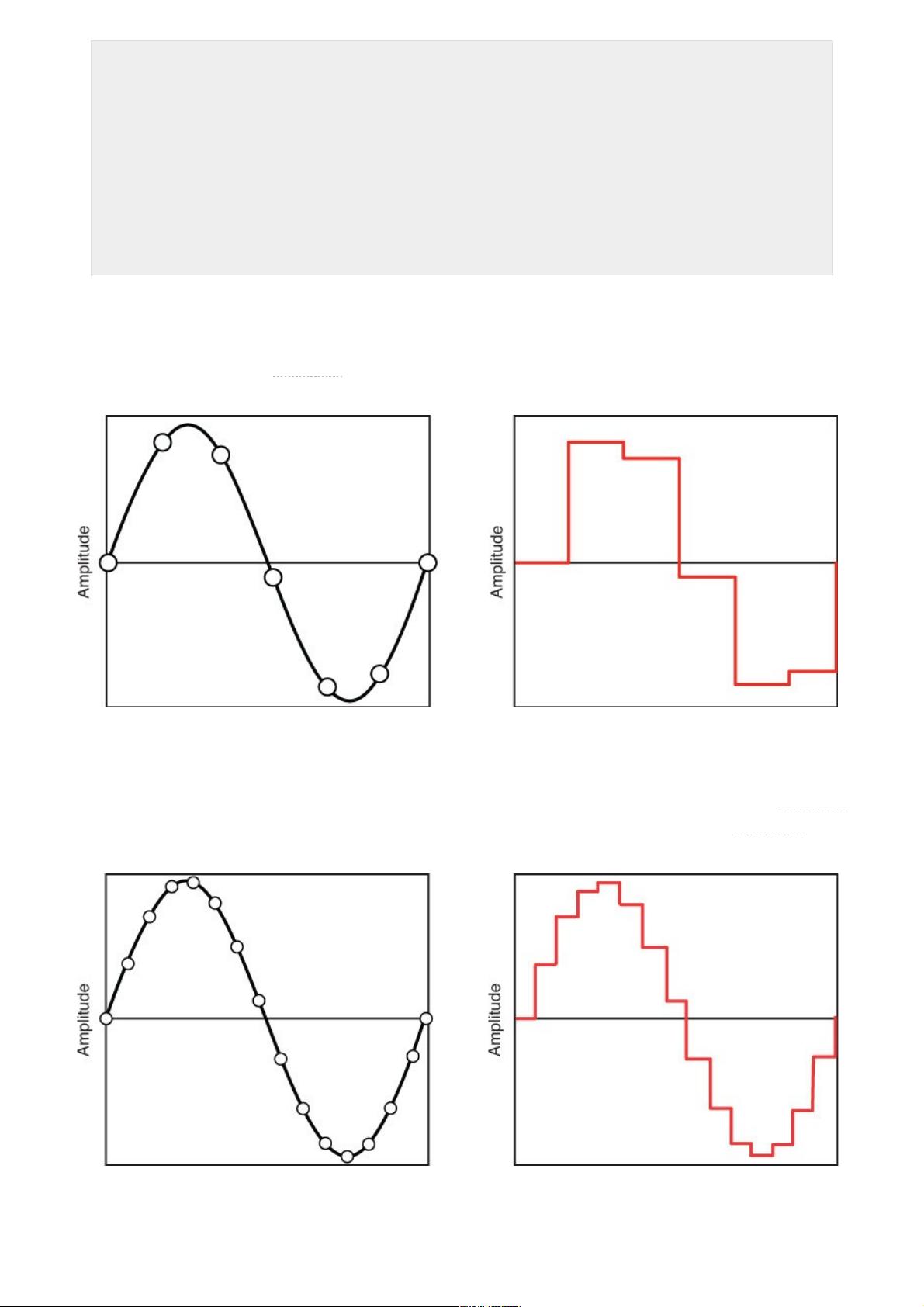

In addition to the sampling rate, another important aspect of digital audio sampling is how accurately we can capture each audio sample. The amplitude is measured on a linear scale, hence the term Linear PCM. The number of bits used to store the sample value defines the number of discrete steps available on this linear scale and is referred to as the audio’s bit depth. Assigning too few bits results in considerable rounding or quantizing of each sample, leading to noise and distortion in the digital audio signal. Using a bit depth of 8 would provide 256 discrete levels of quantization. This may be sufficient for some audio material, but it isn’t high enough for most audio content. CD-quality audio has a bit depth of 16, resulting in 65,536 discrete levels, and in professional audio recording environments, bit depths of 24 or higher are used.
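To make the link between bit depth and quantization concrete, here is a minimal Python sketch. The `quantize` helper is hypothetical, and it assumes samples are normalized to the range -1.0 to 1.0 and mapped onto a symmetric signed integer scale; real audio formats differ in the details (for example, some store 8-bit samples as unsigned values).

```python
def quantization_levels(bit_depth: int) -> int:
    """Number of discrete steps available at a given bit depth."""
    return 2 ** bit_depth


def quantize(sample: float, bit_depth: int) -> int:
    """Round a normalized sample in [-1.0, 1.0] to a signed integer code.

    Assumption: a symmetric scale whose largest code is 2**(bit_depth-1) - 1.
    """
    max_code = 2 ** (bit_depth - 1) - 1
    clamped = max(-1.0, min(1.0, sample))  # guard against out-of-range input
    return round(clamped * max_code)


if __name__ == "__main__":
    for bits in (8, 16, 24):
        print(bits, quantization_levels(bits))
    # The same input lands on a much coarser grid at 8 bits than at 16.
    print(quantize(0.3, 8), quantize(0.3, 16))
```

The fewer levels there are, the further each rounded value can sit from the true amplitude, which is the source of the noise and distortion mentioned above.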

When we digitize a signal, we are left with its raw, uncompressed digital representation. This is the media’s purest digital form, but it requires significant storage space. For instance, a 44.1kHz, 16-bit LPCM audio file takes about 10MB per stereo minute. To digitize a 12-song album with an average song length of 5 minutes would take approximately 600MB of storage. Even with the vast amounts of storage and bandwidth we have today, that is still pretty large. We can see that uncompressed digital audio requires significant amounts of storage, but what about uncompressed video? Let’s take a look at the elements of a digital video to see if we can determine the amount of storage space it requires.
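The audio figures above follow directly from the sample rate, bit depth, and channel count. The following Python sketch works through the arithmetic; the function name is hypothetical, and it counts only raw sample data, ignoring any file header.

```python
def lpcm_bytes(sample_rate_hz: int, bit_depth: int, channels: int, seconds: int) -> int:
    """Raw size in bytes of uncompressed LPCM audio (no container overhead)."""
    bytes_per_sample = bit_depth // 8
    return sample_rate_hz * bytes_per_sample * channels * seconds


# One stereo minute of CD-quality audio.
per_minute = lpcm_bytes(44_100, 16, 2, 60)

# A 12-song album averaging 5 minutes per song.
album = lpcm_bytes(44_100, 16, 2, 12 * 5 * 60)

if __name__ == "__main__":
    print(f"{per_minute / 1_000_000:.1f} MB per stereo minute")
    print(f"{album / 1_000_000:.0f} MB per album")
```

The exact results come out slightly higher than the rounded 10MB and 600MB estimates, which is expected since those round the per-minute figure down.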

Video is composed of a sequence of images called frames. Each frame captures a scene at a point in time within the video’s timeline. To create the illusion of motion, we need to see a certain number of frames played in fast succession. The number of frames displayed in one second is called the video’s frame rate and is measured in frames per second (FPS). Some of the most common frame rates are 24FPS, 25FPS, and 30FPS.

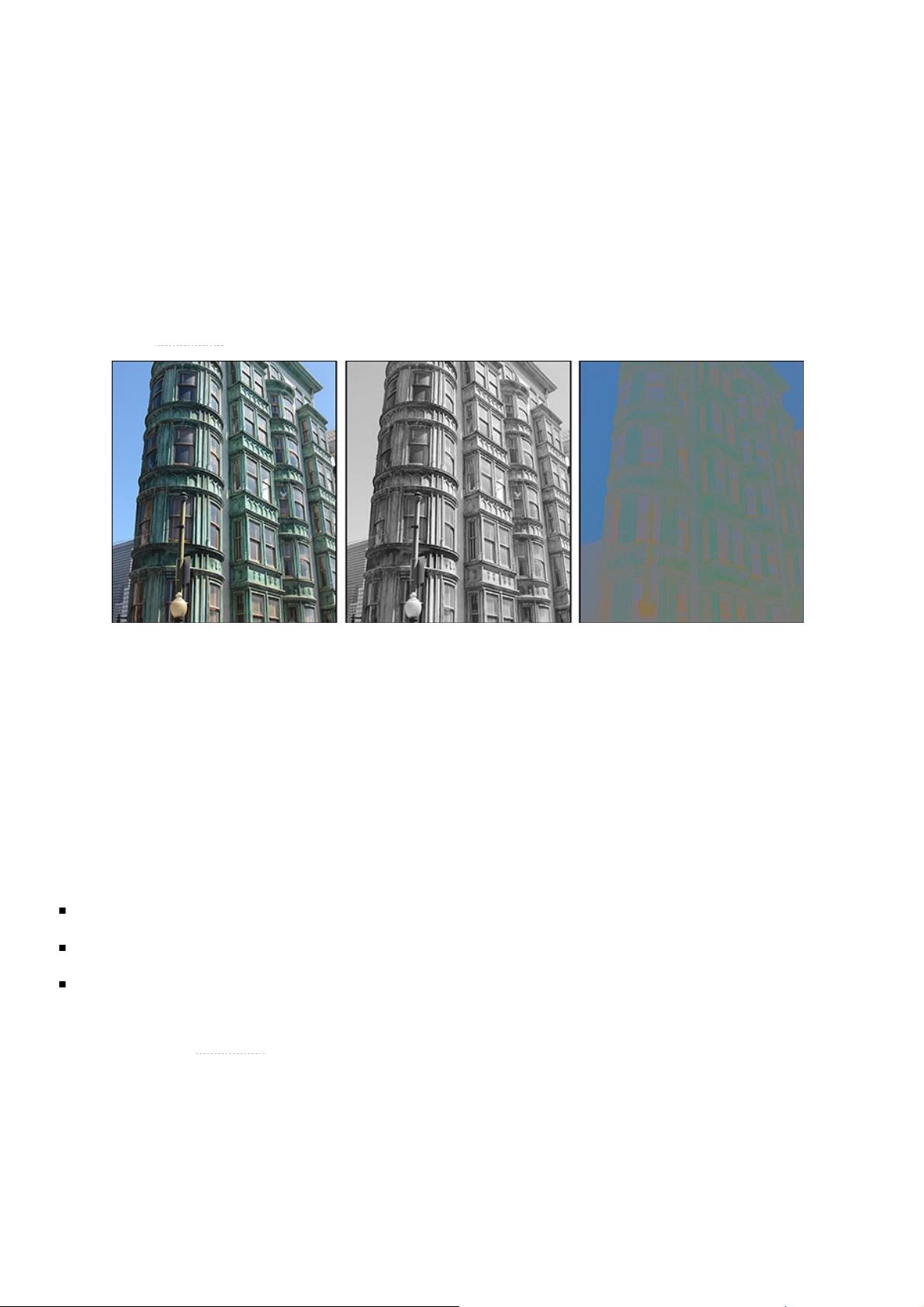

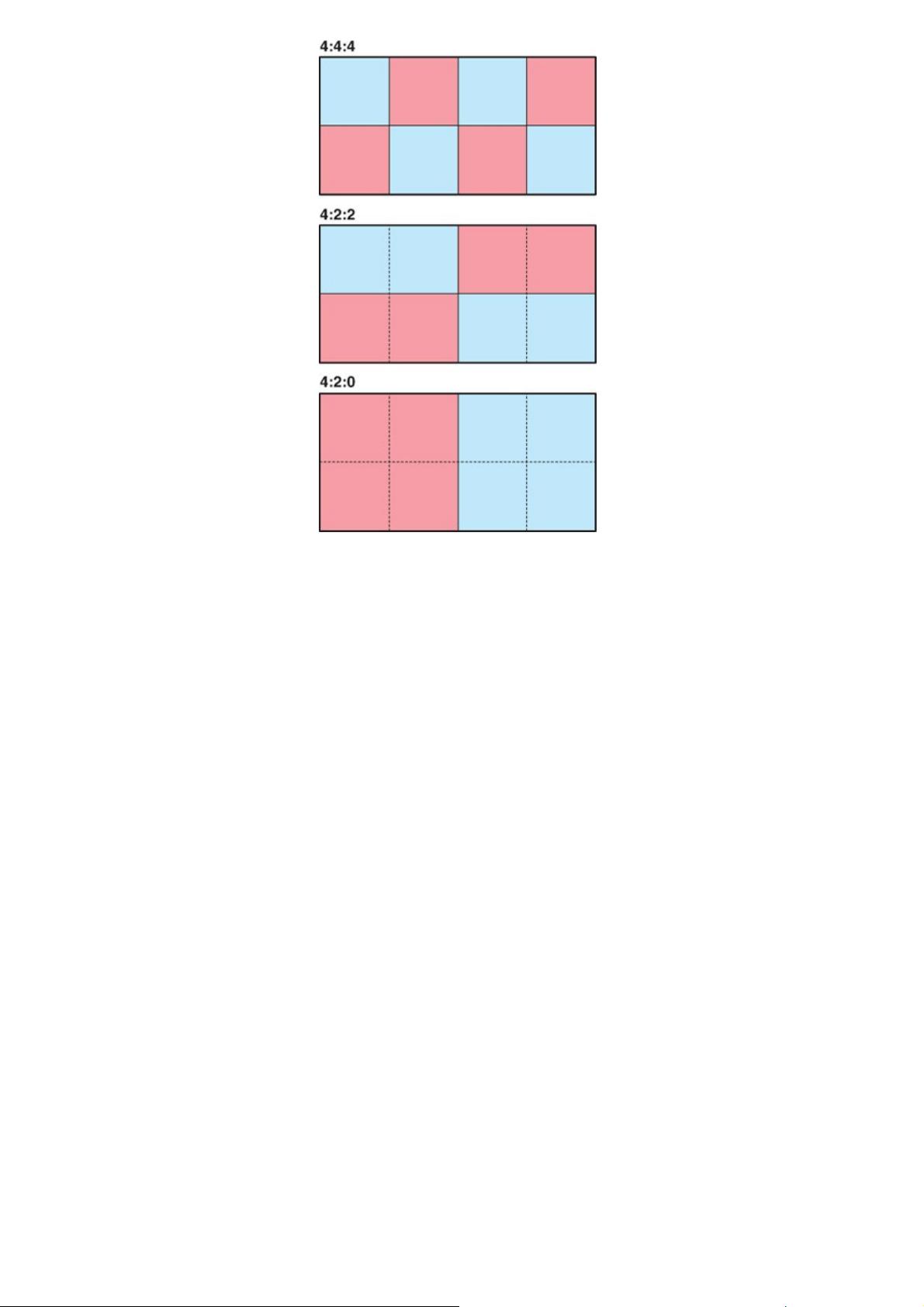

To understand the storage requirements for uncompressed video content, we first need to determine how big each individual frame would be. A variety of common video sizes exist, but these days they usually have an aspect ratio of 16:9, meaning there are 16 horizontal pixels for every 9 vertical pixels. The two most common sizes at this aspect ratio are 1280 × 720 and 1920 × 1080. What about the pixels themselves? If we were to represent each pixel in the RGB color space using 8 bits per channel, we’d have 8 bits for red, 8 bits for green, and 8 bits for blue, or 24 bits per pixel. With all the inputs gathered, let’s perform some calculations.

Table 1.1 shows the storage requirements for uncompressed video at 30FPS at the two most common resolutions.

Table 1.1 Uncompressed Video Storage Requirements
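The inputs gathered so far (frame size, 24 bits per pixel, and 30FPS) are enough to work out these storage requirements. The Python sketch below is one way to do the arithmetic; the function names are hypothetical, and the use of decimal units (1MB = 1,000,000 bytes) is an assumption, so the results may differ slightly from figures that are rounded or expressed in binary units.

```python
def frame_bytes(width: int, height: int, bits_per_pixel: int = 24) -> int:
    """Size in bytes of a single uncompressed frame."""
    return width * height * bits_per_pixel // 8


def video_bytes(width: int, height: int, fps: int, seconds: int,
                bits_per_pixel: int = 24) -> int:
    """Size in bytes of uncompressed video of the given duration."""
    return frame_bytes(width, height, bits_per_pixel) * fps * seconds


if __name__ == "__main__":
    MB = 1_000_000       # assumption: decimal megabytes
    GB = 1_000_000_000   # assumption: decimal gigabytes
    for width, height in ((1280, 720), (1920, 1080)):
        per_frame = frame_bytes(width, height)
        per_second = video_bytes(width, height, fps=30, seconds=1)
        per_minute = video_bytes(width, height, fps=30, seconds=60)
        per_hour = video_bytes(width, height, fps=30, seconds=3600)
        print(f"{width} x {height}: "
              f"{per_frame / MB:.2f} MB/frame, "
              f"{per_second / MB:.1f} MB/sec, "
              f"{per_minute / GB:.2f} GB/min, "
              f"{per_hour / GB:.0f} GB/hr")
```

Even at 1280 × 720, an hour of uncompressed video runs to hundreds of gigabytes, and 1920 × 1080 more than doubles that.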

Houston, we have a problem. Clearly, as a storage and transmission format, this would be untenable. A decade from now these sizes may seem trivial, but today this isn’t feasible for most uses. Because this isn’t a reasonable way to store and transfer video in most cases, we need to find a way to reduce this size. This brings us to the topic of compression.