Figure 3 labels. Root verbs (inner circle): write, give, find, create, make, describe, design, generate, classify, have, explain, tell, identify, output, predict, detect. Direct noun objects (outer circle) include function, essay, letter, paragraph, example, list, set, advice, word, number, sentence, way, program, algorithm, story, situation, person, process, time, system, game, structure, series, sentiment, article, text, array, coin, difference, concept, joke, topic, and sarcasm.

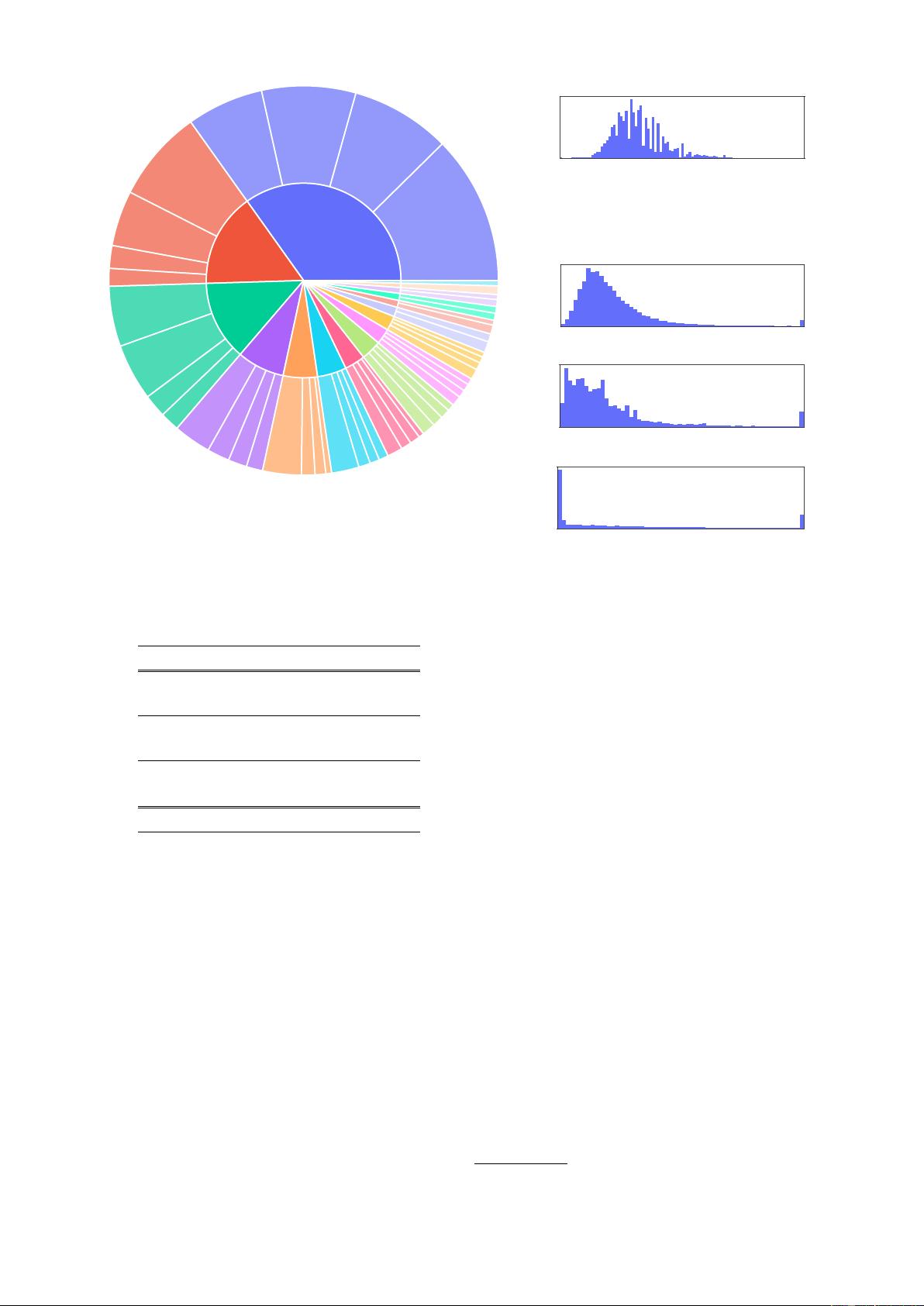

Figure 3: The top 20 most common root verbs (inner circle) and their top 4 direct noun objects (outer circle) in the generated instructions. Despite their diversity, the instructions shown here only account for 14% of all the generated instructions because many instructions (e.g., “Classify whether the user is satisfied with the service.”) do not contain such a verb-noun structure.
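The counting behind a chart like Figure 3 can be sketched as follows. This is a minimal sketch, assuming the (root verb, direct noun object) pairs have already been extracted by a parser; the function name `top_verb_noun_pairs` and the toy pairs are hypothetical and are not taken from the generated data.

```python
from collections import Counter, defaultdict


def top_verb_noun_pairs(pairs, n_verbs=20, n_nouns=4):
    """Return {verb: [(noun, count), ...]} for the most common root verbs."""
    verb_counts = Counter(verb for verb, _ in pairs)
    nouns_by_verb = defaultdict(Counter)
    for verb, noun in pairs:
        nouns_by_verb[verb][noun] += 1
    # Keep the n_verbs most frequent verbs, each with its n_nouns most frequent objects.
    return {
        verb: nouns_by_verb[verb].most_common(n_nouns)
        for verb, _ in verb_counts.most_common(n_verbs)
    }


# Toy input (hypothetical): one pair per instruction that has a verb-noun structure.
pairs = [
    ("write", "function"), ("write", "function"), ("write", "essay"),
    ("give", "example"), ("give", "example"),
    ("find", "number"),
]
summary = top_verb_noun_pairs(pairs, n_verbs=2, n_nouns=1)
```

Instructions without a verb-noun structure contribute no pair at all, which is why such a summary covers only a fraction of the generated instructions.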

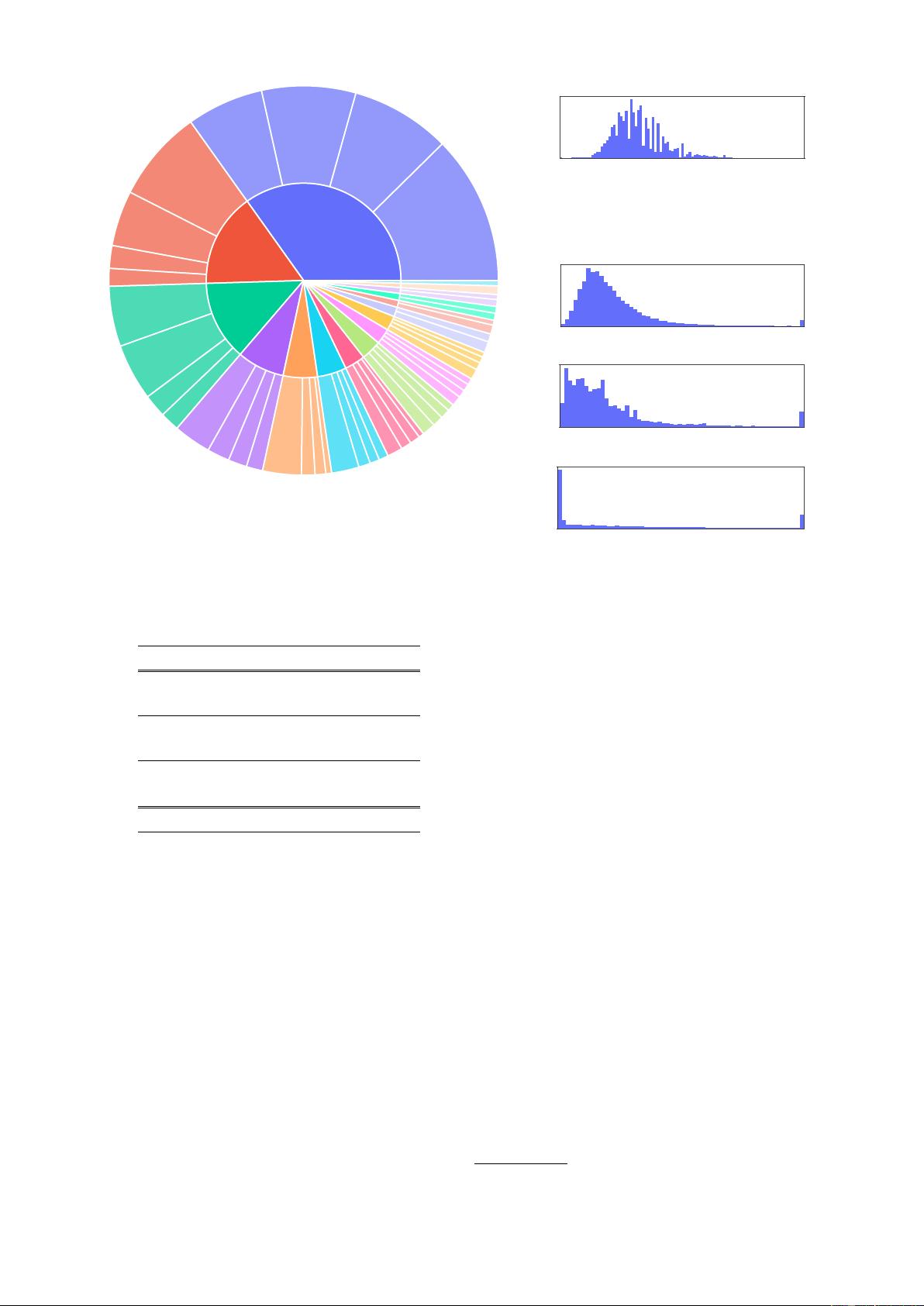

Figure 4: Distribution of the ROUGE-L scores between generated instructions and their most similar seed instructions. (x-axis: ROUGE-L overlap with the most similar seed instruction, from 0 to 1; y-axis: number of instructions.)
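The quantity plotted in Figure 4 can be illustrated with a small ROUGE-L computation. This is a minimal sketch, assuming lowercased whitespace tokenization and the F1 variant of ROUGE-L; the paper's exact tokenization and scoring settings are not given in this section, and the helper names and toy seed instructions below are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """ROUGE-L F1 between two strings (whitespace tokens, lowercased)."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def max_seed_overlap(instruction, seeds):
    """Overlap of a generated instruction with its most similar seed instruction."""
    return max(rouge_l_f1(instruction, seed) for seed in seeds)


# Toy seeds (hypothetical), used only to exercise the functions.
seeds = ["Write a poem about the sea.", "Classify the sentiment of the sentence."]
overlap = max_seed_overlap("Classify the sentiment of the tweet.", seeds)
```

Computing `max_seed_overlap` for every generated instruction and binning the results would give a histogram of the kind shown in the figure.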

Figure 5: Length distribution of the generated instructions, non-empty inputs, and outputs. (Three panels: instruction length vs. number of instructions, input length vs. number of inputs, and output length vs. number of outputs; lengths on the x-axes range from 10 to 60.)

| Quality Review Question | Yes % |
|---|---|
| Does the instruction describe a valid task? | 92% |
| Is the input appropriate for the instruction? | 79% |
| Is the output a correct and acceptable response to the instruction and input? | 58% |
| All fields are valid | 54% |

Table 2: Data quality review for the instruction, input, and output of the generated data. See Table 10 and Table 11 for representative valid and invalid examples.
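The relationship between the per-question rates and the "All fields are valid" row can be sketched as below. This is a minimal sketch on made-up annotations; the field names and the four toy reviews are hypothetical and do not reproduce the reviewed sample.

```python
FIELDS = ("instruction_valid", "input_appropriate", "output_correct")


def yes_rates(reviews):
    """Fraction of 'yes' answers per question, plus the fraction with every field valid."""
    n = len(reviews)
    rates = {field: sum(r[field] for r in reviews) / n for field in FIELDS}
    rates["all_valid"] = sum(all(r[field] for field in FIELDS) for r in reviews) / n
    return rates


# Toy annotations (hypothetical): one dict per reviewed example.
reviews = [
    {"instruction_valid": True, "input_appropriate": True, "output_correct": True},
    {"instruction_valid": True, "input_appropriate": True, "output_correct": False},
    {"instruction_valid": True, "input_appropriate": False, "output_correct": True},
    {"instruction_valid": False, "input_appropriate": False, "output_correct": False},
]
rates = yes_rates(reviews)
```

Because an example counts as fully valid only when all three answers are yes, the combined rate can never exceed the lowest per-question rate, which is consistent with 54% sitting below 58% in Table 2.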

4 Experimental Results

We conduct experiments to measure and compare the performance of models under various instruction tuning setups. We first describe our models and other baselines, followed by our experiments.

4.1 GPT3_{SELF-INST}: finetuning GPT3 on its own instruction data

Given the generated instruction data, we conduct instruction tuning with the GPT3 model itself (“davinci” engine). As described in §2.3, we use various templates to concatenate the instruction and input, and train the model to generate the output. This finetuning is done through the OpenAI finetuning API.⁸ We use the default hyper-parameters, except that we set the prompt loss weight to 0, and we train the model for 2 epochs. We refer the reader to Appendix A.3 for additional finetuning details. The resulting model is denoted by GPT3_{SELF-INST}.
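The data preparation described above can be sketched as follows. This is a minimal sketch, assuming a prompt/completion JSONL record per example; the templates, the function name `to_finetune_record`, the leading space on the completion, and the key names in `HYPERPARAMS` are illustrative assumptions, not the templates of §2.3 or a verified API signature.

```python
import json

# Hypothetical templates; the actual templates are the ones described in §2.3.
TEMPLATES_WITH_INPUT = [
    "{instruction}\n\n{input}\n\nOutput:",
    "Task: {instruction}\nInput: {input}\nOutput:",
]
TEMPLATE_NO_INPUT = "{instruction}\n\nOutput:"


def to_finetune_record(instruction, input_text, output, template_idx=0):
    """Concatenate instruction and input into a prompt; the output is the completion."""
    if input_text:
        template = TEMPLATES_WITH_INPUT[template_idx % len(TEMPLATES_WITH_INPUT)]
        prompt = template.format(instruction=instruction, input=input_text)
    else:
        prompt = TEMPLATE_NO_INPUT.format(instruction=instruction)
    # A leading space on the completion is a common convention, assumed here.
    return {"prompt": prompt, "completion": " " + output}


# Settings stated in the text; the key names are assumptions for illustration.
HYPERPARAMS = {"n_epochs": 2, "prompt_loss_weight": 0.0}

record = to_finetune_record(
    "Classify the sentiment of the sentence.", "I love this movie.", "Positive"
)
jsonl_line = json.dumps(record)  # one line of a JSONL training file
```

With the prompt loss weight set to 0, only the completion tokens contribute to the training loss, so the model is trained to produce the output rather than to reproduce the instruction and input.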

4.2 Baselines

Off-the-shelf LMs. We evaluate T5-LM (Lester et al., 2021; Raffel et al., 2020) and GPT3 (Brown et al., 2020) as the vanilla LM baselines (only pretraining, no additional finetuning). These baselines indicate the extent to which off-the-shelf LMs can follow instructions naturally, immediately after pretraining.

Publicly available instruction-tuned models. T0 and T𝑘-INSTRUCT are two instruction-tuned models proposed in Sanh et al. (2022) and Wang et al. (2022), respectively, and are demonstrated to be able to follow instructions for many NLP tasks. Both of these models are finetuned from the T5 (Raffel et al., 2020) checkpoints and are publicly available.⁹ For both of these models, we use

⁸ See OpenAI’s documentation on finetuning.

⁹ T0 is available here and T𝑘-INSTRUCT is here.