MARPE et al.: CABAC IN THE H.264/AVC VIDEO COMPRESSION STANDARD 623

frequently observed bins can be treated using a joint, typically

zero-order, probability model. Compared to the conventional

approach of using context models in the original domain of the

source with typically large alphabet size (like e.g., components

of motion vector differences or transform coefficient levels) this

additional freedom in the design offers a flexible instrument for

using higher order conditional probabilities without suffering

from context “dilution” effects. These effects are often observed

in cases, where a large number of conditional probabilities have

to be adaptively estimated on a relatively small (coding) time

interval, such that there are not enough samples to reach a reli-

able estimate for each model.

2

For instance, when operating in the original alphabet domain,

aquitemoderatelychosensecond-order model for agivensyntax

element alphabet of size

will result in the intractably

large number of

symbol probabilities to

be estimated for that particular syntax element only. Even for a

zero-order model, the task of tracking 255 individual probability

estimates according to the previous example is quite demanding.

However, typically measured probability density functions (pdf)

of prediction residuals or transformed prediction errors can be

modeled by highly peaked Laplacian, generalized Gaussian or

geometric distributions [28], where it is reasonable to restrict the

estimationofindividualsymbolstatisticstotheareaofthelargest

statistical variations at the peak of the pdf. Thus, if, for instance,

a binary tree resulting from a Huffman code design would be

chosen as a binarization for such a source and its related pdf,

only the nodes located in the vicinity of the root node would

be natural candidates for being modeled individually, whereas a

joint model would be assigned to all nodes on deeper tree levels

corresponding to the “tail” of the pdf. Note that this design is

different from the example given in Fig. 2, where each (internal)

node has its own model.

In the CABAC framework, typically only the root node would

be modeled using higher order conditional probabilities. In the

above example of a second-order model, this would result in

only four different binary probability models instead of

dif-

ferent

-ary probability models with .

2) Design of CABAC Binarization Schemes: As already

indicated above, a binary representation for a given nonbinary

valued syntax element provided by the binarization process

should be close to a minimum-redundancy code. On the one

hand, this allows easy access to the most probable symbols by

means of the binary decisions located at or close to the root

node for the subsequent modeling stage. On the other hand,

such a code tree minimizes the number of binary symbols to

encode on the average, hence minimizing the computational

workload induced by the binary arithmetic coding stage.

However, instead of choosing a Huffman tree for a given

training sequence, the design of binarization schemes in

CABAC (mostly) relies on a few basic code trees, whose struc-

ture enables a simple on-line computation of all code words

without the need for storing any tables. There are four such

basic types: the unary code, the truncated unary code, the

th

order Exp-Golomb code, and the fixed-length code. In addition,

there are binarization schemes based on a concatenation of

2

A more rigorous treatment of that problem can be found in [23] and [24]

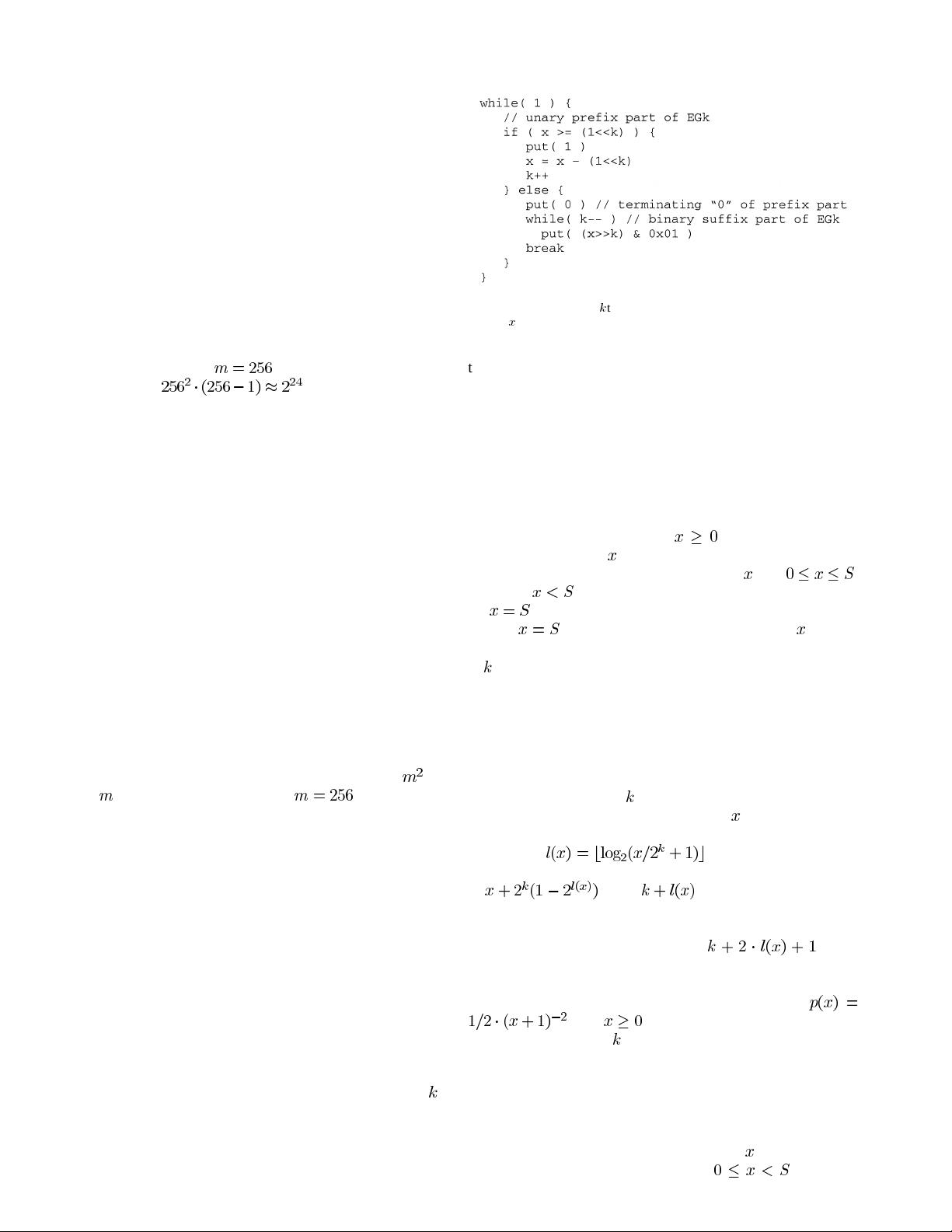

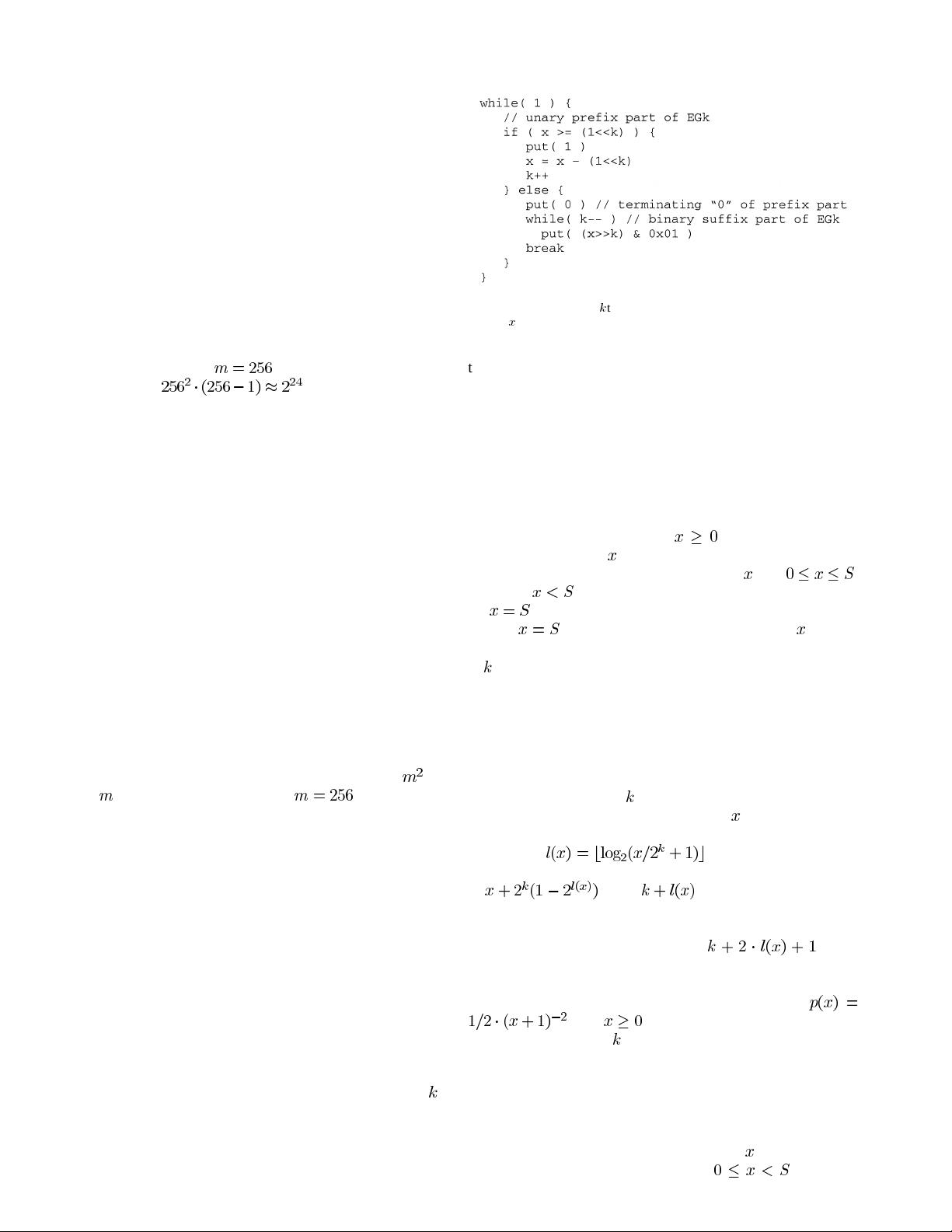

Fig. 3. Construction of

k

th order EGk code for a given unsigned integer

symbol

x

.

these elementary types. As an exception of these structured

types, there are five specific, mostly unstructured binary trees

that have been manually chosen for the coding of macroblock

types and submacroblock types. Two examples of such trees

are shown in Fig. 2.

In the remaining part of this section, we explain in more detail

the construction of the four basic types of binarization and its

derivatives.

Unary and Truncated Unary Binarization Scheme: For each

unsigned integer valued symbol

, the unary code word

in CABAC consists of

“1” bits plus a terminating “0” bit. The

truncated unary (TU) code is only definedfor

with ,

where for

the code is given by the unary code, whereas

for

the terminating “0” bit is neglected such that the TU

code of

is given by a codeword consisting of “1” bits

only.

th order Exp-Golomb Binarization Scheme: Exponential

Golomb codes were first proposed by Teuhola [29] in the

context of run-length coding schemes. This parameterized

family of codes is a derivative of Golomb codes, which have

been proven to be optimal prefix-free codes for geometrically

distributed sources [30]. Exp-Golomb codes are constructed by

a concatenation of a prefix and a suffix code word. Fig. 3 shows

the construction of the

th order Exp-Golomb (EGk) code

word for a given unsigned integer symbol

. The prefix part of

the EGk code word consists of a unary code corresponding to

the value of

.

The EGk suffix part is computed as the binary representation

of

using significant bits, as can be

seen from the pseudo-C code in Fig. 3.

Consequently, for the EGk binarization, the number of sym-

bols having the same code length of

is ge-

ometrically growing. By inverting Shannon’s relationship be-

tween ideal code length and symbol probability, we can, e.g.,

easily deduce that EG0 is the optimal code for a pdf

with . This implies that for an appropri-

ately chosen parameter

, the EGk code represents a fairly good

first-order approximation of the ideal prefix-free code for tails

of typically observed pdf’s, at least for syntax elements that are

representing prediction residuals.

Fixed-Length (FL) Binarization Scheme: For the application

of FL binarization, a finite alphabet of values of the corre-

sponding syntax element is assumed. Let

denote a given

value of such a syntax element, where

. Then, the