DA LIO et al.: ARTIFICIAL CO-DRIVERS AS A UNIVERSAL ENABLING TECHNOLOGY 247

reduce speed in curves to maintain the accuracy in lateral

position).

III. CO-DRIVER OF THE INTERACTIVE PROJECT

A. Theoretical Foundations and Design Guidelines

The main goal is to design an agent that is capable of

enacting the “like me” framework, which means that it must

have sensory-motor strategies similar to those of a human and

that it must be capable of using them to mirror human behavior

for inference of intentions and human–machine interaction.

Designing human motor strategies is a relatively easy step:

One may take inspiration from human motor optimality principles. For example, we already used the minimum jerk/time

tradeoff and the acceleration willingness envelope to produce

human “reference maneuvers” for advanced driver assistance

systems (ADAS) [63], [92], [93].
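As an illustration of the minimum jerk/time tradeoff mentioned above, the following minimal sketch uses the textbook rest-to-rest minimum-jerk profile, for which the integral of squared jerk over a displacement d and duration T equals 720 d^2 / T^5. The time weight `w_time`, the function names, and the restriction to rest-to-rest motion are assumptions made for this example; the formulation actually used in [63], [92], [93] may differ.

```python
def min_jerk_position(x0, xf, duration, t):
    """Position at time t on a rest-to-rest minimum-jerk path from x0 to xf."""
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time, clamped to [0, 1]
    shape = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * shape


def jerk_cost(distance, duration):
    """Integral of squared jerk along the rest-to-rest minimum-jerk path."""
    return 720.0 * distance**2 / duration**5


def total_cost(distance, duration, w_time):
    """Jerk/time tradeoff: squared-jerk integral plus a penalty on duration.

    w_time is a hypothetical weight expressing how much the driver values time.
    """
    return jerk_cost(distance, duration) + w_time * duration


def optimal_duration(distance, w_time):
    """Duration minimizing total_cost, from d(total_cost)/d(duration) = 0."""
    return (3600.0 * distance**2 / w_time) ** (1.0 / 6.0)
```

Under these assumptions, a larger `w_time` yields a shorter and more aggressive maneuver, whereas a smaller one yields a slower and smoother maneuver; calling `optimal_duration(3.5, w_time)` for several weights shows this trend for a lateral displacement of 3.5 m.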

Implementing inference of intentions by mirroring, together with human-peer interactions, is the second, less assured step. Two

notable examples (MOSAIC and HAMMER) have been men-

tioned for the general robotics application domain. In the

driving domain, DIPLECS demonstrated learning of the ECOM

structure from human-driving expert-annotated training sets,

and classification of human driver states. However, no co-

driver, in the sense of the definition given in Section I, has been

demonstrated as yet.

The main research question and contribution of this paper is thus to produce an example co-driver implementation that demonstrates the effectiveness of the simulation/mirroring mechanism and of the interactions that follow from it, and to focus on the important potential application impacts that follow from these.

The co-driver has been developed by combining direct synthesis (OC) at the motor primitive level with manual tuning at higher behavioral levels (the latter carried out after inspecting salient situations in which the two agents happened to disagree). The final system is thus the cumulative result of comparing the developing agent with correct human behaviors (while discarding incorrect human behaviors) across the many situations encountered during months of development.

However, in Section VI, we describe how the same archi-

tecture can be potentially employed in the future to implement

“learning by simulation,” namely, optimizing higher-level be-

haviors with simulated interactions via the forward emulators,

to let the system build knowledge automatically (instead of

manually), particularly to accommodate rare events and to

continuously improve its reliability.

Note that, while the main purpose of this system is “un-

derstanding” human goals for preventive safety (see below),

emergency handling and efficient vehicle control intervention

may be added by means of new behaviors (no longer necessarily

human-like) in future versions.

B. Example Implementation

“InteractIVe” is the current flagship project of the European

Commission in the intelligent vehicle domain [99]. It tackles

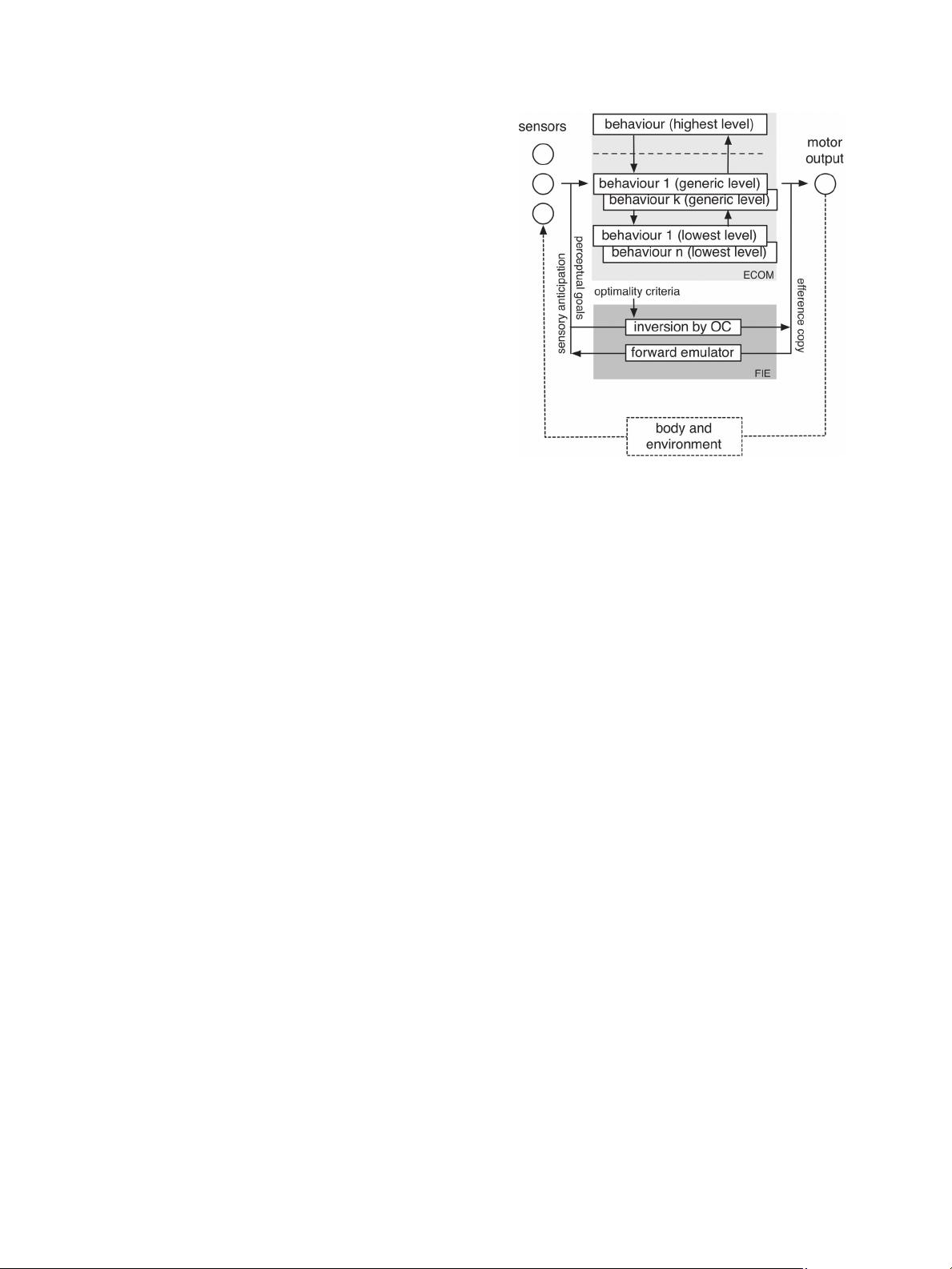

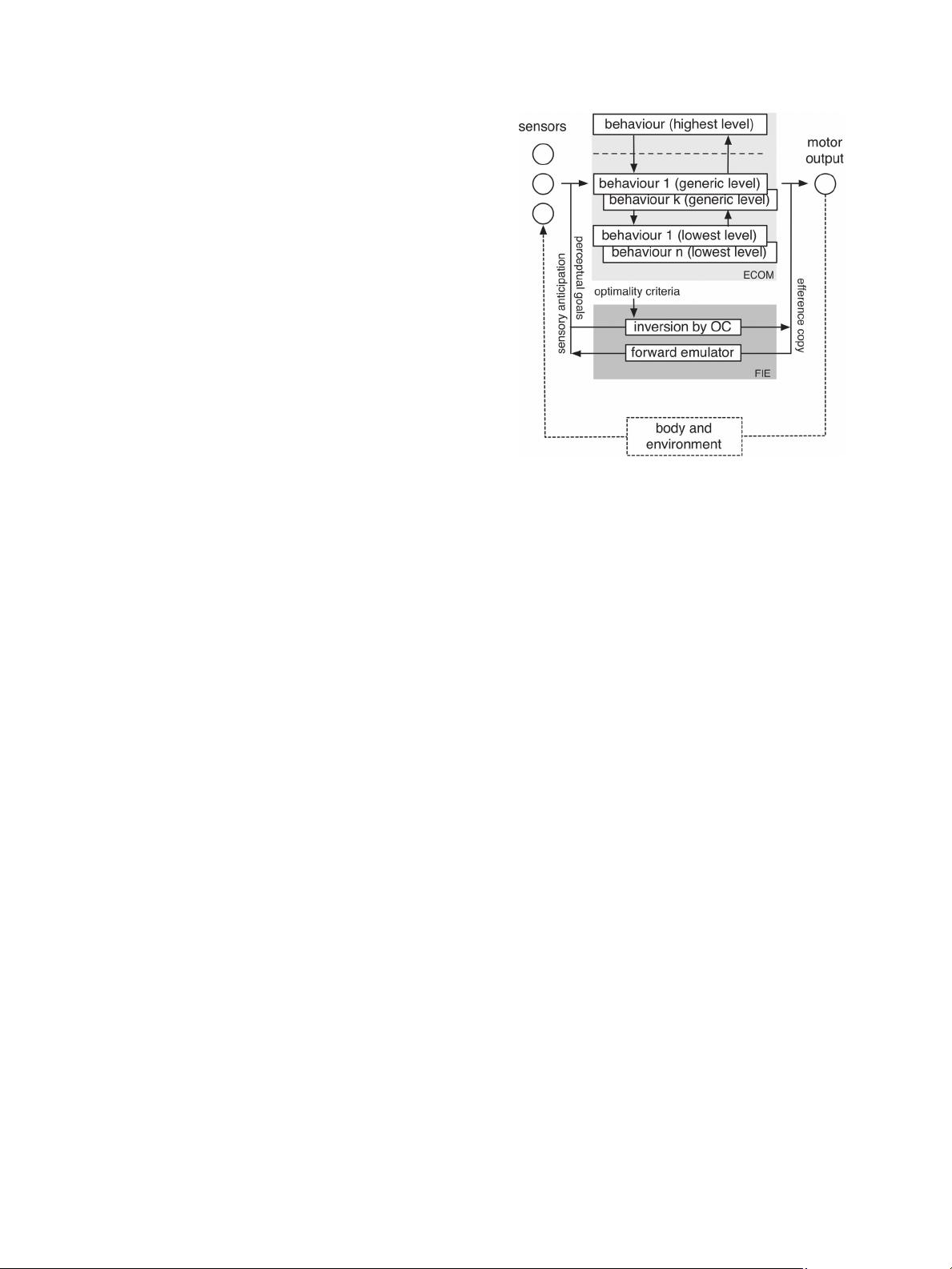

Fig. 4. Architecture of the co-driver for the CRF implementation of the

Continuous Support function.

vehicle safety in a systematic way by means of three different

subprojects focusing on different time scales: from early holis-

tic preventive safety, to automatic collision avoidance, and to

collision mitigation.

Preventive safety deals with normal driving and with pre-

venting dangerous situations. For this, a “continuous-support

function” has been conceived, which monitors driving and acts

whenever necessary. This functionality integrates several distinct forms of driver assistance into a single human–machine interaction.

To implement the Continuous Support function, the co-driver

metaphor was adopted. It has been implemented within four

demonstrators of differing kinds. The following describes the

Centro Ricerche Fiat (CRF) implementation, which is closest

to the premises in Section II.

C. Co-Driver Architecture (CRF Implementation)

Fig. 4 shows the adopted architecture. The agent’s “body” is

the car, the agent’s “environment” is the road and its users, and

the “motor output” is the longitudinal and lateral control.

This architecture may be seen to resemble that in Fig. 3,

albeit extended in that the perception–action link is here ex-

plicitly expanded into a subsumptive hierarchy of PA loops.

As indicated in Section II, the input/output structure of layers

within the subsumption hierarchy is characterized by (progres-

sive generalizations of) perceptions and actions. The actual

implementation is built up from functions with input and output

characteristics described in the following section.

By comparison to Fig. 2(b), this architecture is enriched

with forward/inverse models, which make it possible to operate

offline for any purposes requiring “extended deliberation,” e.g.,

for human intention recognition.
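The idea of using the agent's own models to recognize human intentions can be sketched as follows. For each candidate goal, the agent computes the control it would itself produce (here, the initial jerk of a minimum-jerk lateral maneuver) and selects the goal whose simulated control is closest to the control observed from the driver. This is only a minimal sketch under stated assumptions: the candidate goals, the fixed planning horizon, the use of lateral jerk as the compared signal, and all function names are hypothetical and are not taken from the CRF implementation.

```python
def initial_jerk(y0, v0, a0, y_target, horizon):
    """Initial jerk of the minimum-jerk maneuver reaching y_target at rest.

    Uses the quintic-polynomial solution with zero final velocity and
    acceleration; the jerk at t = 0 is six times the cubic coefficient.
    """
    delta = y_target - y0
    c3 = (20.0 * delta - 12.0 * v0 * horizon - 3.0 * a0 * horizon**2) / (
        2.0 * horizon**3
    )
    return 6.0 * c3


def infer_intention(state, observed_jerk, goals, horizon):
    """Return the goal label whose simulated control best matches the driver's."""
    y0, v0, a0 = state  # lateral position, velocity, acceleration
    mismatch = {
        label: abs(initial_jerk(y0, v0, a0, y_target, horizon) - observed_jerk)
        for label, y_target in goals.items()
    }
    return min(mismatch, key=mismatch.get)


# Hypothetical candidate goals: target lateral offsets in meters.
GOALS = {"keep_lane": 0.0, "change_left": 3.5, "change_right": -3.5}
```

For instance, `infer_intention((0.0, 0.0, 0.0), observed_jerk, GOALS, horizon=5.0)` compares one observed lateral jerk value against the three hypotheses. A practical system would presumably compare whole control histories and weigh longitudinal control as well, which this sketch omits.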