reconstruction (depth) and sensor fusion, while mentioning other fields such as image processing (denoising and super-resolution), segmentation, motion estimation, tracking and human pose estimation (used for pedestrian movement analysis). The detection part is split into 2-D and 3-D. The 3-D methods are classified as camera-based, LiDAR-based, radar-based and sensor fusion-based. Similarly, depth estimation is categorized as monocular image-based, stereo-based and sensor fusion-based.

A. Image Processing

Perception requires high image quality and resolution. The existing denoising methods fall into two groups: end-to-end CNNs and combinations of CNNs with prior knowledge. A survey of image denoising by deep learning is given in [60].

Deep learning-based super-resolution methods can be roughly categorized as supervised or unsupervised. Supervised methods are further split into pre-upsampling, post-upsampling, progressive upsampling and iterative up-and-down sampling frameworks. Unsupervised methods include zero-shot learning, weakly supervised and prior knowledge-based approaches. An overview of image super-resolution is given in [59].
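
Post-upsampling frameworks extract features at low resolution and enlarge the image only at the end of the network, often with a learnable sub-pixel convolution layer. The sketch below shows only the rearrangement ("pixel shuffle") step of such a layer, in plain Python on nested lists. The function name `pixel_shuffle` and the channel-first `[channel][row][col]` layout are assumptions for illustration, and the convolution that would produce the C·r² input channels is omitted.

```python
def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) feature map into a (C, H*r, W*r) image.

    Output pixel (c, h*r + i, w*r + j) is copied from input channel
    c*r*r + i*r + j at position (h, w), the ordering used by common
    sub-pixel convolution layers.
    """
    channels_in = len(x)
    if channels_in % (r * r) != 0:
        raise ValueError("number of channels must be divisible by r*r")
    c_out = channels_in // (r * r)
    h, w = len(x[0]), len(x[0][0])
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                src = x[c * r * r + i * r + j]  # one low-resolution sub-pixel plane
                for row in range(h):
                    for col in range(w):
                        out[c][row * r + i][col * r + j] = src[row][col]
    return out


# Four 1x1 channels become one 2x2 image (r = 2).
low_res = [[[1]], [[2]], [[3]], [[4]]]
print(pixel_shuffle(low_res, 2))  # [[[1, 2], [3, 4]]]
```

Because the expensive layers run before this step, at low resolution, post-upsampling is usually cheaper than pre-upsampling, where the image is interpolated to full size first.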

B. 2-D Detection

There are good survey papers on this domain [76, 77]. Here we only briefly introduce some important methods from its short history.

Deep learning-based object detectors are roughly divided into one-stage and two-stage methods. The first two-stage method is R-CNN (region-based CNN) [61], which extracts features with a CNN; fast R-CNN [63] improves it with an RoI pooling layer, a single-level form of the SPP (spatial pyramid pooling) [62] layer, which generates a fixed-length representation without rescaling the input; faster R-CNN [64] realizes end-to-end detection by introducing the RPN (region proposal network); FPN (feature pyramid network) [67] proposes a top-down architecture with lateral connections to build a feature pyramid for detecting objects over a wide range of scales; and a GAN-based version of fast R-CNN is proposed in [69].
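
The fixed-length property of SPP can be illustrated with a small pooling routine. This is a minimal sketch in plain Python, assuming a single-channel feature map given as a list of rows and pyramid levels of 1×1, 2×2 and 4×4 bins with max pooling; the function name and the level choice are illustrative, not taken from [62]. Whatever the input height and width, the output has 1 + 4 + 16 = 21 values per channel.

```python
def spatial_pyramid_pool(feature_map, levels=(1, 2, 4)):
    """Max-pool a 2-D feature map into a fixed-length vector.

    For each pyramid level n, the map is split into an n x n grid of bins
    (bin edges rounded outward so every bin is non-empty) and the maximum
    of each bin is kept. The output length is sum(n * n for n in levels),
    independent of the input height and width.
    """
    height, width = len(feature_map), len(feature_map[0])
    pooled = []
    for n in levels:
        for i in range(n):
            r0 = (i * height) // n
            r1 = -((-(i + 1) * height) // n)  # ceiling division
            for j in range(n):
                c0 = (j * width) // n
                c1 = -((-(j + 1) * width) // n)
                pooled.append(max(feature_map[r][c]
                                  for r in range(r0, r1)
                                  for c in range(c0, c1)))
    return pooled


small = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]                   # 3 x 3 map
large = [[r * 7 + c for c in range(7)] for r in range(5)]  # 5 x 7 map
print(len(spatial_pyramid_pool(small)), len(spatial_pyramid_pool(large)))  # 21 21
```

In a real network the same pooling would be applied to every channel of the last convolutional feature map, so the fully connected layers that follow always receive an input of the same size.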

YOLO (You Only Look Once) [66] is the first one-stage detector of the deep learning era; it divides the image into regions and predicts bounding boxes and class probabilities for all regions simultaneously. SSD (Single Shot MultiBox Detector) [65] was the second one-stage detector; it introduced multi-reference and multi-resolution detection, so that objects of different scales are detected on different layers of the network. PeleeNet [72], a combination of DenseNet and MobileNet ideas, has been used for detection as the SSD backbone.
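
The grid idea behind YOLO [66] can be made concrete in a few lines: the grid cell that contains an object's box center is responsible for predicting it, and each cell outputs B boxes (four coordinates plus a confidence each) and C class probabilities. The sketch below assumes box centers normalized to [0, 1) and uses S = 7, B = 2, C = 20, the setting reported for PASCAL VOC in the original YOLO paper; the helper names are hypothetical.

```python
def responsible_cell(cx, cy, s=7):
    """Return (row, col) of the S x S grid cell containing the box centre.

    cx, cy are the box-centre coordinates normalised to [0, 1).
    """
    col = min(int(cx * s), s - 1)
    row = min(int(cy * s), s - 1)
    return row, col


def output_size(s=7, b=2, c=20):
    """Length of the prediction tensor: S * S * (B * 5 + C)."""
    return s * s * (b * 5 + c)


print(responsible_cell(0.5, 0.2))  # (1, 3)
print(output_size())               # 1470, i.e. a 7 x 7 x 30 tensor
```

Since all cells are predicted in one forward pass, there is no separate proposal stage, which is what makes the detector one-stage.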

YOLO has already gone through four versions [66, 68, 71, 75], which further improve the detection accuracy while keeping a very high detection speed:

• YOLO v2 [68] replaces dropout and VGG Net with BN (batch normalization) and GoogLeNet, respectively, introduces anchor boxes as priors in training, accepts images of different sizes by removing the fully connected layers, applies DarkNet for acceleration and uses WordTree to detect 9000 object classes;

• YOLO v3 [71] uses multi-label classification by replacing the softmax function with independent logistic classifiers, applies an FPN-like feature pyramid, and replaces DarkNet-19 with DarkNet-53 (with skip connections);

• YOLO v4 [75] uses Weighted-Residual-Connections (WRC) and Cross-Stage-Partial-Connections (CSP), adopts Mish activation, DropBlock regularization and Cross mini-Batch Normalization (CmBN), applies Self-Adversarial Training (SAT) and Mosaic data augmentation during training, and uses the CIoU loss for bounding box regression.
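
The CIoU loss mentioned for YOLO v4 augments the IoU term with a normalized center-distance penalty and an aspect-ratio consistency term: L = 1 − IoU + ρ²/c² + αv. A minimal sketch of this commonly cited form follows, assuming axis-aligned boxes in (x1, y1, x2, y2) format with positive width and height; it follows the Distance-IoU paper's definition rather than any particular YOLO code base, and the function name and the small `eps` guard are illustrative assumptions.

```python
import math


def ciou_loss(box, target, eps=1e-9):
    """Complete-IoU loss between two boxes given as (x1, y1, x2, y2).

    L = 1 - IoU + rho^2 / c^2 + alpha * v, where rho is the distance between
    box centres, c is the diagonal of the smallest enclosing box, and v
    measures aspect-ratio inconsistency. eps avoids division by zero.
    """
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = target
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Intersection over union.
    iw = max(0.0, min(x2, gx2) - max(x1, gx1))
    ih = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = iw * ih
    union = w * h + gw * gh - inter
    iou = inter / (union + eps)

    # Squared centre distance, normalised by the enclosing box diagonal.
    rho2 = ((x1 + x2) / 2 - (gx1 + gx2) / 2) ** 2 + ((y1 + y2) / 2 - (gy1 + gy2) / 2) ** 2
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(w / h)) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v


# Non-overlapping boxes still get a useful gradient signal from the distance term.
print(round(ciou_loss((0, 0, 1, 1), (2, 0, 3, 1)), 4))  # 1.4
```

Unlike a plain 1 − IoU loss, which is constant (equal to 1) for all non-overlapping boxes, the distance term keeps pulling the predicted box toward the target.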

RetinaNet [70] introduces a new loss function, the "focal loss", to handle the extreme foreground-background class imbalance. Focal loss enables one-stage detectors to achieve accuracy comparable to that of two-stage detectors while maintaining very high detection speed. VoVNet [73], another variation of DenseNet built from One-Shot Aggregation (OSA) modules, is applied for efficient object detection in both one-stage and two-stage detectors. EfficientDet [74] combines a weighted bi-directional FPN (BiFPN) with EfficientNet backbones.
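
For a binary foreground/background decision, the focal loss of RetinaNet [70] can be written as FL(p_t) = −α_t (1 − p_t)^γ log(p_t), where p_t is the predicted probability of the true class. A minimal sketch is shown below; the defaults α = 0.25 and γ = 2 are the values reported as working well in the RetinaNet paper, and the function name is illustrative. Setting γ = 0 and α_t = 1 recovers ordinary cross-entropy.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one prediction.

    p: predicted probability that the example is foreground (0 < p < 1).
    y: ground-truth label, 1 for foreground and 0 for background.
    """
    p_t = p if y == 1 else 1 - p
    alpha_t = alpha if y == 1 else 1 - alpha
    # The (1 - p_t) ** gamma factor shrinks the loss of well-classified examples.
    return -alpha_t * (1 - p_t) ** gamma * math.log(p_t)


easy_background = focal_loss(0.01, 0)  # confidently correct negative
hard_background = focal_loss(0.9, 0)   # confidently wrong negative
print(easy_background < hard_background)  # True
```

Because the vast majority of candidate locations are easy background, down-weighting them this way keeps the summed loss from being dominated by examples the detector already handles.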

Recently, anchor-free methods have attracted more attention owing to the proposal of FPN and Focal Loss [78-90]. Anchors are reference boxes that are refined into the final detection locations; they first appeared in faster R-CNN and were then adopted by SSD and YOLO v2. Anchors are defined on a grid over the image coordinates at all possible locations, with different scales and aspect ratios. However, it has been found that fewer anchors improve speed but deteriorate accuracy.
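
The anchor grid described above can be sketched as follows. Assumptions for illustration: anchors are centered on every cell of a feature map with a given stride, each anchor has area scale² and aspect ratio height/width, and boxes are returned as (x1, y1, x2, y2) in image pixels. The feature-map size, scales and ratios in the usage lines are example values in the style of faster R-CNN (three scales and three ratios, nine anchors per location), and the function name is hypothetical.

```python
import math


def generate_anchors(feat_h, feat_w, stride, scales, ratios):
    """Place len(scales) * len(ratios) anchors at every feature-map cell.

    Each anchor has area scale**2 and aspect ratio h / w == ratio, and is
    centred on the image position of its cell. Boxes are (x1, y1, x2, y2).
    """
    anchors = []
    for row in range(feat_h):
        for col in range(feat_w):
            cx = (col + 0.5) * stride  # cell centre in image pixels
            cy = (row + 0.5) * stride
            for scale in scales:
                for ratio in ratios:
                    w = scale / math.sqrt(ratio)
                    h = scale * math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


# Example: a 38 x 50 feature map with stride 16 and 3 scales x 3 ratios.
boxes = generate_anchors(38, 50, 16, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0))
print(len(boxes))  # 17100 = 38 * 50 * 9
```

The anchor count grows as H × W × (number of scales) × (number of aspect ratios), so removing scales or ratios directly cuts the number of boxes to classify and regress, which is the speed/accuracy trade-off noted above.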

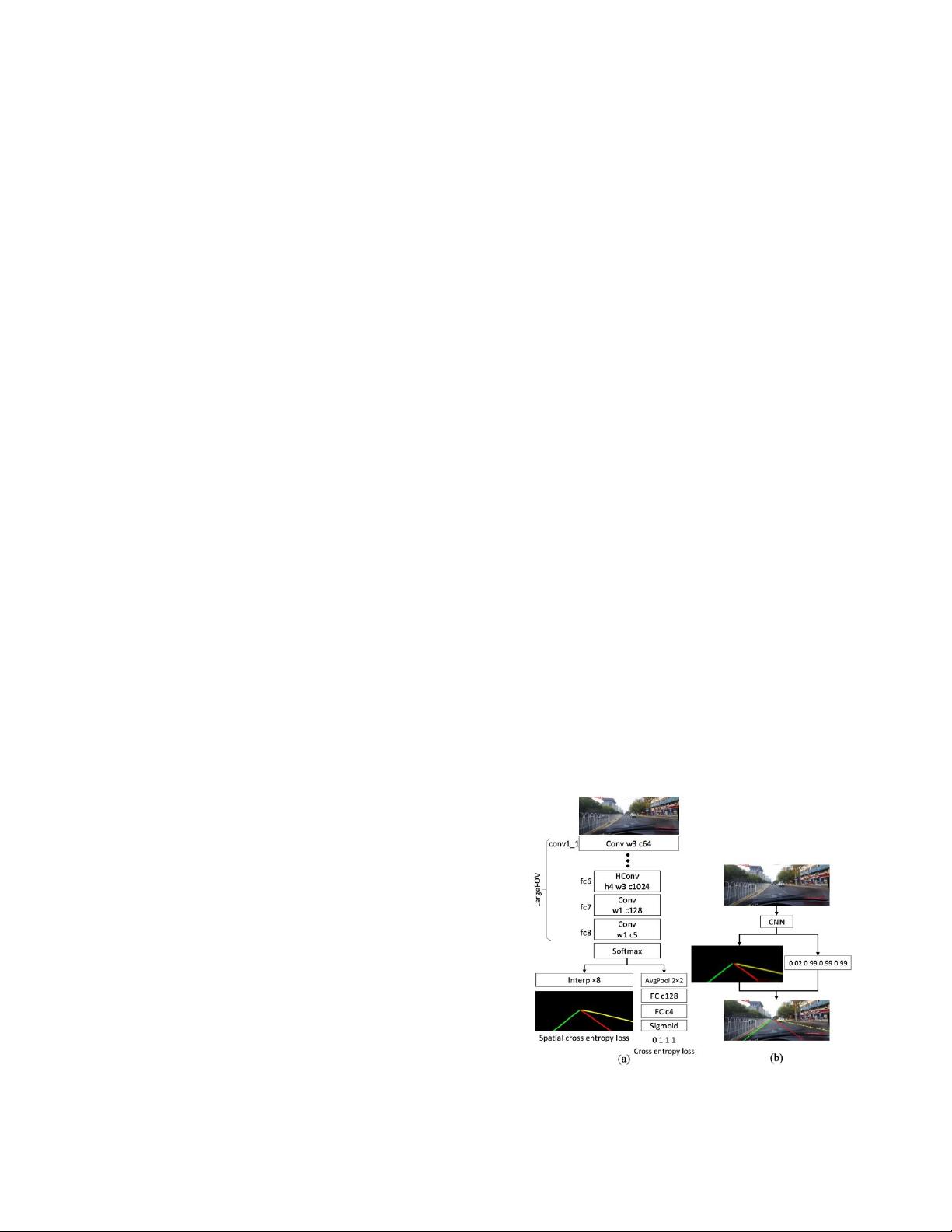

Fig. 5. Spatial CNN for lane detection, from reference [94].