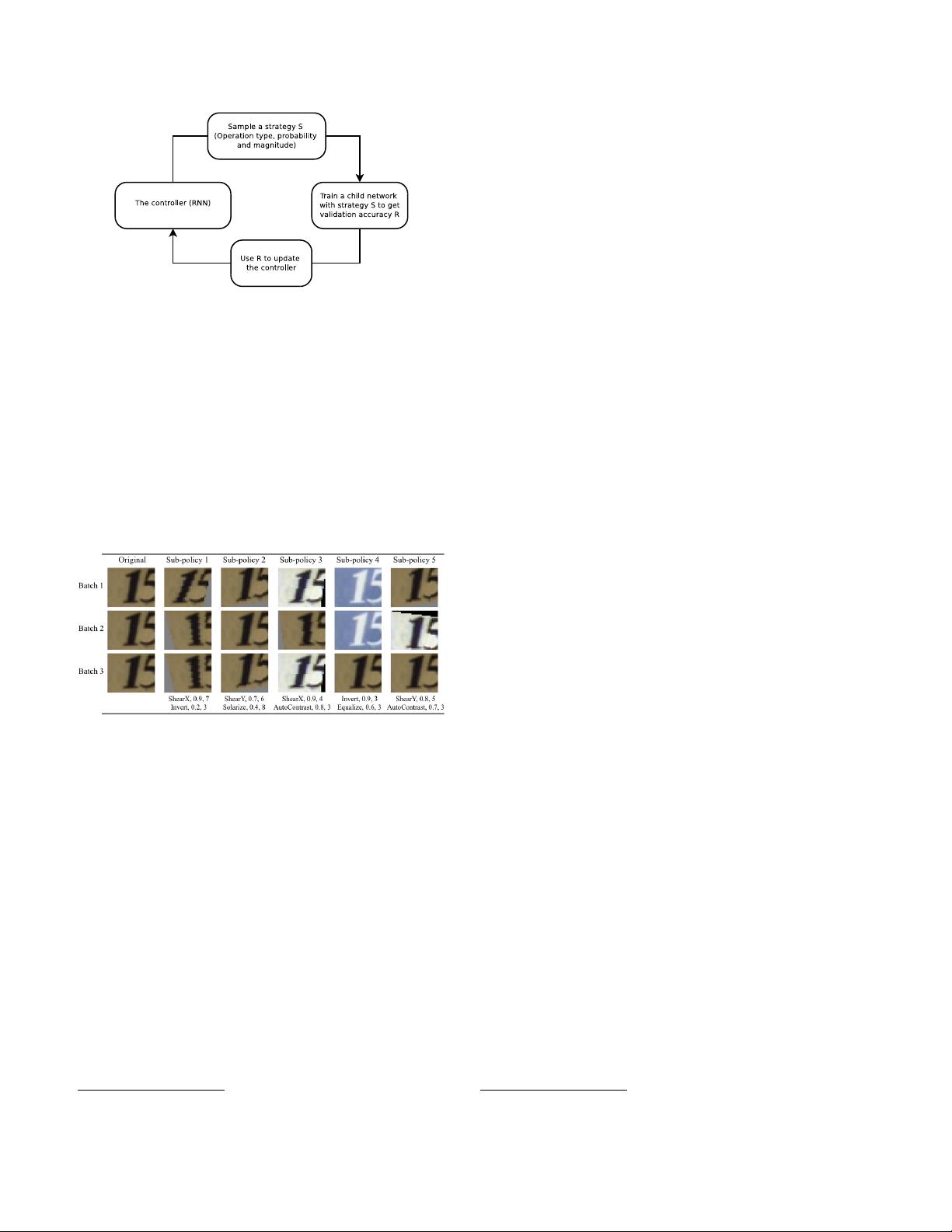

Figure 1. Overview of our framework of using a search method (e.g., Reinforcement Learning) to search for better data augmentation policies. A controller RNN predicts an augmentation policy from the search space. A child network with a fixed architecture is trained to convergence, achieving accuracy R. The reward R is then used with the policy gradient method to update the controller so that it can generate better policies over time.

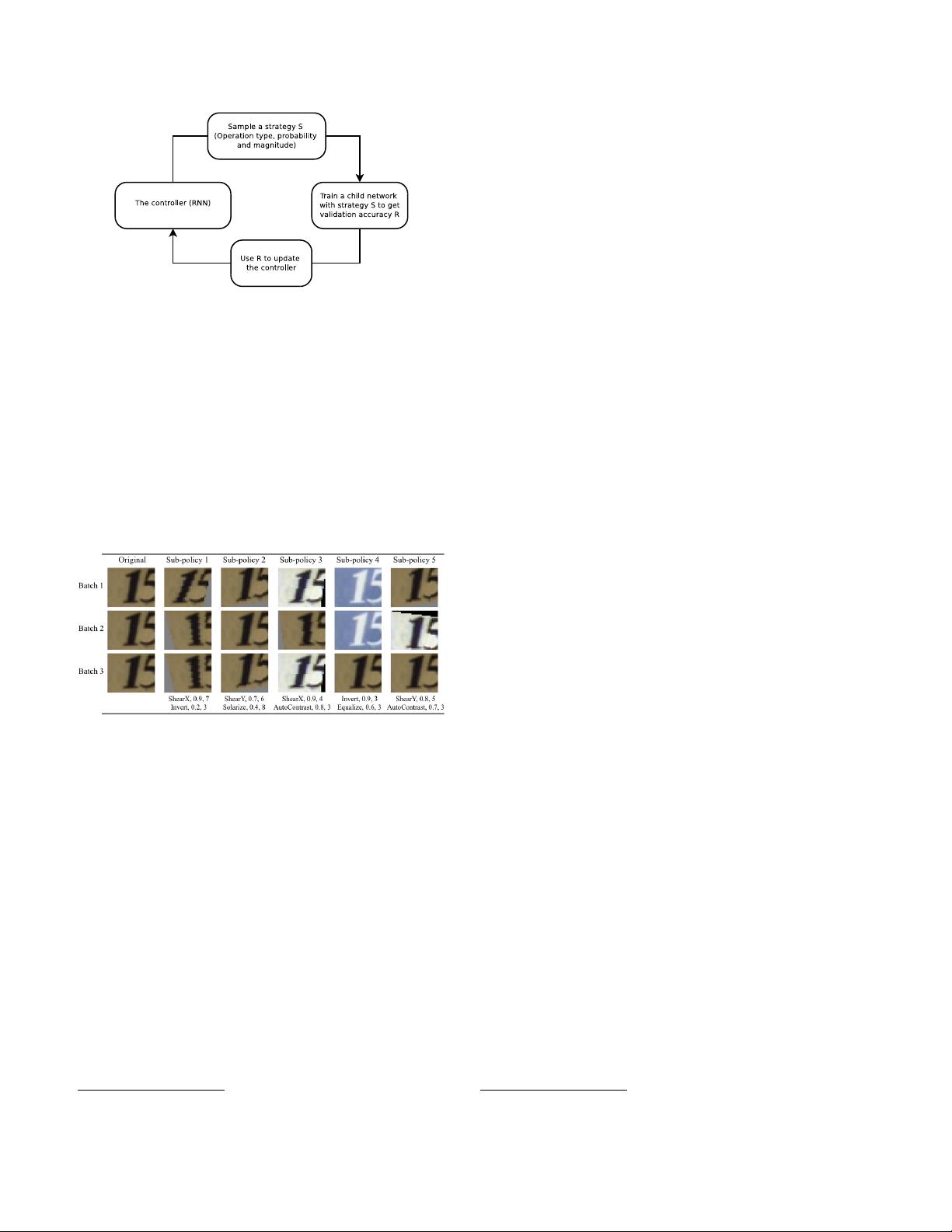

probability of applying ShearX is 0.9, and when applied, has a magnitude of 7 out of 10. We then apply Invert with probability of 0.8. The Invert operation does not use the magnitude information. We emphasize that these operations are applied in the specified order.

Figure 2. One of the policies found on SVHN, and how it can be used to generate augmented data given an original image used to train a neural network. The policy has 5 sub-policies. For every image in a mini-batch, we choose a sub-policy uniformly at random to generate a transformed image to train the neural network. Each sub-policy consists of 2 operations, and each operation is associated with two numerical values: the probability of calling the operation, and the magnitude of the operation. Because an operation is called only with some probability, it may not be applied in a given mini-batch. However, if applied, it is applied with the fixed magnitude. We highlight the stochasticity in applying the sub-policies by showing how one image can be transformed differently in different mini-batches, even with the same sub-policy. As explained in the text, on SVHN, geometric transformations are picked more often by AutoAugment. It can be seen why Invert is a commonly selected operation on SVHN, since the numbers in the image are invariant to that transformation.
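The stochastic application described in the caption can be sketched as follows. This is a minimal illustration, not the authors' implementation: images are stood in for by plain lists of pixel intensities, and `TOY_OPS`, `ToyBrighten`, `apply_sub_policy`, and `augment_batch` are hypothetical names introduced here.

```python
import random

def apply_sub_policy(image, sub_policy, ops, rng=random):
    """Apply each (name, probability, magnitude) operation in the given order.

    `ops` maps an operation name to a callable f(image, magnitude).
    """
    for name, prob, magnitude in sub_policy:
        # Each operation fires independently with its own probability;
        # when it fires, it always uses its fixed magnitude.
        if rng.random() < prob:
            image = ops[name](image, magnitude)
    return image

def augment_batch(batch, policy, ops, rng=random):
    """Choose one sub-policy uniformly at random for every image in the batch."""
    return [apply_sub_policy(img, rng.choice(policy), ops, rng) for img in batch]

# Toy stand-ins (assumptions) acting on lists of pixel intensities in [0, 255].
TOY_OPS = {
    "Invert": lambda img, mag: [255 - p for p in img],  # ignores the magnitude
    "ToyBrighten": lambda img, mag: [min(255, p + 10 * mag) for p in img],
}
```

Because the draw `rng.random()` lies in [0, 1), an operation with probability 0 is never applied, so the same image can come out unchanged or transformed by one or both operations from one mini-batch to the next.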

The operations we used in our experiments are from PIL, a popular Python image library.¹ For generality, we considered all functions in PIL that accept an image as input and output an image. We additionally used two other promising augmentation techniques: Cutout [12] and SamplePairing [24]. The operations we searched over are ShearX/Y, TranslateX/Y, Rotate, AutoContrast, Invert, Equalize, Solarize, Posterize, Contrast, Color, Brightness, Sharpness, Cutout [12], Sample Pairing [24].² In total, we have 16 operations in our search space. Each operation also comes with a default range of magnitudes, which will be described in more detail in Section 4. We discretize the range of magnitudes into 10 values (uniform spacing) so that we can use a discrete search algorithm to find them. Similarly, we also discretize the probability of applying that operation into 11 values (uniform spacing). Finding each sub-policy becomes a search problem in a space of $(16 \times 10 \times 11)^2$ possibilities.

¹ https://pillow.readthedocs.io/en/5.1.x/
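As a rough illustration of this discretization, the sketch below builds the two grids and counts the choices for one sub-policy. The function names are hypothetical, the magnitude range passed in is a placeholder (the actual per-operation ranges are in Table 1 of the Appendix), and including both endpoints in the grid is an assumption.

```python
def probability_levels(n=11):
    """n uniformly spaced probabilities from 0.0 to 1.0 inclusive."""
    return [i / (n - 1) for i in range(n)]

def magnitude_levels(low, high, n=10):
    """n uniformly spaced magnitudes from low to high (endpoints included: an assumption)."""
    step = (high - low) / (n - 1)
    return [low + i * step for i in range(n)]

NUM_OPS, NUM_MAGNITUDES, NUM_PROBS = 16, 10, 11

# One operation is a choice of (type, magnitude, probability).
choices_per_operation = NUM_OPS * NUM_MAGNITUDES * NUM_PROBS

# A sub-policy is an ordered pair of operations.
sub_policy_space = choices_per_operation ** 2
```

With these counts, `choices_per_operation` is 1760 and `sub_policy_space` is 3,097,600.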

Our goal, however, is to find 5 such sub-policies concurrently in order to increase diversity. The search space with 5 sub-policies then has roughly $(16 \times 10 \times 11)^{10} \approx 2.9 \times 10^{32}$ possibilities.
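The size of the full search space can be checked with a few lines of arithmetic. The exponent 10 comes from 5 sub-policies with 2 operations each; the variable names are introduced here for illustration only.

```python
NUM_SUB_POLICIES = 5
OPS_PER_SUB_POLICY = 2

# Choices for a single operation: 16 types x 10 magnitudes x 11 probabilities.
choices_per_operation = 16 * 10 * 11

# Every one of the 5 * 2 = 10 operations is chosen independently.
full_policy_space = choices_per_operation ** (NUM_SUB_POLICIES * OPS_PER_SUB_POLICY)

print(f"{full_policy_space:.1e}")  # prints 2.9e+32
```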

The 16 operations we used and their default range of values are shown in Table 1 in the Appendix. Notice that there is no explicit “Identity” operation in our search space; this operation is implicit, and can be achieved by calling an operation with its probability set to 0.

Search algorithm details: The search algorithm that we used in our experiment uses Reinforcement Learning, inspired by [71, 4, 72, 5]. The search algorithm has two components: a controller, which is a recurrent neural network, and the training algorithm, which is the Proximal Policy Optimization algorithm [53]. At each step, the controller predicts a decision produced by a softmax; the prediction is then fed into the next step as an embedding. In total the controller has 30 softmax predictions in order to predict 5 sub-policies, each with 2 operations, and each operation requiring an operation type, magnitude and probability (5 × 2 × 3 = 30).
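To make the count of 30 decisions concrete, the sketch below decodes a flat sequence of 30 indices into 5 sub-policies. It does not model the RNN or its softmaxes: `sample_decisions` is a hypothetical stand-in that draws each index uniformly, and the per-operation ordering (type, magnitude, probability) is assumed from the order in which the text lists them.

```python
import random

NUM_SUB_POLICIES, OPS_PER_SUB_POLICY = 5, 2
# Number of classes for each decision, in the assumed order:
# operation type, magnitude level, probability level.
DECISION_SIZES = (16, 10, 11)

def sample_decisions(rng):
    """Stand-in for the controller: uniform draws instead of learned softmaxes."""
    n_ops = NUM_SUB_POLICIES * OPS_PER_SUB_POLICY
    return [rng.randrange(size) for _ in range(n_ops) for size in DECISION_SIZES]

def decode(decisions):
    """Group 30 indices into 5 sub-policies of 2 (type, magnitude, probability) triples."""
    if len(decisions) != 30:
        raise ValueError("expected 30 decisions")
    triples = [tuple(decisions[i:i + 3]) for i in range(0, 30, 3)]
    return [triples[i:i + OPS_PER_SUB_POLICY]
            for i in range(0, len(triples), OPS_PER_SUB_POLICY)]
```

For example, `decode(sample_decisions(random.Random(0)))` returns a list of 5 sub-policies, each holding 2 index triples.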

The training of controller RNN: The controller is trained with a reward signal, which measures how much the policy improves the generalization of a “child model” (a neural network trained as part of the search process). In our experiments, we set aside a validation set to measure the generalization of a child model. A child model is trained with augmented data generated by applying the 5 sub-policies on the training set (which does not contain the validation set). For each example in the mini-batch, one of the 5 sub-policies is chosen randomly to augment the image. The child model is then evaluated on the validation set to measure the accuracy, which is used as the reward signal to train the recurrent network controller. On each dataset, the controller samples about 15,000 policies.
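The outer loop implied by this paragraph can be sketched generically. This is only a skeleton under stated assumptions: the four callables are hypothetical placeholders supplied by the caller, and the controller update is left abstract rather than implementing Proximal Policy Optimization.

```python
def search(sample_policy, train_child, evaluate, update_controller, num_policies):
    """Sample policies, score each by child validation accuracy, and update the controller.

    All four callables are placeholders (assumptions), not part of any library:
      sample_policy()              -> a policy proposed by the controller
      train_child(policy)          -> a child model trained on the augmented training split
      evaluate(child)              -> accuracy on the held-out validation split
      update_controller(policy, r) -> one controller update using reward r
    """
    history = []
    for _ in range(num_policies):
        policy = sample_policy()
        child = train_child(policy)
        reward = evaluate(child)  # validation accuracy serves as the reward
        update_controller(policy, reward)
        history.append((policy, reward))
    return history
```

Returning the full history of (policy, reward) pairs is a design choice made here so that the caller can decide how to select among the sampled policies afterwards.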

Architecture of controller RNN and training hyperparameters: We follow the training procedure and hyperparameters from [72] for training the controller. More con-

² Details about these operations are listed in Table 1 in the Appendix.