
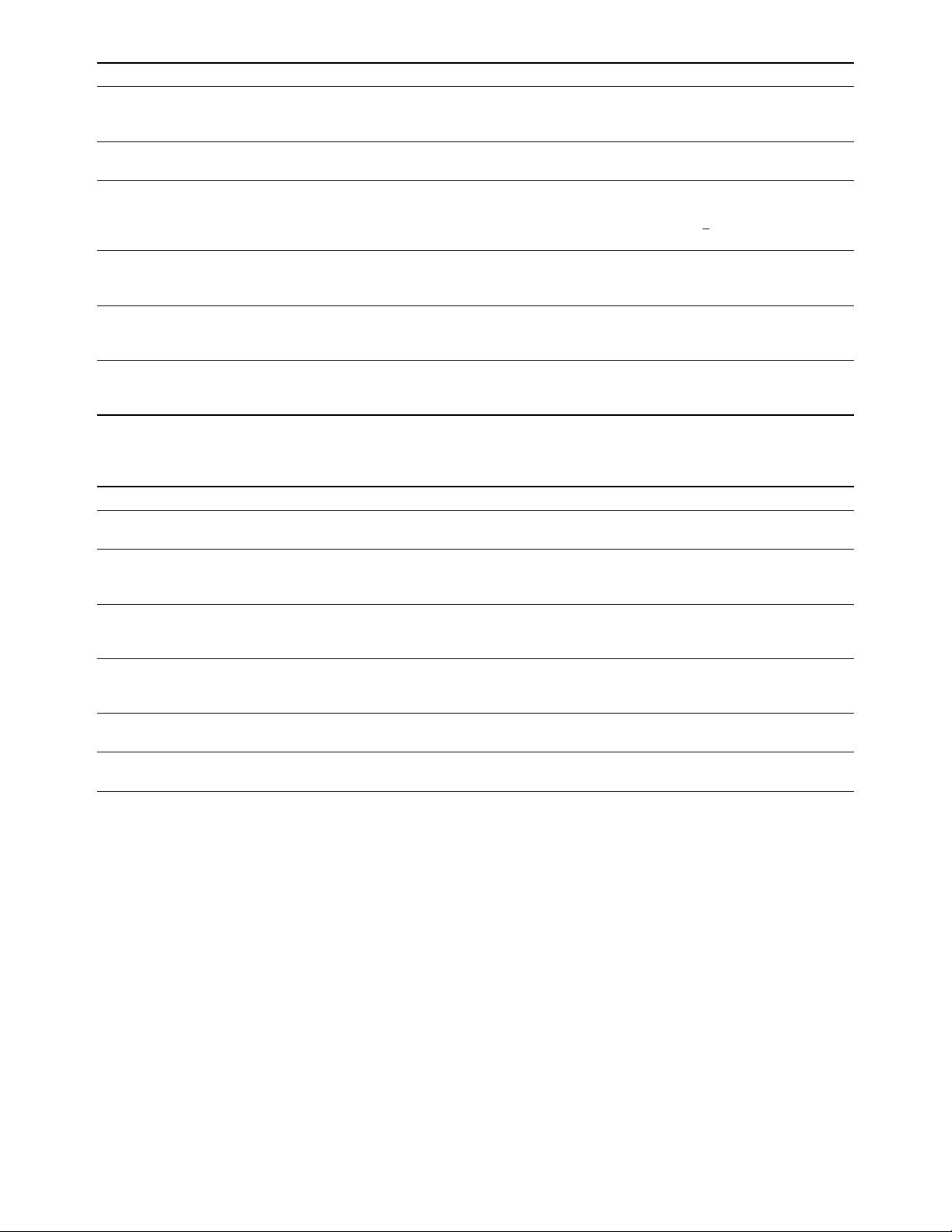

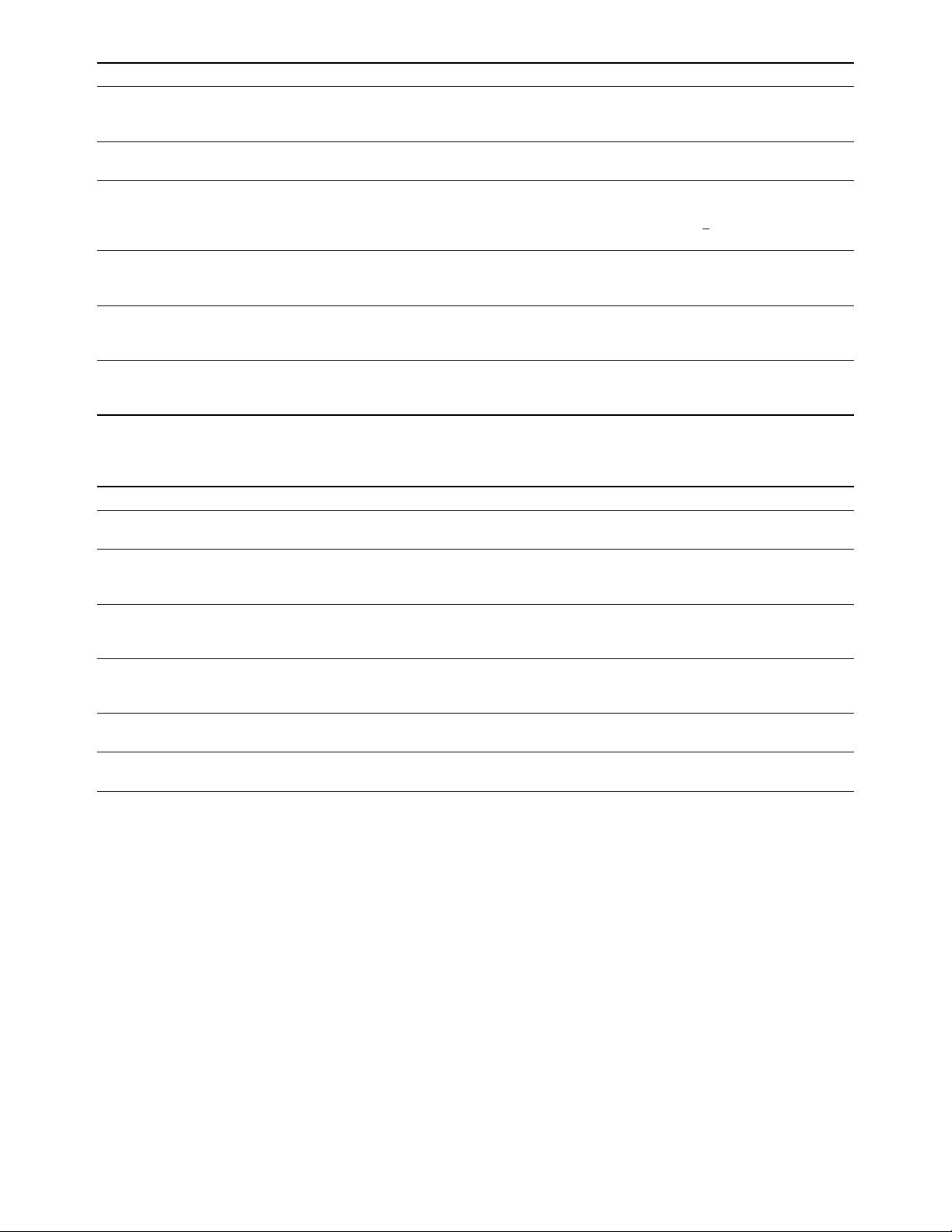

| Dataset | Year | Description | #Cites |
|---|---|---|---|
| MIT Ped. [30] | 2000 | One of the first pedestrian detection datasets. Consists of ∼500 training and ∼200 testing images (built based on the LabelMe database). url: http://cbcl.mit.edu/software-datasets/PedestrianData.html | 1515 |
| INRIA [12] | 2005 | One of the most famous and important pedestrian detection datasets in the early days. Introduced by the HOG paper [12]. url: http://pascal.inrialpes.fr/data/human/ | 24705 |
| Caltech [59, 60] | 2009 | One of the most famous pedestrian detection datasets and benchmarks. Consists of ∼190,000 pedestrians in the training set and ∼160,000 in the testing set. The metric is Pascal-VOC @ 0.5 IoU. url: http://www.vision.caltech.edu/Image_Datasets/CaltechPedestrians/ | 2026 |
| KITTI [61] | 2012 | One of the most famous datasets for traffic scene analysis. Captured in Karlsruhe, Germany. Consists of ∼100,000 pedestrians (∼6,000 individuals). url: http://www.cvlibs.net/datasets/kitti/index.php | 2620 |
| CityPersons [62] | 2017 | Built based on the CityScapes dataset [63]. Consists of ∼19,000 pedestrians in the training set and ∼11,000 in the testing set. Same metric as Caltech. url: https://bitbucket.org/shanshanzhang/citypersons | 50 |
| EuroCity [64] | 2018 | The largest pedestrian detection dataset so far. Captured from 31 cities in 12 European countries. Consists of ∼238,000 instances in ∼47,000 images. Same metric as Caltech. | 1 |

TABLE 2

An overview of some popular pedestrian detection datasets.
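Several entries above use the Pascal-VOC criterion of 0.5 IoU. As a minimal sketch, assuming boxes given as (x1, y1, x2, y2) in continuous coordinates (the helper names `iou` and `is_true_positive` are illustrative, not from any official toolkit), a detection counts as correct when its intersection-over-union with a ground-truth box reaches the threshold. This sketch uses `>=`; some implementations use a strict `>`.

```python
from typing import Tuple

# Hypothetical box convention: (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    # Corners of the intersection rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, gt: Box, thresh: float = 0.5) -> bool:
    """VOC-style criterion: the prediction matches if IoU >= thresh (assumed >=)."""
    return iou(pred, gt) >= thresh


if __name__ == "__main__":
    # Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1) = 1/7.
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

For example, a 10×10 ground truth and a prediction covering exactly half of it give IoU = 0.5, which passes the threshold, while covering 40% of it does not.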

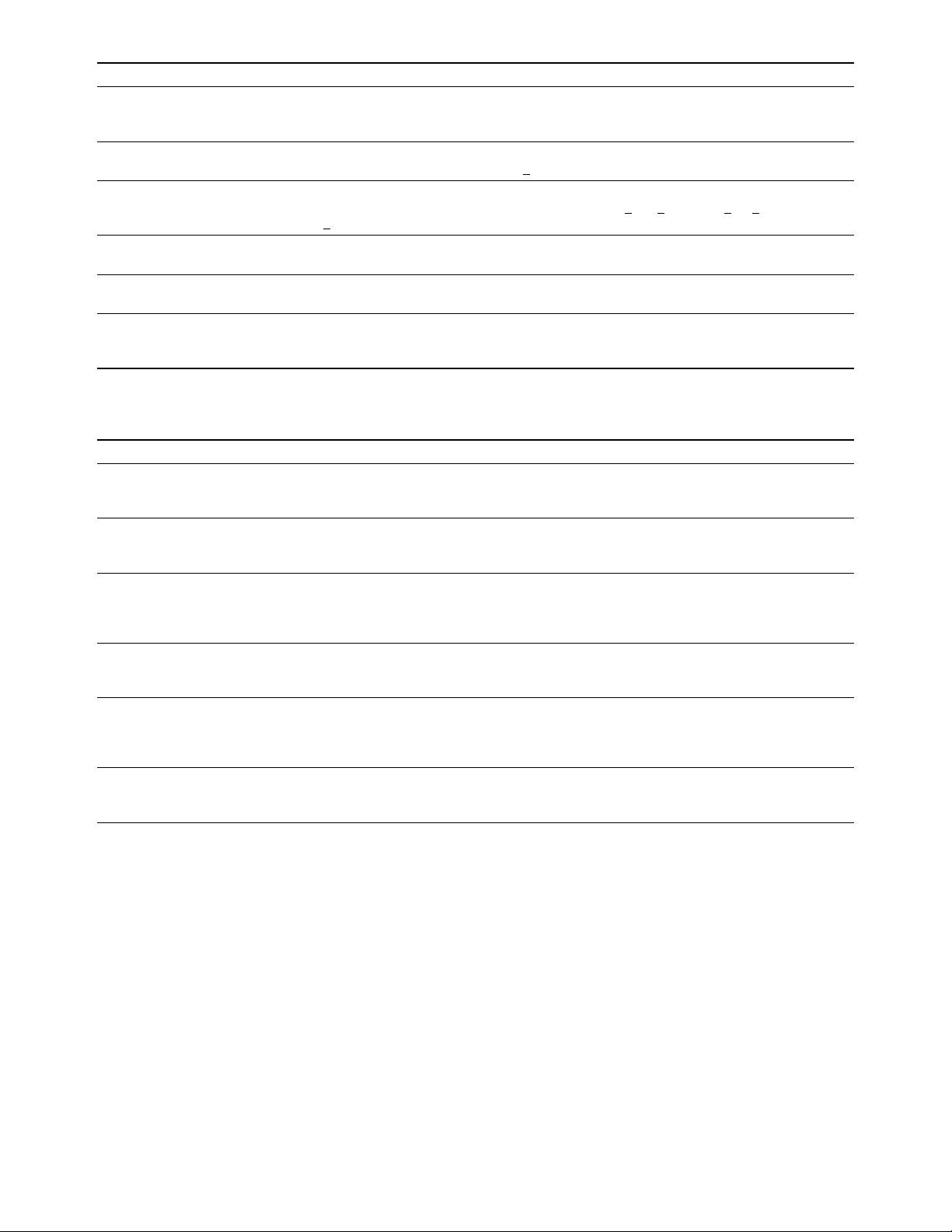

| Dataset | Year | Description | #Cites |
|---|---|---|---|
| FDDB [65] | 2010 | Consists of ∼2,800 images and ∼5,000 faces from Yahoo!, with occlusions, pose changes, out-of-focus blur, etc. url: http://vis-www.cs.umass.edu/fddb/index.html | 531 |
| AFLW [66] | 2011 | Consists of ∼26,000 faces and 22,000 images from Flickr with rich facial landmark annotations. url: https://www.tugraz.at/institute/icg/research/team-bischof/lrs/downloads/aflw/ | 414 |
| IJB [67] | 2015 | IJB-A/B/C consist of over 50,000 images and video frames, for both recognition and detection tasks. url: https://www.nist.gov/programs-projects/face-challenges | 279 |
| WiderFace [68] | 2016 | One of the largest face detection datasets. Consists of ∼32,000 images and 394,000 faces with rich annotations, e.g., scale, occlusion, pose, etc. url: http://mmlab.ie.cuhk.edu.hk/projects/WIDERFace/ | 193 |
| UFDD [69] | 2018 | Consists of ∼6,000 images and ∼11,000 faces. Variations include weather-based degradation, motion blur, focus blur, etc. url: http://www.ufdd.info/ | 1 |
| WildestFaces [70] | 2018 | Contains ∼68,000 video frames and ∼2,200 shots of 64 fighting celebrities in unconstrained scenarios. The dataset has not been released yet. | 2 |

TABLE 3

An overview of some popular face detection datasets.

robot arm trying to grasp a spanner).

Recently, the evaluation protocol has been further developed for the Open Images dataset, e.g., by taking into account group-of boxes and non-exhaustive image-level category hierarchies. Some researchers have also proposed alternative metrics, e.g., “localization recall precision” [94]. Despite these recent changes, VOC/COCO-based mAP is still the most frequently used evaluation metric for object detection.
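Because VOC/COCO-based mAP remains the standard metric, the following is a minimal sketch of VOC-style average precision for a single class. It assumes continuous box coordinates (the official VOC devkit adds +1 pixel to widths and heights), greedy matching of score-ranked detections at IoU ≥ 0.5, all-point interpolation as used from VOC2010 onward, and no handling of "difficult" instances. The function names are illustrative, not taken from any official toolkit. mAP is the mean of this value over classes; COCO additionally averages over IoU thresholds from 0.5 to 0.95 in steps of 0.05 and uses 101-point interpolation, which this sketch does not reproduce.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # assumed (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: List[Tuple[str, float, Box]],  # (image_id, score, box), one class
    ground_truth: Dict[str, List[Box]],        # image_id -> GT boxes of that class
    iou_thresh: float = 0.5,
) -> float:
    num_gt = sum(len(boxes) for boxes in ground_truth.values())
    if num_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp: List[int] = []
    # Visit detections from most to least confident.
    for img, _score, box in sorted(detections, key=lambda d: -d[1]):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # True positive only if the best-overlapping GT passes the threshold
        # and has not already been claimed by a higher-scoring detection.
        if best_j >= 0 and best_iou >= iou_thresh and not matched[img][best_j]:
            matched[img][best_j] = True
            tp.append(1)
        else:
            tp.append(0)  # false positive: low overlap or duplicate detection
    # Precision and recall along the ranked list.
    recalls, precisions, cum_tp = [], [], 0
    for k, t in enumerate(tp, start=1):
        cum_tp += t
        recalls.append(cum_tp / num_gt)
        precisions.append(cum_tp / k)
    # All-point interpolation: make precision non-increasing in rank,
    # then sum precision times each recall increment.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


if __name__ == "__main__":
    gt = {"img0": [(0, 0, 10, 10)]}
    dets = [("img0", 0.9, (50, 50, 60, 60)), ("img0", 0.8, (0, 0, 10, 10))]
    print(average_precision(dets, gt))
```

In the usage example, a false positive is ranked above the only true positive, so precision at full recall is 1/2 and the sketch returns an AP of 0.5.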

2.3 Technical Evolution in Object Detection

In this section, we introduce some important building blocks of a detection system and their technical evolution over the past 20 years.

2.3.1 Early Time’s Dark Knowledge

Early object detection (before 2000) did not follow a unified detection philosophy such as sliding-window detection. Detectors at that time were usually designed based on low-level and mid-level vision, as follows.

• Components, shapes and edges

“Recognition-by-components”, an important cognitive theory [98], has long been the core idea of image recognition and object detection [13, 99, 100]. Some early researchers framed object detection as a measurement of similarity between object components, shapes, and contours, using techniques such as Distance Transforms [101], Shape Contexts [35], and Edgelet [102]. Despite promising initial results,

things did not work out well on more complicated detec-