3D Semantic Segmentation with Submanifold Sparse Convolutional Networks

Benjamin Graham

Facebook AI Research

benjamingraham@fb.com

Martin Engelcke∗

University of Oxford

martin@robots.ox.ac.uk

Laurens van der Maaten

Facebook AI Research

lvdmaaten@fb.com

Abstract

Convolutional networks are the de facto standard for analyzing spatio-temporal data such as images, videos, and 3D shapes. Whilst some of this data is naturally dense (e.g., photos), many other data sources are inherently sparse. Examples include 3D point clouds that were obtained using a LiDAR scanner or RGB-D camera. Standard “dense” implementations of convolutional networks are very inefficient when applied to such sparse data. We introduce new sparse convolutional operations that are designed to process spatially-sparse data more efficiently, and use them to develop spatially-sparse convolutional networks. We demonstrate the strong performance of the resulting models, called submanifold sparse convolutional networks (SSCNs), on two tasks involving semantic segmentation of 3D point clouds. In particular, our models outperform all prior state-of-the-art on the test set of a recent semantic segmentation competition.

1. Introduction

Convolutional networks (ConvNets) constitute the state-of-the-art method for a wide range of tasks that involve the analysis of data with spatial and/or temporal structure, such as photos, videos, or 3D surface models. While such data frequently comprises a densely populated (2D or 3D) grid, other datasets are naturally sparse. For instance, handwriting is made up of one-dimensional lines in two-dimensional space, pictures made by RGB-D cameras are three-dimensional point clouds, and polygonal mesh models form two-dimensional surfaces in 3D space.

The curse of dimensionality applies, in particular, to data

that lives on grids that have three or more dimensions: the

number of points on the grid grows exponentially with its

dimensionality. In such scenarios, it becomes increasingly

important to exploit data sparsity whenever possible in or-

der to reduce the computational resources needed for data

processing. Indeed, exploiting sparsity is paramount when

∗

Work done while interning at Facebook AI Research

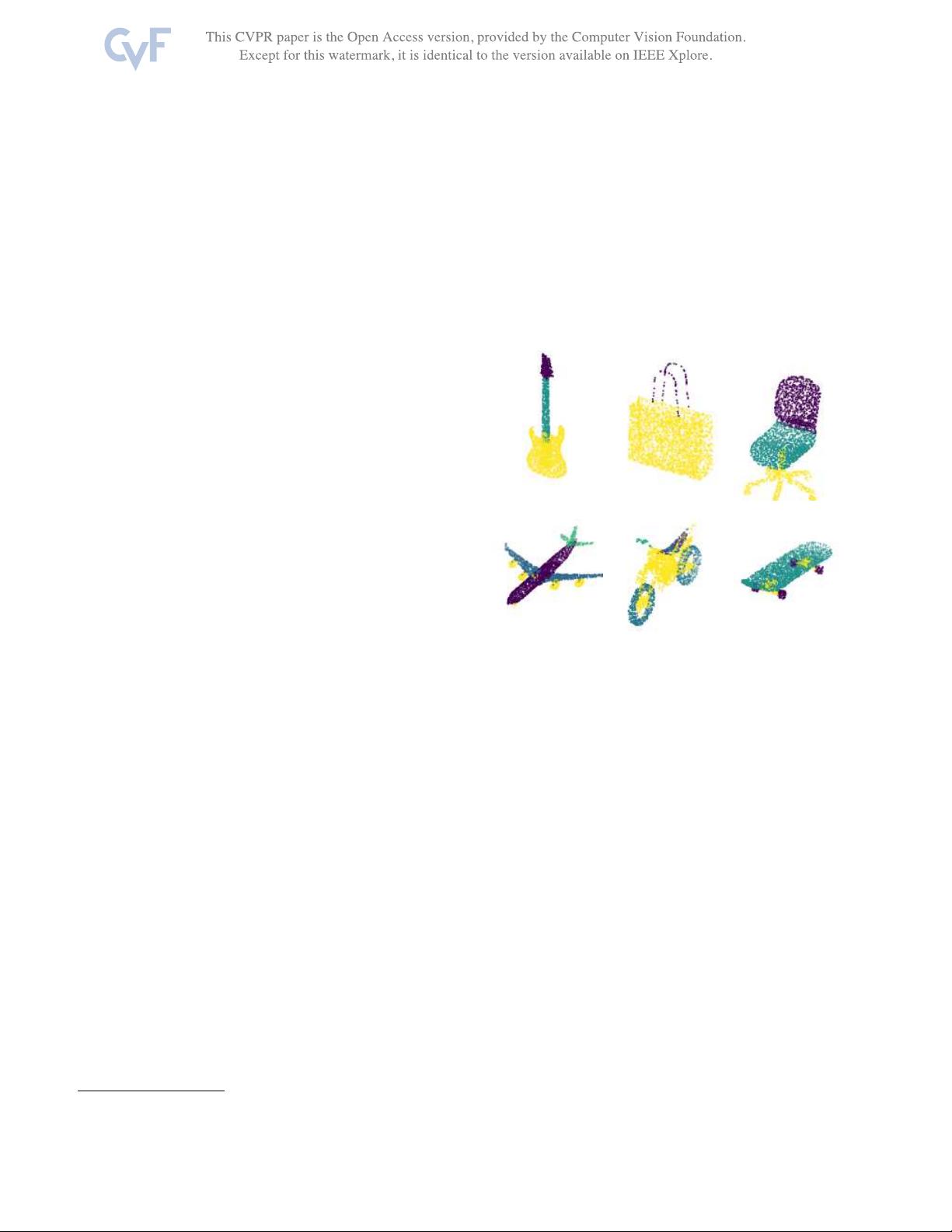

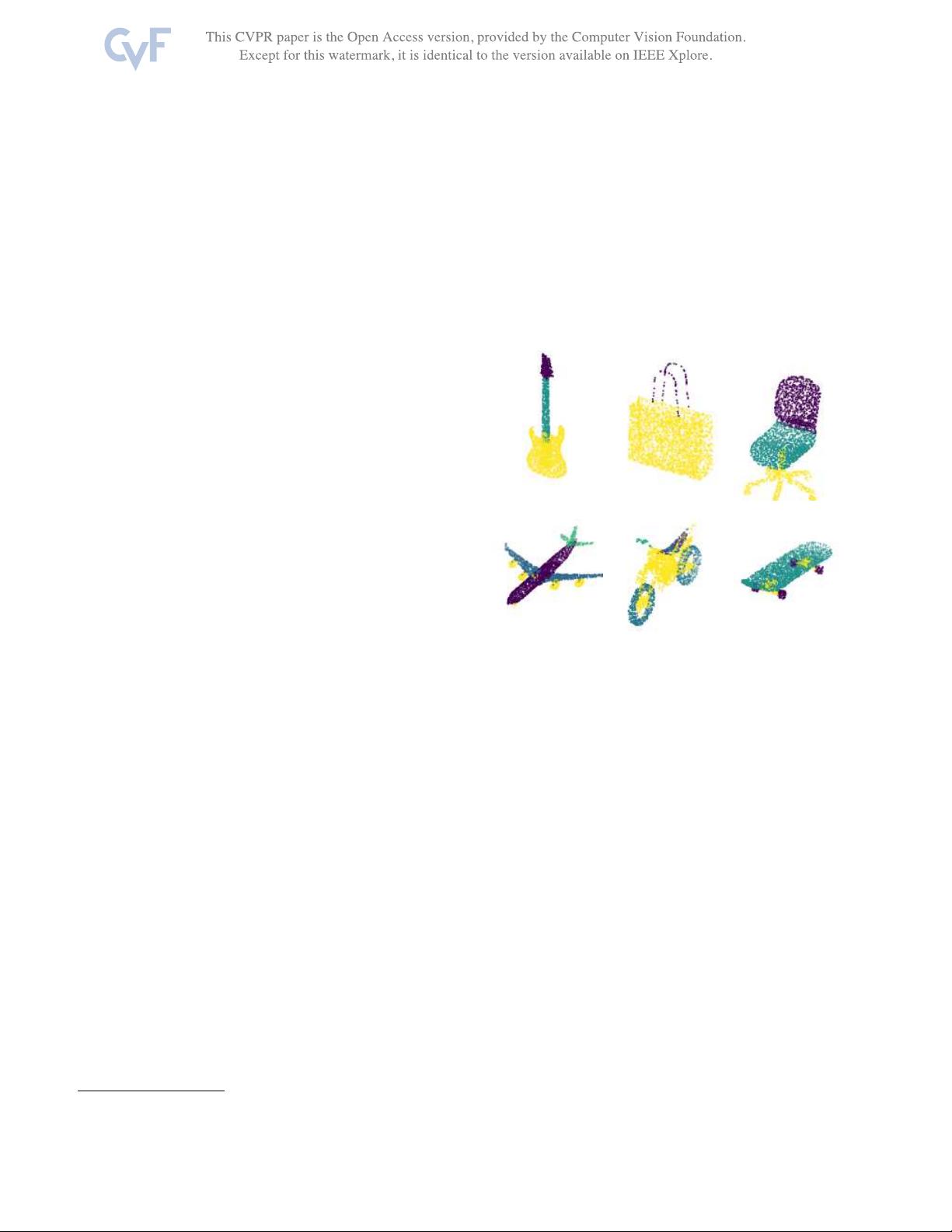

Figure 1: Examples of 3D point clouds of objects from the

ShapeNet part-segmentation challenge [

23]. The colors of

the points represent the part labels.

analyzing, e.g., RGB-D videos which are sparsely popu-

lated 4D structures.
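The exponential growth of the grid with its dimensionality can be made concrete with a small calculation. The sketch below is illustrative only: the resolution of 64 cells per axis and the count of 1,000 occupied sites are hypothetical values, not figures from our experiments, and the function names are our own.

```python
def dense_grid_sites(resolution: int, dims: int) -> int:
    """Number of sites in a dense grid with `resolution` cells per axis."""
    return resolution ** dims


def occupancy(active_sites: int, resolution: int, dims: int) -> float:
    """Fraction of grid sites that are active (non-empty)."""
    return active_sites / dense_grid_sites(resolution, dims)


if __name__ == "__main__":
    resolution = 64       # hypothetical number of cells per axis
    active_sites = 1_000  # hypothetical number of occupied sites
    for dims in (2, 3, 4):
        total = dense_grid_sites(resolution, dims)
        frac = occupancy(active_sites, resolution, dims)
        print(f"{dims}D grid: {total:,} sites, {frac:.4%} occupied")
```

For a fixed number of occupied sites, each additional dimension multiplies the size of the dense grid by the resolution, so the occupied fraction shrinks by the same factor.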

Traditional convolutional network implementations are optimized for data that lives on densely populated grids, and cannot process sparse data efficiently. More recently, a number of convolutional network implementations have been presented that are tailored to work efficiently on sparse data [3, 4, 18]. Mathematically, some of these implementations are identical to regular convolutional networks, but they require fewer computational resources in terms of FLOPs and/or memory [3, 4]. Prior work uses a sparse version of the im2col operation that restricts computation and storage to “active” sites [4], or uses the voting algorithm from [22] to prune unnecessary multiplications by zeros [3]. OctNets [18] modify the convolution operator to produce “averaged” hidden states in parts of the grid that are outside the region of interest.
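To illustrate how a sparse implementation can be mathematically identical to a regular convolution while skipping multiplications by zero, consider the following minimal sketch. It is not the im2col-based implementation of [4] or the voting algorithm used in [3]; it is a simplified 2D, single-channel example in plain Python, and all function names (`dense_conv2d`, `sparse_conv2d`, `to_dense`) are hypothetical. Active sites are assumed to be stored in a dictionary that maps coordinates to values.

```python
from itertools import product

OFFSETS = list(product((-1, 0, 1), repeat=2))  # 3x3 neighbourhood


def to_dense(active, shape):
    """Expand a {(y, x): value} dictionary into a dense list-of-lists grid."""
    h, w = shape
    grid = [[0.0] * w for _ in range(h)]
    for (y, x), value in active.items():
        grid[y][x] = value
    return grid


def dense_conv2d(grid, kernel):
    """Zero-padded 3x3 cross-correlation that visits every grid site."""
    h, w = len(grid), len(grid[0])
    out = [[0.0] * w for _ in range(h)]
    for y, x in product(range(h), range(w)):
        for dy, dx in OFFSETS:
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w:
                out[y][x] += kernel[dy + 1][dx + 1] * grid[yy][xx]
    return out


def sparse_conv2d(active, kernel, shape):
    """Same operation, but only active (stored) input sites are visited.

    Sites absent from `active` are treated as zero and never multiplied.
    """
    h, w = shape
    out = {}
    for (yy, xx), value in active.items():
        # Scatter this input to every output whose 3x3 window contains it.
        for dy, dx in OFFSETS:
            y, x = yy - dy, xx - dx
            if 0 <= y < h and 0 <= x < w:
                out[(y, x)] = out.get((y, x), 0.0) + kernel[dy + 1][dx + 1] * value
    return out


if __name__ == "__main__":
    shape = (6, 6)
    active = {(1, 1): 2.0, (1, 2): -1.0, (4, 3): 3.0}
    kernel = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
    dense_out = dense_conv2d(to_dense(active, shape), kernel)
    sparse_out = sparse_conv2d(active, kernel, shape)
    assert to_dense(sparse_out, shape) == dense_out
    print("sparse and dense outputs agree")
```

In this sketch the sparse version performs at most nine multiply-accumulates per active input site, whereas the dense version performs up to nine per grid site regardless of how many sites are occupied.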

One of the downsides of prior sparse implementations of convolutional networks is that they “dilate” the sparse data in every layer by applying “full” convolutions. In this work,
