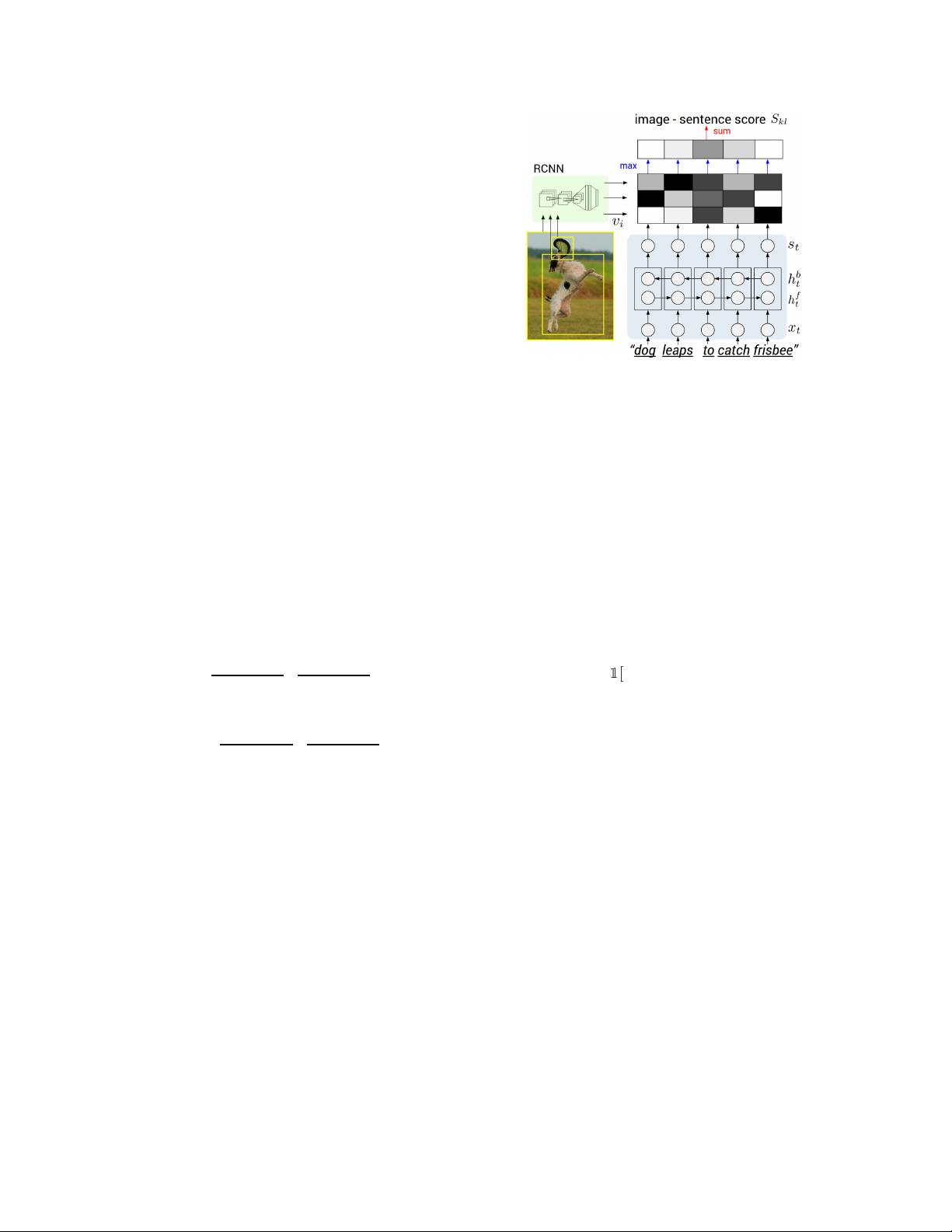

image-sentence score as a function of the individual region-word scores. Intuitively, a sentence-image pair should have a high matching score if its words have confident support in the image. The model of Karpathy et al. [24] interprets the dot product $v_i^T s_t$ between the $i$-th region and the $t$-th word as a measure of similarity and uses it to define the score between image $k$ and sentence $l$ as:

$$S_{kl} = \sum_{t \in g_l} \sum_{i \in g_k} \max(0, v_i^T s_t). \tag{7}$$

Here, $g_k$ is the set of image fragments in image $k$ and $g_l$ is the set of sentence fragments in sentence $l$. The indices $k, l$ range over the images and sentences in the training set.
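To make Eq. (7) concrete, here is a minimal pure-Python sketch. It assumes each region embedding $v_i$ and word embedding $s_t$ is already available as a plain list of floats (the networks that produce them are not modeled), and the helper names `dot` and `score_eq7` are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, w: Vector) -> float:
    """Inner product v_i^T s_t of two equal-length embedding vectors."""
    return sum(a * b for a, b in zip(u, w))


def score_eq7(regions: List[Vector], words: List[Vector]) -> float:
    """Eq. (7): sum over every word t and every region i of max(0, v_i^T s_t).

    `regions` holds the fragments g_k of image k, `words` the fragments g_l of sentence l.
    """
    return sum(max(0.0, dot(v, s)) for s in words for v in regions)


# Two 2-D regions and two words: the positive dot products are 2, 1 and 3,
# while the negative one (-1) is clipped to 0 by the hinge.
regions = [[1.0, 0.0], [0.0, 1.0]]
words = [[2.0, 1.0], [-1.0, 3.0]]
print(score_eq7(regions, words))  # 6.0
```

Every word contributes through every region with a positive dot product, so a single word can lend support to several regions at once.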

Together with their additional Multiple Instance Learning objective, this score carries the interpretation that a sentence fragment aligns to a subset of the image regions whenever the dot product is positive. We found that the following reformulation simplifies the model and alleviates the need for additional objectives and their hyperparameters:

$$S_{kl} = \sum_{t \in g_l} \max_{i \in g_k} v_i^T s_t. \tag{8}$$

Here, every word $s_t$ aligns to the single best image region. As we show in the experiments, this simplified model also leads to improvements in the final ranking performance.
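The simplified score of Eq. (8) replaces the inner sum over regions with a max. The sketch below again assumes embeddings given as plain float lists and uses hypothetical names; it also returns the index of the winning region for each word, which is the word-to-region alignment that Eq. (8) implies.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def dot(u: Vector, w: Vector) -> float:
    """Inner product v_i^T s_t."""
    return sum(a * b for a, b in zip(u, w))


def score_eq8(regions: List[Vector], words: List[Vector]) -> Tuple[float, List[int]]:
    """Eq. (8): each word s_t contributes only its best-matching region's score."""
    total = 0.0
    best_regions: List[int] = []
    for s in words:
        sims = [dot(v, s) for v in regions]                    # v_i^T s_t for all i in g_k
        i_best = max(range(len(sims)), key=sims.__getitem__)   # argmax over regions
        best_regions.append(i_best)
        total += sims[i_best]
    return total, best_regions


regions = [[1.0, 0.0], [0.0, 1.0]]
words = [[2.0, 1.0], [-1.0, 3.0]]
print(score_eq8(regions, words))  # (5.0, [0, 1])
```

Here word 0 aligns to region 0 (score 2) and word 1 to region 1 (score 3). Unlike Eq. (7), a word's contribution is not clipped at zero, so a word with no positive match still contributes its best (negative) similarity.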

Assuming that $k = l$ denotes a corresponding image and sentence pair, the final max-margin, structured loss remains:

$$C(\theta) = \sum_k \Bigg[ \underbrace{\sum_l \max(0, S_{kl} - S_{kk} + 1)}_{\text{rank images}} + \underbrace{\sum_l \max(0, S_{lk} - S_{kk} + 1)}_{\text{rank sentences}} \Bigg]. \tag{9}$$

This objective encourages aligned image-sentence pairs to have a higher score than misaligned pairs, by a margin.
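A minimal sketch of the loss in Eq. (9), assuming the scores are collected in a square matrix `S` with `S[k][l]` $= S_{kl}$ (image $k$, sentence $l$), so that the diagonal holds the corresponding pairs. The function name is hypothetical, and only the loss value is computed here, not its gradient.

```python
from typing import List


def structured_loss(S: List[List[float]]) -> float:
    """Eq. (9) for a square score matrix S, where S[k][l] is S_kl."""
    n = len(S)
    assert all(len(row) == n for row in S), "S must be square"
    total = 0.0
    for k in range(n):
        for l in range(n):
            # "rank images" term of Eq. (9): S_kl against the true pair S_kk
            total += max(0.0, S[k][l] - S[k][k] + 1.0)
            # "rank sentences" term of Eq. (9): S_lk against the true pair S_kk
            total += max(0.0, S[l][k] - S[k][k] + 1.0)
    # The l == k terms each contribute exactly 1: a constant 2n with zero gradient.
    return total


S = [[3.0, 1.0],
     [2.5, 2.0]]
print(structured_loss(S))  # 6.0 (of which 2n = 4.0 is the constant l == k part)
```

The remaining 2.0 comes entirely from $S_{10} = 2.5$, which is within the margin of the true pair $S_{00} = 3.0$ (contributing 0.5) and exceeds the true pair $S_{11} = 2.0$ (contributing 1.5); all other off-diagonal entries are already separated by more than the margin.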

Figure 3. Diagram for evaluating the image-sentence score $S_{kl}$. Object regions are embedded with a CNN (left). Words (enriched by their context) are embedded in the same multimodal space with a BRNN (right). Pairwise similarities are computed with inner products (magnitudes shown in grayscale) and finally reduced to the image-sentence score with Equation 8.

3.1.4 Decoding text segment alignments to images

Consider an image from the training set and its corresponding sentence. We can interpret the quantity $v_i^T s_t$ as the unnormalized log probability of the $t$-th word describing any of the bounding boxes in the image. However, since we are ultimately interested in generating snippets of text instead of single words, we would like to align extended, contiguous sequences of words to a single bounding box. Note that the naïve solution that assigns each word independently to the highest-scoring region is insufficient because it leads to words getting scattered inconsistently to different regions. To address this issue, we treat the true alignments as latent variables in a Markov Random Field (MRF) where the binary interactions between neighboring words encourage an alignment to the same region. Concretely, given a sentence with $N$ words and an image with $M$ bounding boxes, we introduce the latent alignment variables $a_j \in \{1, \dots, M\}$ for $j = 1, \dots, N$ and formulate an MRF in a chain structure along the sentence as follows:

$$E(a) = \sum_{j=1 \dots N} \psi^U_j(a_j) + \sum_{j=1 \dots N-1} \psi^B_j(a_j, a_{j+1}) \tag{10}$$

$$\psi^U_j(a_j = i) = v_i^T s_j \tag{11}$$

$$\psi^B_j(a_j, a_{j+1}) = \beta \, \mathbb{1}[a_j = a_{j+1}]. \tag{12}$$

Here, $\beta$ is a hyperparameter that controls the affinity towards longer word phrases. This parameter allows us to interpolate between single-word alignments ($\beta = 0$) and aligning the entire sentence to a single, maximally scoring region when $\beta$ is large. Since both potentials reward good alignments, we find the best alignments $a$ by maximizing $E(a)$ (equivalently, minimizing the energy $-E(a)$) using dynamic programming. The output of this process is a set of image regions annotated with segments of text. We now describe an approach for generating novel phrases based on these correspondences.
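The chain-structured decoding of Eqs. (10)-(12) can be sketched with Viterbi-style dynamic programming. This is a minimal sketch under stated assumptions: the unary scores are precomputed as `unary[j][i]` $= v_i^T s_j$ (word $j$, region $i$), indices are 0-based, and decoding is read as maximizing $E(a)$ because both potentials reward good alignments. The name `align_chain` is hypothetical, and the $O(NM^2)$ inner loop is kept for clarity rather than speed.

```python
from typing import List, Tuple


def align_chain(unary: List[List[float]], beta: float) -> Tuple[List[int], float]:
    """Maximize E(a) = sum_j unary[j][a_j] + beta * sum_j [a_j == a_{j+1}].

    unary[j][i] is the score of aligning word j to region i (assumed v_i^T s_j).
    Returns the best alignment (one region index per word) and its value of E.
    """
    n = len(unary)
    if n == 0:
        return [], 0.0
    m = len(unary[0])
    best = list(unary[0])        # best[i]: best prefix score with the current word on region i
    back: List[List[int]] = []   # back[j-1][i]: best region for word j-1 given word j on region i
    for j in range(1, n):
        new_best: List[float] = []
        ptr: List[int] = []
        for i in range(m):
            # Score of each predecessor region q, plus the pairwise bonus beta if q == i.
            cand = [best[q] + (beta if q == i else 0.0) for q in range(m)]
            p = max(range(m), key=cand.__getitem__)
            new_best.append(cand[p] + unary[j][i])
            ptr.append(p)
        best = new_best
        back.append(ptr)
    last = max(range(m), key=best.__getitem__)
    score = best[last]
    path = [last]
    for ptr in reversed(back):   # follow back-pointers from the last word to the first
        path.append(ptr[path[-1]])
    path.reverse()
    return path, score


unary = [[2.0, 0.0],   # word 0 prefers region 0
         [0.0, 0.5],   # word 1 mildly prefers region 1
         [1.0, 0.0]]   # word 2 prefers region 0
print(align_chain(unary, beta=0.0))  # ([0, 1, 0], 3.5)
print(align_chain(unary, beta=1.0))  # ([0, 0, 0], 5.0)
```

With $\beta = 0$ the decoder reduces to the naïve per-word argmax and scatters word 1 onto region 1; with $\beta = 1$ the pairwise bonus outweighs word 1's 0.5 preference and the whole phrase is assigned to region 0, illustrating the interpolation that $\beta$ controls.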

3.2. Multimodal Recurrent Neural Network for generating descriptions

In this section we assume an input set of images and their textual descriptions. These could be full images and their sentence descriptions, or regions and text snippets, as inferred in the previous section. The key challenge is in the design of a model that can predict a variable-sized sequence of outputs given an image. In previously developed language models based on Recurrent Neural Networks (RNNs) [40, 50, 10], this is achieved by defining a probability distribution of the next word in a sequence given the current word and context from previous time steps. We explore a simple