SATTLER ET AL. – ROBUST AND COMMUNICATION-EFFICIENT FEDERATED LEARNING FROM NON-IID DATA 4

0 20000 40000

Iterations

0.2

0.4

0.6

0.8

Accuracy

VGG11* @ CIFAR

IID Data

FedAvg

n=100

no comp.

signSGD

sparse top-k

p=0.01

0 20000 40000

Iterations

0.2

0.4

0.6

0.8

VGG11* @ CIFAR

NON-IID Data (2)

0 20000 40000

Iterations

0.2

0.4

0.6

0.8

VGG11* @ CIFAR

NON-IID Data (1)

0 5000 10000

Iterations

0.6

0.7

0.8

0.9

Accuracy

Logistic @ MNIST

IID Data

0 5000 10000

Iterations

0.6

0.7

0.8

0.9

Logistic @ MNIST

NON-IID Data (2)

0 5000 10000

Iterations

0.6

0.7

0.8

0.9

Logistic @ MNIST

NON-IID Data (1)

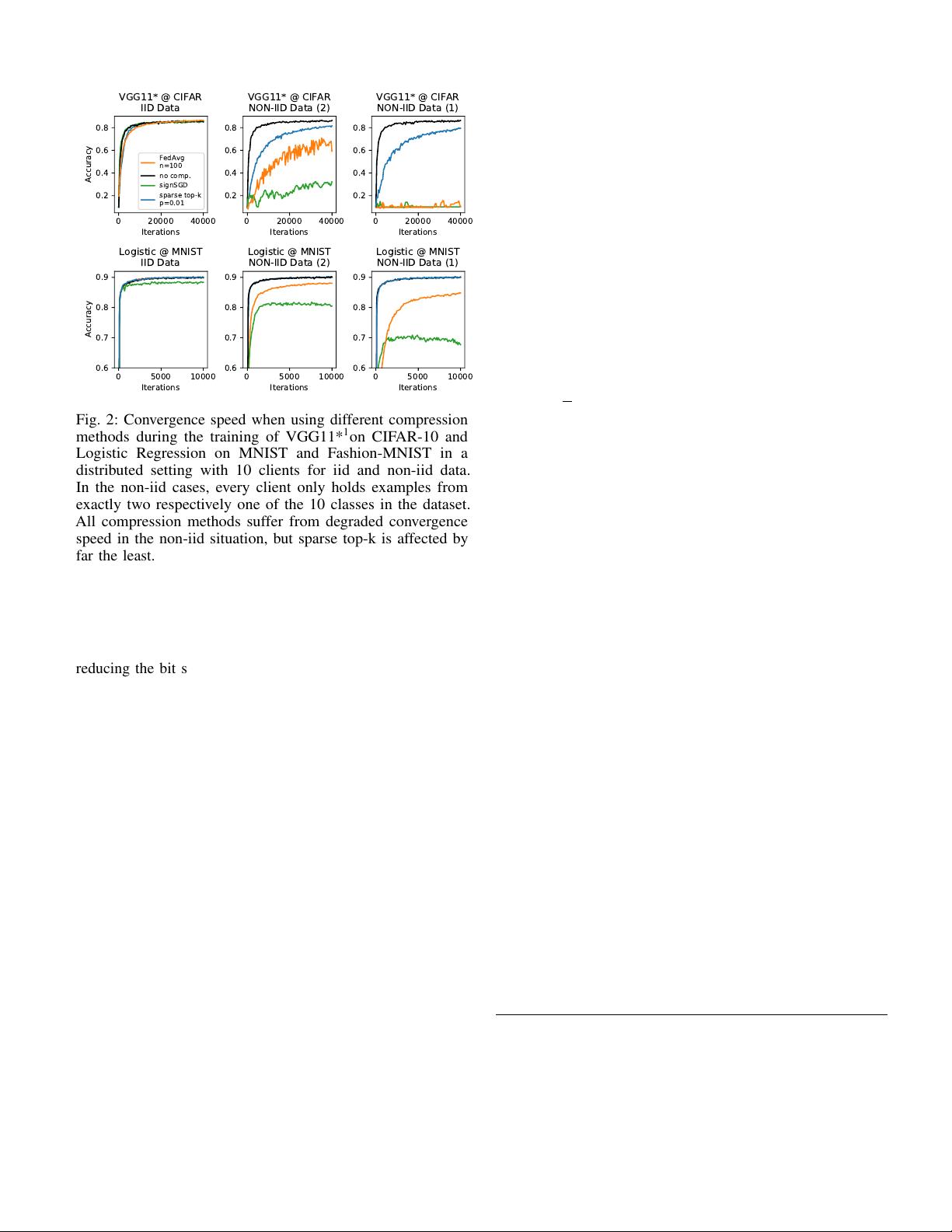

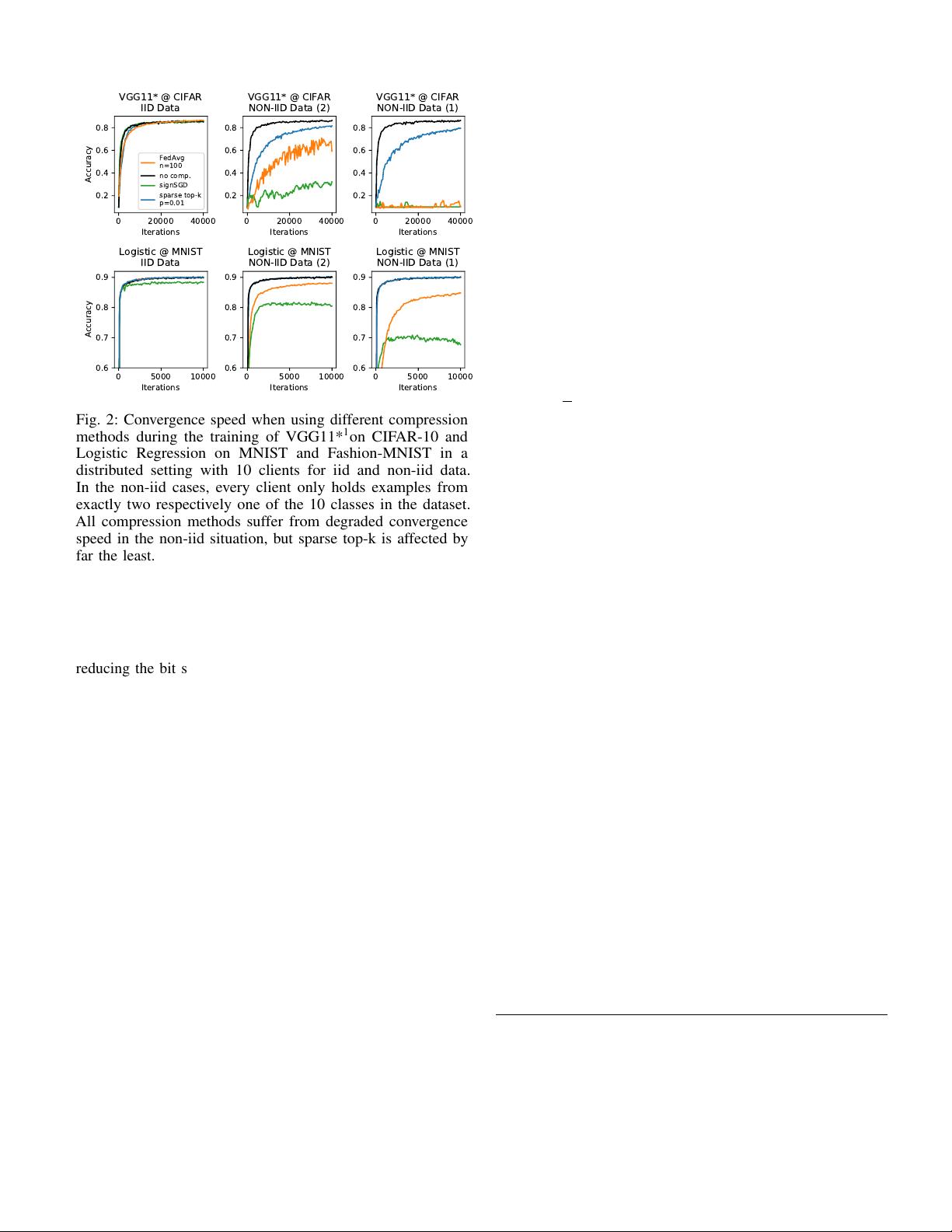

Fig. 2: Convergence speed when using different compression

methods during the training of VGG11*

1

on CIFAR-10 and

Logistic Regression on MNIST and Fashion-MNIST in a

distributed setting with 10 clients for iid and non-iid data.

In the non-iid cases, every client only holds examples from

exactly two respectively one of the 10 classes in the dataset.

All compression methods suffer from degraded convergence

speed in the non-iid situation, but sparse top-k is affected by

far the least.

reducing the bit size per update by a factor of

×32

. signSGD

also incorporates download compression by aggregating the

binary updates from all clients by means of a majority vote.

Other authors propose to stochastically quantize the gradients

during upload in an unbiased way (TernGrad [

19

], QSGD

[

20

], ATOMO [

21

]). These methods are theoretically appealing,

as they inherit the convergence properties of regular SGD

under relatively mild assumptions. However their empirical

performance and compression rates do not match those of

sparsification methods.

Out of all the above listed methods, only Federated Averaging

and signSGD compress both the upstream and downstream

communication. All other methods are of limited utility in the

Federated Learning setting defined in Section II as they leave

the communication from the server to the clients uncompressed.

Notation:

In the following calligraphic

W

will refer to the

entirety of parameters of a neural network, while regular

uppercase

W

refers to one specific tensor of parameters within

W

and lowercase

w

refers to one single scalar parameter of

the network. Arithmetic operations between neural network

parameters are to be understood element-wise.

IV. LIMITATIONS OF EXISTING COMPRESSION METHODS

The related work on efficient distributed deep learning almost

exclusively considers iid data distributions among the clients,

i.e. they assume unbiasedness of the local gradients with respect

to the full-batch gradient according to

E

x∼p

i

[∇

W

l(x, W)] = ∇

W

R(W) ∀i = 1, .., n (2)

where

p

i

is the distribution of data on the

i

-th client and

R(W)

is the empirical risk function over the combined training data.

While this assumption is reasonable for parallel training

where the distribution of data among the clients is chosen by the

practitioner, it is typically not valid in the Federated Learning

setting where we can generally only hope for unbiasedness in

the mean

1

n

n

X

i=1

E

x

i

∼p

i

[∇

W

l(x

i

, W)] = ∇

W

R(W) (3)

while the individual client’s gradients will be biased towards

the local dataset according to

E

x∼p

i

[∇

W

l(x, W)] = ∇

W

R

i

(W) 6= ∇

W

R(W) ∀i = 1, .., n.

(4)

As it violates assumption

(2)

, a non-iid distribution of

the local data renders existing convergence guarantees as

formulated in [

19

][

20

][

29

][

21

] inapplicable and has dramatic

effects on the practical performance of communication-efficient

distributed training algorithms as we will demonstrate in the

following experiments.

A. Preliminary Experiments

We run preliminary experiments with a simplified version of

the well-studied 11-layer VGG11 network [

28

], which we train

on the CIFAR-10 [

30

] dataset in a Federated Learning setup

using 10 clients. For the iid setting we split the training data

randomly into equally sized shards and assign one shard to

every one of the clients. For the "non-iid (

m

)" setting we assign

every client samples from exactly

m

classes of the dataset. The

data splits are non-overlapping and balanced such that every

client ends up with the same number of data points. The detailed

procedure that generates the split of data is described in Section

B of the appendix. We also perform experiments with a simple

logistic regression classifier, which we train on the MNIST

dataset [

31

] under the same setup of the Federated Learning

environment. Both models are trained using momentum SGD.

To make the results comparable, all compression methods use

the same learning rate and batch size.

1

We denote by VGG11* a simplified version of the original VGG11

architecture described in [

28

], where all dropout and batch normalization

layers are removed and the number of convolutional filters and size of all

fully-connected layers is reduced by a factor of 2.