IEICE TRANS. INF. & SYST., VOL.E94–D, NO.10 OCTOBER 2011

1

PAPER

Sp ecial Section on Information-Based Induction Sciences and Machine Learning

A Short Introduction to Learning to Rank

Hang LI

†

, Nonmember

SUMMARY Learning to rank refers to machine learning

techniques for training the model in a ranking task. Learning

to rank is useful for many applications in Information Retrieval,

Natural Language Processing, and Data Mining. Intensive stud-

ies have been conducted on the problem and significant progress

has been made [1], [2]. This short paper gives an introduction

to learning to rank, and it specifically explains the fundamen-

tal problems, existing approaches, and future work of learning to

rank. Several learning to rank methods using SVM techniques

are described in details.

key words: Learning to rank, information retrieval, natural

language processing, SVM

1. Ranking Problem

Learning to rank can be employed in a wide variety

of applications in Information Retrieval (IR), Natural

Language Processing (NLP), and Data Mining (DM).

Typical applications are document retrieval, expert

search, definition search, collaborative filtering, ques-

tion answering, keyphrase extraction, document sum-

marization, and machine translation [2]. Without loss

of generality, we take document retrieval as example in

this article.

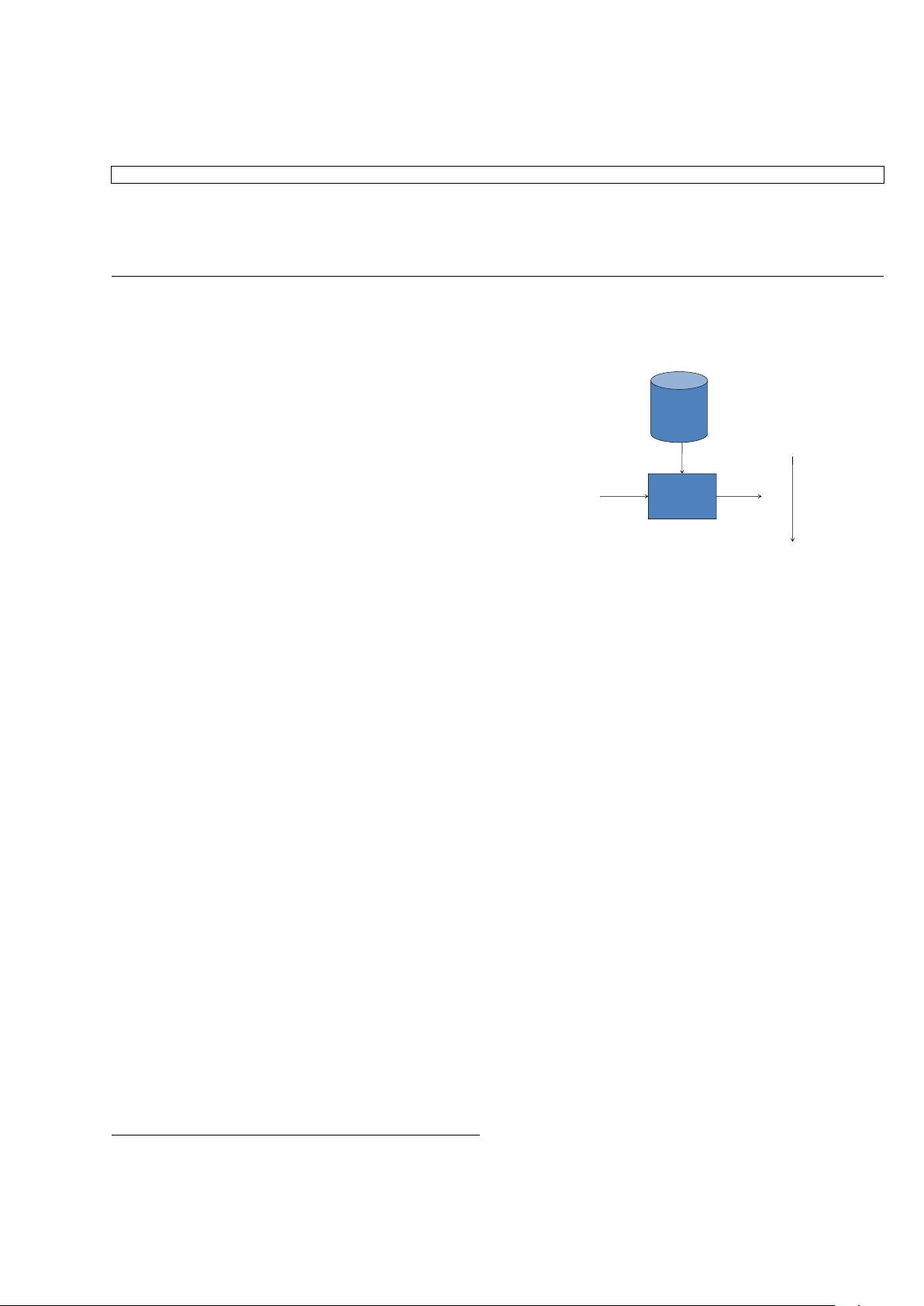

Document retrieval is a task as follows (Fig. 1).

The system maintains a collection of documents. Given

a query, the system retrieves documents containing the

query words from the collection, ranks the documents,

and returns the top ranked documents. The ranking

task is performed by using a ranking model f (q, d ) to

sort the documents, where q denotes a query and d

denotes a document.

Traditionally, the ranking model f(q, d) is created

without training. In the BM25 model, for example, it

is assumed that f(q, d) is represented by a conditional

probability distribution P (r|q, d) where r takes on 1 or

0 as value and denotes being relevant or irreverent, and

q and d denote a query and a document respectively. In

Language Model for IR (LMIR), f (q, d) is represented

as a conditional probability distribution P (q|d). The

probability models can be calculated with the words

appearing in the query and do cument, and thus no

training is needed (only tuning of a small number of

parameters is necessary) [3].

Manuscript received December 31, 2010.

Manuscript revised June 1, 2011.

†

The author is with Microsoft Research Asia

DOI: 10.1587/transinf.E94.D.1

ranking based on

relevance

Retrieval

System

q

nq

q

q

d

d

d

,

2,

1,

M

query

ranking of documents

Fig. 1 Document Retrieval

A new trend has recently arisen in document re-

trieval, particularly in web search, that is, to employ

machine learning techniques to automatically construct

the ranking model f (q, d). This is motivated by a num-

ber of facts. At web search, there are many signals

which can represent relevance, for example, the anchor

texts and PageRank score of a web page. Incorporating

such information into the ranking model and automat-

ically constructing the ranking model using machine

learning techniques becomes a natural choice. In web

search engines, a large amount of search log data, such

as click through data, is accumulated. This makes it

possible to derive training data from search log data

and automatically create the ranking model. In fact,

learning to rank has become one of the key technolo-

gies for modern web search.

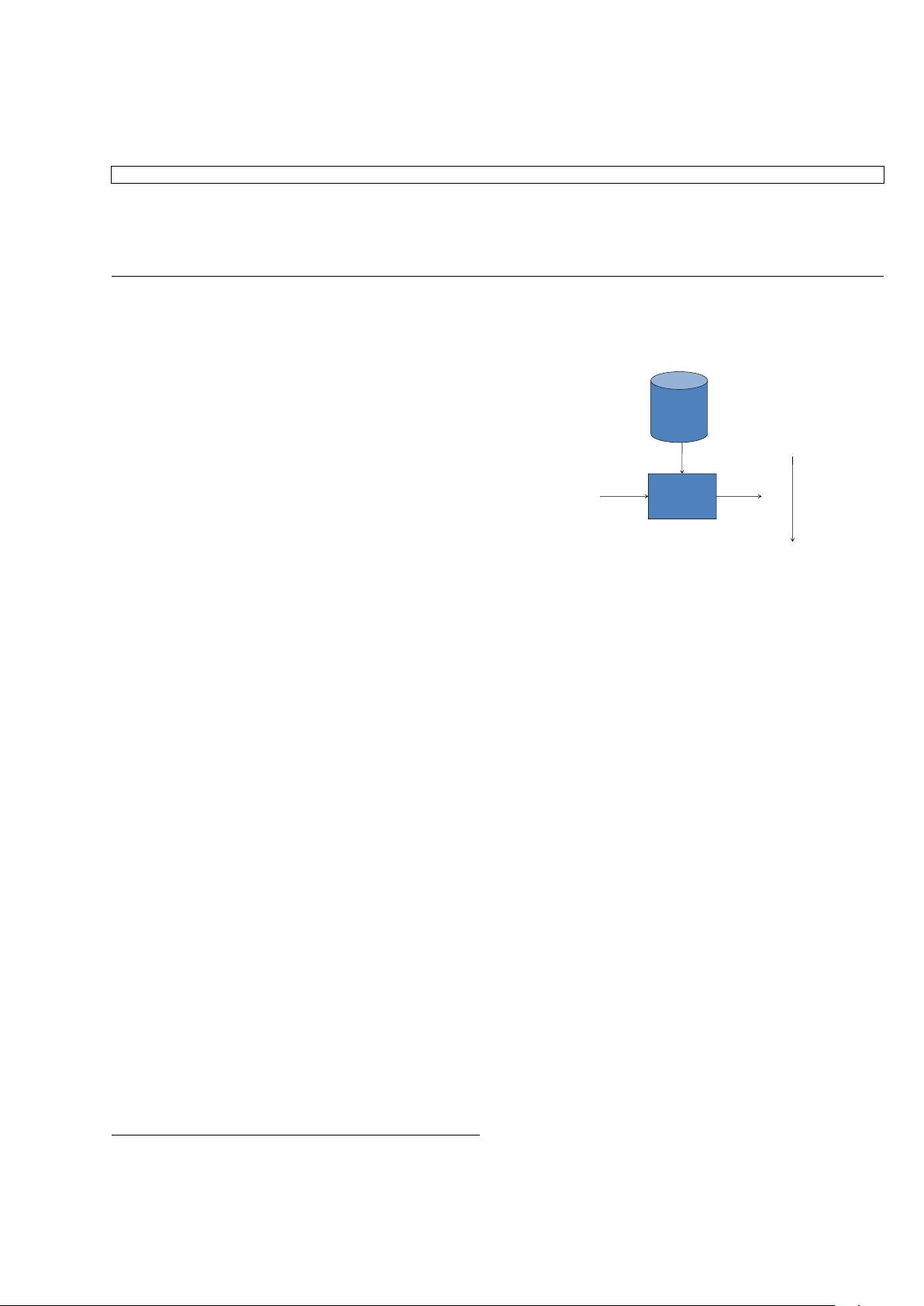

We describe a number of issues in learning for rank-

ing, including training and testing, data labeling, fea-

ture construction, evaluation, and relations with ordi-

nal classification.

1.1 Training and Testing

Learning to rank is a supervised learning task and thus

has training and testing phases (see Fig. 2).

The training data consists of queries and docu-

ments. Each query is associated with a number of docu-

ments. The relevance of the documents with respect to

the query is also given. The relevance information can

be represented in several ways. Here, we take the most

widely used approach and assume that the relevance of

a document with respect to a query is represented by

Copyright

c

2011 The Institute of Electronics, Information and Communication Engineers