Chapter 1 ■ The World of Big Data and IoT

From there, as architectures grew and changed, more advanced data management systems became necessary, along with some level of advanced analytics. For example, a popular scenario was studying customers and what they were buying. The analytics allowed businesses to provide purchase recommendations and pursue other business opportunities.

This scenario, along with more detailed data insight requirements, also brought about more powerful databases that added more analytics processing, dealing with cubes, dimensions, and fact tables for OLAP analysis. Modern databases provided greater power and additional capabilities for accessing information through query languages such as SQL. This also abstracted the complexity of the underlying storage mechanism, making it easy to work with relational data and the volume of data it supported.
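To make the idea of fact tables, dimensions, and SQL as an abstraction layer concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table names (`fact_sales`, `dim_product`), the columns, and the sample rows are hypothetical and exist only for illustration; a real OLAP system such as SQL Server Analysis Services works at a very different scale and with richer cube features.

```python
import sqlite3

# In-memory database; the schema below is a hypothetical, tiny star schema.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (
    product_id INTEGER PRIMARY KEY,
    category   TEXT NOT NULL
);
CREATE TABLE fact_sales (
    sale_id    INTEGER PRIMARY KEY,
    product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
    amount     REAL NOT NULL
);
INSERT INTO dim_product (product_id, category) VALUES (1, 'books'), (2, 'games');
INSERT INTO fact_sales (product_id, amount) VALUES (1, 10.0), (1, 15.0), (2, 40.0);
""")


def sales_by_category(connection):
    """Aggregate the fact table along the product dimension."""
    rows = connection.execute("""
        SELECT d.category, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_id = f.product_id
        GROUP BY d.category
        ORDER BY d.category
    """).fetchall()
    return dict(rows)


totals = sales_by_category(conn)
print(totals)
```

The query states what is wanted (totals per category) without saying anything about how or where the rows are stored, which is the abstraction the paragraph above describes.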

But there quickly came a point when we needed more than what enterprise-level relational database systems provided. Exponential data growth was a reality, and we needed deeper and more distinct analysis of historical, current, and future data. This was critical to business success. The need to look at and analyze each individual record was right in front of us, but the infrastructure and data management systems of the time didn’t and couldn’t scale. It was difficult to take those systems and that infrastructure and apply them to the deeper analysis of individual transactions.

This isn’t to say that the current architecture and systems are going away. There is and will be a need for powerful relational database and business intelligence systems like SQL Server and SQL Server Analysis Services. But as the amount of data grew, along with the need to get deeper insights into that data, it was hard to take the same processing, architecture, and analysis model and scale it to meet the requirements of storing and processing very granular transactions.

The strain on the data architecture, along with the different types of data, challenged us to come up with more efficient and scalable forms of storing and processing information. Our data wasn’t just relational anymore. It was coming in the form of social media, sensor and device information, weblogs, and more. About this time we saw the term “NoSQL” being tossed around. Many thought that this meant that relational was dead. But in reality the term means “not only SQL,” meaning that data comes in all forms and types: relational, non-relational, and semi-relational.

Thus, in essence, we can think about big data as representing the vast amounts and different types of data that organizations continue to work with, and try to gain insights into, on a daily basis. One attribute of big data is that it can be generated at a very rapid rate, sometimes called a “fire hose” rate. The data is valuable, but it was previously impractical to store or analyze because of the cost of appropriate methods.

Additionally, when we talk about solutions to big data, it’s not just about the data itself; it also includes the systems and processes to uncover and gain insights hidden inside all that wonderful, sweet data. Today’s big data solutions are composed of a set of technologies and processes that allow you to efficiently store and analyze the data to gain the desired insights.

The Three Vs of Big Data

Companies begin to look at big data solutions when their traditional database systems reach their limits of performance, scale, and cost. In addition to helping overcome those limitations, big data solutions provide a more effective way to examine and mine data, yielding insight that isn’t possible with traditional database systems. Big data is therefore typically described and defined in terms of the three Vs: volume, variety, and velocity.

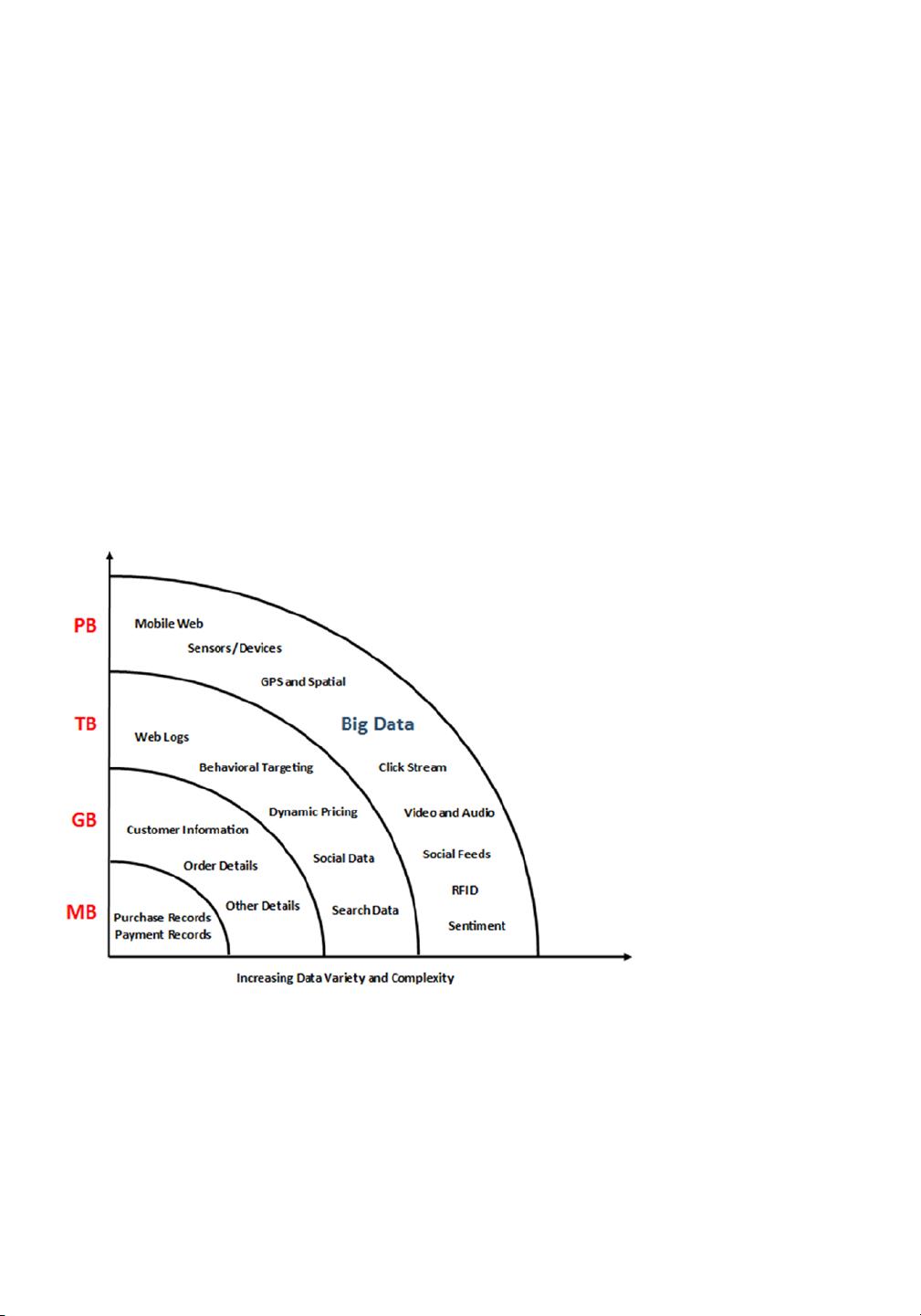

Volume

Volume refers to the scale of data. Where relational database systems work in the high terabyte range, big data solutions store and process hundreds or thousands of terabytes, petabytes, and even exabytes, with the total volume growing exponentially. A big data solution must have the storage to handle and manage this volume of data and be designed to scale and work efficiently across multiple machines in a distributed environment. Organizations today need to handle mass quantities of data each day.
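One common way to spread a large volume of records across multiple machines is hash partitioning. The sketch below is only an illustration of that general idea, not a description of any particular big data product: the function names (`node_for`, `partition`), the four-node cluster, and the `device-N` record keys are all assumptions made for the example.

```python
import hashlib
from collections import defaultdict


def node_for(key: str, node_count: int) -> int:
    """Map a record key to a node index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer.
    return int.from_bytes(digest[:8], "big") % node_count


def partition(records, node_count):
    """Group (key, value) records by the node that would store them."""
    shards = defaultdict(list)
    for key, value in records:
        shards[node_for(key, node_count)].append((key, value))
    return dict(shards)


# Hypothetical sensor readings keyed by device ID.
records = [(f"device-{i}", i * 1.5) for i in range(1000)]
shards = partition(records, node_count=4)

for node, items in sorted(shards.items()):
    print(f"node {node}: {len(items)} records")
```

Because the hash is deterministic, the same key always lands on the same node, so each machine stores and processes only its own share of the data. Note that with a plain modulo scheme, changing the number of nodes moves most keys; production systems usually use more elaborate schemes to limit that reshuffling.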