3 Our Model

Similar to extractive and abstractive models, the proposed event-driven model consists of two steps, namely candidate extraction and headline generation.

3.1 Candidate Extraction

We exploit events as the basic units for candidate extraction. Here an event is a tuple (S, P, O), where S is the subject, P is the predicate and O is the object. For example, for the sentence “Ukraine Delays Announcement of New Government”, the event is (Ukraine, Delays, Announcement). This type of event structure has been used in open information extraction (Fader et al., 2011) and has a range of NLP applications (Ding et al., 2014; Ng et al., 2014).

A sentence is a well-formed structure with complete syntactic information, but it can contain information that is redundant for text summarization, which makes sentences very sparse. Phrases can be used to avoid the sparsity problem, but because little syntactic information links one phrase to another, generating fluent headlines from them is difficult. Events can be regarded as a trade-off between sentences and phrases: they are meaningful structures without redundant components, they are less sparse than sentences, and they contain more syntactic information than phrases.

In our system, candidate event extraction is performed on a bipartite graph whose two types of nodes are lexical chains (Section 3.1.2) and events (Section 3.1.1). The Mutual Reinforcement Principle (Zha, 2002) is applied to jointly learn chain and event salience on the bipartite graph for a given input, and the top-k events by salience are kept as candidates.
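The joint ranking step can be illustrated with a minimal sketch of mutual reinforcement as power iteration. It assumes a chain-by-event weight matrix W in which W[i][j] is 1 when event j contains a word from lexical chain i and 0 otherwise; this weighting, the toy chains and events, and the helper names `mutual_reinforcement` and `top_k_events` are hypothetical, since the section does not specify how edges are weighted. A chain's salience is the weighted sum of the salience of its events, an event's salience is the weighted sum of the salience of its chains, and both vectors are renormalised after every update.

```python
import math


def mutual_reinforcement(weights, iterations=100, tol=1e-9):
    """Jointly score lexical chains (rows) and events (columns).

    weights[i][j] is the (hypothetical) edge weight between chain i and
    event j. Chain salience is updated as W v and event salience as W^T u;
    both vectors are L2-normalised after each update (power iteration).
    """
    n_chains, n_events = len(weights), len(weights[0])
    chain = [1.0] * n_chains
    event = [1.0] * n_events
    for _ in range(iterations):
        # Chains are salient if they link to salient events: u <- W v.
        new_chain = [sum(weights[i][j] * event[j] for j in range(n_events))
                     for i in range(n_chains)]
        norm = math.sqrt(sum(x * x for x in new_chain)) or 1.0
        new_chain = [x / norm for x in new_chain]
        # Events are salient if they link to salient chains: v <- W^T u.
        new_event = [sum(weights[i][j] * new_chain[i] for i in range(n_chains))
                     for j in range(n_events)]
        norm = math.sqrt(sum(x * x for x in new_event)) or 1.0
        new_event = [x / norm for x in new_event]
        delta = max(abs(a - b) for a, b in
                    zip(new_chain + new_event, chain + event))
        chain, event = new_chain, new_event
        if delta < tol:
            break
    return chain, event


def top_k_events(events, weights, k):
    """Return the k events with the highest salience."""
    _, salience = mutual_reinforcement(weights)
    ranked = sorted(zip(events, salience), key=lambda pair: pair[1], reverse=True)
    return [event for event, _ in ranked[:k]]


# Toy input: three events and three hypothetical chains
# ({Keenans}, {assets, damages}, {Ukraine}); W[i][j] = 1 if event j uses chain i.
events = [("Keenans", "demand", "assets"),
          ("Ukraine", "Delays", "Announcement"),
          ("court", "awards", "damages")]
W = [[1, 0, 0],
     [1, 0, 1],
     [0, 1, 0]]
print(top_k_events(events, W, 2))
# prints [('Keenans', 'demand', 'assets'), ('court', 'awards', 'damages')]
```

For a nonnegative W with a unique largest singular value, this iteration converges to the leading left and right singular vectors of W, so events that share chains with many other salient events rise to the top.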

3.1.1 Extracting Events

We apply an open-domain event extraction approach. Unlike traditional event extraction, in which event types and arguments are predefined, open event extraction does not assume a closed set of entities and relations (Fader et al., 2011). We follow Hu et al. (2013) to extract events.

Given a text, we first use the Stanford

dependency parser

1

to obtain the Stanford typed

dependency structures of the sentences (Marneffe

and Manning, 2008). Then we focus on

1

http://nlp.stanford.edu/software/lex-parser.shtml

DT NNPS MD VB DT NNP NNP POS NNS

the Keenans could demand the Aryan Nations ’ assets

nsubj

aux

dobj

det nn

poss

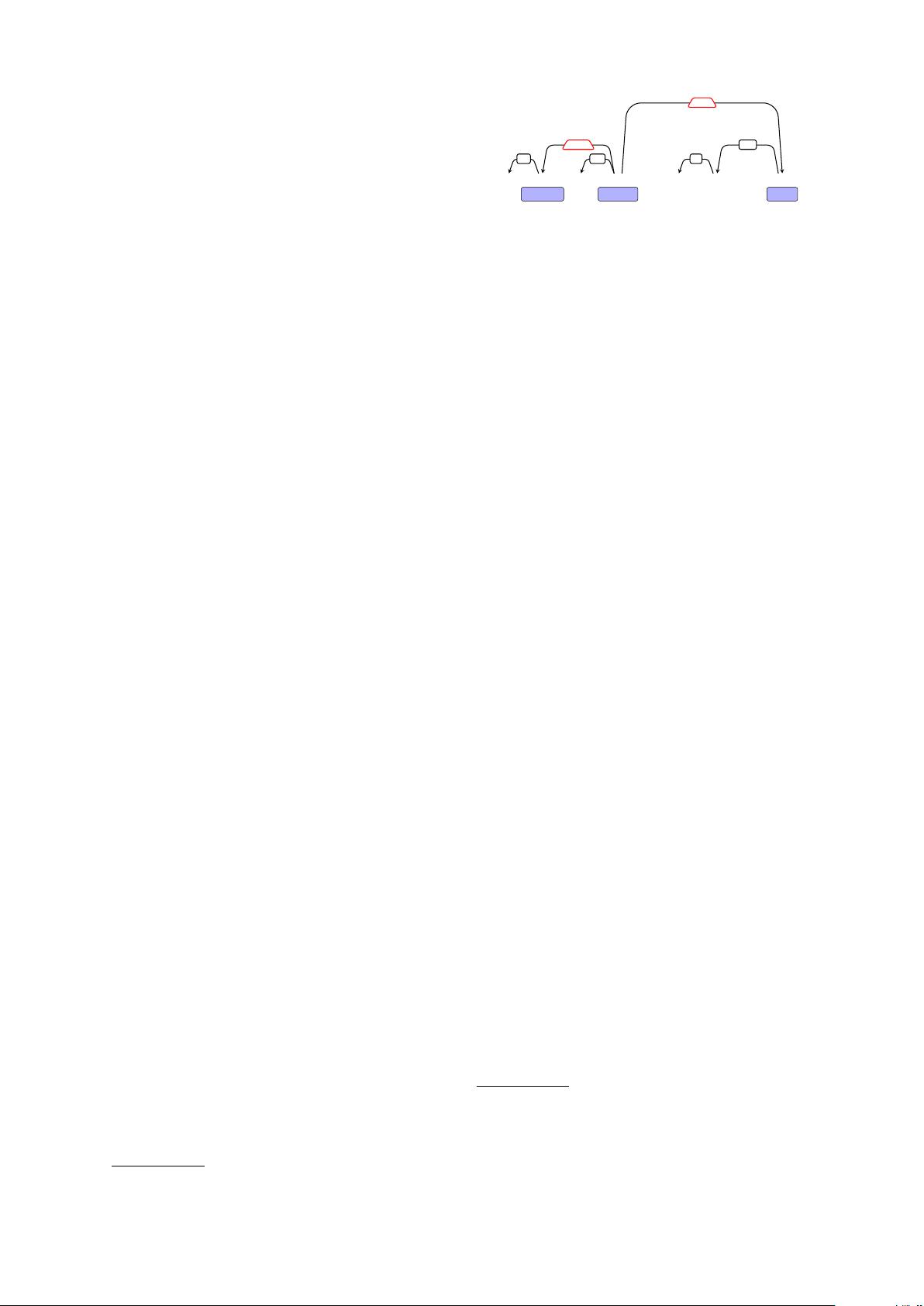

Figure 2: Dependency tree for the sentence

“the Keenans could demand the Aryan Nations’

assets”.

two relations, nsubj and dobj, for extracting

event arguments. Event arguments that have

the same predicate are merged into one event,

represented by tuple (Subject, Predicate, Object).

For example, given the sentence, “the Keenans

could demand the Aryan Nations’ assets”, Figure

2 present its partial parsing tree. Based

on the parsing results, two event arguments

are obtained: nsubj(demand, Keenans) and

dobj(demand, assets). The two event arguments

are merged into one event: (Keenans, demand,

assets).
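The merging of nsubj and dobj arguments can be sketched as follows, assuming the parser output has already been converted into (relation, governor, dependent) triples. The function name `extract_events` is hypothetical, as is the choice to keep an event whose subject or object is missing with `None` in that slot; the section does not say how such cases are handled. In real parser output the governor should be a unique token identifier (for example a word plus its position) so that two occurrences of the same verb are not merged.

```python
def extract_events(dependencies):
    """Merge nsubj/dobj arguments that share a predicate into (S, P, O) tuples.

    dependencies: iterable of (relation, governor, dependent) triples, e.g.
    ("nsubj", "demand", "Keenans") for nsubj(demand, Keenans).
    A missing subject or object is left as None (an assumption).
    """
    slots = {}  # predicate -> {"nsubj": subject, "dobj": object}
    for relation, governor, dependent in dependencies:
        if relation in ("nsubj", "dobj"):
            slots.setdefault(governor, {})[relation] = dependent
    # Dicts keep insertion order, so events follow the order of first mention.
    return [(args.get("nsubj"), predicate, args.get("dobj"))
            for predicate, args in slots.items()]


# A subset of the arcs in Figure 2; relations other than nsubj/dobj are ignored.
figure2_arcs = [
    ("nsubj", "demand", "Keenans"),
    ("aux", "demand", "could"),
    ("nn", "Nations", "Aryan"),
    ("dobj", "demand", "assets"),
]
print(extract_events(figure2_arcs))  # prints [('Keenans', 'demand', 'assets')]
```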

3.1.2 Extracting Lexical Chains

Lexical chains are used to link semantically related words and phrases (Morris and Hirst, 1991; Barzilay and Elhadad, 1997). A lexical chain is analogous to a semantic synset. Compared with individual words, lexical chains are less sparse for event ranking.

Given a text, we follow Boudin and Morin (2013) to construct lexical chains based on the following principles:

1. All words that are identical after stemming are treated as one word;
2. All NPs with the same head word fall into one lexical chain;²
3. A pronoun is added to the corresponding lexical chain if it refers to a word in the chain (coreference resolution is performed using the Stanford Coreference Resolution system³);
4. Lexical chains are merged if their main words are in the same synset of WordNet.⁴
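The four principles can be sketched as below. Everything beyond the principles themselves is an assumption: `crude_stem` is a hypothetical stand-in for a real stemmer, coreference links and WordNet synsets are passed in as precomputed inputs rather than obtained from the Stanford Coreference Resolution system or WordNet, each word is given a single synset id, and a chain's main word is taken to be the stem of its head words. Noun phrases are built from the nn and amod relations, as described in footnote 2. The helper names are hypothetical.

```python
def crude_stem(word):
    """Hypothetical stand-in for a real stemmer: lowercase, drop a plural -s."""
    word = word.lower()
    if len(word) > 3 and word.endswith("s") and not word.endswith("ss"):
        return word[:-1]
    return word


def extract_noun_phrases(dependencies, heads):
    """Attach nn/amod modifiers to their head nouns, e.g. nn(Nations, Aryan)
    gives ("Aryan Nations", "Nations"). Assumes modifiers precede the head."""
    modifiers = {head: [] for head in heads}
    for relation, governor, dependent in dependencies:
        if relation in ("nn", "amod") and governor in modifiers:
            modifiers[governor].append(dependent)
    return [(" ".join(modifiers[head] + [head]), head) for head in heads]


def build_lexical_chains(noun_phrases, coref=(), synset_of=None):
    """noun_phrases: (phrase, head word) pairs.
    coref: (pronoun, antecedent head word) pairs from a coreference system.
    synset_of: main word -> synset id (stand-in for a WordNet lookup).
    Returns {main word: [chain members]}."""
    synset_of = synset_of or {}
    chains = {}
    # Principles 1 and 2: NPs whose head words share a stem form one chain.
    for phrase, head in noun_phrases:
        chains.setdefault(crude_stem(head), []).append(phrase)
    # Principle 3: a pronoun joins the chain of the word it refers to.
    for pronoun, antecedent in coref:
        main = crude_stem(antecedent)
        if main in chains:
            chains[main].append(pronoun)
    # Principle 4: merge chains whose main words share a synset.
    merged, owner = {}, {}
    for main, members in chains.items():
        synset = synset_of.get(main)
        if synset is not None and synset in owner:
            merged[owner[synset]].extend(members)
        else:
            merged[main] = list(members)
            if synset is not None:
                owner[synset] = main
    return merged


nps = extract_noun_phrases(
    [("nn", "Nations", "Aryan"), ("amod", "automobile", "armored")],
    ["car", "cars", "automobile", "Nations"],
)
chains = build_lexical_chains(
    nps,
    coref=[("it", "automobile")],
    # Hard-coded here; in WordNet, "car" and "automobile" share the synset car.n.01.
    synset_of={"car": "car.n.01", "automobile": "car.n.01"},
)
print(chains)
# prints {'car': ['car', 'cars', 'armored automobile', 'it'], 'nation': ['Aryan Nations']}
```

Applying the principles in the listed order means a pronoun is attached before synset merging, so it follows its antecedent's chain into the merged chain.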

² NPs are extracted according to the dependency relations nn and amod. As shown in Figure 2, we can extract the noun phrase Aryan Nations according to the dependency relation nn(Nations, Aryan).

³ http://nlp.stanford.edu/software/dcoref.shtml

⁴ http://wordnet.princeton.edu/