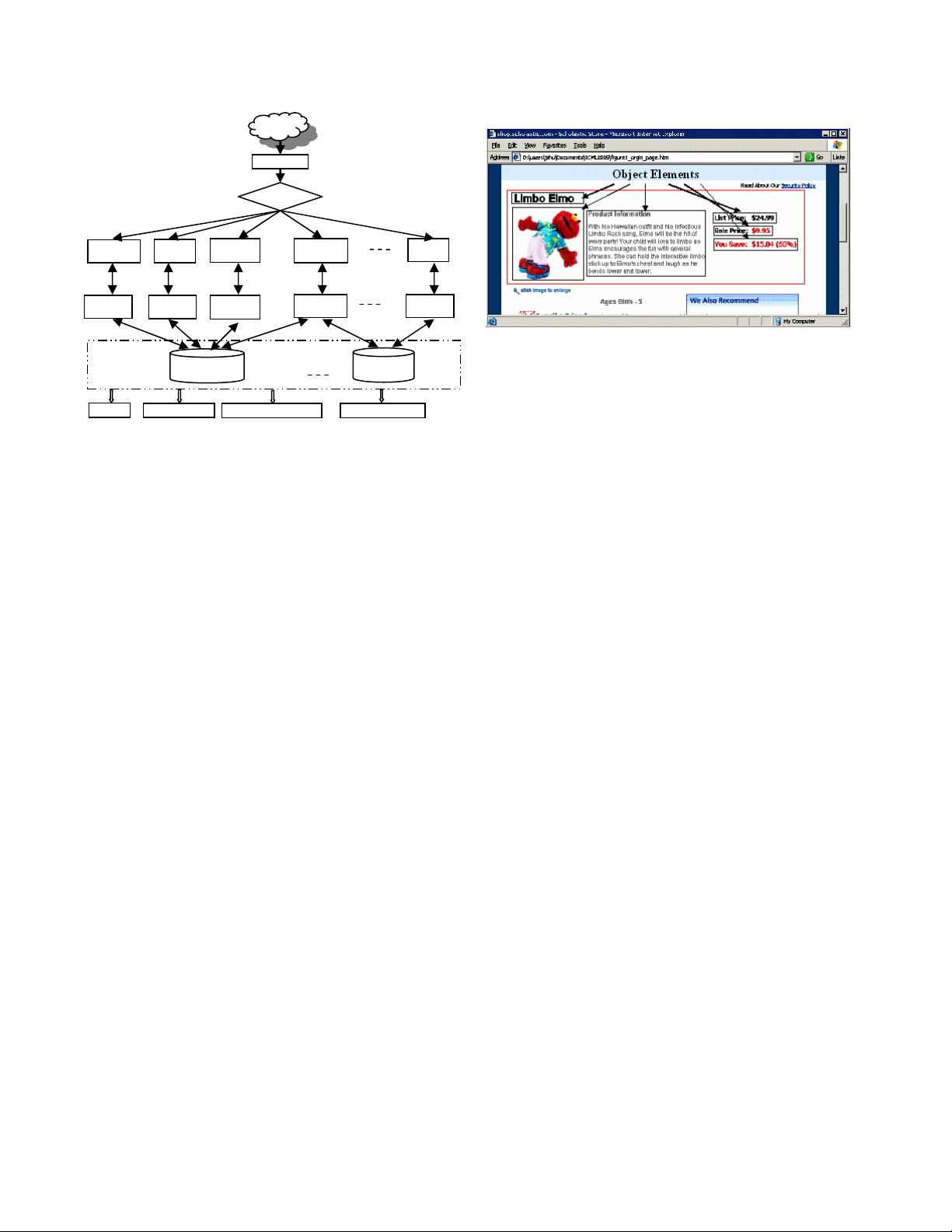

Figure 3. System Architecture

3.1 Crawler and Classifier

The tasks of the crawler and classifier are to automatically collect all relevant webpages/documents that contain object information for a specific vertical domain. The crawled webpages/documents will be passed to the corresponding object extractor for extracting the structured object information and building the object warehouse.

3.1.1 Insight

If we use nodes to denote objects and edges to denote the relationship links between them, the object information within a vertical domain forms an object relationship graph. For example, in Libra academic search, we have three types of nodes, representing papers, authors, and conferences/journals, and three types of edges (i.e., links) pointing to paper objects, representing three types of relationships: cited-by, authored-by, and published-by.

The ultimate goal of the crawler is to collect relevant webpages effectively and efficiently, and to build an object relationship graph that is as complete as possible, with as many nodes, edges, and attribute values for each node as possible (assuming we have perfect extractors and aggregators).
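The object relationship graph described above can be illustrated with a small data structure. This is a minimal sketch, not the Libra implementation: the class name `ObjectGraph`, its methods, and the node type label `venue` (standing in for conferences/journals) are hypothetical. Only the three node types and the three edge types pointing to paper objects come from the text.

```python
from dataclasses import dataclass, field

# Node and edge types named in the text; "venue" stands in for conferences/journals.
NODE_TYPES = {"paper", "author", "venue"}
EDGE_TYPES = {"cited-by", "authored-by", "published-by"}


@dataclass
class ObjectGraph:
    """Hypothetical container for a (partial) object relationship graph."""
    nodes: dict = field(default_factory=dict)   # node id -> {"type": str, "attrs": dict}
    edges: set = field(default_factory=set)     # (source id, edge type, paper id)

    def add_node(self, node_id, node_type, **attrs):
        if node_type not in NODE_TYPES:
            raise ValueError(f"unknown node type: {node_type}")
        node = self.nodes.setdefault(node_id, {"type": node_type, "attrs": {}})
        node["attrs"].update(attrs)

    def add_edge(self, source_id, edge_type, paper_id):
        if edge_type not in EDGE_TYPES:
            raise ValueError(f"unknown edge type: {edge_type}")
        # All three edge types point to paper objects.
        if self.nodes[paper_id]["type"] != "paper":
            raise ValueError("edges must point to a paper node")
        if source_id not in self.nodes:
            raise KeyError(source_id)
        self.edges.add((source_id, edge_type, paper_id))

    def size(self):
        """Return (number of nodes, number of edges, number of attribute values)."""
        n_attrs = sum(len(n["attrs"]) for n in self.nodes.values())
        return len(self.nodes), len(self.edges), n_attrs
```

Under these assumptions, the three counts returned by `size()` are the quantities the crawler tries to make as large as possible.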

3.1.2 Our Approach

We build a “focused” crawler that uses the page classifier and the existing partial object relationship graph to guide the crawling process. That is, in addition to the web graph used by most page-level crawlers, we employ an object relationship graph to guide our crawling algorithm.
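One way to picture such a crawler is a best-first crawl over a priority queue. The sketch below is an illustration under stated assumptions, not the crawling algorithm used in the system: `fetch`, `is_relevant`, and `graph_gain` are hypothetical callables supplied by the caller, and the choice to skip the links of pages the classifier rejects is an assumption made for simplicity.

```python
import heapq


def focused_crawl(seeds, fetch, is_relevant, graph_gain, max_pages=100):
    """Best-first crawl guided by a page classifier and an object-graph score.

    fetch(url)        -> (page_text, out_links)   # hypothetical page fetcher
    is_relevant(text) -> bool                     # the page classifier
    graph_gain(url)   -> float                    # assumed estimate of how much the
                                                  # URL would add to the partial graph
    """
    # heapq is a min-heap, so scores are negated to pop the best URL first.
    frontier = [(-graph_gain(url), url) for url in seeds]
    heapq.heapify(frontier)
    seen = set(seeds)
    collected = []

    while frontier and len(collected) < max_pages:
        _, url = heapq.heappop(frontier)
        text, links = fetch(url)
        if not is_relevant(text):
            continue  # assumption: links of rejected pages are not followed
        collected.append(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                heapq.heappush(frontier, (-graph_gain(link), link))
    return collected
```

In this sketch the web graph supplies the candidate links, while `graph_gain` plays the role of the object relationship graph in deciding which link to visit next.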

Since the classifier is coupled with the crawler, it needs to be very fast to ensure efficient crawling. Based on our experience in building classifiers for Libra and Windows Live Product Search, we found that we could always use some strong heuristics to quickly prune most irrelevant pages. For example, in our product page classifier, we can use price identifiers (such as the dollar sign, $) to efficiently prune most non-product pages. The average time of our product classifier is around 0.1 milliseconds, and its precision is around 0.8, with recall around 0.9.
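The price-identifier heuristic can be sketched as a cheap pre-filter that runs before any more expensive classification. This is a minimal sketch: the regular expression, the function names, and the `full_classifier` callback are assumptions for illustration, and the actual heuristics of the product classifier are not given in the text.

```python
import re

# Assumed pattern: a dollar sign followed (optionally after one space) by an amount.
PRICE_PATTERN = re.compile(r"\$\s?\d[\d,]*(?:\.\d{1,2})?")


def may_be_product_page(text: str) -> bool:
    """Cheap check: does the page contain anything that looks like a price?"""
    return PRICE_PATTERN.search(text) is not None


def classify_product_page(text: str, full_classifier) -> bool:
    """Prune pages without a price identifier, then defer to a slower classifier.

    full_classifier is a hypothetical callable (text -> bool).
    """
    if not may_be_product_page(text):
        return False  # pruned without running the slower classifier
    return full_classifier(text)
```

A pre-filter of this kind only removes pages; the pages that pass still need a classifier to decide whether a price-bearing page is really a product page.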

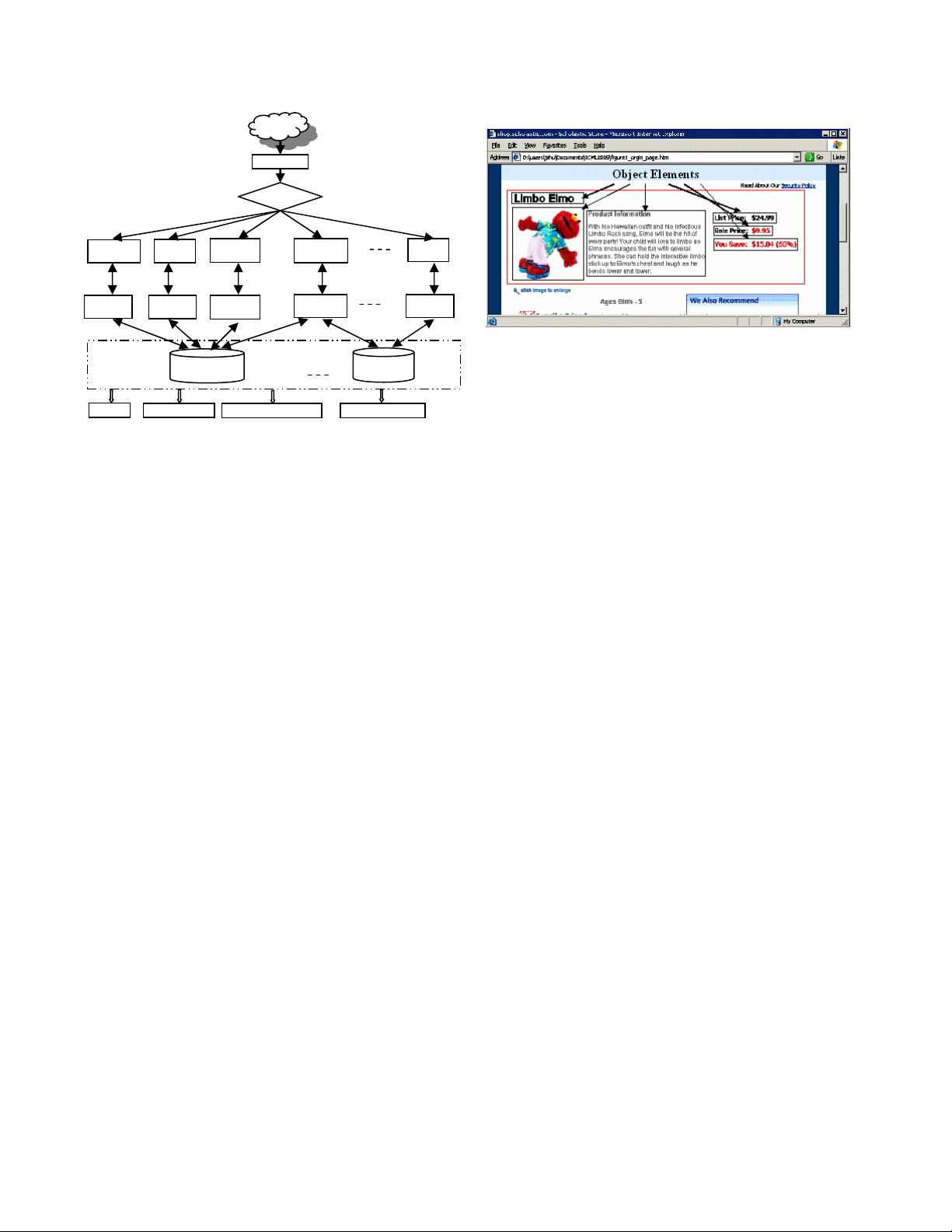

Figure 4. An Object Block with 6 Elements (contained in the red rectangle) in a Webpage.

3.2 Object Extractor

Information (e.g., attributes) about a web object is usually distributed across many web sources and within small segments of webpages. The task of an object extractor is to extract metadata about a given type of object from every webpage containing objects of this type. For example, for each crawled product webpage, we extract the name, image, price, and description of each product. If all of these product pages, or even just half of them, are correctly extracted, we will have a huge collection of metadata about real-world products that could be used for further knowledge discovery and query answering. Our statistical study of 51,000 randomly crawled webpages shows that about 12.6 percent are product pages. That is, there are about 1 billion product pages within a search index containing 9 billion crawled webpages.
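The estimate follows from simple arithmetic, assuming the 12.6 percent rate observed in the sample carries over to the whole index:

$$
0.126 \times 51{,}000 \approx 6{,}400 \ \text{product pages in the sample}, \qquad
0.126 \times 9 \times 10^{9} \approx 1.1 \times 10^{9} \ \text{product pages in the index}.
$$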

However, extracting product information from webpages generated by many (maybe tens of thousands of) different templates is non-trivial. One possible solution is to first distinguish webpages generated by different templates and then build an extractor for each template. We call this type of solution template-dependent. However, accurately identifying the webpages for each template is not a trivial task, because even webpages from the same website may be generated by dozens of templates. Even if we can distinguish the webpages, template-dependent methods are still impractical, because learning and maintaining so many different extractors for different templates requires substantial effort.

3.2.1 Insight

By empirically studying webpages about the same type of objects across websites, we find many template-independent features.

Information about an object in a webpage is generally grouped together as an object block, as shown in Figure 4. Using existing webpage segmentation [7] and data record extraction technologies [35], we can automatically detect these object blocks, which we further segment into atomic extraction entities called object elements. Each object element provides partial information about a single attribute of the web object.
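The relationship between object blocks, object elements, and attributes can be sketched as a small data model. The class names, the `attribute` field, and the `assemble` helper below are hypothetical, and the example values are made up for illustration. Block detection and segmentation themselves (the techniques of [7] and [35]) are not shown.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ObjectElement:
    """Atomic extraction entity: partial information about one attribute."""
    text: str
    attribute: Optional[str] = None  # e.g. "name" or "price"; None if not labeled


@dataclass
class ObjectBlock:
    """All elements that a webpage groups together for one object."""
    elements: List[ObjectElement]


def assemble(block: ObjectBlock) -> dict:
    """Merge labeled elements into one record, joining the parts of each attribute."""
    parts = {}
    for element in block.elements:
        if element.attribute is not None:
            parts.setdefault(element.attribute, []).append(element.text)
    return {attr: " ".join(texts) for attr, texts in parts.items()}


# Usage with made-up product data: two elements contribute to "description".
block = ObjectBlock([
    ObjectElement("Acme Camera X100", "name"),
    ObjectElement("$199.00", "price"),
    ObjectElement("10-megapixel sensor.", "description"),
    ObjectElement("Includes carrying case.", "description"),
    ObjectElement("Add to cart"),  # unlabeled element, ignored
])
record = assemble(block)
```

Because each element carries only partial information about a single attribute, several elements may need to be merged to recover one attribute value, which is what `assemble` illustrates.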

[Figure 3 component labels: Web; Crawler; Classifier; Extractors (Paper, Author, Conference, Product, Location); Aggregators (Paper, Author, Conference, Product, Location); Scientific Web Object Warehouse; Product Object Warehouse; Web Objects; PopRank; Object Relevance; Object Community Mining; Object Categorization]