Learning Features from Large-Scale, Noisy and Social Image-Tag Collection

Hanwang Zhang†, Xindi Shang†, Huanbo Luan†§, Yang Yang‡, Tat-Seng Chua†

†National University of Singapore
‡University of Electronic Science and Technology of China
§Tsinghua University

{hanwangzhang,xindi1992,luanhuanbo,dlyyang}@gmail.com;dcscts@nus.edu.sg

ABSTRACT

Feature representation for multimedia content is key to the progress of many fundamental multimedia tasks. Although recent advances in deep feature learning offer a promising route towards these tasks, their application is limited in domains where high-quality, large-scale training data are hard to obtain. In this paper, we propose a novel deep feature learning paradigm based on large, noisy and social image-tag collections, which can be acquired from the inexhaustible social multimedia content on the Web. Instead of learning features from high-quality image-label supervision, we propose to learn from image-word semantic relations by seeking a unified image-word embedding space in which pairwise feature similarities preserve the semantic relations of the original image-word pairs. We offer an easy-to-use implementation of the proposed paradigm, which is fast and can be integrated into any state-of-the-art deep architecture. Experiments on the NUS-WIDE benchmark demonstrate that the features learned by our method significantly outperform other state-of-the-art features.
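To make the idea of an image-word embedding space that preserves semantic relations more concrete, here is a minimal NumPy sketch under stated assumptions; it is not the method proposed in this paper. It assumes a linear projection `P` standing in for a deep image network, free word vectors `V`, a binary image-tag relation matrix `R`, and a pairwise logistic loss on dot-product similarities. All of these names, and the choice of loss, are hypothetical illustrations.

```python
import numpy as np


def pairwise_logistic_loss(U, V, R):
    """Mean logistic loss over all image-word pairs (hypothetical objective).

    U: (n, k) image embeddings; V: (m, k) word embeddings;
    R: (n, m) binary relations, 1 if the image carries the tag, else 0.
    """
    S = U @ V.T              # pairwise similarities in the shared space
    Y = 2.0 * R - 1.0        # map {0, 1} relations to {-1, +1} targets
    # log(1 + exp(-Y * S)), computed stably via logaddexp
    return float(np.mean(np.logaddexp(0.0, -Y * S)))


def train_embedding(X, R, k=8, lr=0.1, steps=300, seed=0):
    """Plain gradient descent on a linear image projection P and word vectors V.

    X: (n, d) image features; the linear map X @ P is a stand-in for a deep net.
    Returns P (d, k), V (m, k) and the loss recorded before each update.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    m = R.shape[1]
    P = 0.3 * rng.standard_normal((d, k))
    V = 0.3 * rng.standard_normal((m, k))
    Y = 2.0 * R - 1.0
    history = []
    for _ in range(steps):
        U = X @ P                         # project images into the shared space
        history.append(pairwise_logistic_loss(U, V, R))
        S = U @ V.T
        # dL/dS = -Y * sigmoid(-Y * S) / (n * m); sigmoid(-z) = exp(-logaddexp(0, z))
        G = -Y * np.exp(-np.logaddexp(0.0, Y * S)) / (n * m)
        grad_P = X.T @ (G @ V)            # chain rule through S = X P V^T
        grad_V = G.T @ U
        P -= lr * grad_P
        V -= lr * grad_V
    return P, V, history


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.standard_normal((20, 5))                         # 20 toy "images"
    R = (X @ rng.standard_normal((5, 6)) > 0).astype(float)  # 6 toy "tags"
    P, V, history = train_embedding(X, R)
    print(history[0], history[-1])
```

In a real system, the linear map `X @ P` would be replaced by a deep network trained end to end, and `R` would come from the noisy user tags; the logistic loss is only one simple choice of pairwise objective that rewards high similarity for related image-word pairs and low similarity for unrelated ones.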

Categories and Subject Descriptors

H.3.3 [Information Search and Retrieval]: Retrieval Model

Keywords

feature learning; visual-semantic embedding; multimodal analysis

1. INTRODUCTION

The progress in multimedia applications is largely due to

the advances of feature representations for multimedia con-

tent. For example, over the past decades, we have witnessed

the evolution of visual features from color histogram to SIFT

interest points and to the recent deep learning features, that

help to move a large varieties of multimedia applications

Permission to make digital or hard copies of all or part of this work for personal or

classroom use is granted without fee provided that copies are not made or distributed

for profit or commercial advantage and that copies bear this notice and the full cita-

tion on the first page. Copyrights for components of this work owned by others than

ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or re-

publish, to post on servers or to redistribute to lists, requires prior specific permission

and/or a fee. Request permissions from Permissions@acm.org.

MM’15, October 26–30, 2015, Brisbane, Australia.

c

2015 ACM. ISBN 978-1-4503-3459-4/15/10 ...$15.00.

DOI: http://dx.doi.org/10.1145/2733373.2806286.

ImageNet SBU

Desk

Chow

Cleartrip's office area near

my desk

A gorgeous butterfly stopped

by to check out dog park

progress on 8-6-08.

(a) Samples from ImageNet and SBU

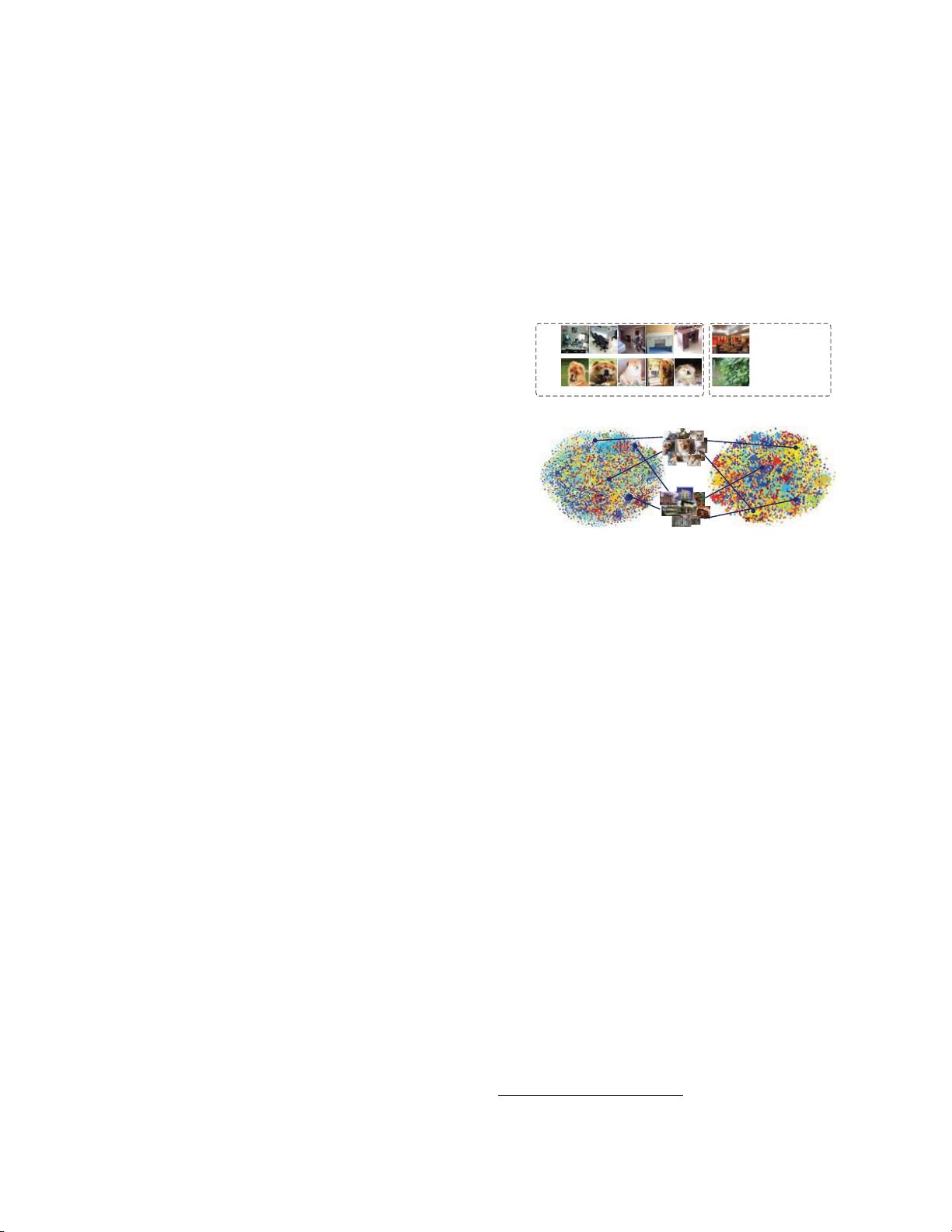

(b) Feature Visualization

Figure 1: t-SNE visualization for the features of a mil-

lion SBU images from Flickr. Different colors represent

different semantic categories. One of the distribution is

learned by deep learning a million images across 1,000

categories from high-quality labeled ImageNet and the

other is learned by a million images weakly-labeled by

over 30,000 unique tags by SBU (cf. Section 3 for de-

tails). Both sides show that the features are represen-

tative. Can you tell which side is learned by SBU? (see

answers below)

from academic prototypes into industrial products [14]. To-

day, a general consensus is that learning-based features by

deep neural networks can outperform most hand engineered

features and therefore free us to focus on designing algo-

rithms and end applications.

In order to learn strong features, we need a large-scale, high-quality dataset. In this respect, ImageNet, with millions of human-labeled images across thousands of semantic categories, has offered us a reliable incubator for developing features. However, this dataset was built from Web images five years ago, and hence it lags behind the fast-evolving semantic and visual diversity of real-world scenarios. For example, emerging vertical domains such as fashion (e.g., Taobao and Amazon) would need different datasets in order to learn specific features for the shoe and clothing domains. Moreover, videos from emerging popular social networks (e.g., Snapchat and Vine) would call for features different from those learned from images. Building such datasets requires not only heavy and tedious labeling efforts but also expert domain knowledge, both of which are expensive. This awkward situation

¹Left: ImageNet. Right: SBU.