
TABLE 2: A summary of attacks against server-based FL.

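| Attack Type | Attack Target: Model | Attack Target: Training Data | Attacker Role: Participant | Attacker Role: Server | FL Scenario: H2B | FL Scenario: H2C | Attack Complexity: Attack Iteration (One Round) | Attack Complexity: Attack Iteration (Multiple Rounds) | Attack Complexity: Auxiliary Knowledge Required |
|---|---|---|---|---|---|---|---|---|---|
| Data Poisoning | YES | NO | YES | NO | YES | YES | YES | YES | YES |
| Model Poisoning | YES | NO | YES | NO | YES | NO | YES | YES | YES |
| Infer Class Representatives | NO | YES | YES | YES | YES | NO | NO | YES | YES |
| Infer Membership | NO | YES | YES | YES | YES | NO | NO | YES | YES |
| Infer Properties | NO | YES | YES | YES | YES | NO | NO | YES | YES |
| Infer Training Inputs and Labels | NO | YES | NO | YES | YES | NO | YES | YES | NO |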

2.3 Training Phase vs. Inference Phase

Training Phase. Attacks conducted during the training phase attempt to learn, influence, or corrupt the FL model itself [51]. During the training phase, the attacker can run data poisoning attacks to compromise the integrity of the training dataset [26], [28], [52], [53], [54], or model poisoning attacks to compromise the integrity of the learning process [27], [55]. The attacker can also launch a range of inference attacks on an individual participant’s update or on the aggregated update from all participants.

Inference Phase. Attacks conducted during the inference phase are called evasion/exploratory attacks [56]. They generally do not alter the targeted model; instead, they either trick it into producing wrong predictions (targeted/untargeted) or collect evidence about the model characteristics. The effectiveness of such attacks is largely determined by the information about the model that is available to the adversary. Inference phase attacks can be classified into white-box attacks (i.e., with full access to the FL model) and black-box attacks (i.e., only able to query the FL model). In FL, the global model maintained by the server suffers from the same evasion attacks as in the conventional ML setting when the target model is deployed as a service. Moreover, the model broadcast step in FL exposes the global model as a white box to any malicious participant. Thus, FL requires extra effort to defend against white-box evasion attacks.
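To make the white-box risk concrete, the following is a minimal sketch of an evasion attack by a participant who has received the broadcast global model. It assumes a linear classifier, which this survey does not prescribe; the names `predict` and `white_box_evasion`, the weights, and the step size `epsilon` are all hypothetical. Because the attacker can read the weights, it knows the gradient of the score with respect to the input and can shift each feature in the direction that lowers the score.

```python
def predict(weights, bias, x):
    """Return 1 if the linear score is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def white_box_evasion(weights, x, epsilon):
    """Shift each feature by epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score w.r.t. x is simply
    `weights`, which a participant can read from the broadcast model.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical global model received in the broadcast step (assumption).
global_weights = [2.0, -1.0, 0.5]
global_bias = -0.5

x_clean = [1.0, 0.5, 1.0]  # score = 1.5, so the model predicts class 1
x_adv = white_box_evasion(global_weights, x_clean, epsilon=0.5)

print(predict(global_weights, global_bias, x_clean))  # 1
print(predict(global_weights, global_bias, x_adv))    # 0
```

A black-box attacker would have to estimate the same direction from query responses alone, which is why the broadcast step lowers the cost of the attack.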

3 POISONING ATTACKS

Depending on the attacker’s objective, poisoning attacks can be classified into two categories: a) untargeted poisoning attacks [55], [57], [58], [59], [60] and b) targeted poisoning attacks [26], [27], [61], [62], [63], [64], [65], [66].

Untargeted poisoning attacks aim to compromise the

integrity of the target model, which may eventually result

in a denial-of-service attack. In targeted poisoning attacks,

the learnt model outputs the target label specified by the

adversary for particular testing examples, e.g., predicting

spams as non-spams, and predicting attacker-desired labels

for testing examples with a particular Trojan trigger (back-

door/trojan attacks). However, the testing error for other

testing examples is unaffected. Generally, targeted attacks

is more difficult to conduct than untargeted attacks as the

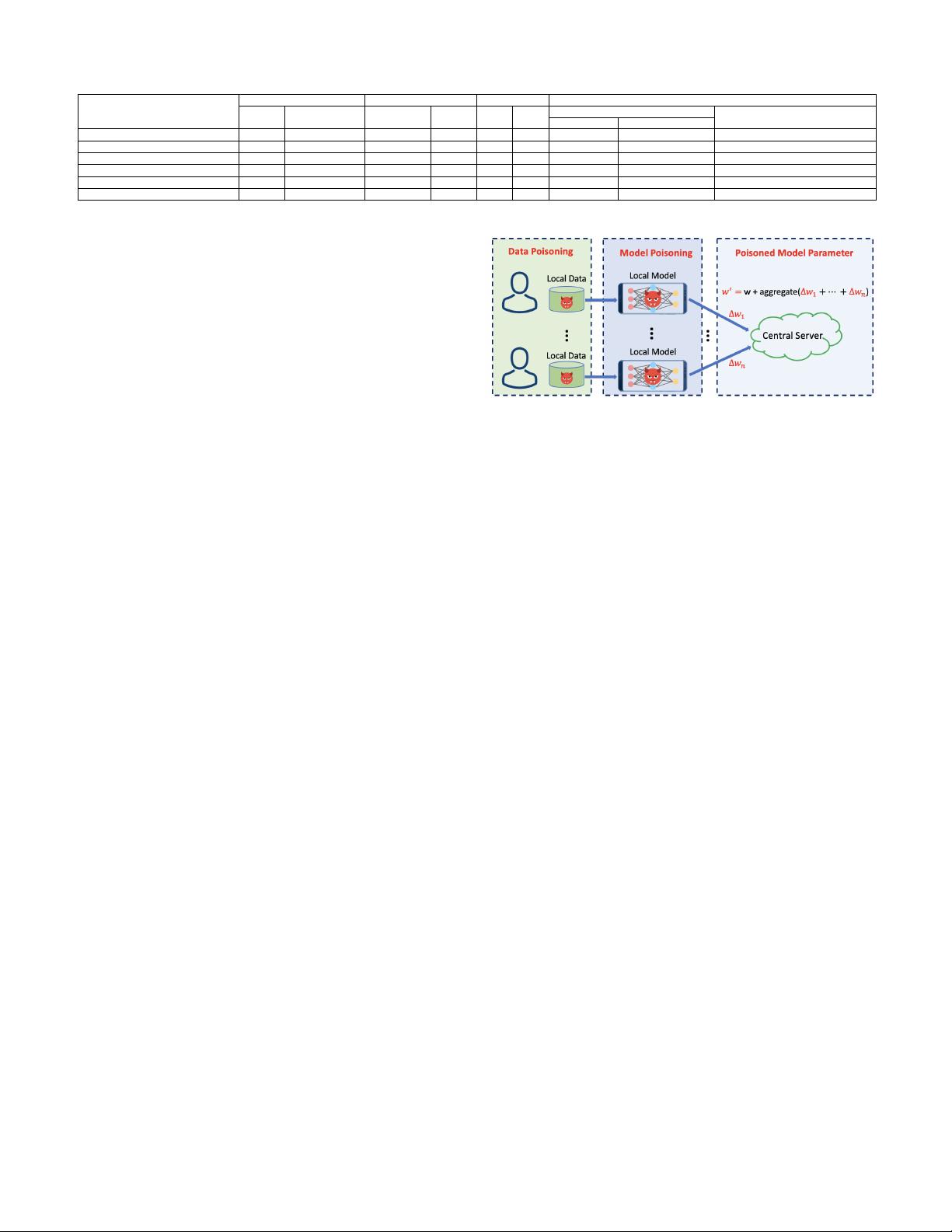

attacker has a specific goal to achieve. Poisoning attacks

during the training phase can be performed on the data or

on the model. Fig. 2 shows that the poisoned updates can

be sourced from two poisoning attacks: (1) data poisoning

attack during local data collection; and (2) model poisoning

attack during local model training process. At a high level,

both poisoning attacks attempt to modify the behavior of

the target model in some undesirable way.

Fig. 2: Data vs. model poisoning attacks in FL.
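The two entry points in Fig. 2 can be sketched on a toy participant. This is an illustration under stated assumptions, not a method from the cited works: the model is a single weight fitted by one least-squares gradient step, and `local_update`, `poison_data`, and `poison_update` are hypothetical names. Data poisoning tampers with the dataset before training; model poisoning tampers with the update after training.

```python
def local_update(w, data, lr=0.1):
    """One gradient step of least squares on y ~ w * x; returns the weight delta."""
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return -lr * grad


def poison_data(data):
    """Data poisoning: alter the local dataset before training (here: negate labels)."""
    return [(x, -y) for x, y in data]


def poison_update(delta):
    """Model poisoning: alter the trained update before sending it (here: flip its sign)."""
    return -delta


w_global = 0.0
local_data = [(1.0, 2.0), (2.0, 4.0)]  # hypothetical samples of y = 2x

honest = local_update(w_global, local_data)
via_data = local_update(w_global, poison_data(local_data))
via_model = poison_update(local_update(w_global, local_data))

print(honest, via_data, via_model)
```

In this toy case both routes happen to yield the same poisoned update. The difference lies in control: data poisoning acts only through the training procedure, whereas model poisoning lets the attacker set the submitted update directly.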

3.1 Data Poisoning

Data poisoning attacks largely fall into two categories: 1) clean-label [44], [65], [67], [68], [69], [70] and 2) dirty-label [63], [64]. Clean-label attacks assume that the adversary cannot change the label of any training data, as there is a process by which data are certified as belonging to the correct class, and the poisoning of data samples has to be imperceptible. In contrast, in dirty-label poisoning, the adversary can introduce into the training data a number of data samples that it wishes the model to misclassify, each carrying the desired target label.

One common example of a dirty-label poisoning attack is the label-flipping attack [28], [58]. The labels of honest training examples of one class are flipped to another class while the features of the data are kept unchanged. For example, the malicious participants in the system can poison their dataset by flipping the labels of all 1s to 7s. A successful attack produces a model that is unable to correctly classify 1s and incorrectly predicts them to be 7s. Another weak but realistic attack scenario is backdoor poisoning [24], [25], [64]. Here, an adversary can modify individual features or small regions of the original training dataset to implant a backdoor trigger into the model. The model will behave normally on clean data, yet will consistently predict a target class whenever the trigger (e.g., a stamp on an image) appears. This makes the attack harder to detect. For backdoor attacks in FL, a global trigger pattern can be decomposed into separate local patterns, which are then embedded into the training sets of different adversarial participants [25].
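The attacks described above can be sketched on toy data. This is a minimal illustration, not the procedure of the cited works: the function names are hypothetical, images are plain nested lists, the trigger is a set of pixel coordinates, pairing the stamped image with a target label is an assumption about how the backdoor is implanted, and the round-robin split of the global trigger is only one possible decomposition.

```python
def flip_labels(dataset, source, target):
    """Label flipping: relabel every `source` example as `target`; features untouched."""
    return [(x, target if y == source else y) for x, y in dataset]


def stamp_trigger(image, trigger_pixels, value=1.0):
    """Return a copy of `image` with the trigger pixels set to `value`."""
    stamped = [row[:] for row in image]
    for r, c in trigger_pixels:
        stamped[r][c] = value
    return stamped


def backdoor_example(image, trigger_pixels, target_label):
    """Backdoor poisoning: a stamped image paired with the attacker's target label."""
    return stamp_trigger(image, trigger_pixels), target_label


def split_trigger(trigger_pixels, num_attackers):
    """Decompose a global trigger into local patterns, one per adversarial participant."""
    return [trigger_pixels[i::num_attackers] for i in range(num_attackers)]


# Label flipping: every 1 becomes a 7 (hypothetical two-feature samples).
dataset = [([0.1, 0.9], 1), ([0.8, 0.2], 7), ([0.2, 0.7], 1)]
flipped = flip_labels(dataset, source=1, target=7)

# Distributed backdoor: each attacker stamps only its local part of the trigger.
blank = [[0.0] * 4 for _ in range(4)]
global_trigger = [(0, 0), (0, 1), (3, 2), (3, 3)]
local_patterns = split_trigger(global_trigger, num_attackers=2)
poisoned_image, poisoned_label = backdoor_example(
    blank, local_patterns[0], target_label=7
)
```

At inference time the attacker would stamp the full `global_trigger` on an input; each participant's training set only ever contains one local pattern.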

Data poisoning attacks can be carried out by any FL participant. Due to the distributed nature of FL, data poisoning attacks are generally less effective than model poisoning attacks [26], [27], [28], [29]. The impact on the FL model depends on the extent to which the backdoor participants engage in the attacks and on the amount of training data being poisoned. A recent work shows that poisoning edge-case (low probability) training samples is more effective [24].
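A small arithmetic sketch shows why the impact scales with the number of participating attackers. It assumes the server takes an unweighted mean of scalar updates, a simplification that this section does not specify; `aggregate`, `aggregated_update`, and the update values of 1.0 and -1.0 are hypothetical.

```python
def aggregate(updates):
    """Unweighted mean of scalar updates (a simplified stand-in for server aggregation)."""
    return sum(updates) / len(updates)


def aggregated_update(num_participants, num_attackers, honest=1.0, poisoned=-1.0):
    """Aggregate when `num_attackers` of the participants submit a poisoned update."""
    num_honest = num_participants - num_attackers
    updates = [poisoned] * num_attackers + [honest] * num_honest
    return aggregate(updates)


for attackers in (0, 1, 5):
    print(attackers, aggregated_update(10, attackers))
```

Under these assumptions, one attacker among ten participants only moves the aggregate from 1.0 to about 0.8, while five attackers cancel the honest signal entirely.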