Multi-user Location Correlation Protection with Differential Privacy

Lu Ou†, Zheng Qin†, Yonghe Liu⊤, Hui Yin†§, Yupeng Hu†, Hao Chen†

† College of Information Science and Engineering, Hunan University, Changsha 410082, China

⊤ Department of Computer Science and Engineering, University of Texas at Arlington, Arlington 76013, USA

§ Department of Mathematics and Computer Science, Changsha University, Changsha 410022, China

Abstract—In the big data era, with the rapid development of location-based applications, GPS-enabled devices, and big data institutions, location correlation privacy has become a growing concern. Because adversaries may combine location correlations with their background knowledge to infer users' private information, such correlations should be protected. Location perturbation and generalization have been proposed to address the location disclosure problem. However, most of these approaches rely on syntactic privacy models without a rigorous privacy guarantee. Furthermore, many approaches perturb only the locations of a single user without considering location correlations among multiple users, so they cannot effectively prevent various inference attacks. Differential privacy is currently regarded as a standard for privacy protection, but applying it to protect location correlations raises new challenges: the protection should not only meet the needs of users who request location-based services but also protect the location correlations among multiple users.

In this paper, we propose a systematic solution to protect the privacy of location correlations among multiple users with a rigorous privacy guarantee. First, we propose a novel definition, private candidate sets, which are obtained from hidden Markov models. Then, we quantify the location correlation between two users by using the similarity of their hidden Markov models. Finally, we present a private trajectory releasing mechanism that protects the location correlations among users who move according to hidden Markov models over a period of time. Experiments on real-world datasets show that our multi-user location correlation protection is efficient.

Keywords—differential privacy, hidden Markov models, location-based services, location correlation, similarity of hidden Markov models, private trajectory releasing

I. INTRODUCTION

In the big data era, with the popularity of smart phones

and other GPS enabled devices, the Location Based Service

(LBS) is an integral part of our life. This service can bring

convenience in our life. Therefore, in order to improve quality

of our life, big data institutions may improve the quality of

LBSs. At the same time, big data institutions will achieve

location information about each user and other related infor-

mation from location-based servers such as trajectories and the

user’s interest to improve the quality of LBSs. However, the

big data institutions are honest and curious. They may mine

some sensitive information about users, such as a location

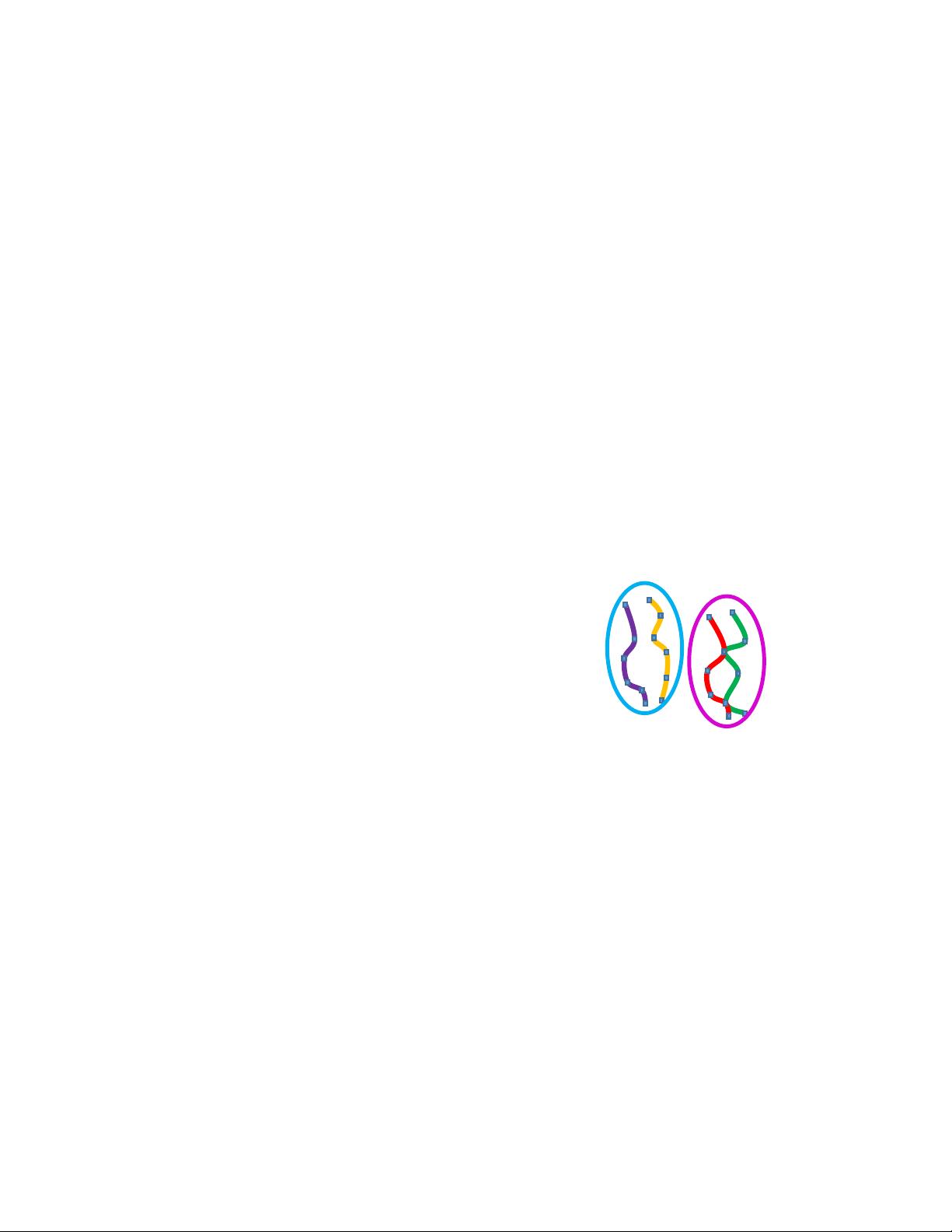

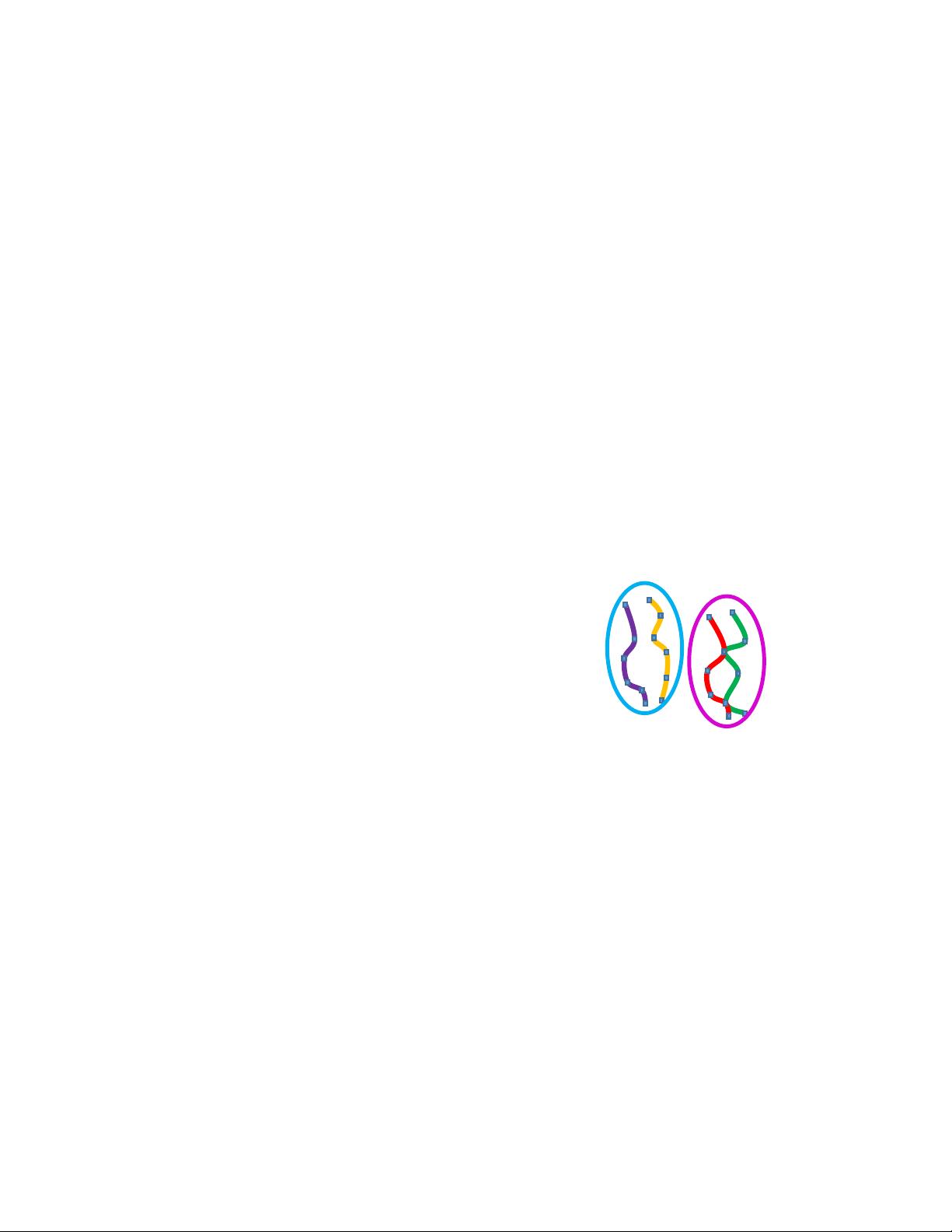

correlation. For instance, there is a big data institution of

catering who wants to analyze restaurant interests for different

occupational groups. Through data mining, they may find that

users who are in the same occupational group are often at

the same location at the same time or the different time (as

shown in Figure 1). Furthermore, they may use this location

correlation to mine social correlations among the users. If

social correlations among multiple users are exposed, some

sensitive information may be inferred. Now we give a scenario

to explain this problem. As we can see in Figure 1, Betty

and Jerry are students (i.e., they are in the same occupational

group). Simultaneously, they are at same location (i.e., the

location correlation between Betty and Jerry) twice. Therefore,

adversaries may consider that Jerry and Betty are classmates

according to their background knowledge. Then if Jerry’s age

is leaked, Betty’s age will be known by adversaries too. Thus,

location correlation should be hidden to preserve the user’s

privacy.

Fig. 1. User Location Traces of Different Occupational Groups (legend: Jerry, Betty, Mia, Andy; Student, Doctor)
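The co-location inference in the Betty and Jerry scenario can be sketched as follows. This is a minimal illustration, assuming each trace is a hypothetical mapping from discrete time slots to location IDs; the traces and the function names `co_locations` and `shared_places` are ours, not the paper's. `co_locations` captures the simultaneous case (Betty and Jerry meeting twice), while `shared_places` captures the case where users visit the same place at different times.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical representation: a trace maps a discrete time slot to a location ID.
Trace = Dict[int, str]


def co_locations(a: Trace, b: Trace) -> List[Tuple[int, str]]:
    """Return (time, location) pairs where both users are at the same place at the same time."""
    shared_times = sorted(set(a) & set(b))
    return [(t, a[t]) for t in shared_times if a[t] == b[t]]


def shared_places(a: Trace, b: Trace) -> Set[str]:
    """Return the locations both users visited, possibly at different times."""
    return set(a.values()) & set(b.values())


# Hypothetical traces mirroring the Betty/Jerry scenario of Fig. 1.
betty: Trace = {1: "library", 2: "canteen", 3: "gym", 4: "classroom"}
jerry: Trace = {1: "library", 2: "gym", 3: "dorm", 4: "classroom"}

print(co_locations(betty, jerry))           # [(1, 'library'), (4, 'classroom')]
print(sorted(shared_places(betty, jerry)))  # ['classroom', 'gym', 'library']
```

Two simultaneous meetings are exactly the kind of signal an adversary can combine with background knowledge (both users are students) to guess that they are classmates.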

There are several works on location privacy protection, but most existing approaches focus on a single user's privacy. Although a few works also consider the temporal correlations of a single user, they do not consider location correlations among multiple users.

To protect the privacy of location correlations among multiple users with a rigorous privacy guarantee, we propose a novel private trajectory releasing mechanism based on hidden Markov models (HMMs) to address this location correlation disclosure problem. Using HMMs, we generate a probable trajectory set that covers every possible trajectory.
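The idea of a probable trajectory set can be illustrated with a brute-force sketch. It assumes a discrete HMM whose hidden states are a user's true locations and whose observations are noisy location reports; every hidden trajectory with nonzero posterior probability given the observations is then one possible eventuality. The parameters `pi`, `A`, `B`, the `min_prob` cut-off, and the function name are hypothetical, and enumerating all N^T paths is only feasible for tiny models, so this is not the paper's construction.

```python
import itertools
from typing import List, Sequence, Tuple


def probable_trajectories(
    pi: Sequence[float],
    A: Sequence[Sequence[float]],
    B: Sequence[Sequence[float]],
    obs: Sequence[int],
    min_prob: float = 0.0,
) -> List[Tuple[Tuple[int, ...], float]]:
    """Return hidden trajectories whose posterior P(path | obs) exceeds min_prob, most likely first.

    Brute force over all N^T paths, so only suitable for toy models.
    """
    n = len(pi)
    scored = []
    for path in itertools.product(range(n), repeat=len(obs)):
        # Joint probability P(path, obs) = pi[s0] * B[s0][o0] * prod_t A[s_{t-1}][s_t] * B[s_t][o_t].
        p = pi[path[0]] * B[path[0]][obs[0]]
        for t in range(1, len(obs)):
            p *= A[path[t - 1]][path[t]] * B[path[t]][obs[t]]
        scored.append((path, p))
    total = sum(p for _, p in scored)  # P(obs)
    if total == 0.0:
        raise ValueError("observations are impossible under this model")
    result = [(path, p / total) for path, p in scored if p / total > min_prob]
    result.sort(key=lambda item: item[1], reverse=True)
    return result


# Hypothetical 2-location model (0 = home, 1 = office); observations are noisy reports.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]

for path, prob in probable_trajectories(pi, A, B, obs=[0, 0]):
    print(path, round(prob, 3))
```

With these toy parameters the trajectory (0, 0) carries about 83% of the posterior mass, but the other three trajectories remain possible, which is why a set rather than a single path is kept.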

Using a similarity measure between HMMs, we quantify the location correlation between two users over a period of time.
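This section does not define the paper's HMM similarity measure. As a stand-in, the sketch below uses the well-known Juang-Rabiner distance D(λ1, λ2) = (1/T)[log P(O2 | λ2) − log P(O2 | λ1)], where O2 is a length-T observation sequence drawn from λ2, symmetrizes it, and maps it to a similarity in (0, 1] via exp(−D). The exp(−D) mapping, the `HMM` container, and all function names are assumptions made for illustration.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class HMM:
    pi: List[float]        # initial distribution over hidden locations
    A: List[List[float]]   # A[i][j] = P(next state j | state i)
    B: List[List[float]]   # B[i][o] = P(observation o | state i)


def log_likelihood(m: HMM, obs: Sequence[int]) -> float:
    """Scaled forward algorithm: returns log P(obs | m)."""
    n = len(m.pi)
    log_p = 0.0
    alpha: List[float] = []
    for t, o in enumerate(obs):
        if t == 0:
            alpha = [m.pi[i] * m.B[i][o] for i in range(n)]
        else:
            alpha = [sum(alpha[i] * m.A[i][j] for i in range(n)) * m.B[j][o] for j in range(n)]
        c = sum(alpha)
        if c == 0.0:
            return -math.inf  # the sequence is impossible under m
        log_p += math.log(c)
        alpha = [a / c for a in alpha]  # rescale to avoid numerical underflow
    return log_p


def sample(m: HMM, length: int, rng: random.Random) -> List[int]:
    """Draw an observation sequence of the given length from m."""
    def draw(weights: Sequence[float]) -> int:
        return rng.choices(range(len(weights)), weights=weights)[0]

    state = draw(m.pi)
    obs: List[int] = []
    for _ in range(length):
        obs.append(draw(m.B[state]))
        state = draw(m.A[state])
    return obs


def directed_distance(m1: HMM, m2: HMM, length: int, rng: random.Random) -> float:
    """Juang-Rabiner D(m1, m2): per-symbol log-likelihood gap on a sequence drawn from m2."""
    obs = sample(m2, length, rng)
    return (log_likelihood(m2, obs) - log_likelihood(m1, obs)) / length


def hmm_similarity(m1: HMM, m2: HMM, length: int = 2000, seed: int = 0) -> float:
    """Symmetrized distance mapped to (0, 1] with exp(-d), an assumed mapping."""
    rng = random.Random(seed)
    d = 0.5 * (directed_distance(m1, m2, length, rng) + directed_distance(m2, m1, length, rng))
    return math.exp(-max(d, 0.0))  # clamp small negative estimates caused by sampling noise


# Hypothetical users: same mobility (A), different emission behaviour (B).
user1 = HMM([0.6, 0.4], [[0.7, 0.3], [0.4, 0.6]], [[0.9, 0.1], [0.2, 0.8]])
user2 = HMM([0.6, 0.4], [[0.7, 0.3], [0.4, 0.6]], [[0.1, 0.9], [0.8, 0.2]])
print(hmm_similarity(user1, user1))  # 1.0
print(hmm_similarity(user1, user2))  # expected to fall below 1.0 because the emissions differ
```

Because the distance is estimated from sampled sequences, the result depends on `length` and `seed`; a longer sequence gives a more stable estimate at a higher cost.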

Then, based on the probable trajectories and the correlated trajectories, we compute a private candidate set, which is used to achieve differential privacy. Finally, we propose an extended differential privacy approach to protect users' privacy.
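How a candidate set can be used to release a trajectory with differential privacy may be sketched with the standard exponential mechanism, which selects a candidate r with probability proportional to exp(ε·u(r) / (2Δu)) and satisfies ε-differential privacy when Δu bounds the sensitivity of the utility u. The paper's extended mechanism is not specified in this section, so this is a generic substitute for illustration only; the candidate set, the Hamming-based utility, and its sensitivity (the trajectory length, assuming any two trajectories of that length are treated as neighbours) are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Trajectory = Tuple[str, ...]


def hamming_utility(true_traj: Trajectory) -> Callable[[Trajectory], float]:
    """Hypothetical utility: minus the number of time slots where a candidate differs from the truth."""
    return lambda cand: -float(sum(a != b for a, b in zip(true_traj, cand)))


def selection_probabilities(candidates: Sequence[Trajectory],
                            utility: Callable[[Trajectory], float],
                            epsilon: float, sensitivity: float) -> List[float]:
    """Exponential-mechanism output distribution over the candidate set."""
    if epsilon <= 0 or sensitivity <= 0:
        raise ValueError("epsilon and sensitivity must be positive")
    scores = [epsilon * utility(c) / (2.0 * sensitivity) for c in candidates]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # shift by the max score for numerical stability
    total = sum(weights)
    return [w / total for w in weights]


def exponential_mechanism(candidates: Sequence[Trajectory],
                          utility: Callable[[Trajectory], float],
                          epsilon: float, sensitivity: float,
                          rng: random.Random) -> Trajectory:
    """Sample one trajectory from the candidate set according to the exponential mechanism."""
    probs = selection_probabilities(candidates, utility, epsilon, sensitivity)
    return rng.choices(list(candidates), weights=probs)[0]


# Hypothetical candidate set for a 3-slot trajectory.
true_traj: Trajectory = ("A", "B", "B")
candidates: List[Trajectory] = [("A", "B", "B"), ("A", "A", "B"), ("B", "A", "A")]
util = hamming_utility(true_traj)
probs = selection_probabilities(candidates, util, epsilon=1.0, sensitivity=len(true_traj))
released = exponential_mechanism(candidates, util, 1.0, len(true_traj), random.Random(7))
print(probs, released)
```

A smaller ε flattens the output distribution, so the released trajectory reveals less about the true one at the cost of utility.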

We evaluate our approach on real-world datasets, and the experimental results show that our approach can protect