978-1-4244-7153-9/10/$26.00 ©2010 IEEE

The Hadoop Distributed File System

Konstantin Shvachko, Hairong Kuang, Sanjay Radia, Robert Chansler

Yahoo!

Sunnyvale, California USA

{Shv, Hairong, SRadia, Chansler}@Yahoo-Inc.com

Abstract—The Hadoop Distributed File System (HDFS) is

designed to store very large data sets reliably, and to stream

those data sets at high bandwidth to user applications. In a large

cluster, thousands of servers both host directly attached storage

and execute user application tasks. By distributing storage and

computation across many servers, the resource can grow with

demand while remaining economical at every size. We describe

the architecture of HDFS and report on experience using HDFS

to manage 25 petabytes of enterprise data at Yahoo!.

Keywords: Hadoop, HDFS, distributed file system

I. INTRODUCTION AND RELATED WORK

Hadoop [1][16][19] provides a distributed file system and a

framework for the analysis and transformation of very large

data sets using the MapReduce [3] paradigm. An important

characteristic of Hadoop is the partitioning of data and compu-

tation across many (thousands) of hosts, and executing applica-

tion computations in parallel close to their data. A Hadoop

cluster scales computation capacity, storage capacity and IO

bandwidth by simply adding commodity servers. Hadoop clus-

ters at Yahoo! span 25 000 servers, and store 25 petabytes of

application data, with the largest cluster being 3500 servers.

One hundred other organizations worldwide report using

Hadoop.

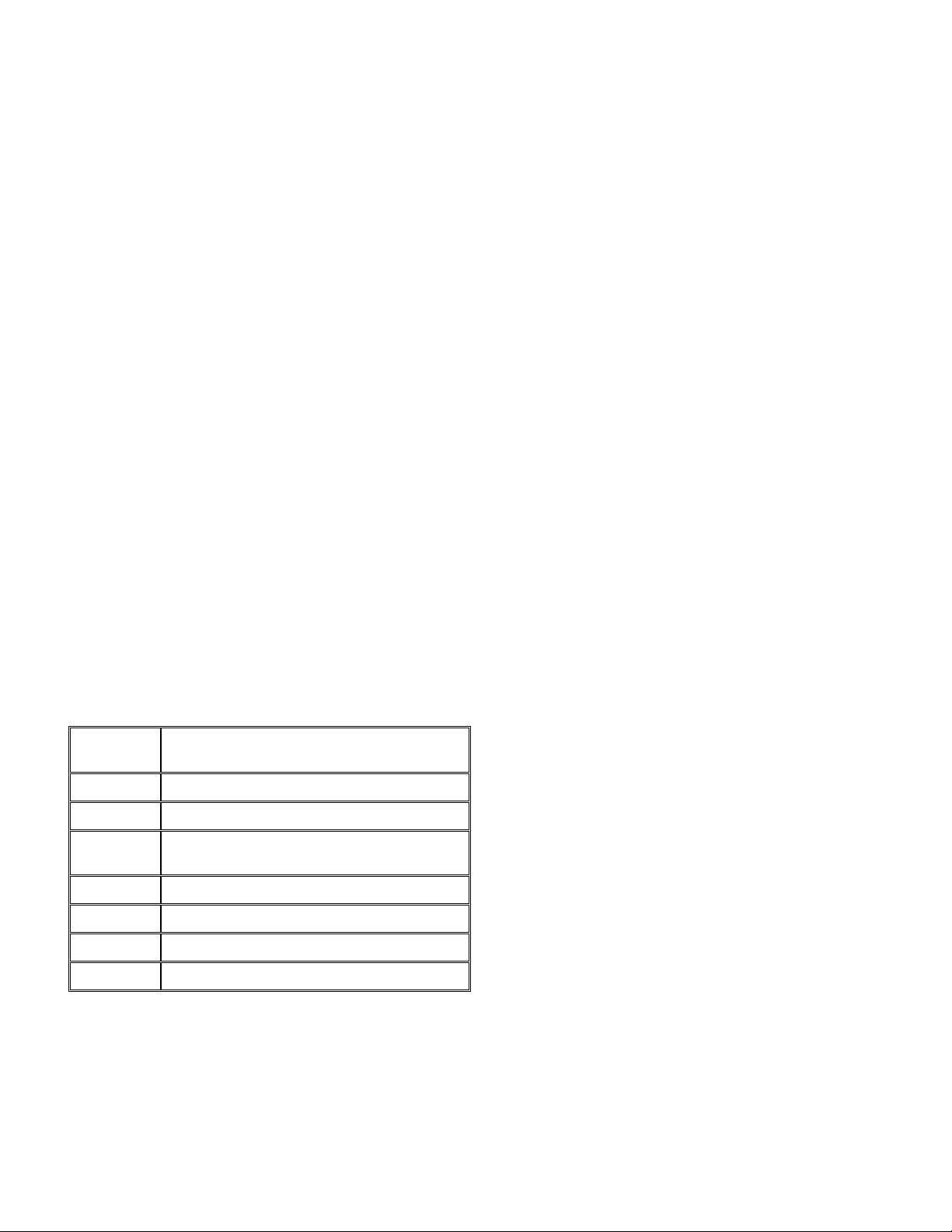

Table 1. Hadoop project components

Hadoop is an Apache project; all components are available

via the Apache open source license. Yahoo! has developed and

contributed to 80% of the core of Hadoop (HDFS and MapRe-

duce). HBase was originally developed at Powerset, now a

department at Microsoft. Hive [15] was originated and devel-

developed at Facebook. Pig [4], ZooKeeper [6], and Chukwa

were originated and developed at Yahoo! Avro was originated

at Yahoo! and is being co-developed with Cloudera.

HDFS is the file system component of Hadoop. While the

interface to HDFS is patterned after the UNIX file system,

faithfulness to standards was sacrificed in favor of improved

performance for the applications at hand.

HDFS stores file system metadata and application data

separately. As in other distributed file systems, like PVFS

[2][14], Lustre [7] and GFS [5][8], HDFS stores metadata on a

dedicated server, called the NameNode. Application data are

stored on other servers called DataNodes. All servers are fully

connected and communicate with each other using TCP-based

protocols.

Unlike Lustre and PVFS, the DataNodes in HDFS do not

use data protection mechanisms such as RAID to make the data

durable. Instead, like GFS, the file content is replicated on mul-

tiple DataNodes for reliability. While ensuring data durability,

this strategy has the added advantage that data transfer band-

width is multiplied, and there are more opportunities for locat-

ing computation near the needed data.

Several distributed file systems have or are exploring truly

distributed implementations of the namespace. Ceph [17] has a

cluster of namespace servers (MDS) and uses a dynamic sub-

tree partitioning algorithm in order to map the namespace tree

to MDSs evenly. GFS is also evolving into a distributed name-

space implementation [8]. The new GFS will have hundreds of

namespace servers (masters) with 100 million files per master.

Lustre [7] has an implementation of clustered namespace on its

roadmap for Lustre 2.2 release. The intent is to stripe a direc-

tory over multiple metadata servers (MDS), each of which con-

tains a disjoint portion of the namespace. A file is assigned to a

particular MDS using a hash function on the file name.

II. ARCHITECTURE

A. NameNode

The HDFS namespace is a hierarchy of files and directo-

ries. Files and directories are represented on the NameNode by

inodes, which record attributes like permissions, modification

and access times, namespace and disk space quotas. The file

content is split into large blocks (typically 128 megabytes, but

user selectable file-by-file) and each block of the file is inde-

pendently replicated at multiple DataNodes (typically three, but

user selectable file-by-file). The NameNode maintains the

namespace tree and the mapping of file blocks to DataNodes