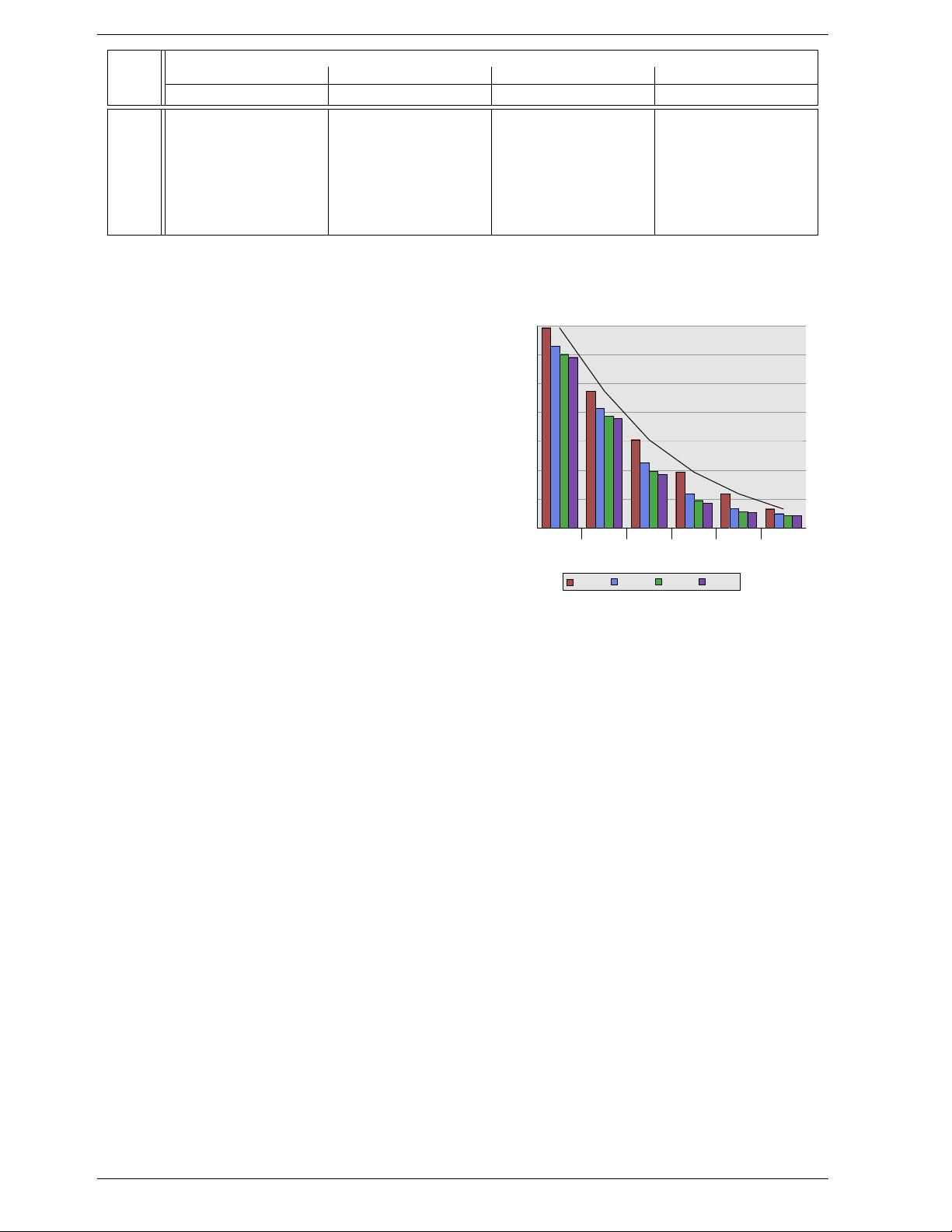

| Cache Size | Direct, CL=32 | Direct, CL=64 | 2-way, CL=32 | 2-way, CL=64 | 4-way, CL=32 | 4-way, CL=64 | 8-way, CL=32 | 8-way, CL=64 |
|---|---:|---:|---:|---:|---:|---:|---:|---:|
| 512k | 27,794,595 | 20,422,527 | 25,222,611 | 18,303,581 | 24,096,510 | 17,356,121 | 23,666,929 | 17,029,334 |
| 1M | 19,007,315 | 13,903,854 | 16,566,738 | 12,127,174 | 15,537,500 | 11,436,705 | 15,162,895 | 11,233,896 |
| 2M | 12,230,962 | 8,801,403 | 9,081,881 | 6,491,011 | 7,878,601 | 5,675,181 | 7,391,389 | 5,382,064 |
| 4M | 7,749,986 | 5,427,836 | 4,736,187 | 3,159,507 | 3,788,122 | 2,418,898 | 3,430,713 | 2,125,103 |
| 8M | 4,731,904 | 3,209,693 | 2,690,498 | 1,602,957 | 2,207,655 | 1,228,190 | 2,111,075 | 1,155,847 |
| 16M | 2,620,587 | 1,528,592 | 1,958,293 | 1,089,580 | 1,704,878 | 883,530 | 1,671,541 | 862,324 |

Table 3.1: Effects of Cache Size, Associativity, and Line Size (L2 cache misses; columns give the L2 associativity and the cache line size CL in bytes)

Given our 4MB/64B cache and 8-way set associativity, the cache we are left with has 8,192 sets, and only 13 bits of the address are used to select the cache set. To determine which (if any) of the entries in the cache set contains the addressed cache line, 8 tags have to be compared. That is feasible to do in a very short time. With an experiment we can see that this makes sense.
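The numbers in the previous paragraph follow from simple arithmetic on powers of two. The sketch below checks them; the helper name `cache_geometry` is hypothetical, and it assumes that cache size, line size, and associativity are all powers of two (as they are for every configuration in Table 3.1).

```python
def cache_geometry(cache_size: int, line_size: int, ways: int) -> tuple[int, int, int]:
    """Return (number of sets, offset bits, set-index bits).

    Hypothetical helper; assumes all arguments are powers of two.
    """
    sets = cache_size // (line_size * ways)    # size = line size * ways * sets
    offset_bits = line_size.bit_length() - 1   # log2(line size)
    index_bits = sets.bit_length() - 1         # log2(number of sets)
    return sets, offset_bits, index_bits


sets, o_bits, s_bits = cache_geometry(4 * 1024 * 1024, 64, 8)
print(f"{sets} sets, O = {o_bits} bits, S = {s_bits} bits")
# → 8192 sets, O = 6 bits, S = 13 bits
```

Raising the associativity at a fixed cache size shrinks the number of sets (and thus the set-index bits) while increasing the number of tags that must be compared per lookup.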

Table 3.1 shows the number of L2 cache misses for a program (gcc in this case, the most important benchmark of them all, according to the Linux kernel people) for varying cache size, cache line size, and set associativity. In Section 7.2 we will introduce the tool to simulate the caches as required for this test.

Just in case this is not yet obvious, the relationship of all these values is

$$\text{cache size} = \text{cache line size} \times \text{associativity} \times \text{number of sets}$$

The addresses are mapped into the cache by using

$$O = \log_2(\text{cache line size})$$

$$S = \log_2(\text{number of sets})$$

in the way the figure on page 15 shows.
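To make the mapping concrete, here is a sketch that splits an address into its tag, set index, and offset for the 4MB, 64-byte-line, 8-way cache discussed above (O = 6, S = 13). It assumes the usual layout in which the low O bits select the byte within the line, the next S bits select the set, and the remaining high bits form the tag; the function name `split_address` and the example address are made up for illustration.

```python
def split_address(addr: int, offset_bits: int, index_bits: int) -> tuple[int, int, int]:
    """Split an address into (tag, set index, offset) bit fields."""
    offset = addr & ((1 << offset_bits) - 1)                     # low O bits: byte within the line
    set_index = (addr >> offset_bits) & ((1 << index_bits) - 1)  # next S bits: which set
    tag = addr >> (offset_bits + index_bits)                     # high bits: compared with the set's tags
    return tag, set_index, offset


O, S = 6, 13                        # 64-byte lines, 8,192 sets (4MB, 8-way)
addr = 0x12345678
print(split_address(addr, O, S))    # → (582, 4441, 56)

# Addresses 8,192 * 64 bytes = 512KB apart land in the same set and differ only
# in the tag, so at most 8 such lines can be cached at once in an 8-way cache.
other = addr + (1 << S) * (1 << O)
print(split_address(other, O, S))   # → (583, 4441, 56)
```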

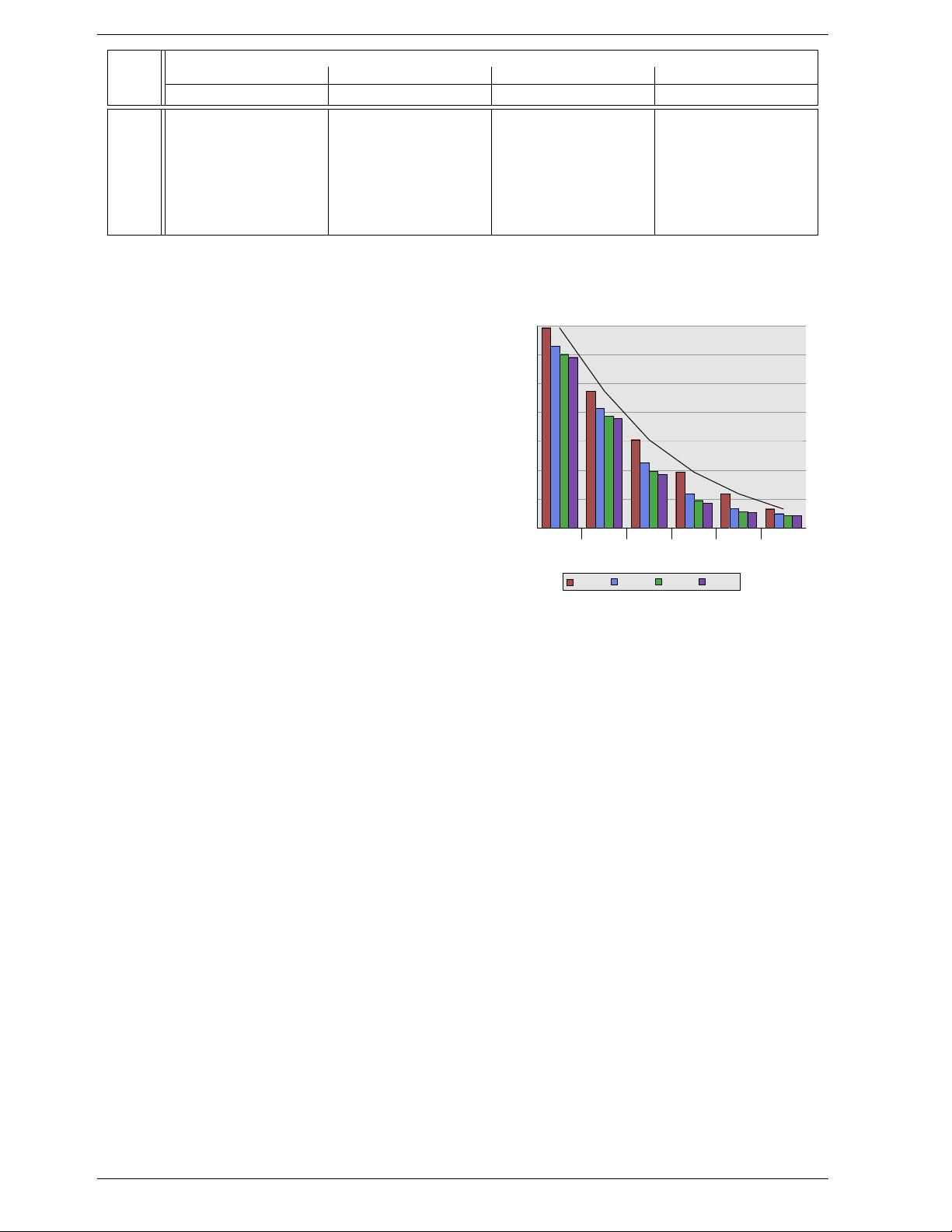

Figure 3.8 makes the data of the table more comprehensible. It shows the data for a fixed cache line size of 32 bytes. Looking at the numbers for a given cache size, we can see that associativity can indeed help to reduce the number of cache misses significantly. For an 8MB cache, going from direct mapping to a 2-way set-associative cache saves about 43% of the cache misses (4,731,904 vs. 2,690,498). The processor can keep more of the working set in the cache with a set-associative cache than with a direct-mapped cache.
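The percentage can be recomputed from the CL=32 columns of Table 3.1. The short sketch below (the names `misses_cl32` and `saving` are hypothetical) does this for every cache size, not just 8MB.

```python
# L2 misses for CL=32 from Table 3.1: (direct-mapped, 2-way)
misses_cl32 = {
    "512k": (27_794_595, 25_222_611),
    "1M":   (19_007_315, 16_566_738),
    "2M":   (12_230_962,  9_081_881),
    "4M":   ( 7_749_986,  4_736_187),
    "8M":   ( 4_731_904,  2_690_498),
    "16M":  ( 2_620_587,  1_958_293),
}


def saving(before: int, after: int) -> float:
    """Fraction of misses eliminated when going from `before` to `after`."""
    return (before - after) / before


for size, (direct, two_way) in misses_cl32.items():
    print(f"{size:>4}: {saving(direct, two_way):6.1%} fewer misses with 2-way")
```

For the 8MB cache this prints 43.1%, the largest saving of any size in the table.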

In the literature one can occasionally read that introducing associativity has the same effect as doubling the cache size. This is true in some extreme cases, as can be seen in the jump from the 4MB to the 8MB cache: the 2-way 4MB cache incurs about as many misses as the direct-mapped 8MB cache (4,736,187 vs. 4,731,904). But it certainly is not true for further doubling of the associativity. As we can see in the data, the successive gains are much smaller. We should not completely discount the effects, though. In the example program the peak memory use is 5.6MB. So with an 8MB cache there are unlikely to be many (more than two) uses for the same cache set. With a larger working set the savings can be higher, as we can see from the larger benefits of associativity for the smaller cache sizes.

Figure 3.8: Cache Size vs Associativity (CL=32). Cache Misses (in Millions) plotted against Cache Size (512k to 16M) for Direct, 2-way, 4-way, and 8-way caches.
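The shrinking successive gains can be quantified from the CL=32 columns of Table 3.1. This sketch (the names `table_cl32` and `successive_savings` are hypothetical) computes, for each cache size, the fraction of the remaining misses removed by each doubling of associativity: direct to 2-way, 2-way to 4-way, and 4-way to 8-way.

```python
# L2 misses for CL=32 from Table 3.1: direct, 2-way, 4-way, 8-way
table_cl32 = {
    "512k": [27_794_595, 25_222_611, 24_096_510, 23_666_929],
    "1M":   [19_007_315, 16_566_738, 15_537_500, 15_162_895],
    "2M":   [12_230_962,  9_081_881,  7_878_601,  7_391_389],
    "4M":   [ 7_749_986,  4_736_187,  3_788_122,  3_430_713],
    "8M":   [ 4_731_904,  2_690_498,  2_207_655,  2_111_075],
    "16M":  [ 2_620_587,  1_958_293,  1_704_878,  1_671_541],
}


def successive_savings(row: list[int]) -> list[float]:
    """Relative miss reduction for each doubling of associativity."""
    return [(a - b) / a for a, b in zip(row, row[1:])]


for size, row in table_cl32.items():
    steps = successive_savings(row)
    print(f"{size:>4}: " + "  ".join(f"{s:5.1%}" for s in steps))
```

At every cache size each doubling removes a smaller fraction of the remaining misses than the one before, and the step from 4-way to 8-way removes less than 10%.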

In general, increasing the associativity of a cache above 8 seems to have little effect for a single-threaded workload. With the introduction of hyper-threaded processors, where the first-level cache is shared, and multi-core processors, which use a shared L2 cache, the situation changes. Now you basically have two programs hitting the same cache, which in practice halves the effective associativity (or quarters it for quad-core processors). So it can be expected that, with increasing numbers of cores, the associativity of the shared caches should grow. Once this is not possible anymore (16-way set associativity is already hard), processor designers have to start using shared L3 caches and beyond, while L2 caches are potentially shared by a subset of the cores.
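The halving of the effective associativity can be illustrated with a toy simulation. This is not the simulation tool from Section 7.2; it is a sketch that assumes LRU replacement, a 64-set cache with 64-byte lines, and two hypothetical programs (`prog_a`, `prog_b`) that are perfectly interleaved and whose six cache lines each all map to the same set, a deliberately extreme case.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy set-associative cache with LRU replacement (illustration only)."""

    def __init__(self, num_sets: int, ways: int, line_size: int):
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        # One OrderedDict per set; key order runs from least to most recently used tag.
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.misses = 0

    def access(self, addr: int) -> None:
        line = addr // self.line_size
        cache_set = self.sets[line % self.num_sets]  # set chosen by the index bits
        tag = line // self.num_sets
        if tag in cache_set:
            cache_set.move_to_end(tag)               # hit: now most recently used
        else:
            self.misses += 1
            if len(cache_set) == self.ways:
                cache_set.popitem(last=False)        # set full: evict the LRU line
            cache_set[tag] = None


def count_misses(streams, ways, rounds=100):
    """Interleave the address streams round-robin through one shared cache."""
    cache = SetAssociativeCache(num_sets=64, ways=ways, line_size=64)
    for _ in range(rounds):
        for step in zip(*streams):
            for addr in step:
                cache.access(addr)
    return cache.misses


# Hypothetical workloads: six lines each, all in set 0 (stride = sets * line size).
stride = 64 * 64
prog_a = [i * stride for i in range(6)]
prog_b = [(100 + i) * stride for i in range(6)]

print(count_misses([prog_a], ways=8))            # → 6     (fits: only cold misses)
print(count_misses([prog_a, prog_b], ways=8))    # → 1200  (12 lines thrash 8 ways)
print(count_misses([prog_a, prog_b], ways=16))   # → 12    (fits again)
```

Each program alone fits into one 8-way set, but when the two are interleaved their twelve lines exceed the eight ways and LRU evicts every line just before it is reused; each program effectively sees only about four ways. Doubling the associativity to 16 restores the hits.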

Another effect we can study in Figure 3.8 is how the increase in cache size helps with performance. This data cannot be interpreted without knowing about the working

Ulrich Drepper Version 1.0 19