Distributed

Memory

Storage

Message

Passing

Framework

Memory Cloud

(Distributed Key-Value Store)

Trinity Specification Language

Graph Model

Graph Operations

GetInlinks(), Outlinks.Foreach(...), etc

Figure 2: System Layers

the data storage. Due to the diversity of graphs and the

diversity of graph applications, it is hard , if not entirely im-

possible, to support efficient general purpose graph compu-

tation using fixed graph schema. Instead of using fixed graph

schema and fixed computation models, Trinity let users de-

fine graph schema, communication protocols, and compu ta-

tion paradigms through TSL.

3. THE MEMORY CLOUD

We create a distributed memory cloud as Trinity’s stor-

age infrastructure. The memory cloud consists of 2

p

mem-

ory trunks, each of which is stored on a machine. Usually,

we have 2

p

> m, where m is the number of machines. In

other words, each machine hosts multiple memory trunks.

The reason we p artition a machine’s local memory space

into multiple memory trunks is twofold: 1) Trunk level par-

allelism can be achieved without any overhead of locking;

2) The performance of a single huge hash table is subop ti-

mal due to a higher probability of hashing conflicts. Essen-

tially, the entire memory cloud is partitioned into 2

p

mem-

ory trunks. To support fault-tolerant data persistence, these

memory trunks are also backed up in a shared distributed

file system called TFS (Trinity File System), which is similar

to HDFS [10].

On top of the memory cloud, we create a key-value store.

A key-value pair forms the most basic data structure of the

graph system or any system built on top of the memory

cloud. Here, keys are 64-bit globally unique identifiers, and

values are blobs of arbitrary length. As the memory cloud

is distributed across multiple machines, we cannot address

a key-value pair using its physical memory address. To ad-

dress a key-value p air, Trinity uses a hashing mechanism.

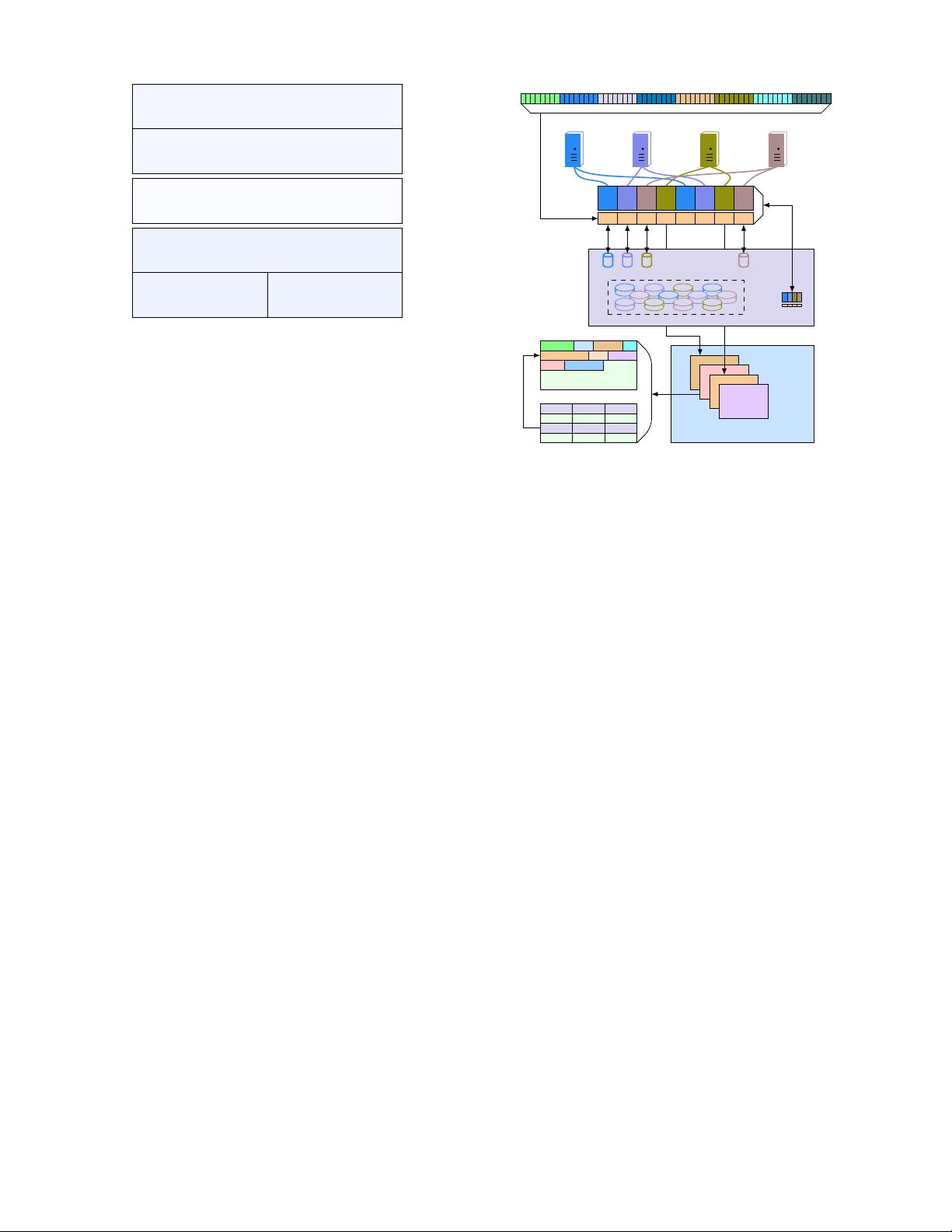

In order to locate the value of a given key, we first 1) iden-

tify the machine that stores the key-value pair, and then 2)

locate the key-value pair in one of the memory trunks on

that machine. Through this hashing mechanism (shown in

Figure 3), we provide a globally add ressable memory space.

Specifically, given a 64-bit key, to locate its correspond -

ing value in the memory cloud, we hash the key to a p-bit

value i ∈ [0, 2

p

− 1]. This means the key-value pair is stored

in memory trunk i within the memory cloud. To find out

which m achine memory t runk i is in, we maintain an “ad-

dressing table” that contains 2

p

slots, where each slot stores

a machine ID. Essentially, we implement a consistent hash-

ing mechanism that allows machines to join and leave the

64-bit UID

hash

machine 0 machine 1 machine 2 machine m

. . .

0 1

m

2

· · ·

1 2

m

p -bit

hash code

0 1 2 3

· · ·

j

k

2

p

-1

Addressing

Table

. . .

0 1 2

2

p

-1

Trinity File System

. . .

Memory

Trunk

Trinity

Slave

Memory Trunks

cell bytes

Memory Trunk

UID

Offset

Size

01. . . 321 123

10. . . 423 211

· · · · · · · · ·

Figure 3: Data Partitioning and Addressing

memory cloud (described later). Furthermore, in order for

global addressing to work, each machine keeps a replica of

the addressing table, and we will describe how we ensure the

consistency of the addressing tables in Section 6.2.

We then locate the key-value pair in memory trunk i,

which is stored on th e machine whose ID is in slot i of the

addressing table. Each memory trunk is associated with a

hash table. We hash the 64-bit key again to fin d the offset

and size of the key-value pair in the hash table. Given the

memory offset and the size, we retrieve th e key-value pair

from the memory trunk.

The addressing table provides a mechanism that allows

machines to dynamically join and leave the memory cloud.

When a machine fails, we reload the memory trunks it owns

from the TFS to other alive machines. All we need to do

is to update the addressing table so that the corresponding

slots point to the machines that host the data now. Simi-

larly, when new machines join the memory cloud, we relocate

some memory trunks t o those new machines and update the

addressing table accordingly.

Each key-value pair in the memory cloud may contain

some meta data for various purposes. Most notably, we

may associate each key-value pair with a spin lock. Spin

locks are used for concurrency control and physical memory

pinning. Multiple threads m ay try to access the same key-

value pair concurrently. A physical key-value pair may also

be moved by the memory defragmentation thread as elabo-

rated in Section 6.1. Therefore, we must en sure a key-value

pair is locked and pinned to a fixed memory position before

allowing any thread to manipulate it. For applications that

are not read-only, the spin lock mechanism allows a thread

to access a pinned physical key-value pair exclusively by re-

quiring all threads to acquire the lock before accessing or

moving a cell.

4. DATA MODEL

Trinity is d esigned to handle graph data of d iverse char-

acteristics. In this section, we describe data modeling issues