Understanding Deep Image Representations by Inverting Them

Aravindh Mahendran

University of Oxford

aravindh@robots.ox.ac.uk

Andrea Vedaldi

University of Oxford

vedaldi@robots.ox.ac.uk

Abstract

Image representations, from SIFT and Bag of Visual

Words to Convolutional Neural Networks (CNNs), are a

crucial component of almost any image understanding sys-

tem. Nevertheless, our understanding of them remains lim-

ited. In this paper we conduct a direct analysis of the visual

information contained in representations by asking the following

question: given an encoding of an image, to what

extent is it possible to reconstruct the image itself? To answer

this question we contribute a general framework to

invert representations. We show that this method can invert

representations such as HOG more accurately than recent

alternatives while being applicable to CNNs too. We then

use this technique to study the inverse of recent state-of-the-

art CNN image representations for the first time. Among our

findings, we show that several layers in CNNs retain pho-

tographically accurate information about the image, with

different degrees of geometric and photometric invariance.

1. Introduction

Most image understanding and computer vision methods

build on image representations such as textons [17], his-

togram of oriented gradients (SIFT [20] and HOG [4]), bag

of visual words [3, 27], sparse [37] and local coding [34],

super vector coding [40], VLAD [10], Fisher Vectors [23],

and, lately, deep neural networks, particularly of the convo-

lutional variety [15, 25, 38]. However, despite the progress

in the development of visual representations, their design is

still driven empirically and a good understanding of their

properties is lacking. While this is true of shallower hand-

crafted features, it is even more so for the latest generation

of deep representations, where millions of parameters are

learned from data.

In this paper we conduct a direct analysis of representa-

tions by characterising the image information that they re-

tain (Fig. 1). We do so by modeling a representation as a

function Φ(x) of the image x and then computing an approximated

inverse Φ⁻¹, reconstructing x from the code

Φ(x). A common hypothesis is that representations col-

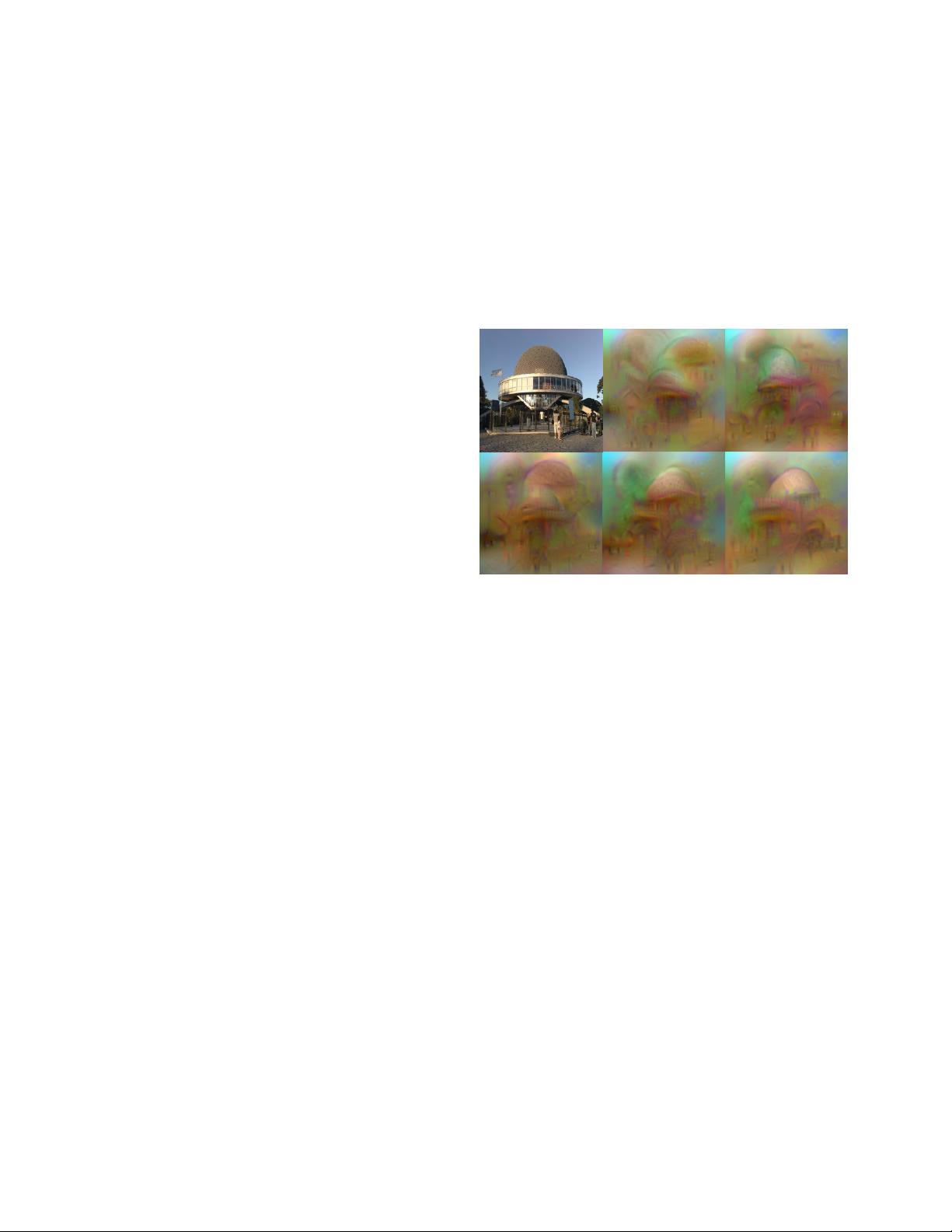

Figure 1. What is encoded by a CNN? The figure shows five

possible reconstructions of the reference image obtained from the

1,000-dimensional code extracted at the penultimate layer of a ref-

erence CNN[15] (before the softmax is applied) trained on the Im-

ageNet data. From the viewpoint of the model, all these images are

practically equivalent. This image is best viewed in color/screen.

lapse irrelevant differences in images (e.g. illumination or

viewpoint), so that Φ should not be uniquely invertible.

Hence, we pose this as a reconstruction problem and find

a number of possible reconstructions rather than a single

one. By doing so, we obtain insights into the invariances

captured by the representation.
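To illustrate why a non-invertible Φ leads to several reconstructions rather than one, here is a minimal sketch in Python with NumPy. It is not the method of this paper. The representation `phi` (2-by-2 average pooling), the helper `phi_adjoint`, the solver `invert`, and all step sizes are assumptions chosen only for illustration, and the natural image prior is deliberately left out so that the ambiguity stays visible. Each run starts from random noise and minimises the squared distance between Φ(x) and the target code by gradient descent.

```python
import numpy as np


def phi(x, k=2):
    """Toy representation (an assumption): k-by-k average pooling.

    It is many-to-one: variation inside each block is discarded.
    """
    h, w = x.shape
    return x.reshape(h // k, k, w // k, k).mean(axis=(1, 3))


def phi_adjoint(r, k=2):
    """Adjoint of the linear map phi: spread each value over its block."""
    return np.repeat(np.repeat(r, k, axis=0), k, axis=1) / (k * k)


def invert(code, shape, seed, steps=100, lr=1.0, k=2):
    """Gradient descent on ||phi(x) - code||^2 from a random-noise start."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(shape)  # random noise as the initial solution
    for _ in range(steps):
        residual = phi(x, k) - code
        grad = 2.0 * phi_adjoint(residual, k)  # gradient of the squared error
        x -= lr * grad
    return x


# Hypothetical 8x8 "reference image" and its code.
reference = np.arange(64, dtype=float).reshape(8, 8)
code = phi(reference)

# Three reconstructions from three different noise initialisations.
reconstructions = [invert(code, reference.shape, seed=s) for s in range(3)]
```

In this toy setting every reconstruction reproduces the target code, yet the reconstructions differ from one another in exactly the within-block detail that the pooling discards. Comparing them therefore exposes what the representation is invariant to, which is the idea behind sampling several reconstructions.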

Our contributions are as follows. First, we propose a

general method to invert representations, including SIFT,

HOG, and CNNs (Sect. 2). Crucially, this method uses only

information from the image representation and a generic

natural image prior, starting from random noise as initial

solution, and hence captures only the information contained

in the representation itself. We discuss and evaluate differ-

ent regularization penalties as natural image priors. Sec-

ond, we show that, despite its simplicity and generality, this

method recovers significantly better reconstructions from

HOG compared to recent alternatives [33]. As we do so,

we emphasise a number of subtle differences between these

representations and their effect on invertibility. Third, we

apply the inversion technique to the analysis of recent deep

CNNs, exploring their invariance by sampling possible ap-

proximate reconstructions. We relate this to the depth of the

representation, showing that the CNN gradually builds an
