Group Normalization

Yuxin Wu Kaiming He

Facebook AI Research (FAIR)

{yuxinwu,kaiminghe}@fb.com

Abstract

Batch Normalization (BN) is a milestone technique in the development of deep learning, enabling various networks to train. However, normalizing along the batch dimension introduces problems: BN’s error increases rapidly as the batch size becomes smaller, because the batch statistics are estimated inaccurately. This limits BN’s usage for training larger models and transferring features to computer vision tasks including detection, segmentation, and video, which require small batches because of memory constraints.

In this paper, we present Group Normalization (GN) as a simple alternative to BN. GN divides the channels into groups and computes within each group the mean and variance for normalization. GN’s computation is independent of batch sizes, and its accuracy is stable in a wide range of batch sizes. On ResNet-50 trained on ImageNet, GN has 10.6% lower error than its BN counterpart when using a batch size of 2; when using typical batch sizes, GN performs comparably to BN and outperforms other normalization variants. Moreover, GN can be naturally transferred from pre-training to fine-tuning. GN can outperform its BN-based counterparts for object detection and segmentation in COCO,¹ and for video classification in Kinetics, showing that GN can effectively replace the powerful BN in a variety of tasks. GN can be easily implemented with a few lines of code in modern libraries.
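The grouping described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' released code: it assumes an (N, C, H, W) array layout, a hypothetical function name `group_norm`, 32 groups, and `eps = 1e-5`. The mean and variance are taken per sample and per group, so no statistic crosses the batch axis.

```python
import numpy as np


def group_norm(x, gamma, beta, num_groups=32, eps=1e-5):
    """Group Normalization for an (N, C, H, W) array (illustrative sketch)."""
    n, c, h, w = x.shape
    assert c % num_groups == 0, "C must be divisible by num_groups"
    # Split the C channels into G groups of C // G consecutive channels.
    xg = x.reshape(n, num_groups, c // num_groups, h, w)
    # Mean and variance per sample and per group, over (C // G, H, W);
    # the batch axis N is never pooled.
    mean = xg.mean(axis=(2, 3, 4), keepdims=True)
    var = xg.var(axis=(2, 3, 4), keepdims=True)
    x_hat = ((xg - mean) / np.sqrt(var + eps)).reshape(n, c, h, w)
    # Learnable per-channel scale (gamma) and shift (beta), as in BN.
    return x_hat * gamma.reshape(1, c, 1, 1) + beta.reshape(1, c, 1, 1)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 64, 8, 8))
gamma, beta = np.ones(64), np.zeros(64)

y_full = group_norm(x, gamma, beta)        # image 0 inside a batch of 4
y_single = group_norm(x[:1], gamma, beta)  # image 0 alone
print(np.allclose(y_full[:1], y_single))  # True
```

Because each sample is normalized with its own statistics, normalizing a one-image batch gives the same result as normalizing that image inside a larger batch, which is the batch independence described above.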

1. Introduction

Batch Normalization (Batch Norm or BN) [26] has been established as a very effective component in deep learning, largely helping push the frontier in computer vision [59, 20] and beyond [54]. BN normalizes the features by the mean and variance computed within a (mini-)batch. Many practices have shown that this eases optimization and enables very deep networks to converge. The stochastic uncertainty of the batch statistics also acts as a regularizer that can benefit generalization. BN has been a foundation of many state-of-the-art computer vision algorithms.
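For contrast, the batch-statistics computation that BN performs during training can be sketched as follows. Assumptions: NCHW layout, `eps = 1e-5`, a hypothetical function name `batch_norm_train`, and random test data; the moving averages that BN uses at inference time are omitted.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode BN for an (N, C, H, W) array (illustrative sketch)."""
    c = x.shape[1]
    # One mean and variance per channel, shared by all N samples:
    # statistics are pooled over the batch and spatial axes (N, H, W).
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Learnable per-channel scale (gamma) and shift (beta).
    return x_hat * gamma.reshape(1, c, 1, 1) + beta.reshape(1, c, 1, 1)


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(8, 16, 4, 4))
gamma, beta = np.ones(16), np.zeros(16)
y = batch_norm_train(x, gamma, beta)

# Each channel of y has zero mean (up to rounding) across the batch.
print(np.abs(y.mean(axis=(0, 2, 3))).max() < 1e-6)  # True

# The output for sample 0 depends on which other samples share its batch.
y0_alone = batch_norm_train(x[:1], gamma, beta)
print(np.allclose(y[:1], y0_alone))  # False
```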

¹ https://github.com/facebookresearch/Detectron/blob/master/projects/GN.

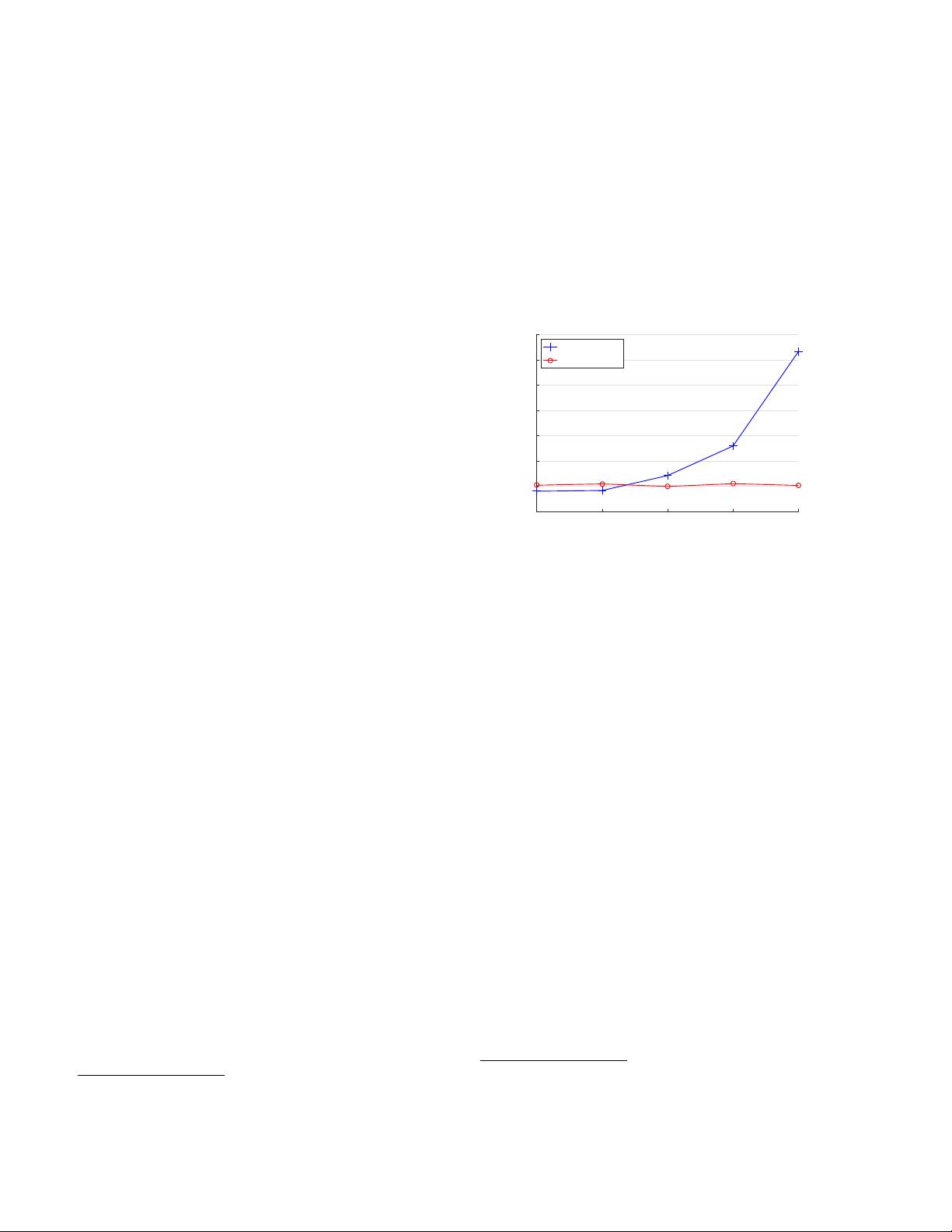

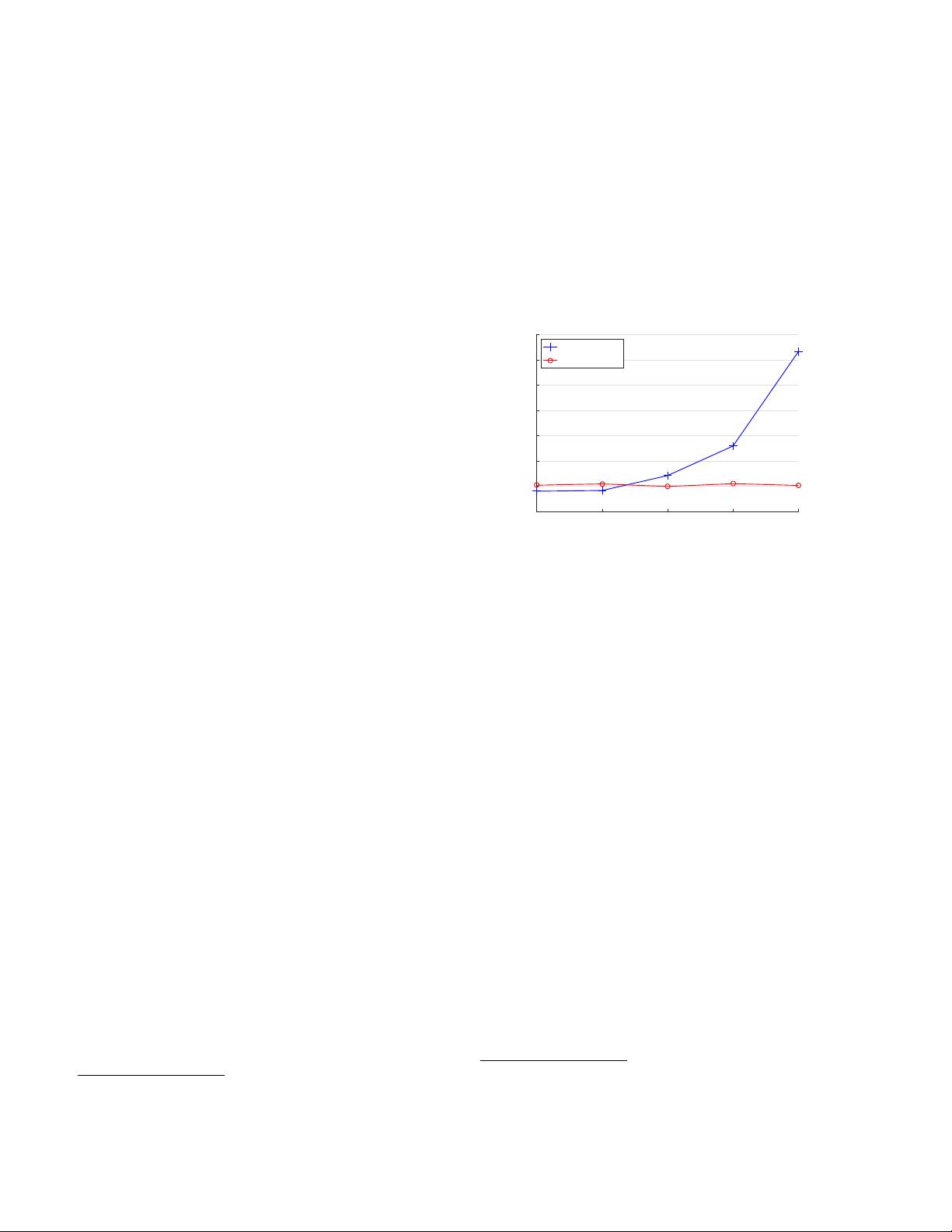

2481632

batch size (images per worker)

22

24

26

28

30

32

34

36

error (%)

Batch Norm

Group Norm

Figure 1. ImageNet classification error vs. batch sizes. This is a ResNet-50 model trained on the ImageNet training set using 8 workers (GPUs) and evaluated on the validation set.

Despite its great success, BN exhibits drawbacks that are also caused by its distinct behavior of normalizing along the batch dimension. In particular, BN requires a sufficiently large batch size to work well (e.g., 32 per worker² [26, 59, 20]). A small batch leads to inaccurate estimation of the batch statistics, and reducing BN’s batch size increases the model error dramatically (Figure 1). As a result, many recent models [59, 20, 57, 24, 63] are trained with non-trivial batch sizes that are memory-consuming. The heavy reliance on BN’s effectiveness to train models in turn prohibits people from exploring higher-capacity models that would be limited by memory.
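A toy simulation shows why small batches hurt: the per-channel batch mean and standard deviation are noisy estimates whose error grows as the batch shrinks. It assumes i.i.d. Gaussian activations with 16 spatial positions per sample (real features are correlated), and `batch_stat_error` is a hypothetical helper; it illustrates the trend only and does not reproduce Figure 1.

```python
import numpy as np


def batch_stat_error(batch_size, trials=2000, spatial=16, mu=1.0, sigma=2.0, seed=0):
    """Mean absolute error of one channel's batch mean and std (toy model).

    Assumes activations are i.i.d. N(mu, sigma^2) over the batch and
    `spatial` positions per sample; real features are correlated.
    """
    rng = np.random.default_rng(seed)
    # One row per simulated mini-batch: (trials, batch_size, spatial).
    x = rng.normal(mu, sigma, size=(trials, batch_size, spatial))
    # BN-style statistics: pooled over the batch and spatial axes.
    est_mean = x.mean(axis=(1, 2))
    est_std = x.std(axis=(1, 2))
    return np.abs(est_mean - mu).mean(), np.abs(est_std - sigma).mean()


for b in (32, 16, 8, 4, 2):
    mean_err, std_err = batch_stat_error(b)
    print(f"batch {b:2d}: mean error {mean_err:.3f}, std error {std_err:.3f}")
```

Under this model the error shrinks roughly as one over the square root of the number of pooled values, so halving the batch increases it by about a factor of √2.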

The restriction on batch sizes is more demanding in computer vision tasks including detection [12, 47, 18], segmentation [38, 18], video recognition [60, 6], and other high-level systems built on them. For example, the Fast/er and Mask R-CNN frameworks [12, 47, 18] use a batch size of 1 or 2 images because of the higher input resolution, and BN is “frozen” by transforming it into a linear layer [20]; in video classification with 3D convolutions [60, 6], the presence of spatial-temporal features introduces a trade-off between the temporal length and batch size. The usage of BN often requires these systems to compromise between the model design and batch sizes.
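Freezing BN replaces the batch statistics with fixed, pre-computed ones, at which point the layer reduces to a per-channel affine map y = a·x + b with a = γ/√(σ² + ε) and b = β − μ·a. The sketch below checks this equivalence numerically; the helper names `freeze_bn` and `per_channel`, the NCHW layout, `eps = 1e-5`, and the random parameters are illustrative assumptions, not values from any detector.

```python
import numpy as np


def freeze_bn(gamma, beta, running_mean, running_var, eps=1e-5):
    """Fold BN with fixed statistics into a per-channel affine map (sketch)."""
    scale = gamma / np.sqrt(running_var + eps)
    shift = beta - running_mean * scale
    return scale, shift


def per_channel(v):
    # Reshape a length-C vector so it broadcasts over (N, C, H, W).
    return v.reshape(1, -1, 1, 1)


rng = np.random.default_rng(0)
c = 8
gamma, beta = rng.normal(size=c), rng.normal(size=c)
running_mean = rng.normal(size=c)
running_var = rng.uniform(0.5, 2.0, size=c)
x = rng.normal(size=(2, c, 5, 5))  # e.g., a batch of 2 images

# Inference-mode BN: normalize with the stored statistics, not the batch's.
bn_out = (per_channel(gamma) * (x - per_channel(running_mean))
          / np.sqrt(per_channel(running_var) + 1e-5) + per_channel(beta))

scale, shift = freeze_bn(gamma, beta, running_mean, running_var)
linear_out = per_channel(scale) * x + per_channel(shift)
print(np.allclose(bn_out, linear_out))  # True
```

Since the scale and shift no longer depend on the batch, the frozen layer behaves the same for 1 or 2 images, but its statistics are no longer adapted to the new task during fine-tuning.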

² In the context of this paper, we use “batch size” to refer to the number of samples per worker (e.g., GPU). BN’s statistics are computed for each worker, but not broadcast across workers, as is standard in many libraries.
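The per-worker convention in this footnote can be illustrated by splitting one array into shards. This sketch only simulates 8 workers with 2 images each in a single process (no multi-GPU communication); the shapes are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
num_workers, per_worker, channels = 8, 2, 4  # e.g., 8 GPUs x 2 images each
x = rng.normal(size=(num_workers * per_worker, channels, 7, 7))  # (N, C, H, W)

# Per-worker statistics (the convention in the footnote): each worker pools
# only over its own 2 images and the spatial axes.
shards = x.reshape(num_workers, per_worker, channels, 7, 7)
worker_means = shards.mean(axis=(1, 3, 4))  # shape (8, 4): one row per worker

# Statistics over all 16 images, which would need cross-worker communication.
global_mean = x.mean(axis=(0, 2, 3))  # shape (4,)

print(worker_means.shape, global_mean.shape)  # (8, 4) (4,)
print(np.abs(worker_means - global_mean).max())  # spread of per-worker estimates
```

With equal-sized shards, averaging the per-worker means recovers the global mean, yet each worker still normalizes with its own two-image estimate.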


arXiv:1803.08494v3 [cs.CV] 11 Jun 2018