3-stage models, whose first-stage patch size is 8. For later stages, the patch sizes are set to 2, which downsizes the feature map resolution by 2. Following the practice in ResNet, we double the hidden dimension when downsizing the feature map resolution by 2. We list a few representative model configurations in Table 1. Different attention types ($a$) have different choices of the number of global tokens $n_g$, but they share the same model configurations. Thus we do not specify $a$ and $n_g$ in Table 1. We refer readers to the Supplementary for the complete list of model configurations used in this paper.

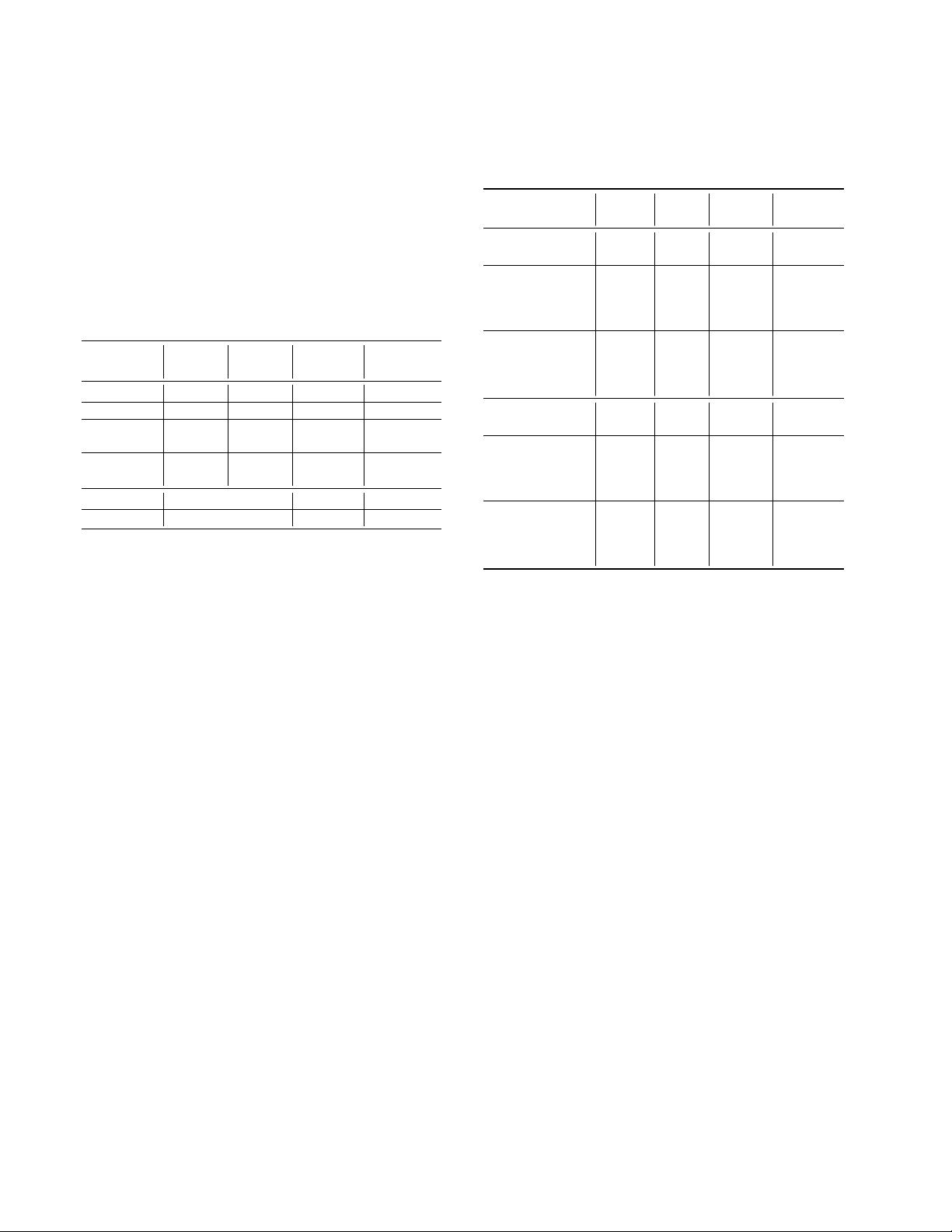

| Size | Stage1 (n,p,h,d) | Stage2 (n,p,h,d) | Stage3 (n,p,h,d) | Stage4 (n,p,h,d) |
|---|---|---|---|---|
| Tiny | 1,4,1,48 | 1,2,3,96 | 9,2,3,192 | 1,2,6,384 |
| Small | 1,4,3,96 | 2,2,3,192 | 8,2,6,384 | 1,2,12,768 |
| Medium-D | 1,4,3,96 | 4,2,3,192 | 16,2,6,384 | 1,2,12,768 |
| Medium-W | 1,4,3,192 | 2,2,6,384 | 8,2,8,512 | 1,2,12,768 |
| Base-D | 1,4,3,96 | 8,2,3,192 | 24,2,6,384 | 1,2,12,768 |
| Base-W | 1,4,3,192 | 2,2,6,384 | 8,2,12,768 | 1,2,16,1024 |
| Tiny-3stage | 2,8,3,96 | 9,2,3,192 | 1,2,6,384 | |
| Small-3stage | 2,8,3,192 | 9,2,6,384 | 1,2,12,768 | |

Table 1. Model architecture for multi-scale stacked ViTs. Architecture parameters for each E-ViT stage E-ViT($a \times n/p$; $h$, $d$): number of attention blocks $n$, input patch size $p$, number of heads $h$, and hidden dimension $d$. See the meaning of these parameters in Figure 1 (Bottom).
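To make the (n, p, h, d) notation concrete, the following sketch walks a 224 × 224 input through the Tiny row of Table 1 and reports the feature-map side length, the number of local tokens, and the hidden dimension at each stage. The names `StageConfig` and `feature_map_sizes` are hypothetical helpers introduced only for illustration, and the sketch assumes the side length is divisible by every patch size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageConfig:
    """One E-ViT stage as (n, p, h, d) in Table 1. Hypothetical helper type."""
    n: int  # number of attention blocks
    p: int  # input patch size (downsampling factor of this stage)
    h: int  # number of attention heads
    d: int  # hidden dimension


# "Tiny" row of Table 1.
TINY = [
    StageConfig(1, 4, 1, 48),
    StageConfig(1, 2, 3, 96),
    StageConfig(9, 2, 3, 192),
    StageConfig(1, 2, 6, 384),
]


def feature_map_sizes(image_size, stages):
    """Return (side, num_local_tokens, hidden_dim) for each stage."""
    sizes = []
    side = image_size
    for stage in stages:
        if side % stage.p != 0:
            raise ValueError("side length must be divisible by the patch size")
        side //= stage.p  # each stage patchifies its input with patch size p
        sizes.append((side, side * side, stage.d))
    return sizes


if __name__ == "__main__":
    for i, (side, tokens, dim) in enumerate(feature_map_sizes(224, TINY), start=1):
        print(f"stage {i}: {side}x{side} map, {tokens} local tokens, d={dim}")
```

Under these assumptions the Tiny model produces 56 × 56, 28 × 28, 14 × 14, and 7 × 7 feature maps, with the hidden dimension doubling at each downsizing step.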

How to connect global tokens between consecutive stages? The choice varies at different stages and among different tasks. For the tasks in this paper, e.g., classification, object detection, and instance segmentation, we simply discard the global tokens and reshape only the local tokens as the input for the next stage. With this choice, global tokens serve only as an efficient way to communicate globally between distant local tokens, and can be viewed as a form of global memory. These global tokens are useful in vision-language tasks, in which the text tokens serve as the global tokens and will be shared across stages.
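The hand-off between stages described above can be sketched as follows. This is a framework-free illustration, not the authors' implementation: the function name `local_tokens_to_map` is hypothetical, and the sketch assumes the global tokens are stored first in the sequence, followed by the local tokens in row-major order.

```python
def local_tokens_to_map(tokens, num_global, height, width):
    """Drop the leading global tokens and reshape local tokens to a 2-D grid.

    Assumes (for illustration) that `tokens` is a list of feature vectors
    with the `num_global` global tokens first, followed by height * width
    local tokens in row-major order.
    """
    local = tokens[num_global:]  # global tokens are discarded here
    if len(local) != height * width:
        raise ValueError("expected height * width local tokens")
    # Row r holds local tokens r * width ... (r + 1) * width - 1.
    return [local[r * width:(r + 1) * width] for r in range(height)]


if __name__ == "__main__":
    # One global token followed by a 2 x 3 grid of 1-D local tokens.
    tokens = [[-1.0]] + [[float(i)] for i in range(6)]
    grid = local_tokens_to_map(tokens, num_global=1, height=2, width=3)
    print(grid)
```

The resulting grid is what the next stage would patchify with its own patch size; the discarded global tokens play no further role in this setting.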

Should we use the average-pooled LayerNormed features or the LayerNormed CLS token's feature for image classification? The choice makes no difference for flat models. But the average-pooled feature performs better than the CLS feature for multi-scale models, especially for the multi-scale models with only one attention block in the last stage, as shown in Table 1. We refer readers to the Supplementary for an ablation study.
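The two candidate classification features can be contrasted with a minimal sketch. It is written in plain Python for clarity and makes several assumptions that the text does not specify: the CLS token is the first token, the average is taken over the LayerNormed local tokens only, and LayerNorm is applied without learned scale or shift. All function names are hypothetical.

```python
import math


def layer_norm(x, eps=1e-6):
    """LayerNorm over one feature vector, without learned scale or shift."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def cls_readout(tokens):
    """Classification feature from the LayerNormed CLS token (assumed first)."""
    return layer_norm(tokens[0])


def avg_pool_readout(tokens, num_global=1):
    """Classification feature from the mean of the LayerNormed local tokens."""
    local = [layer_norm(t) for t in tokens[num_global:]]
    dim = len(local[0])
    return [sum(t[j] for t in local) / len(local) for j in range(dim)]


if __name__ == "__main__":
    # One CLS token followed by two local tokens, each of dimension 2.
    tokens = [[1.0, 3.0], [0.0, 2.0], [2.0, 0.0]]
    print(cls_readout(tokens))
    print(avg_pool_readout(tokens))
```

Either feature would then be passed to the linear classification head; the sketch only shows where the two readouts differ.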

As reported in Table 2, the multi-scale models perform better than the flat models even in low-resolution classification problems. This shows the importance of multi-scale structure on classification tasks. However, the full self-attention mechanism suffers from quartic computation/memory complexity w.r.t. the resolution of feature maps, as shown in Table 2. Thus, it is impossible to train 4-stage multi-scale ViTs with full attention using the same setting (batch size and hardware) used for DeiT training.
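The quartic growth follows from simple counting: a feature map of side length s has s² tokens, and full self-attention compares every token with every other token, giving s⁴ attention entries per head. The sketch below, which uses the hypothetical helper `full_attention_entries` and ignores global tokens, compares the flat 14 × 14 map of a patch-16 model with the 56 × 56 map seen by the first stage of a 4-stage model.

```python
def full_attention_entries(image_size, downsample):
    """Number of entries in one full self-attention matrix (per head).

    A feature map of side s = image_size // downsample has s * s tokens,
    so the token-to-token attention matrix has (s * s) ** 2 = s ** 4 entries.
    Global tokens are ignored in this count.
    """
    side = image_size // downsample
    tokens = side * side
    return tokens * tokens


if __name__ == "__main__":
    flat = full_attention_entries(224, 16)  # 14 x 14 map, as in a patch-16 model
    high = full_attention_entries(224, 4)   # 56 x 56 map, first stage with p = 4
    print(flat, high, high // flat)
```

A 4× increase in side length therefore multiplies the attention matrix size by 4⁴ = 256 per block, which is consistent with the sharp memory increase of the 4-stage full-attention models in Table 2.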

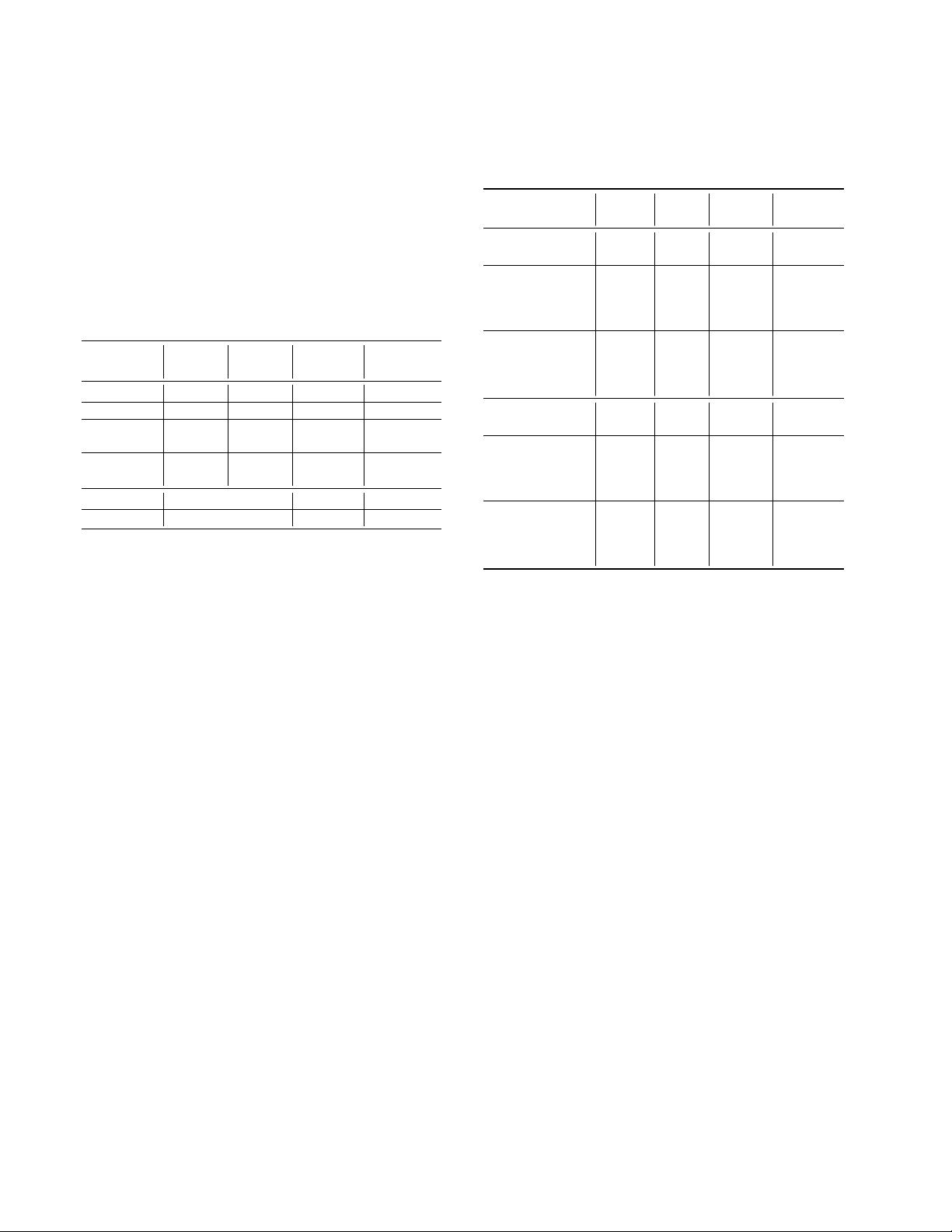

| Model | #Params (M) | FLOPs (G) | Memory (M) | Top-1 (%) |
|---|---|---|---|---|
| Ti-DeiT / 16 [40] | 5.7 | 1.3 | 33.4 | 72.2 |
| Ti-E-ViT(full/16) | 5.7 | 1.3 | 33.4 | 73.2/73.1 |
| Ti-Full-1,10,1 | 7.12 | 1.35 | 45.8 | 75.9 |
| Ti-Full-2,9,1 | 6.78 | 1.45 | 60.6 | 75.8 |
| Ti-Full-1,1,9,1 | 6.71 | 2.29 | 155.0 | 76.1 |
| Ti-Full-1,2,8,1 | 6.37 | 2.39 | 170.5 | 75.6 |
| Ti-ViL-1,10,1 | 7.12 | 1.27 | 38.3 | 75.6±0.23 |
| Ti-ViL-2,9,1 | 6.78 | 1.29 | 45.5 | 75.9±0.08 |
| Ti-ViL-1,1,9,1 | 6.71 | 1.33 | 52.7 | 76.2±0.12 |
| Ti-ViL-1,2,8,1 | 6.37 | 1.35 | 60.0 | 76.0±0.10 |
| S-DeiT / 16 [40] | 22.1 | 4.6 | 67.1 | 79.9 |
| S-E-ViT(full/16) | 22.1 | 4.6 | 67.1 | 80.4/80.7 |
| S-Full-1,10,1 | 27.58 | 4.84 | 78.5 | 81.7 |
| S-Full-2,9,1 | 26.25 | 5.05 | 93.8 | 81.7 |
| S-Full-1,1,9,1 | 25.96 | 6.74 | 472.9 | – |
| S-Full-1,2,8,1 | 24.63 | 6.95 | 488.3 | – |
| S-ViL-1,10,1 | 27.58 | 4.67 | 73.0 | 81.6 |
| S-ViL-2,9,1 | 26.25 | 4.71 | 81.4 | 81.8 |
| S-ViL-1,1,9,1 | 25.96 | 4.82 | 108.5 | 81.8 |
| S-ViL-1,2,8,1 | 24.63 | 4.86 | 116.8 | 82.0 |

Table 2. Flat vs. multi-scale models with full self-attention: number of parameters, FLOPs, training time, memory per image (with PyTorch Automatic Mixed Precision enabled), and ImageNet accuracy with image size 224. “Ti-Full-2,9,1” stands for a tiny-scale 3-stage multi-scale ViT with $a$ = full attention and with 2, 9, and 1 attention blocks in the three stages, respectively. Similarly, small models start with “S-”. Since all our multi-scale models use the average-pooled feature from the last stage for classification, we report the Top-1 accuracy of E-ViT(full/16) both with the CLS feature (first) and with the average-pooled feature (second). The multi-scale models consistently outperform the flat models, but the memory usage of full attention quickly blows up when only one high-resolution block is introduced. The Vision Longformer (“ViL-”) saves FLOPs and memory, without a performance drop.

3.2. Vision Longformer: An Efficient Attention Mechanism

We propose to use the “local attention + global memory” efficient mechanism, as illustrated in Figure 2 (Left), to reduce the computational and memory cost when using E-ViT to generate high-resolution feature maps. The 2-D Vision Longformer is an extension of the 1-D Longformer [2] originally developed for NLP tasks. We add $n_g$ global tokens (including the CLS token) that are allowed to attend to all other tokens, serving as global memory. We also allow local tokens to attend only to global tokens and their local 2-D neighbors within a window size, which limits the increase of the attention cost to be linear w.r.t. the number of input tokens. In summary, there are four components in this “local