are upsampled via up-sampling layers in the decoder to

output the final segmentation map. With skip connections incorporated, TransUNet set new records (at the time of publication) on the Synapse multi-organ segmentation dataset [156] and the Automated Cardiac Diagnosis Challenge (ACDC) [155].

In other work, Zhang et al. propose TransFuse [157] to effectively fuse features from the Transformer and CNN layers via a BiFusion module. The BiFusion module leverages self-attention and a multi-modal fusion mechanism to selectively fuse the features. Extensive evaluation of TransFuse on multiple modalities (2D and 3D), including polyp segmentation, skin lesion segmentation, hip segmentation, and prostate segmentation, demonstrates its efficacy. Both TransUNet [96] and TransFuse [157] require pre-training on the ImageNet dataset [158] to effectively learn the positional

encoding of the images. To learn this positional bias without

any pre-training, Valanarasu et al. [128] propose a modified

gated axial attention layer [159] that works well on small

medical image segmentation datasets. Furthermore, to boost

segmentation performance, they propose a Local-Global

training scheme to focus on the fine details of input images.

Extensive experimentation on brain anatomy segmentation [160], gland segmentation [161], and MoNuSeg (microscopy) [162] demonstrates the effectiveness of their proposed gated axial attention module.
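To make the axial factorization concrete, the following is a minimal pure-Python sketch of plain (ungated) axial attention, which attends along the height axis and then along the width axis of a feature map. It is an illustration under simplifying assumptions rather than the layer of [128]: queries, keys, and values are the raw features, and the learned projections, positional terms, and gates are omitted. The function names `softmax`, `attend_1d`, and `axial_attention` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_1d(tokens):
    """Scaled dot-product self-attention over one row or column.

    Simplification (assumption): queries, keys and values are the tokens
    themselves, with no learned projections, positional terms, or gates.
    """
    dim = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, tokens)) for c in range(dim)])
    return out


def axial_attention(fmap):
    """Attend along the height axis, then along the width axis.

    fmap is an H x W grid (nested lists) of feature vectors.
    """
    height, width = len(fmap), len(fmap[0])
    # Height axis: every column attends within itself.
    cols = [attend_1d([fmap[i][j] for i in range(height)]) for j in range(width)]
    after_height = [[cols[j][i] for j in range(width)] for i in range(height)]
    # Width axis: every row attends within itself.
    return [attend_1d(row) for row in after_height]


# Toy 2 x 3 feature map with 2 channels per position.
fmap = [[[float(i + j), 1.0] for j in range(3)] for i in range(2)]
out = axial_attention(fmap)
print(len(out), len(out[0]), len(out[0][0]))  # 2 3 2
```

In each pass a position interacts with only the H positions in its column or the W positions in its row, rather than with all H × W positions, which is what makes the axial form cheaper than full 2D self-attention.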

In another work, Tang et al. [163] introduce Swin UNETR, a novel self-supervised learning framework with proxy tasks to pre-train the Transformer encoder on 5,050 CT images. They validate the effectiveness of pre-training by fine-tuning the Transformer encoder with a CNN-based decoder on the downstream MSD and BTCV segmentation

datasets. Similarly, Sobirov et al. [164] show that Transformer-based models can achieve results comparable to state-of-the-art CNN-based approaches on the task of head and neck tumor segmentation. A few works have also investigated the

effectiveness of Transformer layers by integrating them into

the encoder of UNet-based architectures in a plug-and-play

manner. For instance, Cheng et al. [165] propose TransClaw

UNet by integrating Transformer layers in the encoding part

of the Claw UNet [166] to exploit multi-scale information.

TransClaw-UNet achieves an absolute gain of 0.6 in Dice score over Claw-UNet on the Synapse multi-organ segmentation dataset and shows excellent generalization.

Similarly, inspired by LeViT [167], Xu et al. [168] propose LeViT-UNet, which aims to optimize the trade-off between accuracy and efficiency. LeViT-UNet is a multi-stage architecture that demonstrates good performance and generalization ability on the Synapse and ACDC benchmarks.
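Several results in this section are reported as Dice scores. As a reminder of what the metric measures, the sketch below computes the Dice coefficient 2|A ∩ B| / (|A| + |B|) for two flat binary masks. The function name `dice_score` and the convention of returning 1.0 when both masks are empty are assumptions made for illustration; benchmark toolkits may average over classes or cases differently.

```python
def dice_score(pred, target):
    """Dice coefficient between two flat binary masks (sequences of 0/1)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of elements")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


pred = [1, 1, 0, 0, 1]    # predicted foreground mask
target = [1, 0, 0, 0, 1]  # ground-truth foreground mask
print(dice_score(pred, target))  # 0.8
```

Here two of the three predicted foreground pixels overlap the two ground-truth pixels, giving 2 · 2 / (3 + 2) = 0.8.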

Transformer between Encoder and Decoder.

In this category, Transformer layers are placed between the encoder and decoder of a U-shaped architecture. These architectures are better suited to avoiding the loss of detail during down-sampling in the encoder layers. The first work in this

category is TransAttUNet [169], which leverages guided attention and multi-scale skip connections to enhance the flexibility of the traditional UNet. Specifically, a robust self-aware attention module is embedded between the encoder and decoder of UNet to concurrently exploit the expressive abilities of global spatial attention and Transformer

self-attention. Extensive experiments on five benchmark

medical imaging segmentation datasets demonstrate the

Convolutional

embedding

Transformer

blocks

Convolutional

down-sampling

Transformer

blocks

Incorporating long-term

dependencies into high-

level features.

1. Precise spatial encoding.

2. High-resolution low-level features.

Modeling object concepts from high-

level features at multiple scales.

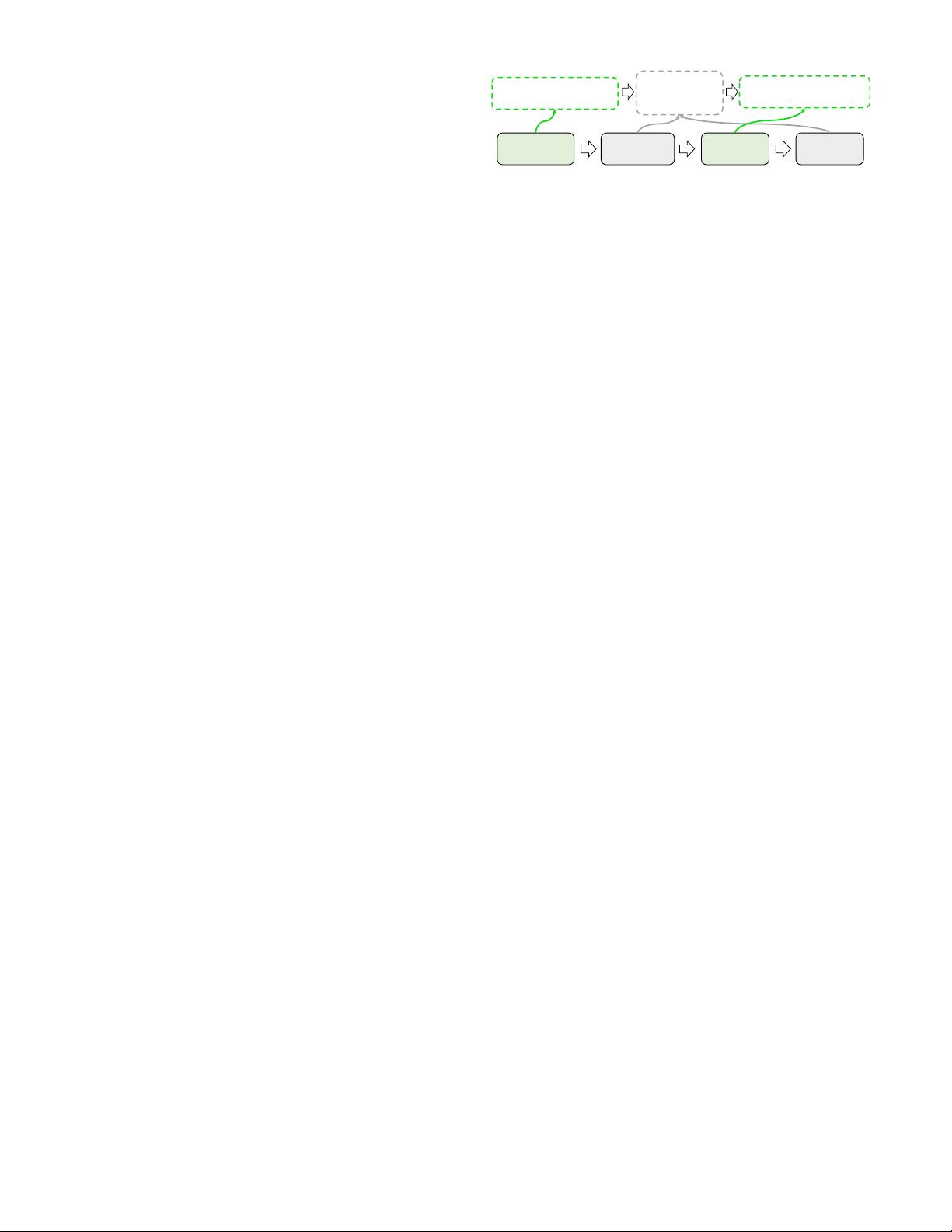

Figure 9: Overview of the interleaved encoder not-another

transFormer (nnFormer) [144] for volumetric medical image

segmentation. Note that convolution and transformer layers

are interleaved to give full play to their strengths. Image

taken from [144].

effectiveness of TransAttUNet architecture. Similarly, Yan

et al. [170] propose Axial Fusion Transformer UNet (AFTer-

UNet) that contains a computationally efficient axial fusion

layer between encoder and decoder to effectively fuse inter

and intra-slice information for 3D medical image segmen-

tation. Experimentation on BCV [171], Thorax-85 [172], and

SegTHOR [173] datasets demonstrate the effectiveness of

their proposed fusion layer.

Transformer in Encoder and Decoder.

A few works integrate Transformer layers in both the encoder and decoder of a U-shaped architecture to better exploit the global context for medical image segmentation. The first work in this

category is UTNet, which efficiently reduces the complexity of the self-attention mechanism from quadratic to linear [174]. Furthermore, to model the image content effectively, UTNet exploits two-dimensional relative position encoding [20]. Experiments show the strong generalization ability of UTNet on the multi-label, multi-vendor cardiac MRI challenge dataset cohort [175]. Similarly, to optimally combine convolution and

Transformer layers for medical image segmentation, Zhou et al. [144] propose nnFormer, an interleaved encoder-decoder architecture in which convolution layers encode precise spatial information and Transformer layers encode global context, as shown in Fig. 9. As in Swin Transformers [126], self-attention in nnFormer is computed within a local window to reduce computational complexity. Moreover, deep supervision is employed in the decoder layers to enhance performance. Experiments on the ACDC and Synapse datasets show that nnFormer surpasses Swin-UNet [125], a Transformer-based medical segmentation approach, by over 7% in Dice score on the Synapse dataset. In other work, Lin et

al. propose Dual Swin Transformer UNet (DS-TransUNet) [176] to incorporate the advantages of the Swin Transformer into a U-shaped architecture for medical image segmentation. They split the input image into non-overlapping patches at two scales and feed them into the two Swin Transformer-based branches of the encoder. A novel Transformer Interactive Fusion module is proposed to build long-range dependencies between features of different scales in the encoder. DS-TransUNet outperforms CNN-based methods on four standard datasets covering polyp segmentation, ISIC 2018, GlaS, and the 2018 Data Science Bowl.
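The saving from window-restricted self-attention, as used by the Swin-style models above, can be illustrated by counting query-key pairs. The sketch below compares global attention over a token grid with attention limited to non-overlapping windows. The function names and the 56 × 56 grid with 7 × 7 windows are illustrative assumptions, not the settings of nnFormer or DS-TransUNet.

```python
from math import prod


def global_attention_pairs(grid):
    """Query-key pairs when every token attends to every token."""
    n_tokens = prod(grid)
    return n_tokens * n_tokens


def windowed_attention_pairs(grid, window):
    """Query-key pairs when attention stays inside non-overlapping windows."""
    if len(grid) != len(window):
        raise ValueError("grid and window must have the same number of dimensions")
    if any(g % w for g, w in zip(grid, window)):
        raise ValueError("each grid dimension must be divisible by the window size")
    n_windows = prod(g // w for g, w in zip(grid, window))
    tokens_per_window = prod(window)
    return n_windows * tokens_per_window ** 2


# Illustrative sizes only (assumption): a 56 x 56 token grid, 7 x 7 windows.
grid, window = (56, 56), (7, 7)
print(global_attention_pairs(grid))            # 9834496
print(windowed_attention_pairs(grid, window))  # 153664
```

For a fixed window size, the windowed count grows linearly with the number of tokens, whereas the global count grows quadratically. The same functions accept three-dimensional grids, for example `(8, 8, 8)` with `(4, 4, 4)` windows, which matches the volumetric setting.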

Transformer in Decoder.

Li et al. [177] investigate the use of a Transformer as an upsampling block in the decoder of UNet for medical image segmentation. Specifically, they

adopt a window-based self-attention mechanism to better

complement the upsampled feature maps while maintaining