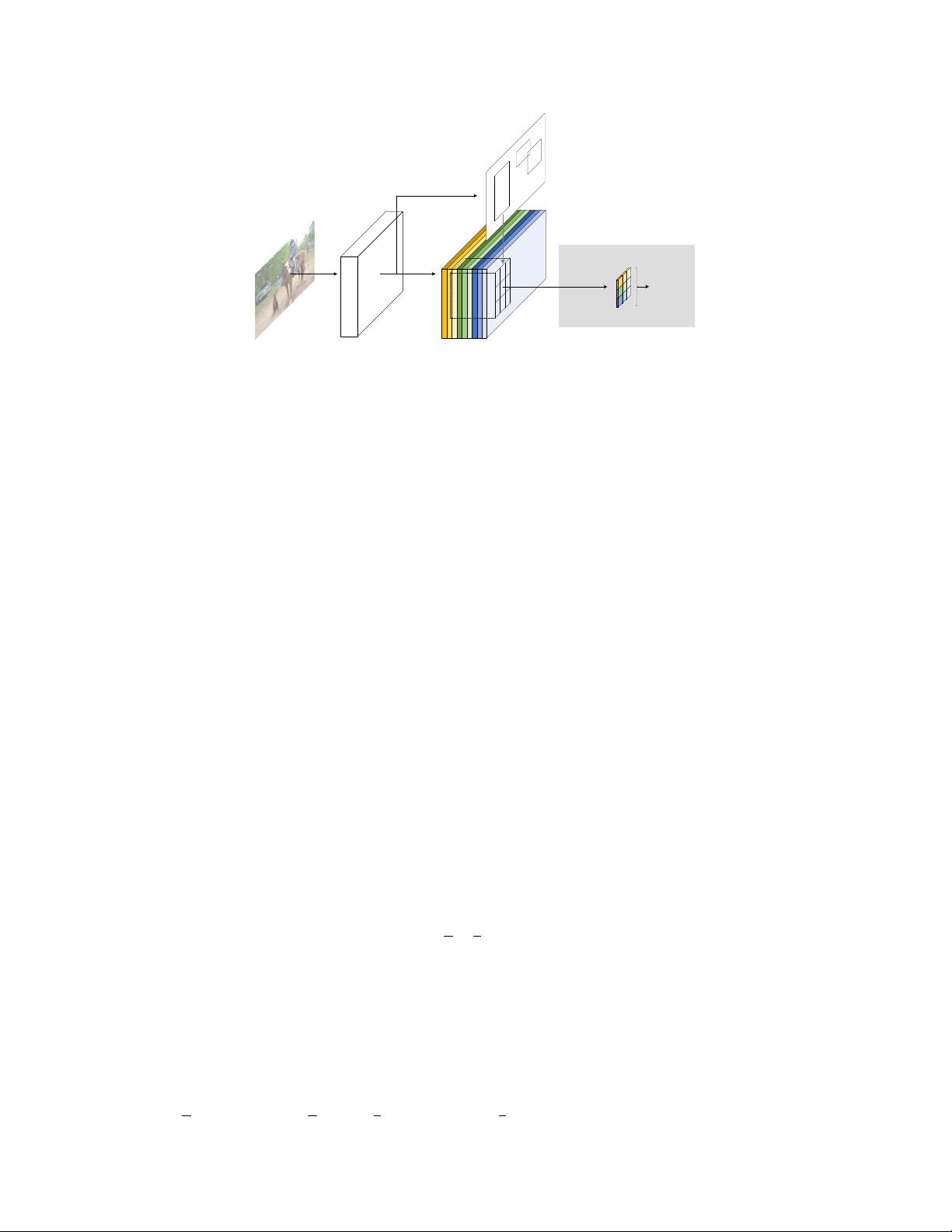

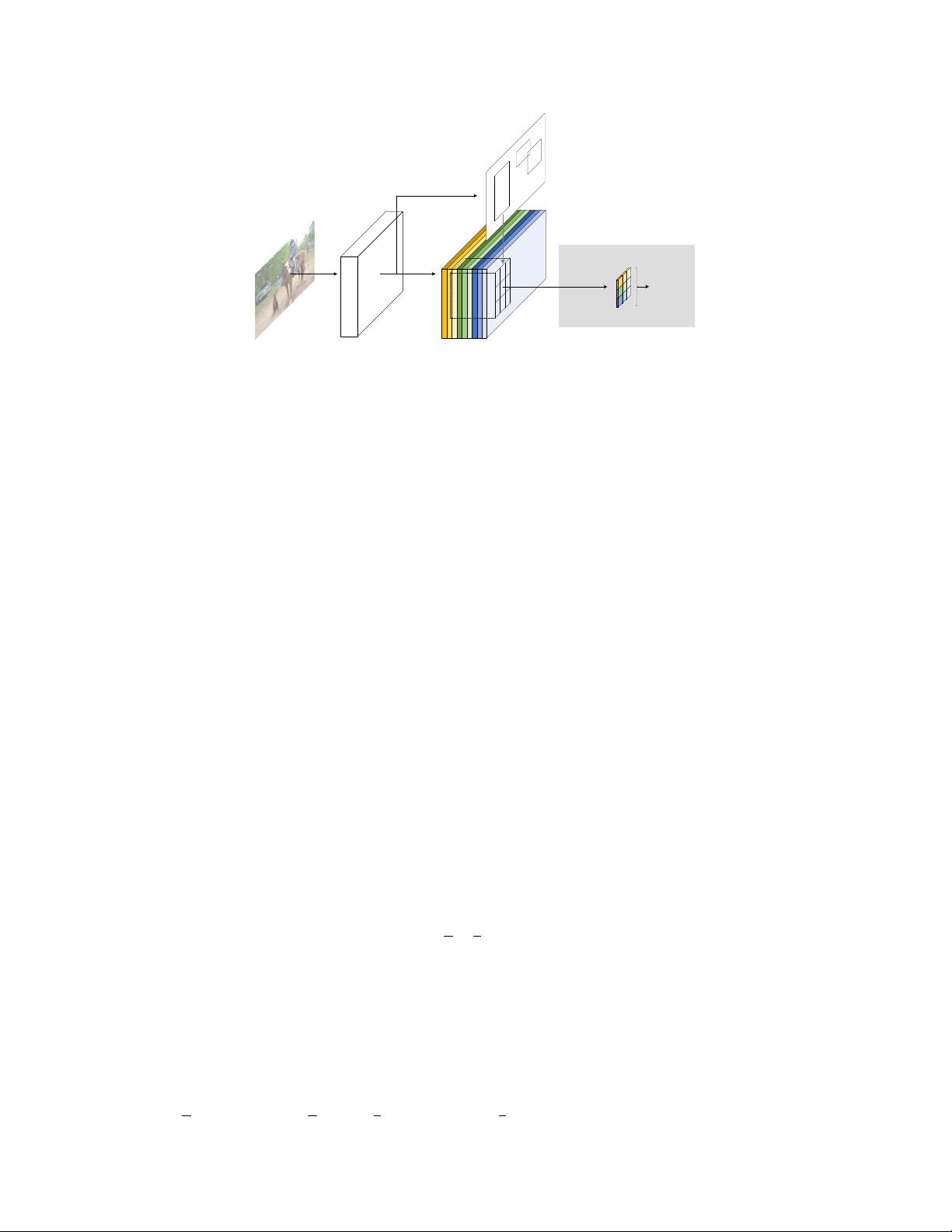

Figure 2: Overall architecture of R-FCN. A Region Proposal Network (RPN) [18] proposes candidate RoIs, which are then applied on the score maps. All learnable weight layers are convolutional and are computed on the entire image; the per-RoI computational cost is negligible.

accuracy on several benchmarks [5, 13, 20]. We extract candidate regions by the Region Proposal Network (RPN) [18], which is a fully convolutional architecture in itself. Following [18], we share the features between RPN and R-FCN. Figure 2 shows an overview of the system.

Given the proposal regions (RoIs), the R-FCN architecture is designed to classify the RoIs into object categories and background. In R-FCN, all learnable weight layers are convolutional and are computed on the entire image. The last convolutional layer produces a bank of $k^2$ position-sensitive score maps for each category, and thus has a $k^2(C+1)$-channel output layer with $C$ object categories (+1 for background). The bank of $k^2$ score maps corresponds to a $k \times k$ spatial grid describing relative positions. For example, with $k \times k = 3 \times 3$, the 9 score maps encode the cases of {top-left, top-center, top-right, ..., bottom-right} of an object category.
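The channel bookkeeping described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names and the category-major channel ordering are assumptions made here, since the text only fixes the total of $k^2(C+1)$ channels.

```python
def num_score_channels(k, num_classes):
    """Channels in the last conv layer: k^2 score maps for each of C + 1 categories."""
    return k * k * (num_classes + 1)


def score_map_channel(i, j, c, k):
    """Channel index of the score map for bin (i, j) and category c.

    Assumed layout (not specified in the text): category-major, then the
    row-major position of the bin, with i the horizontal bin index and j
    the vertical one.
    """
    if not (0 <= i < k and 0 <= j < k):
        raise ValueError("bin indices must satisfy 0 <= i, j <= k - 1")
    return c * k * k + j * k + i
```

For instance, with $k = 3$ and a hypothetical $C = 20$ categories, `num_score_channels(3, 20)` gives 189 channels, and every triple $(i, j, c)$ maps to a distinct channel under the assumed layout.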

R-FCN ends with a position-sensitive RoI pooling layer. This layer aggregates the outputs of the last convolutional layer and generates scores for each RoI. Unlike [8, 6], our position-sensitive RoI layer conducts selective pooling, and each of the $k \times k$ bins aggregates responses from only one score map out of the bank of $k \times k$ score maps. With end-to-end training, this RoI layer shepherds the last convolutional layer to learn specialized position-sensitive score maps. Figure 1 illustrates this idea. Figures 3 and 4 visualize an example. The details are introduced as follows.

Backbone architecture.

The incarnation of R-FCN in this paper is based on ResNet-101 [9], though other networks [10, 23] are applicable. ResNet-101 has 100 convolutional layers followed by global average pooling and a 1000-class fc layer. We remove the average pooling layer and the fc layer and only use the convolutional layers to compute feature maps. We use the ResNet-101 released by the authors of [9], pre-trained on ImageNet [20]. The last convolutional block in ResNet-101 is 2048-d, and we attach a randomly initialized 1024-d $1 \times 1$ convolutional layer for reducing dimension (to be precise, this increases the depth in Table 1 by 1). Then we apply the $k^2(C+1)$-channel convolutional layer to generate score maps, as introduced next.
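To make the dimension reduction concrete, the sketch below treats a $1 \times 1$ convolution as a per-pixel linear map and lists the channel widths of the head described above. It is a toy illustration in pure Python under stated assumptions: `conv1x1` and `head_channels` are hypothetical helper names, bias terms are omitted, and the kernel size of the final score-map layer is left unspecified because the text does not state it.

```python
def conv1x1(feature_map, weights):
    """Apply a 1x1 convolution (no bias) to a feature map.

    feature_map: list of rows, each a list of pixels, each a list of C_in floats.
    weights: list of C_out filters, each a list of C_in floats.
    Returns a feature map of the same spatial size with C_out channels.
    """
    return [
        [
            [sum(w * v for w, v in zip(filt, pixel)) for filt in weights]
            for pixel in row
        ]
        for row in feature_map
    ]


def head_channels(k, num_classes, backbone_dim=2048, reduced_dim=1024):
    """Channel widths from the backbone output to the score maps."""
    return [backbone_dim, reduced_dim, k * k * (num_classes + 1)]
```

With $k = 3$ and a hypothetical $C = 20$, `head_channels(3, 20)` returns `[2048, 1024, 189]`: the 2048-d backbone output, the 1024-d reduced features, and the $k^2(C+1)$ score maps.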

Position-sensitive score maps & Position-sensitive RoI pooling.

To explicitly encode position information into each RoI, we divide each RoI rectangle into $k \times k$ bins by a regular grid. For an RoI rectangle of a size $w \times h$, a bin is of a size $\approx \frac{w}{k} \times \frac{h}{k}$ [8, 6]. In our method, the last convolutional layer is constructed to produce $k^2$ score maps for each category. Inside the $(i, j)$-th bin ($0 \le i, j \le k - 1$), we define a position-sensitive RoI pooling operation that pools only over the $(i, j)$-th score map:

$$r_c(i, j \mid \Theta) = \sum_{(x, y) \in \text{bin}(i, j)} z_{i,j,c}(x + x_0, y + y_0 \mid \Theta) / n. \tag{1}$$
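As a rough sketch of Eqn. (1), the pure-Python code below average-pools each bin from its own score map for a single category. It is an illustration under assumptions, not the authors' implementation: the nesting `score_maps[j][i]`, the tuple `roi = (x0, y0, w, h)` given in score-map pixels, and the helper names are all choices made here. Bin extents use a floor on the lower bound and a ceiling on the upper bound, following the span stated in the text.

```python
import math


def bin_range(index, length, k):
    """Half-open pixel range [floor(index * length / k), ceil((index + 1) * length / k))."""
    start = math.floor(index * length / k)
    end = math.ceil((index + 1) * length / k)
    return start, end


def ps_roi_pool(score_maps, roi, k):
    """Position-sensitive RoI pooling for one category, following Eqn. (1).

    score_maps[j][i] is the 2-D score map for bin (i, j), indexed [y][x];
    this nesting is an illustrative assumption.
    roi = (x0, y0, w, h), assumed to lie fully inside the score maps.
    Returns a k x k grid r with r[j][i] holding the pooled response of bin (i, j).
    """
    x0, y0, w, h = roi
    pooled = [[0.0] * k for _ in range(k)]
    for j in range(k):
        y_start, y_end = bin_range(j, h, k)
        for i in range(k):
            x_start, x_end = bin_range(i, w, k)
            z = score_maps[j][i]  # only the (i, j)-th map is read for this bin
            total = 0.0
            n = 0
            for y in range(y_start, y_end):
                for x in range(x_start, x_end):
                    total += z[y + y0][x + x0]
                    n += 1
            pooled[j][i] = total / n if n else 0.0
    return pooled
```

Note that with the floor and ceiling bounds, neighbouring bins can share a row or column of pixels when $w$ or $h$ is not divisible by $k$; for example, `bin_range(0, 7, 3)` and `bin_range(1, 7, 3)` both include pixel 2.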

Here $r_c(i, j)$ is the pooled response in the $(i, j)$-th bin for the $c$-th category, $z_{i,j,c}$ is one score map out of the $k^2(C+1)$ score maps, $(x_0, y_0)$ denotes the top-left corner of an RoI, $n$ is the number of pixels in the bin, and $\Theta$ denotes all learnable parameters of the network. The $(i, j)$-th bin spans $\lfloor i \frac{w}{k} \rfloor \le x < \lceil (i + 1) \frac{w}{k} \rceil$ and $\lfloor j \frac{h}{k} \rfloor \le y < \lceil (j + 1) \frac{h}{k} \rceil$. The operation of Eqn.(1) is illustrated in
