8*8+1

M/8

N/8

8*8+1

8*8

M/8

N/8

M/8

N/8

Input Image

MagicPoint

Heatmap

M

N

M

N

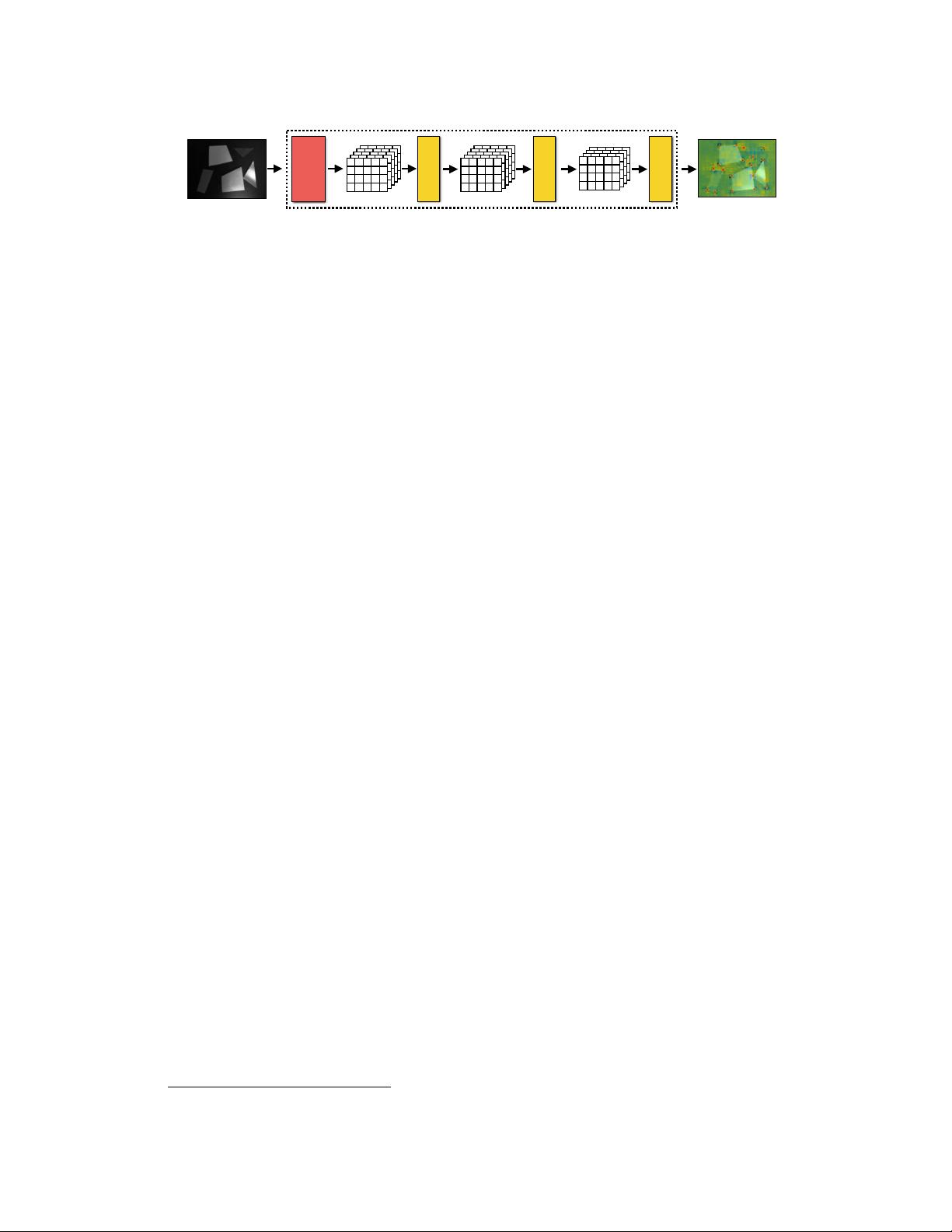

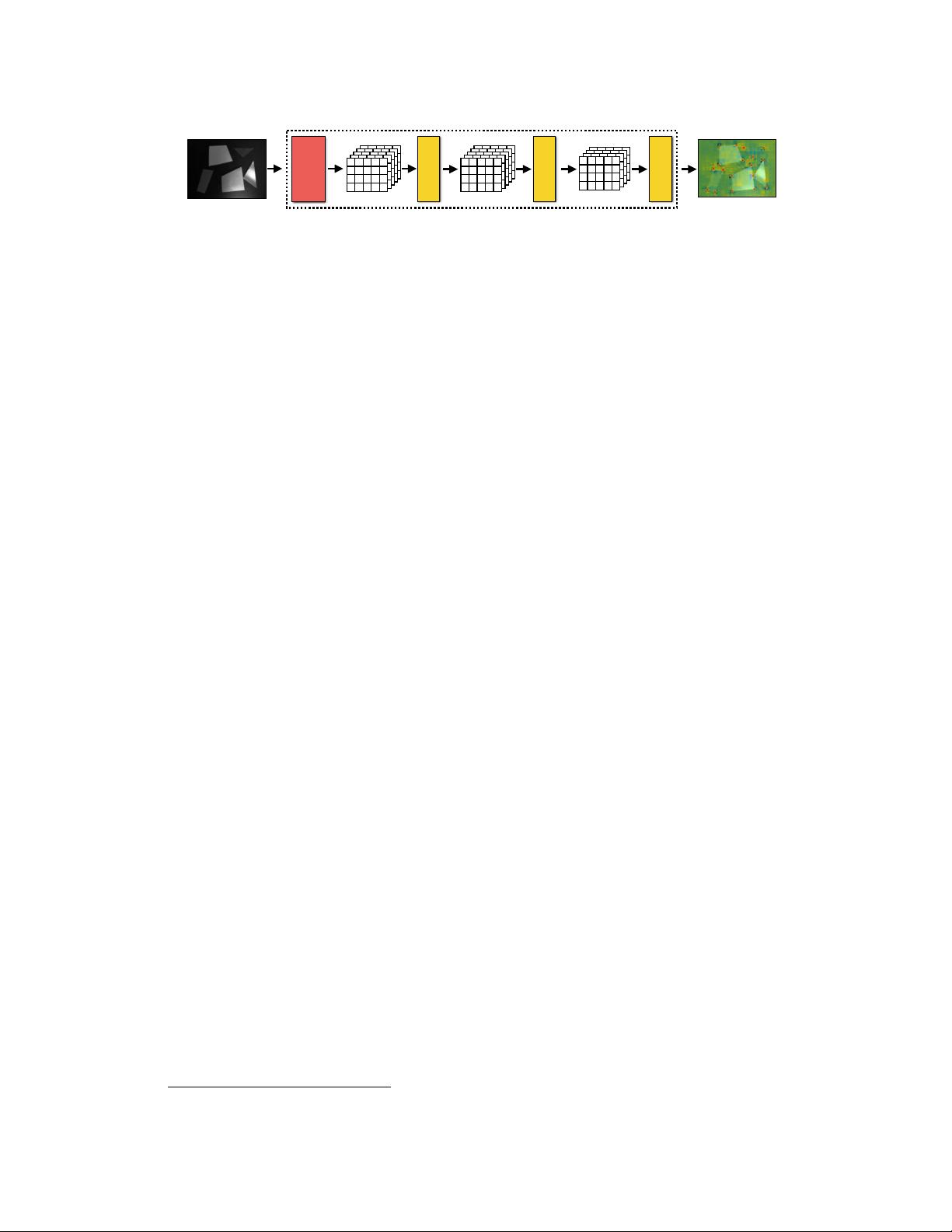

Figure 2: MagicPoint architecture. The MagicPoint network operates on grayscale images and outputs a “point-ness” probability for each pixel. We use a VGG-style encoder combined with an explicit decoder. Each spatial location in the final 15x20x65 tensor represents a probability distribution over a local 8x8 region plus a single dustbin channel which represents no point being detected (8 ∗ 8 + 1 = 65). The network is trained using a standard cross entropy loss, using point supervision from the 2D shape renderer (see examples in Figure 3).
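To make the caption's training setup concrete, here is a minimal NumPy sketch of how rendered point labels could be turned into per-cell classification targets and scored with a cross-entropy loss. It assumes that channel k of a cell encodes the pixel at row k // 8, column k % 8 inside that cell, that the dustbin is channel 64, and that when two labeled points share a cell the later one overwrites the earlier one; the function names are hypothetical and not taken from the authors' code.

```python
import numpy as np

CELL = 8                   # each cell covers an 8x8 pixel region
DUSTBIN = CELL * CELL      # assumed class index 64 = "no point in this cell"

def labels_to_cell_targets(points, height=120, width=160):
    """Map (row, col) point labels to one class index in [0, 64] per 8x8 cell.

    Assumption: if several points fall in the same cell, the last one wins.
    """
    hc, wc = height // CELL, width // CELL
    target = np.full((hc, wc), DUSTBIN, dtype=np.int64)
    for r, c in points:
        # Position inside the cell, flattened row-major into 0..63.
        target[r // CELL, c // CELL] = (r % CELL) * CELL + (c % CELL)
    return target

def cell_cross_entropy(logits, target):
    """Mean cross entropy over all cells; logits has shape (hc, wc, 65)."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    picked = np.take_along_axis(log_probs, target[..., None], axis=-1)[..., 0]
    return -picked.mean()
```

For example, labels at pixels (0, 0) and (9, 17) give class 0 in cell (0, 0) and class 9 in cell (1, 2), while the other 298 of the 300 cells receive the dustbin class; all-zero logits then yield a loss of log 65.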

3 Deep Point-Based Tracking Overview

The general architecture of our Deep Point-Based Tracking system is shown in Figure 1. There are two convolutional neural networks that perform the majority of computation in the tracking system: MagicPoint and MagicWarp. We discuss these two models in detail below.

3.1 MagicPoint Overview

MagicPoint Motivation. The first step in most sparse SLAM pipelines is to detect stable 2D interest point locations in the image. This step is traditionally performed by computing corner-like gradient response maps, such as the second moment matrix [15] or the difference of Gaussians [16], and detecting local maxima. The process is typically repeated at several image scales. Additional steps may be performed to distribute detections evenly throughout the image, such as requiring a minimum number of corners within each image cell [6]. This process typically requires substantial domain expertise and hand engineering, which limits generalization and robustness. Ideally, interest points should still be detected under high sensor noise and in low light. Lastly, each detected point should come with a confidence score that can be used to reject spurious points and up-weight confident points later in the SLAM pipeline.
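For comparison with the learned approach, the classical recipe described above (a second-moment-matrix response followed by local-maximum detection) can be sketched in NumPy roughly as follows. This is a single-scale Harris-style detector that uses a box window in place of the usual Gaussian weighting; the constant k = 0.04, the 3x3 window, and the helper names are illustrative assumptions, and the multi-scale and per-cell distribution steps are omitted.

```python
import numpy as np

def box_filter(img, radius=1):
    """Mean over a (2r+1)x(2r+1) window, with edges padded by replication."""
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img, dtype=np.float64)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / size**2

def harris_response(img, k=0.04, radius=1):
    """Corner response det(M) - k * trace(M)^2 of the windowed second moment matrix M."""
    gy, gx = np.gradient(img.astype(np.float64))  # derivatives along rows, then columns
    ixx = box_filter(gx * gx, radius)
    iyy = box_filter(gy * gy, radius)
    ixy = box_filter(gx * gy, radius)
    return ixx * iyy - ixy**2 - k * (ixx + iyy) ** 2

def local_maxima(resp, threshold):
    """(row, col) of pixels above threshold that strictly exceed all 8 neighbors."""
    padded = np.pad(resp, 1, mode="constant", constant_values=-np.inf)
    h, w = resp.shape
    is_max = resp > threshold
    for dy in range(3):
        for dx in range(3):
            if dy == 1 and dx == 1:
                continue  # skip the center pixel itself
            is_max &= resp > padded[dy:dy + h, dx:dx + w]
    return [(int(r), int(c)) for r, c in zip(*np.nonzero(is_max))]
```

On a synthetic 40x40 image containing a bright square spanning rows and columns 10 to 29, the positive local maxima fall on the four square corners, (10, 10), (10, 29), (29, 10) and (29, 29), while pixels along the straight edges get negative responses and are rejected. Every constant here (k, window size, threshold) is the kind of hand-tuned choice the paragraph above refers to.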

MagicPoint Architecture. We designed a custom convolutional network architecture and training data pipeline to help meet the above criteria. Ultimately, we want to map an image I to a point response image P of the same resolution, where each pixel of the output corresponds to the probability of “corner-ness” for that pixel in the input. The standard network design for dense prediction involves an encoder-decoder pair, where the spatial resolution is decreased via pooling or strided convolution and then upsampled back to full resolution via upconvolution operations, as in [17]. Unfortunately, upsampling layers tend to add a large amount of computation, so we designed MagicPoint with an explicit decoder¹ to reduce the computation of the model. The convolutional neural network uses a VGG-style encoder to reduce the dimensionality of the image from 120x160 to a 15x20 cell grid, with 65 channels for each spatial position. In our experiments we chose the QQVGA resolution of 120x160 to keep the computation small. The 65 channels correspond to local, non-overlapping 8x8 grid regions of pixels plus an extra dustbin channel, which corresponds to no point being detected in that 8x8 region. The network is fully convolutional, using 3x3 convolutions followed by Batch Normalization and ReLU non-linearities. The final layer is a 1x1 convolution; more details are shown in Figure 2.
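The parameter-free decoder can be sketched in a few lines of NumPy: a softmax over the 65 channels of each cell, removal of the dustbin, and a depth-to-space rearrangement of the remaining 64 channels into 8x8 pixel blocks. The channel layout (dustbin last, channel k mapping to row k // 8 and column k % 8 within its cell) and the function name are assumptions for illustration, not details stated in the text.

```python
import numpy as np

CELL = 8  # each output cell covers an 8x8 pixel region

def decode_heatmap(logits):
    """Turn a (H/8, W/8, 65) logit tensor into an (H, W) point probability map.

    Softmax over the 65 channels, drop the dustbin (assumed to be the last
    channel), then rearrange the 64 remaining channels into 8x8 pixel blocks
    ("depth to space"). No learned parameters are involved.
    """
    hc, wc, depth = logits.shape
    if depth != CELL * CELL + 1:
        raise ValueError(f"expected {CELL * CELL + 1} channels, got {depth}")
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=-1, keepdims=True)
    # Channel k -> (row k // 8, col k % 8) inside its cell.
    cells = probs[..., :-1].reshape(hc, wc, CELL, CELL)
    # (cell_row, cell_col, dy, dx) -> (cell_row, dy, cell_col, dx) -> full resolution.
    return cells.transpose(0, 2, 1, 3).reshape(hc * CELL, wc * CELL)
```

With 120x160 inputs the encoder output is 15x20x65 (120 / 8 = 15, 160 / 8 = 20), so the decoded heatmap returns to 120x160. Because the dustbin can absorb most of a cell's probability mass, a cell containing no point can output near-zero values at all 64 of its pixels.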

MagicPoint Training. What parts of an image are interest points? Computer vision and SLAM researchers typically define them as uniquely identifiable locations in the image that are stable across a variety of viewpoint, illumination, and image noise variations. Ultimately, when used as a preprocessing step for a sparse SLAM system, the detector must find points that work well for that particular SLAM system. Designing point detection algorithms and choosing their hyperparameters requires expert, domain-specific knowledge, which is why no single point extraction algorithm has yet come to dominate across SLAM systems.

No large database of images labeled with interest points exists today. To avoid an expensive data collection effort, we designed a simple renderer based on available OpenCV [19] functions. We render simple geometric shapes such as triangles, quadrilaterals, stars, lines, checkerboards, 3D

¹ Our decoder has no parameters and is known as “sub-pixel convolution” [18], or “depth to space” in TensorFlow.
